hkust-aerial-robotics / vins-fusion

An optimization-based multi-sensor state estimator

License: GNU General Public License v3.0

From my experience with VINS-Mono, hardware-synchronized sensors are needed. With VINS-Fusion I now face the same drift problem as before. Do you have any hardware suggestions? I use a ZED stereo camera and an MPU9250, which are not well synchronized.

```cpp
bool PoseLocalParameterization::ComputeJacobian(const double *x, double *jacobian) const
{
    Eigen::Map<Eigen::Matrix<double, 7, 6, Eigen::RowMajor>> j(jacobian);
    j.topRows<6>().setIdentity();
    j.bottomRows<1>().setZero();

    return true;
}
```

In the code, the quaternion Jacobian with respect to the delta angle is [1, 0, 0; 0, 1, 0; 0, 0, 1; 0, 0, 0], but I think this is not correct.

It should be 0.5 * L(q) * [1, 0, 0; 0, 1, 0; 0, 0, 1; 0, 0, 0].

Is that right?
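The formula proposed in this question can be checked numerically. The sketch below assumes a Hamilton quaternion stored as (x, y, z, w) and a right perturbation q ⊗ δq(δθ); all function names are hypothetical and none of this is taken from the VINS-Fusion source. It compares 0.5 * L(q) * [I; 0] against central finite differences. Note that this only confirms the derivative of the quaternion itself. If the residual blocks already return Jacobians with respect to the local increment (padded with a zero column), an identity-like `ComputeJacobian` would leave them unchanged when Ceres multiplies the two; whether that is the intent here is an assumption, not something this thread confirms.

```cpp
#include <algorithm>
#include <array>
#include <cmath>

using Quat = std::array<double, 4>;                  // stored as (x, y, z, w)
using Vec3 = std::array<double, 3>;
using Jac43 = std::array<std::array<double, 3>, 4>;  // d(q * dq) / d(dtheta)

// Hamilton product a * b.
Quat multiply(const Quat& a, const Quat& b) {
    return {a[3] * b[0] + a[0] * b[3] + a[1] * b[2] - a[2] * b[1],
            a[3] * b[1] - a[0] * b[2] + a[1] * b[3] + a[2] * b[0],
            a[3] * b[2] + a[0] * b[1] - a[1] * b[0] + a[2] * b[3],
            a[3] * b[3] - a[0] * b[0] - a[1] * b[1] - a[2] * b[2]};
}

// Exact exponential map from a rotation vector to a unit quaternion.
Quat deltaQ(const Vec3& theta) {
    const double angle = std::sqrt(theta[0] * theta[0] + theta[1] * theta[1] +
                                   theta[2] * theta[2]);
    if (angle < 1e-12) return {0.5 * theta[0], 0.5 * theta[1], 0.5 * theta[2], 1.0};
    const double s = std::sin(0.5 * angle) / angle;
    return {s * theta[0], s * theta[1], s * theta[2], std::cos(0.5 * angle)};
}

// 0.5 * L(q) * [I; 0]: the first three columns of the left-product matrix.
Jac43 analyticJacobian(const Quat& q) {
    const double x = q[0], y = q[1], z = q[2], w = q[3];
    Jac43 J{};
    J[0] = {0.5 * w, -0.5 * z, 0.5 * y};
    J[1] = {0.5 * z, 0.5 * w, -0.5 * x};
    J[2] = {-0.5 * y, 0.5 * x, 0.5 * w};
    J[3] = {-0.5 * x, -0.5 * y, -0.5 * z};
    return J;
}

// Largest absolute difference between the analytic and numeric Jacobians.
double maxJacobianError(const Quat& q, double eps = 1e-6) {
    const Jac43 J = analyticJacobian(q);
    double max_err = 0.0;
    for (int col = 0; col < 3; ++col) {
        Vec3 plus{0.0, 0.0, 0.0}, minus{0.0, 0.0, 0.0};
        plus[col] = eps;
        minus[col] = -eps;
        const Quat qp = multiply(q, deltaQ(plus));
        const Quat qm = multiply(q, deltaQ(minus));
        for (int row = 0; row < 4; ++row) {
            const double numeric = (qp[row] - qm[row]) / (2.0 * eps);
            max_err = std::max(max_err, std::fabs(numeric - J[row][col]));
        }
    }
    return max_err;
}
```

Calling `maxJacobianError` on a unit quaternion should return a value close to zero if the formula holds under these conventions.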

Why not fuse wheel odometry?

I've noticed that there is a mynteye configuration in the config folder. Has anyone tested the D435i?

    body_T_cam0: !!opencv-matrix
       rows: 4
       cols: 4
       dt: d
       data: [ 4.2812441490024389e-03, -9.9997001507473682e-01, -6.4528985710044385e-03, 5.2583356071589790e-05,
               9.9996900935734523e-01, 4.2384270612576547e-03, 6.6344601088757426e-03, -4.2174706544162562e-02,
               -6.6069110351583190e-03, -6.4811023350536514e-03, 9.9995717110239080e-01, 1.9238715201769417e-02,
               0., 0., 0., 1. ]

    body_T_cam1: !!opencv-matrix
       rows: 4
       cols: 4
       dt: d
       data: [ 1.6525177162010074e-03, -9.9998509853672479e-01, -5.2030654132755290e-03, -2.9251875934051923e-03,
               9.9994935393851081e-01, 1.6007594247438028e-03, 9.9361525360214843e-03, 7.4379061731550719e-02,
               -9.9276756168115085e-03, -5.2192215666013279e-03, 9.9993709851324453e-01, 1.9302672329335892e-02,
               0., 0., 0., 1. ]

I want to run this project in Gazebo, for example. How should I configure the parameters?

Hello!

We are running your state estimator on a public agricultural dataset recorded by us (you can check it out here: http://www.cifasis-conicet.gov.ar/robot/doku.php). Some of the results we got are shown in this screenshot:

The VINS-Fusion estimate always overshoots the ground truth, which makes us think there could be an error in the configuration files:

cam0_pinhole_sequence01.txt

cam1_pinhole_sequence01.txt

zavalla_stereo_imu_config_sequence01.txt

Calibrations were obtained with Kalibr, and other SLAM systems (SPTAM, ORB_SLAM2, MSCKF_VIO) were tested with them on this dataset without problems. The IMU parameters are quite big, but with the "real ones" VINS-Fusion diverges right from the beginning.

One thing we know could be wrong is the synchronization between sensors. However, we would expect the whole system to crash from the start if that were the main problem (this actually happened with the "real ones" IMU parameters, but we imagine something else could be wrong).

Is there any recommendation you could give us? Do you have any idea of what could be happening?

Thanks in advance.

Román.

Hi, I am trying to use the Optor visual-inertial camera with stereo VINS-Fusion. However, the estimate drifts and shakes badly. How should I configure the system to match this sensor?

Thanks.

I have some ZED stereo data in .svo format. Can VINS handle .svo data directly, or do I need to convert it to another format?

I have tried VINS-Fusion on the KITTI dataset in stereo mode without IMU, and I find that the resulting path is not processed by the loop_fusion node even though I have started it.

I have run it like this:

roslaunch vins vins_rviz.launch

rosrun loop_fusion loop_fusion_node /localhome/proprak8/catkin_ws/src/VINS-Fusion/config/kitti_odom/kitti_config00-02.yaml

rosrun vins kitti_odom_test /localhome/proprak8/catkin_ws/src/VINS-Fusion/config/kitti_odom/kitti_config00-02.yaml /localhome/proprak8/Dataset/dataset/sequences/00/

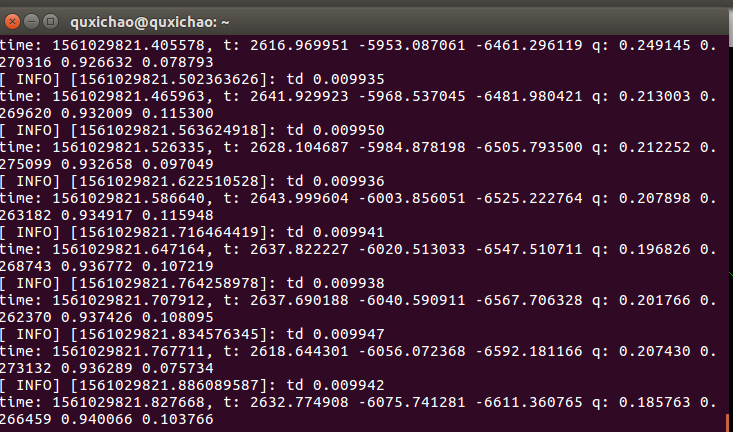

Hello, I recently used D435i to collect data for performance testing.

The rs_camera.launch is:

```xml
  <arg name="enable_pointcloud" value="$(arg enable_pointcloud)"/>
  <arg name="pointcloud_texture_stream" value="$(arg pointcloud_texture_stream)"/>
  <arg name="pointcloud_texture_index" value="$(arg pointcloud_texture_index)"/>
  <arg name="enable_sync" value="$(arg enable_sync)"/>
  <arg name="align_depth" value="$(arg align_depth)"/>
  <arg name="fisheye_width" value="$(arg fisheye_width)"/>
  <arg name="fisheye_height" value="$(arg fisheye_height)"/>
  <arg name="enable_fisheye" value="$(arg enable_fisheye)"/>
  <arg name="depth_width" value="$(arg depth_width)"/>
  <arg name="depth_height" value="$(arg depth_height)"/>
  <arg name="enable_depth" value="$(arg enable_depth)"/>
  <arg name="color_width" value="$(arg color_width)"/>
  <arg name="color_height" value="$(arg color_height)"/>
  <arg name="enable_color" value="$(arg enable_color)"/>
  <arg name="infra_width" value="$(arg infra_width)"/>
  <arg name="infra_height" value="$(arg infra_height)"/>
  <arg name="enable_infra1" value="$(arg enable_infra1)"/>
  <arg name="enable_infra2" value="$(arg enable_infra2)"/>
  <arg name="fisheye_fps" value="$(arg fisheye_fps)"/>
  <arg name="depth_fps" value="$(arg depth_fps)"/>
  <arg name="infra_fps" value="$(arg infra_fps)"/>
  <arg name="color_fps" value="$(arg color_fps)"/>
  <arg name="gyro_fps" value="$(arg gyro_fps)"/>
  <arg name="accel_fps" value="$(arg accel_fps)"/>
  <arg name="enable_gyro" value="$(arg enable_gyro)"/>
  <arg name="enable_accel" value="$(arg enable_accel)"/>
  <arg name="filters" value="$(arg filters)"/>
  <arg name="clip_distance" value="$(arg clip_distance)"/>
  <arg name="linear_accel_cov" value="$(arg linear_accel_cov)"/>
  <arg name="initial_reset" value="$(arg initial_reset)"/>
  <arg name="unite_imu_method" value="$(arg unite_imu_method)"/>
  <arg name="topic_odom_in" value="$(arg topic_odom_in)"/>
  <arg name="calib_odom_file" value="$(arg calib_odom_file)"/>
  <arg name="publish_odom_tf" value="$(arg publish_odom_tf)"/>
</include>
```

The realsense_stereo_imu_config.yaml is:

```yaml
%YAML:1.0

#common parameters
#support: 1 imu 1 cam; 1 imu 2 cam: 2 cam;
imu: 1
num_of_cam: 2

imu_topic: "/camera/imu"
image0_topic: "/camera/infra1/image_rect_raw"
image1_topic: "/camera/infra2/image_rect_raw"
output_path: "/home/quxichao/catkin-ws/output/"

cam0_calib: "left.yaml"
cam1_calib: "right.yaml"
image_width: 640
image_height: 480

estimate_extrinsic: 1   # 0  Have an accurate extrinsic parameters. We will trust the following imu^R_cam, imu^T_cam, don't change it.
                        # 1  Have an initial guess about extrinsic parameters. We will optimize around your initial guess.

body_T_cam0: !!opencv-matrix
   rows: 4
   cols: 4
   dt: d
   data: [ -5.7586305857286746e-03, -4.0463318787729019e-03, 9.9997523237933461e-01, 2.0329267950355900e-02,
           -9.9998287214160420e-01, -1.0224590553211677e-03, -5.7628118925283633e-03, 7.9325209639615653e-03,
           1.0457519809151661e-03, -9.9999129084997906e-01, -4.0403746097850135e-03, 2.8559824645148020e-03,
           0., 0., 0., 1. ]

body_T_cam1: !!opencv-matrix
   rows: 4
   cols: 4
   dt: d
   data: [ -1.0021770212322867e-03, 3.6313480322730518e-04, 9.9999943188700535e-01, 1.5285779565991807e-02,
           -9.9999216342926500e-01, -3.8303422615924010e-03, -1.0007788055728661e-03, -5.2435791444330505e-02,
           3.8299766679101843e-03, -9.9999259827824449e-01, 3.6697063849344680e-04, 8.6931302450199057e-03,
           0., 0., 0., 1. ]

#Multiple thread support
multiple_thread: 1

#feature tracker parameters
max_cnt: 150            # max feature number in feature tracking
min_dist: 30            # min distance between two features
freq: 10                # frequency (Hz) of publish tracking result. At least 10Hz for good estimation. If set 0, the frequency will be same as raw image
F_threshold: 1.0        # ransac threshold (pixel)
show_track: 0           # publish tracking image as topic
flow_back: 1            # perform forward and backward optical flow to improve feature tracking accuracy

#optimization parameters
max_solver_time: 0.04   # max solver iteration time (ms), to guarantee real time
max_num_iterations: 8   # max solver iterations, to guarantee real time
keyframe_parallax: 10.0 # keyframe selection threshold (pixel)

#imu parameters       The more accurate parameters you provide, the better performance
acc_n: 0.1          # accelerometer measurement noise standard deviation. #0.2   0.04
gyr_n: 0.01         # gyroscope measurement noise standard deviation.     #0.05  0.004
acc_w: 0.001        # accelerometer bias random walk noise standard deviation.  #0.002
gyr_w: 0.0001       # gyroscope bias random walk noise standard deviation.      #4.0e-5
g_norm: 9.805       # gravity magnitude

#unsynchronization parameters
estimate_td: 1      # online estimate time offset between camera and imu
td: 0.00            # initial value of time offset. unit: s. read image clock + td = real image clock (IMU clock)

#loop closure parameters
load_previous_pose_graph: 0        # load and reuse previous pose graph; load from 'pose_graph_save_path'
pose_graph_save_path: "/home/quxichao/catkin-ws/output/pose_graph/" # save and load path
save_image: 0                   # save image in pose graph for visualization purpose; you can close this function by setting 0
```

When I run the code, there is no track_image and the estimated position drifts.
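The `td` comment in the config above states the convention "image clock + td = real image clock (IMU clock)". A minimal sketch of what that convention implies when pairing IMU samples with an image interval is given below. The function names are hypothetical and the half-open interval rule is an assumption made for illustration, not a description of the estimator's actual buffering.

```cpp
#include <vector>

// Shift an image timestamp into the IMU clock using the offset td (seconds).
double imageTimeInImuClock(double image_stamp, double td) {
    return image_stamp + td;
}

// Return the IMU timestamps that fall between two consecutive images once
// both image stamps have been shifted by td. The interval is (prev, cur].
std::vector<double> imuStampsBetween(const std::vector<double>& imu_stamps,
                                     double prev_image_stamp,
                                     double cur_image_stamp,
                                     double td) {
    const double t0 = imageTimeInImuClock(prev_image_stamp, td);
    const double t1 = imageTimeInImuClock(cur_image_stamp, td);
    std::vector<double> selected;
    for (double t : imu_stamps) {
        if (t > t0 && t <= t1) selected.push_back(t);
    }
    return selected;
}
```

With a wrong `td`, the selected IMU samples belong to a shifted time window, which is one way poorly synchronized sensors can show up as drift.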

I tested VINS-Fusion with my own device (global shutter camera + IMU + GPS (RTK), mounted together but not hardware-synchronized) and set estimate_td to 1 to estimate the time offset between camera and IMU online.

It seems that the global optimization thread doesn't work properly. Most of the time the globally optimized path overlaps exactly with the VIO odometry path, while the GPS (RTK) path does not overlap with either of them.

Sometimes the globally optimized path jumps a short distance and then runs off very fast, while the GPS and VIO paths still look fine.

I could send my rosbag to you if you are interested.

My devices are a MYNTEYE-D and a TX2. When running VINS-Fusion in stereo + IMU mode, the drift is very large, but in stereo-only mode it is very stable. Have you encountered this problem?

Hi, there seems to be an error in the calculation of the Jacobian matrix in the projection factor.

VINS-Fusion/vins_estimator/src/factor/projection_factor.cpp

line 89: jaco_i.rightCols<3>() = ric.transpose() * Rj.transpose() * Ri * -Utility::skewSymmetric(pts_imu_i);

This sub-block should be the Jacobian of the residual with respect to the vector part of the quaternion.

However, the formula above actually treats the vector part of the quaternion as if it were a rotation vector.

I tried the car demo and it looked nice. In rviz it appeared that only the camera was moving w.r.t. the world frame.

I am wondering how to get the visualization result as in this figure, i.e., with the camera/robot as the fixed reference frame and the world "moving" w.r.t. the static camera. How should rviz be configured? Or are other modifications needed?

I tried to change rviz panel Displays->Global Options->Fixed Frame from "world" to "camera". I could get the result that camera was fixed in the middle while the trajectory was moving. However the PointCloud and HistoryPointCloud were not shown in the right way. They were moving with the camera, not static w.r.t. the world frame.

Thank you!
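For the clouds to stay static relative to the world while the camera is the fixed frame, each world-frame point has to be re-expressed in the camera frame with the inverse of the current camera pose, p_c = R_wc^T (p_w - t_wc). The sketch below shows only that transform; the type and function names are hypothetical, and in rviz this step is normally done through tf based on each message's frame_id rather than by hand.

```cpp
#include <array>

using Vec3 = std::array<double, 3>;
using Mat3 = std::array<std::array<double, 3>, 3>;  // row-major rotation

// Express a world-frame point in the camera frame.
// R_wc and t_wc are the camera orientation and position in the world frame.
Vec3 worldToCamera(const Mat3& R_wc, const Vec3& t_wc, const Vec3& p_w) {
    const Vec3 d{p_w[0] - t_wc[0], p_w[1] - t_wc[1], p_w[2] - t_wc[2]};
    Vec3 p_c{0.0, 0.0, 0.0};
    for (int r = 0; r < 3; ++r) {
        for (int c = 0; c < 3; ++c) {
            p_c[r] += R_wc[c][r] * d[c];  // multiply by the transpose of R_wc
        }
    }
    return p_c;
}
```

If a point cloud keeps its coordinates while the camera pose changes, it will appear glued to the camera, which matches the behaviour described above.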

Thank you for your great work. I can run stereo VINS on the KITTI dataset perfectly, but when I run it on my own dataset, the trajectory is bad.

roslaunch vins vins_rviz.launch

rosrun vins kitti_odom_test ~/catkin_ws/src/VINS-Fusion/config/kitti_odom/my_kitti.yaml My_DATASET_FOLDER/sequences/seq25/

The dataset is from a parking lot.

I have already changed the camera calibration file, and the timestamps use the same unit as KITTI. Besides, I can see that the features are tracked well.

So I am wondering what could cause this issue. Also, I cannot find a parameter for the stereo camera baseline in the settings file. Where is it?
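The config files quoted in this thread contain no explicit baseline field; the stereo geometry appears to be carried by `body_T_cam0` and `body_T_cam1` instead. Under that assumption, the baseline is the distance between the two translation columns. The sketch below takes the 16 row-major values of each matrix as they appear in the `data:` arrays; the function name is hypothetical.

```cpp
#include <array>
#include <cmath>

using Mat4RowMajor = std::array<double, 16>;  // same order as the YAML "data" field

// Distance between the two camera centres expressed in the body frame.
// Elements 3, 7 and 11 are the translation column of a row-major 4x4 pose.
double stereoBaseline(const Mat4RowMajor& body_T_cam0, const Mat4RowMajor& body_T_cam1) {
    const double dx = body_T_cam1[3] - body_T_cam0[3];
    const double dy = body_T_cam1[7] - body_T_cam0[7];
    const double dz = body_T_cam1[11] - body_T_cam0[11];
    return std::sqrt(dx * dx + dy * dy + dz * dz);
}
```

Applied to the first `body_T_cam0`/`body_T_cam1` pair quoted earlier on this page, this gives roughly 0.117 m. If the value computed for a custom dataset does not match the physical rig, the extrinsics are a likely place to look.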

Hi,

I tested VINS-Fusion in stereo + GPS mode with the KITTI dataset, but the results differ from the video presentation. The differences are in these aspects:

So I want to ask whether I configured something wrong, or whether there is another version that is not open-sourced.

I've been running VINS-Fusion on a standard Intel laptop, but want to try it on a Raspberry Pi 3 B+ and see what I can achieve by tuning for fewer feature tracks and lower speeds/frame rates. I've compiled it on the Pi, but when I run the same configuration as on my Intel laptop, I get the error described here: https://eigen.tuxfamily.org/dox/group__TopicUnalignedArrayAssert.html

The Eigen version installed on the laptop is 3.2.92 and the one on the Raspberry Pi is 3.3.2. The error seems to come from Estimator::processImage. Is this something that needs to be fixed according to the above link? Or is there a recommended version of Eigen that I should be using?

I have OpenCV 3.4 installed, but the build asks for the 3.2 library. Is there a workaround?

make[2]: *** No rule to make target '/usr/lib/x86_64-linux-gnu/libopencv_core.so.3.2.0', needed by '/home/george/catkin_ws/devel/lib/loop_fusion/loop_fusion_node'. Stop.

CMakeFiles/Makefile2:1439: recipe for target 'VINS-Fusion/loop_fusion/CMakeFiles/loop_fusion_node.dir/all' failed

make[1]: *** [VINS-Fusion/loop_fusion/CMakeFiles/loop_fusion_node.dir/all] Error 2

make[1]: *** Waiting for unfinished jobs....

make[2]: *** No rule to make target '/usr/lib/x86_64-linux-gnu/libopencv_core.so.3.2.0', needed by '/home/george/catkin_ws/devel/lib/libvins_lib.so'. Stop.

CMakeFiles/Makefile2:2461: recipe for target 'VINS-Fusion/vins_estimator/CMakeFiles/vins_lib.dir/all' failed

make[1]: *** [VINS-Fusion/vins_estimator/CMakeFiles/vins_lib.dir/all] Error 2

Makefile:140: recipe for target 'all' failed

make: *** [all] Error 2

Invoking "make -j4 -l4" failed

Hi,

Does VINS-Fusion work with fisheye stereo?

Thank you for your great work.

Could this code run with a monocular camera + IMU + GPS in a ROS environment?

If not, how should it be modified?

thank you very much.

Nelosn Wu

I have a catkin_make problem.

/home/gurturk/catkin_ws/src/VINS-Fusion/camera_models/src/chessboard/Chessboard.cc: In member function ‘void camodocal::Chessboard::generateQuads(std::vector<boost::shared_ptr<camodocal::ChessboardQuad> >&, cv::Mat&, int, int, bool)’:

/home/gurturk/catkin_ws/src/VINS-Fusion/camera_models/src/chessboard/Chessboard.cc:1175:50: error: ‘CV_RETR_CCOMP’ was not declared in this scope

cv::findContours(image, contours, hierarchy, CV_RETR_CCOMP, CV_CHAIN_APPROX_SIMPLE);

^~~~~~~~~~~~~

/home/gurturk/catkin_ws/src/VINS-Fusion/camera_models/src/chessboard/Chessboard.cc:1175:65: error: ‘CV_CHAIN_APPROX_SIMPLE’ was not declared in this scope

cv::findContours(image, contours, hierarchy, CV_RETR_CCOMP, CV_CHAIN_APPROX_SIMPLE);

^~~~~~~~~~~~~~~~~~~~~~

VINS-Fusion/camera_models/CMakeFiles/Calibrations.dir/build.make:110: recipe for target 'VINS-Fusion/camera_models/CMakeFiles/Calibrations.dir/src/calib/CameraCalibration.cc.o' failed

make[2]: *** [VINS-Fusion/camera_models/CMakeFiles/Calibrations.dir/src/calib/CameraCalibration.cc.o] Error 1

/home/gurturk/catkin_ws/src/VINS-Fusion/camera_models/src/chessboard/Chessboard.cc:1241:27: error: ‘CV_CALIB_CB_FILTER_QUADS’ was not declared in this scope

if (!(flags & CV_CALIB_CB_FILTER_QUADS) ||

^~~~~~~~~~~~~~~~~~~~~~~~

VINS-Fusion/camera_models/CMakeFiles/camera_models.dir/build.make:86: recipe for target 'VINS-Fusion/camera_models/CMakeFiles/camera_models.dir/src/calib/CameraCalibration.cc.o' failed

make[2]: *** [VINS-Fusion/camera_models/CMakeFiles/camera_models.dir/src/calib/CameraCalibration.cc.o] Error 1

make[2]: *** Waiting for unfinished jobs....

/home/gurturk/catkin_ws/src/VINS-Fusion/camera_models/src/chessboard/Chessboard.cc: In member function ‘bool camodocal::Chessboard::checkChessboard(const cv::Mat&, cv::Size) const’:

/home/gurturk/catkin_ws/src/VINS-Fusion/camera_models/src/chessboard/Chessboard.cc:1586:72: error: ‘CV_THRESH_BINARY’ was not declared in this scope

cv::threshold(white, thresh, threshLevel + blackWhiteGap, 255, CV_THRESH_BINARY);

^~~~~~~~~~~~~~~~

/home/gurturk/catkin_ws/src/VINS-Fusion/camera_models/src/chessboard/Chessboard.cc:1592:55: error: ‘CV_RETR_CCOMP’ was not declared in this scope

cv::findContours(thresh, contours, hierarchy, CV_RETR_CCOMP, CV_CHAIN_APPROX_SIMPLE);

^~~~~~~~~~~~~

/home/gurturk/catkin_ws/src/VINS-Fusion/camera_models/src/chessboard/Chessboard.cc:1592:70: error: ‘CV_CHAIN_APPROX_SIMPLE’ was not declared in this scope

cv::findContours(thresh, contours, hierarchy, CV_RETR_CCOMP, CV_CHAIN_APPROX_SIMPLE);

^~~~~~~~~~~~~~~~~~~~~~

/home/gurturk/catkin_ws/src/VINS-Fusion/camera_models/src/chessboard/Chessboard.cc:1597:56: error: ‘CV_THRESH_BINARY_INV’ was not declared in this scope

cv::threshold(black, thresh, threshLevel, 255, CV_THRESH_BINARY_INV);

^~~~~~~~~~~~~~~~~~~~

VINS-Fusion/camera_models/CMakeFiles/Calibrations.dir/build.make:62: recipe for target 'VINS-Fusion/camera_models/CMakeFiles/Calibrations.dir/src/intrinsic_calib.cc.o' failed

make[2]: *** [VINS-Fusion/camera_models/CMakeFiles/Calibrations.dir/src/intrinsic_calib.cc.o] Error 1

CMakeFiles/Makefile2:676: recipe for target 'VINS-Fusion/camera_models/CMakeFiles/Calibrations.dir/all' failed

make[1]: *** [VINS-Fusion/camera_models/CMakeFiles/Calibrations.dir/all] Error 2

make[1]: *** Waiting for unfinished jobs....

VINS-Fusion/camera_models/CMakeFiles/camera_models.dir/build.make:62: recipe for target 'VINS-Fusion/camera_models/CMakeFiles/camera_models.dir/src/chessboard/Chessboard.cc.o' failed

make[2]: *** [VINS-Fusion/camera_models/CMakeFiles/camera_models.dir/src/chessboard/Chessboard.cc.o] Error 1

CMakeFiles/Makefile2:447: recipe for target 'VINS-Fusion/camera_models/CMakeFiles/camera_models.dir/all' failed

make[1]: *** [VINS-Fusion/camera_models/CMakeFiles/camera_models.dir/all] Error 2

Makefile:140: recipe for target 'all' failed

make: *** [all] Error 2

Invoking "make -j8 -l8" failed

I'm confused: when I run VINS-Fusion on an Intel NUC, there is always a delay of a few seconds in the output.

Is this related to NUC devices?

Hi,

I’m excited to see this release!

I wonder if there is a way to use more than 2 cameras at the moment?

Thanks in advance!

Chang

Hi, thank you for your excellent work! I'm using a stereo camera with an IMU to run this project, and something strange happens during initialization: the pose oscillates although the vehicle is not moving at all.

Hello, thank you for sharing this great work.

I have a question about loop closure. It seems that in VINS-Fusion, relocalization is only used for 4-DoF pose graph optimization and acts as a module completely independent of the VI odometry. In VINS-Mono, however, it was used to constrain the optimization problem during tracking.

Could you explain why you decided to remove this energy term from the optimization and how it affects the final results?
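For readers unfamiliar with the term, "4-DoF" here is commonly taken to mean x, y, z and yaw, with roll and pitch left to the visual-inertial odometry because gravity makes them observable. The sketch below shows what a relative-pose residual over those four quantities could look like. It is a simplification that assumes roll and pitch are zero, uses hypothetical names, and is not the cost function from the VINS-Fusion source.

```cpp
#include <array>
#include <cmath>

struct Pose4Dof {
    double x, y, z, yaw;  // yaw in radians
};

// Wrap an angle into [-pi, pi].
double normalizeAngle(double a) {
    const double kPi = 3.14159265358979323846;
    while (a > kPi) a -= 2.0 * kPi;
    while (a < -kPi) a += 2.0 * kPi;
    return a;
}

// Residual between the relative pose implied by poses i and j and a measured
// relative pose (translation expressed in frame i, plus a yaw difference).
std::array<double, 4> fourDofResidual(const Pose4Dof& i, const Pose4Dof& j,
                                      const Pose4Dof& measured) {
    const double c = std::cos(i.yaw), s = std::sin(i.yaw);
    const double wx = j.x - i.x, wy = j.y - i.y, wz = j.z - i.z;
    // Rotate the world-frame offset into frame i with R_z(yaw_i)^T.
    const double lx = c * wx + s * wy;
    const double ly = -s * wx + c * wy;
    return {lx - measured.x, ly - measured.y, wz - measured.z,
            normalizeAngle(j.yaw - i.yaw - measured.yaw)};
}
```

A pose graph built from such residuals only adjusts position and heading, so it can run as a separate module on top of the odometry output, which is the arrangement described in the question.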

Hi, in the authors' example, the stereo + IMU version is tested on the EuRoC dataset. Can this version be used for the KITTI dataset? Will it work better than the stereo-only version?

All modes work with the Mynteye, but tracking is delayed. When I move the camera, the odometry is accurate enough, but in real-time operation the odometry output lags.

Hi, if I use another stereo camera, how can I transform cam0_pinhole.yaml to cam0_mei.yaml?

Thanks!

Tracking is very slow on the Jetson TX2. I reduced the number of features, but tracking still lags by more than 10 seconds.

Dear QingTong

RGB-D camera SLAM is very important. I want to change your VINS-Fusion to support RGB-D cameras such as Kinect2, Xion2, Orbbec, etc. I think this is achievable. Could you give some suggestions?

Why not add a localizer module? Wouldn't that make the system more complete?

How can cameras be calibrated in simulated environments like AirSim or FlightGoggles? We can access the ROS topic for camerainfo. Can we extract the required calibration parameters from it?
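In the standard ROS `sensor_msgs/CameraInfo` message, `K` is a row-major 3x3 intrinsic matrix and, for the `plumb_bob` distortion model, `D` is ordered (k1, k2, t1, t2, k3). A sketch of reading pinhole parameters from those two arrays is shown below. The struct and function names are hypothetical, the arrays are passed in directly so that the example does not depend on ROS, and mapping t1, t2 to the p1, p2 fields of the calibration files is an assumption.

```cpp
#include <array>
#include <cstddef>
#include <vector>

struct PinholeCalib {
    double fx, fy, cx, cy;  // projection parameters
    double k1, k2, p1, p2;  // radial and tangential distortion
};

// K is row-major: [fx 0 cx; 0 fy cy; 0 0 1]. D follows plumb_bob ordering.
// Missing distortion coefficients (common in simulators) are treated as zero.
PinholeCalib calibFromCameraInfo(const std::array<double, 9>& K,
                                 const std::vector<double>& D) {
    PinholeCalib c{K[0], K[4], K[2], K[5], 0.0, 0.0, 0.0, 0.0};
    auto coeff = [&D](std::size_t i) { return i < D.size() ? D[i] : 0.0; };
    c.k1 = coeff(0);
    c.k2 = coeff(1);
    c.p1 = coeff(2);
    c.p2 = coeff(3);
    return c;
}
```

The extrinsics between camera and IMU are not part of `CameraInfo` and would still have to come from the simulator's sensor placement.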

Thank you for your great work. I can run the car demonstration and the KITTI GPS fusion perfectly, but when I run it with a RealSense D435i, I get the following error.

$rosrun vins vins_node ~/catkin_ws/src/VINS-Fusion/config/realsense_d435i/realsense_stereo_imu_config.yaml

[ INFO] [1556271031.868824114]: init begins

config_file: realsense_stereo_imu_config.yaml

USE_IMU: 1

IMU_TOPIC: /camera/imu

result path /home/dji/output//vio.csv

[ WARN] [1556271031.872582533]: Optimize extrinsic param around initial guess!

camera number 2

[ INFO] [1556271031.872806431]: Unsynchronized sensors, online estimate time offset, initial td: 0

[ INFO] [1556271031.872823934]: ROW: 480 COL: 640

exitrinsic cam 0

-0.00575863 -0.00404633 0.999975

-0.999983 -0.00102246 -0.00576281

0.00104575 -0.999991 -0.00404037

0.0203293 0.00793252 0.00285598

exitrinsic cam 1

-0.00100218 0.000363135 0.999999

-0.999992 -0.00383034 -0.00100078

0.00382998 -0.999993 0.000366971

0.0152858 -0.0524358 0.00869313

set g 0 0 9.805

[ INFO] [1556271031.872958979]: reading paramerter of camera realsense_stereo_imu_config.yaml/left.yaml

[ INFO] [1556271031.873021335]: reading paramerter of camera realsense_stereo_imu_config.yaml/right.yaml

MULTIPLE_THREAD is 1

[ WARN] [1556271031.873101001]: waiting for image and imu...

vins_node: /usr/include/boost/smart_ptr/shared_ptr.hpp:648: typename boost::detail::sp_member_access<T>::type boost::shared_ptr<T>::operator->() const [with T = camodocal::Camera; typename boost::detail::sp_member_access<T>::type = camodocal::Camera*]: Assertion `px != 0' failed.

Aborted (core dumped)

The ROS nodes were launched with the following commands:

roslaunch vins vins_rviz.launch

rosrun loop_fusion loop_fusion_node ~/catkin_ws/src/VINS-Fusion/config/realsense_d435i/realsense_stereo_imu_config.yaml

rosrun vins vins_node ~/catkin_ws/src/VINS-Fusion/config/realsense_d435i/realsense_stereo_imu_config.yaml

I have checked the /camera/infra1/image_rect_raw, /camera/infra2/image_rect_raw and /camera/imu topics, and they look fine.

Any ideas about the error would be appreciated.
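The log above prints `config_file: realsense_stereo_imu_config.yaml` and then tries to read `realsense_stereo_imu_config.yaml/left.yaml`, which is not a valid path. One way such a path can arise is when the directory of the config file is derived by cutting the argument at its last `/` and the argument contains none. The sketch below reproduces that pattern and a more defensive variant. Both function names are hypothetical, and whether the node builds its paths this way is an assumption based only on the log.

```cpp
#include <cstddef>
#include <string>

// Cut at the last '/' and append the calibration file name. If there is no
// '/', find_last_of returns npos and substr(0, npos) keeps the whole string.
std::string naiveCalibPath(const std::string& config_file, const std::string& calib_name) {
    const std::size_t pn = config_file.find_last_of('/');
    const std::string config_dir = config_file.substr(0, pn);
    return config_dir + "/" + calib_name;
}

// Same idea, but fall back to the current directory for a bare file name.
std::string saferCalibPath(const std::string& config_file, const std::string& calib_name) {
    const std::size_t pn = config_file.find_last_of('/');
    const std::string config_dir =
        (pn == std::string::npos) ? std::string(".") : config_file.substr(0, pn);
    return config_dir + "/" + calib_name;
}
```

If this is what happened, the camera calibration files would fail to load, which would be consistent with the null camera pointer in the assertion. Passing the config file with its full path is a quick way to test the hypothesis.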

I have created a WeChat group for VINS-Fusion, where we can discuss all kinds of issues with VINS and other VIO SLAM systems.

If anyone wants to join, please first add the WeChat ID 31781410.

Thank you for sharing the code.

I am wondering if saving and reloading the map is still available in this version, similar to vins-mono?

Thanks

In ./config/euroc/cam0_pinhole.yaml, the intrinsics are:

    distortion_parameters:
       k1: -2.9545645106987750e-01
       k2: 8.6623215640186171e-02
       p1: 2.0132892276082517e-06
       p2: 1.3924531371276508e-05
    projection_parameters:
       fx: 4.6115862106007575e+02
       fy: 4.5975286598073296e+02
       cx: 3.6265929181685937e+02
       cy: 2.4852105668448124e+02

However, the values that the EuRoC dataset provides in the cam0 folder are:

camera_model: pinhole

intrinsics: [458.654, 457.296, 367.215, 248.375] #fu, fv, cu, cv

distortion_model: radial-tangential

distortion_coefficients: [-0.28340811, 0.07395907, 0.00019359, 1.76187114e-05]

I just wonder how you obtained your own camera calibration? Thanks in advance.

Why does the function "Estimator::failureDetection" in VINS-Fusion always return false? Does this function not work in VINS-Fusion?

When I modified the function to return true, the program stopped rather than rebooting.

I tried to run VINS-Fusion as per the instructions, but it hits an error after about 2 seconds and stops. The message is "stack smashing detected".

[ INFO] [1550620405.682573881]: Initialization finish!

time: 1403637131.588319, t: -0.000260 -0.000035 -0.000052 q: 1.000000 -0.000010 0.000556 0.000094

time: 1403637131.688319, t: -0.000228 -0.000094 0.000004 q: 1.000000 0.000311 0.000445 0.000057

time: 1403637131.788319, t: -0.000063 -0.000040 -0.000013 q: 1.000000 0.000191 -0.000131 -0.000072

time: 1403637131.888319, t: -0.000049 -0.000002 -0.000035 q: 1.000000 -0.000036 -0.000057 -0.000011

time: 1403637131.988319, t: -0.000071 -0.000014 -0.000039 q: 1.000000 0.000157 -0.000170 -0.000254

time: 1403637132.088319, t: -0.000004 0.000096 -0.000015 q: 1.000000 -0.000152 -0.000054 -0.000741

time: 1403637132.188319, t: 0.000017 0.000139 -0.000030 q: 1.000000 -0.000232 -0.000095 -0.000753

time: 1403637132.288319, t: 0.000013 0.000144 -0.000021 q: 0.999999 -0.000100 -0.000136 -0.001037

time: 1403637132.388319, t: 0.000000 -0.000021 0.000008 q: 1.000000 0.000114 0.000089 0.000028

time: 1403637132.488319, t: -0.000015 0.000106 -0.000061 q: 1.000000 -0.000116 -0.000059 -0.000776

time: 1403637132.588319, t: 0.000051 0.000258 -0.000015 q: 0.999999 -0.000300 -0.000106 -0.001571

time: 1403637132.688319, t: 0.000045 0.000088 0.000027 q: 1.000000 -0.000144 -0.000043 -0.000530

time: 1403637132.788319, t: 0.000091 0.000041 0.000068 q: 1.000000 -0.000068 0.000075 -0.000344

time: 1403637132.888319, t: 0.000178 0.000132 0.000071 q: 1.000000 -0.000209 0.000352 0.000138

time: 1403637132.988319, t: -0.000112 0.000046 0.000033 q: 0.999999 -0.000398 0.000783 0.001066

time: 1403637133.088319, t: 0.000195 -0.000014 -0.000074 q: 0.999999 -0.000514 0.000976 0.000640

time: 1403637133.188319, t: 0.001971 0.000117 -0.002725 q: 0.999996 0.000633 0.002543 0.001089

time: 1403637133.288319, t: 0.004889 0.001171 -0.004884 q: 0.999984 -0.003344 0.001760 -0.004173

*** stack smashing detected ***: /home/yuthika/vins-ws/devel/lib/vins/vins_node terminated

Aborted (core dumped)

Thank you for your great work.

My device is a MYNTEYE-D and the image size is 1280*720. When I move the device, the track image and odometry in RViz show a large delay. For example, when I hold the device and move it around, the track image and odometry need 5 to 10 seconds to respond. Do you know why?

I can't get a good initial result when I run VINS-Fusion with a multi-camera system. How does the InitialStructure part work when using a stereo camera and an IMU? Is there a paper that details that part?
