hku-mars / r2live Goto Github PK

View Code? Open in Web Editor NEWR2LIVE: A Robust, Real-time, LiDAR-Inertial-Visual tightly-coupled state Estimator and mapping package

License: GNU General Public License v2.0

R2LIVE: A Robust, Real-time, LiDAR-Inertial-Visual tightly-coupled state Estimator and mapping package

License: GNU General Public License v2.0

Good day!

I have a running environment with FAST-LIO2 using an external IMU, thanks for that!

Would be possible in the future to use an external IMU also with r2live? will be great for MID40 and MID70.

Thanks.

After following your instructions, either with livox_ros_driver (The main) or livox_ros_driver_for_R2LIVE, several CMake Errors appear

-- looking for PCL_SURFACE

-- looking for PCL_REGISTRATION

-- looking for PCL_KEYPOINTS

-- looking for PCL_TRACKING

-- looking for PCL_RECOGNITION

-- looking for PCL_STEREO

-- looking for PCL_APPS

-- looking for PCL_IN_HAND_SCANNER

-- looking for PCL_MODELER

-- looking for PCL_POINT_CLOUD_EDITOR

-- looking for PCL_OUTOFCORE

-- looking for PCL_PEOPLE

-- livox_ros_driver: 2 messages, 0 services

CMake Error at /home/ubuntu/Agroscope/Post_processing/third_party/lidar/ROS_workspace_2/build/livox_ros_driver/cmake/livox_ros_driver-genmsg.cmake:57 (add_custom_target):

add_custom_target cannot create target

"livox_ros_driver_generate_messages_cpp" because another target with the

same name already exists. The existing target is a custom target created

in source directory

"/home/ubuntu/Agroscope/Post_processing/third_party/lidar/ROS_workspace_2/src/r2live/r2live".

See documentation for policy CMP0002 for more details.

Call Stack (most recent call first):

/opt/ros/melodic/share/genmsg/cmake/genmsg-extras.cmake:307 (include)

livox_ros_driver/CMakeLists.txt:46 (generate_messages)

CMake Error at /home/ubuntu/Agroscope/Post_processing/third_party/lidar/ROS_workspace_2/build/livox_ros_driver/cmake/livox_ros_driver-genmsg.cmake:98 (add_custom_target):

add_custom_target cannot create target

"livox_ros_driver_generate_messages_eus" because another target with the

same name already exists. The existing target is a custom target created

in source directory

"/home/ubuntu/Agroscope/Post_processing/third_party/lidar/ROS_workspace_2/src/r2live/r2live".

See documentation for policy CMP0002 for more details.

Call Stack (most recent call first):

/opt/ros/melodic/share/genmsg/cmake/genmsg-extras.cmake:307 (include)

livox_ros_driver/CMakeLists.txt:46 (generate_messages)

CMake Error at /home/ubuntu/Agroscope/Post_processing/third_party/lidar/ROS_workspace_2/build/livox_ros_driver/cmake/livox_ros_driver-genmsg.cmake:139 (add_custom_target):

add_custom_target cannot create target

"livox_ros_driver_generate_messages_lisp" because another target with the

same name already exists. The existing target is a custom target created

in source directory

"/home/ubuntu/Agroscope/Post_processing/third_party/lidar/ROS_workspace_2/src/r2live/r2live".

See documentation for policy CMP0002 for more details.

Call Stack (most recent call first):

/opt/ros/melodic/share/genmsg/cmake/genmsg-extras.cmake:307 (include)

livox_ros_driver/CMakeLists.txt:46 (generate_messages)

CMake Error at /home/ubuntu/Agroscope/Post_processing/third_party/lidar/ROS_workspace_2/build/livox_ros_driver/cmake/livox_ros_driver-genmsg.cmake:180 (add_custom_target):

add_custom_target cannot create target

"livox_ros_driver_generate_messages_nodejs" because another target with the

same name already exists. The existing target is a custom target created

in source directory

"/home/ubuntu/Agroscope/Post_processing/third_party/lidar/ROS_workspace_2/src/r2live/r2live".

See documentation for policy CMP0002 for more details.

Call Stack (most recent call first):

/opt/ros/melodic/share/genmsg/cmake/genmsg-extras.cmake:307 (include)

livox_ros_driver/CMakeLists.txt:46 (generate_messages)

CMake Error at /home/ubuntu/Agroscope/Post_processing/third_party/lidar/ROS_workspace_2/build/livox_ros_driver/cmake/livox_ros_driver-genmsg.cmake:221 (add_custom_target):

add_custom_target cannot create target

"livox_ros_driver_generate_messages_py" because another target with the

same name already exists. The existing target is a custom target created

in source directory

"/home/ubuntu/Agroscope/Post_processing/third_party/lidar/ROS_workspace_2/src/r2live/r2live".

See documentation for policy CMP0002 for more details.

Call Stack (most recent call first):

/opt/ros/melodic/share/genmsg/cmake/genmsg-extras.cmake:307 (include)

livox_ros_driver/CMakeLists.txt:46 (generate_messages)

/usr/include/apr-1.0

apr-1

find livox sdk library success

-- Configuring incomplete, errors occurred!

See also "/home/ubuntu/Agroscope/Post_processing/third_party/lidar/ROS_workspace_2/build/CMakeFiles/CMakeOutput.log".

See also "/home/ubuntu/Agroscope/Post_processing/third_party/lidar/ROS_workspace_2/build/CMakeFiles/CMakeError.log".

Invoking "cmake" failed

Thanks for the amazing paper and code.

If I got it correctly, the codebase does not handle 3D lidar. Is that correct?

If yes, why is that? Is it inherant to the method or is it possible to make it work with small modifications?

Thanks.

A solution to this problem is:

in /usr/include/flann/util/serialization.h at the top change:

#include "flann/ext/lz4.h"

#include "flann/ext/lz4hc.h"

to:

#include "lz4.h"

#include "lz4hc.h"

More details here:

ethz-asl/lidar_align#16

Hi @ziv-lin , thanks for sharing your great work!

The fast-lio2 has been released, it's more precise and robust, and can be applied to both solid-state and mechanical lidar. Will you update the lidar odometry to fast-lio2?

Hi, Thanks for your great work!

But I have a question about the depends version.

my computer is Ubuntu16.04, the eigen version is 3.2.8, cmake version 3.5.1, gcc & g++ version 5.4.0;

I find that,the compiler require C++17 in the camkelists.txt;

What is the appropriate dependency version about r2live? Should I update the cmake and gcc version or update the ubuntu version 18.04?

Hi author,

I'm curious about Fδx and Fw derivation in Supplementary Material B. There are some question want to ask for your help, hope this will not make you annoyed and angry. Thanks for your help and time a lot!

This is the whole derivation process about delta_x{i+1}_hat, namely equ(6) of paper and Fδx and Fw, i am not sure whether there is some wrong place, if anybody find it, please give a comment, thanks for author's wonderful work!

diff_vins_lio_q = eigen_q(estimator.Rs[WINDOW_SIZE].transpose() * state_aft_integration.rot_end);

diff_vins_lio_t = state_aft_integration.pos_end - estimator.Ps[WINDOW_SIZE];

int Estimator::refine_vio_system(eigen_q q_extrinsic, vec_3 t_extrinsic)

{

Eigen::Matrix<double, 3, 3> R_mat_e = q_extrinsic.toRotationMatrix();

for (int win_idx = 0; win_idx <= WINDOW_SIZE; win_idx++)

{

Rs[win_idx] = Rs[win_idx] * R_mat_e;

Ps[win_idx] = Ps[win_idx] + t_extrinsic;

Vs[win_idx] = R_mat_e * Vs[win_idx];

}

for (int win_idx = 0; win_idx <= WINDOW_SIZE; win_idx++)

{

pre_integrations[win_idx]->delta_p = R_mat_e * pre_integrations[win_idx]->delta_p;

}

}

what is the formula for diff_vins_lio_q?I can not undestand it‘s meaning

And I found vins often reboot when run function “refine_vio_system” with my dataset, it shows big angle for fail

Thank you for sharing your amazing work. I am trying to catkin_make r2live but it is failing and I guess the error is related to opecv version. Currently I am using opencv 4.4.

Thanks for your great job!

I have livox-horizon,which have the build-in imu, and which camera I should prepare to run your code?

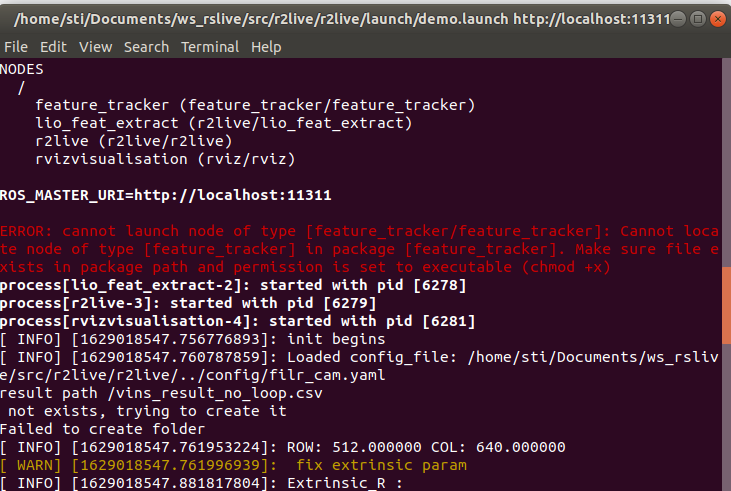

ERROR: cannot launch node of type [feature_tracker/feature_tracker]: Cannot locate node of type [feature_tracker] in package [feature_tracker]. Make sure file exists in package path and permission is set to executable (chmod +x)

ERROR: cannot launch node of type [r2live/lio_feat_extract]: Cannot locate node of type [lio_feat_extract] in package [r2live]. Make sure file exists in package path and permission is set to executable (chmod +x)

ERROR: cannot launch node of type [r2live/r2live]: Cannot locate node of type [r2live] in package [r2live]. Make sure file exists in package path and permission is set to executable (chmod +x)

How do I resolve this? thanks

Hi,

while compiling r2live, there are few files missing error-

fatal error: r2live/States.h: No such file or directory

and

fatal error: r2live/Pose6D.h: No such file or directory

Any suggestions?

Thanks.

Hey guys! Great work.

Could you please already indicate already your requirements? (at least which LiDAR, Ubuntu and ROS version)

It will be helpfully to prepare the material in the meanwhile..

Thanks!

Hei guys.

Looking forward for your work! Any estimations of when will be available? Just asking me a couple of questions. If it is possible, could you please incorporate two things in your code or document where they can be modified

I believe that been able to easily modify these parameters will be the best way to integrate your code in different scenarios.

Thanks!

Ps: If you could incorporate a way to also separate the capturing and the data processing for later post processing with PPK will be awesome too but that is more work ....

Hi,

i am using livox avia with IDS global shutter camera.

I have calibrated camera and livox-camera as well through this-

https://github.com/hku-mars/livox_camera_calib

Checked the optimizer result and it was good overlaying.

Using the livox-ros-driver -

https://github.com/ziv-lin/livox_ros_driver_for_R2LIVE

Run commands-

As soon it started, it continously drift and goes along like a pipe. Not giving the results.

Note-

i got extrinsic after livox-camera-calibration, and did -

rotation = r^(-1), translation = -r^(-1) * t, where r, t is the origin rotation and translation in result/extrinsic.txt. As i found

Rotation from camera frame to imu frame, imu^R_cam and Translation from camera frame to imu frame, imu^T_cam.

Any suggestions to check ? like parameters or something else.

Thanks.

Does anyone have a schematic of how to pair all the hardware together? It especially bothers me how to connect the camera and make sync with others ...

I got the following error when replaying the indoor_aggressive.bag.

I am on Ubuntu 20.04. ROS-Noetic

r2live/src/fast_lio/IMU_Processing.cpp, 135, check_state fail !!!! 10.3042 -5.29684 -9.93136

r2live/src/fast_lio/IMU_Processing.cpp, 135, check_state fail !!!! 10.3042 -5.29684 -9.93136

r2live/src/fast_lio/IMU_Processing.cpp, 135, check_state fail !!!! 10.3042 -5.29684 -9.93136

r2live/src/fast_lio/IMU_Processing.cpp, 135, check_state fail !!!! 10.3042 -5.29684 -9.93136

r2live/src/fast_lio/IMU_Processing.cpp, 135, check_state fail !!!! 10.3042 -5.29684 -9.93136

r2live/src/fast_lio/IMU_Processing.cpp, 135, check_state fail !!!! 10.3042 -5.29684 -9.93136

r2live/src/fast_lio/IMU_Processing.cpp, 135, check_state fail !!!! 10.3042 -5.29684 -9.93136

r2live/src/fast_lio/IMU_Processing.cpp, 135, check_state fail !!!! 10.3042 -5.29684 -9.93136

r2live/src/fast_lio/IMU_Processing.cpp, 135, check_state fail !!!! 10.3042 -5.29684 -9.93136

r2live/src/fast_lio/IMU_Processing.cpp, 135, check_state fail !!!! 10.3042 -5.29684 -9.93136

W0914 16:21:12.606252 1219659 levenberg_marquardt_strategy.cc:115] Linear solver failure. Failed to compute a step: Eigen failure. Unable to perform dense Cholesky factorization.

W0914 16:21:12.606863 1219659 levenberg_marquardt_strategy.cc:115] Linear solver failure. Failed to compute a step: Eigen failure. Unable to perform dense Cholesky factorization.

Can I use a simple DSLR Camera? If not, why do we need to use this industrial camera that has not many megapixels? sry for stupid question:)

We see a nice touch screen monitor in your sample video here - what device is this? Something from DJI?

Great work! I can't wait to try it out.

In your opinion, can placing the system on a gimbal reduce the error propagation?

I have a dataset with velodyne point cloud, which is in the format of sensor_msgs/PointCloud2. There exists a velo16_handler:

https://github.com/hku-mars/r2live/blob/master/r2live/src/fast_lio/feature_extract.cpp#L115. So i changed the lidar_type from 1 to 2: https://github.com/hku-mars/r2live/blob/master/config/lio_config.yaml#L2. Afterwards, i launched the program and replayed my dataset.

I got the following issue:

Failed to find match for field 'normal_x'.

Failed to find match for field 'normal_y'.

Failed to find match for field 'normal_z'.

Failed to find match for field 'curvature'.

Failed to find match for field 'normal_x'.

Failed to find match for field 'normal_y'.

Failed to find match for field 'normal_z'.

Failed to find match for field 'curvature'.

Does that mean that i have to pre-compute the normal_x, normal_y, normal_z and curvature for the velodyne point cloud?

Is there any method in your code to do this?

When I run demo.launch and the bag file you provided, I encountered the following problems:

/home/wangbin/catkin_ws/src/r2live/r2live/src/fast_lio/IMU_Processing.cpp, 400, dt = 0.104579

QObject::connect: Cannot queue arguments of type 'QVector'

(Make sure 'QVector' is registered using qRegisterMetaType().)

QObject::connect: Cannot queue arguments of type 'QVector'

(Make sure 'QVector' is registered using qRegisterMetaType().)

QObject::connect: Cannot queue arguments of type 'QVector'

(Make sure 'QVector' is registered using qRegisterMetaType().)

QObject::connect: Cannot queue arguments of type 'QVector'

(Make sure 'QVector' is registered using qRegisterMetaType().)

QObject::connect: Cannot queue arguments of type 'QVector'

(Make sure 'QVector' is registered using qRegisterMetaType().)

QObject::connect: Cannot queue arguments of type 'QVector'

(Make sure 'QVector' is registered using qRegisterMetaType().)

QObject::connect: Cannot queue arguments of type 'QVector'

(Make sure 'QVector' is registered using qRegisterMetaType().)

QObject::connect: Cannot queue arguments of type 'QVector'

(Make sure 'QVector' is registered using qRegisterMetaType().)

[rvizvisualisation-5] process has died [pid 2346, exit code -11, cmd /opt/ros/melodic/lib/rviz/rviz -d /home/wangbin/catkin_ws/src/r2live/r2live/../config/rviz/vins_rviz_config.rviz __name:=rvizvisualisation __log:=/home/wangbin/.ros/log/bfc04080-0e2c-11ec-9a78-000c29ba4d3c/rvizvisualisation-5.log].

log file: /home/wangbin/.ros/log/bfc04080-0e2c-11ec-9a78-000c29ba4d3c/rvizvisualisation-5*.log

And the rviz crashed. What should I do to solve this problem?

when I use Velodyne 16, switch lidar type to 2, the feature extract node has the following warning.

Failed to find match for field 'normal_x'.

Failed to find match for field 'normal_y'.

Failed to find match for field 'normal_z'.

Failed to find match for field 'curvature'.

I don't think the velodyne_points field has those attributes and I cannot find in the source code of this warning.

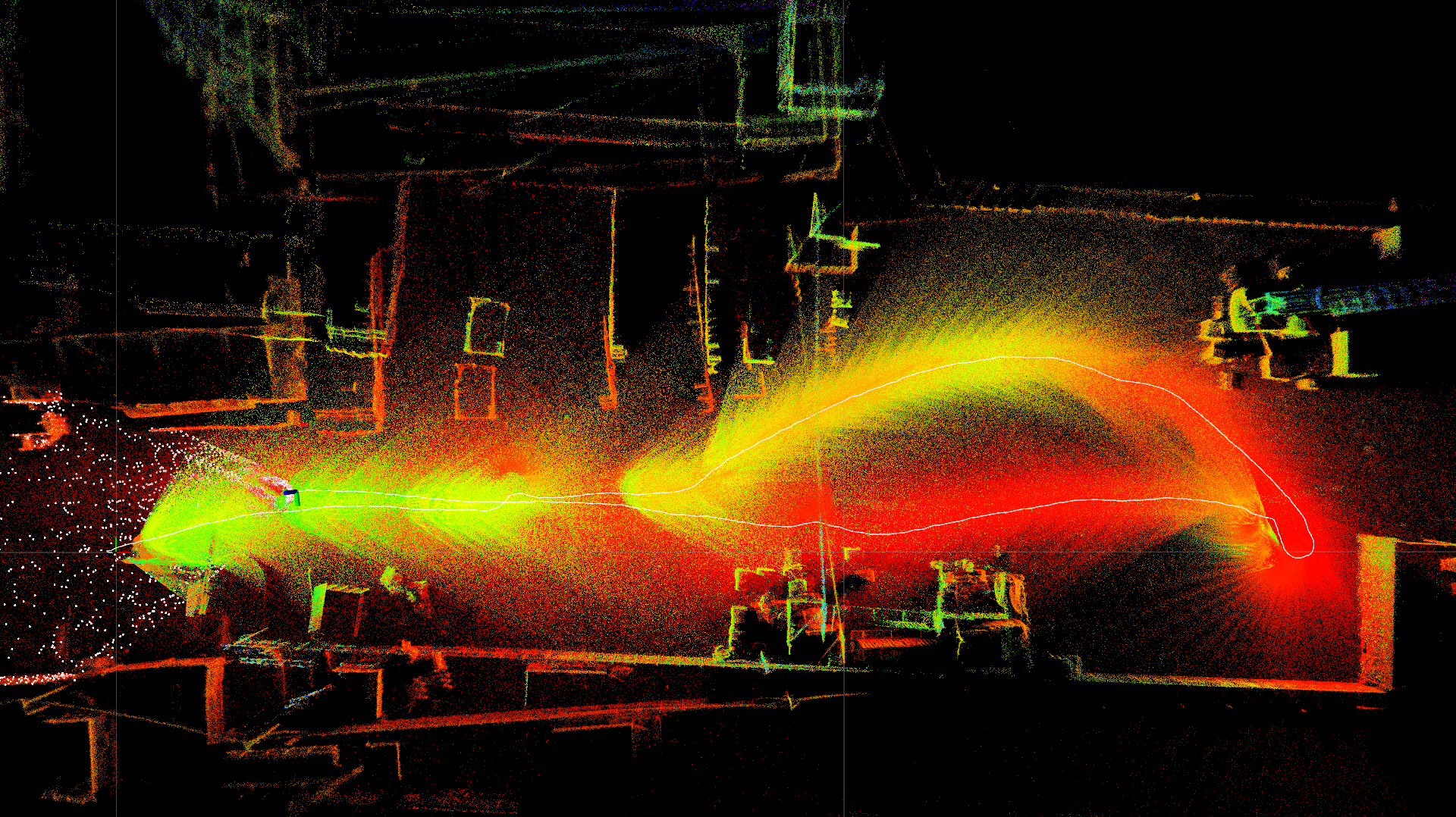

when I use the harbor.bag run the ${ws_rslive}, the overlapping area of the trajectory has a significant ghosting phenomenon, and the details are shown below:

Besides, when I running the ${ws_rslive}, the following error message will appear:

Here, I would like to ask, what is the above problem caused by the cumulative error of the sensor?

The distance between this test data is not long, but there is a significant ghosting phenomenon in the trajectory coincidence area. Is this normal situation?

Can I use a loop detection (SCAN Contex) or join GPS to do a priori information to correct the problem of building a map? @ @ziv-lin

Hello,

I am able to build the project with opecv4 changes it is successful. But when I running

roslaunch r2live demo.launch

It is crashing and giving the following error:

Multi thread started

/home/adit/catkin_ws/src/r2live/r2live/src/./fast_lio/fast_lio.hpp 791

[Ros_parameter]: fast_lio/dense_map_enable ==> 1

[Ros_parameter]: fast_lio/lidar_time_delay ==> 0

[Ros_parameter]: fast_lio/max_iteration ==> 4

[Ros_parameter]: fast_lio/fov_degree ==> 360

[Ros_parameter]: fast_lio/filter_size_corner ==> 0.4

[Ros_parameter]: fast_lio/filter_size_surf ==> 0.4

[Ros_parameter]: fast_lio/filter_size_surf_z ==> 0.4

[Ros_parameter]: fast_lio/filter_size_map ==> 0.4

[Ros_parameter]: fast_lio/cube_side_length ==> 1e+09

[Ros_parameter]: fast_lio/maximum_pt_kdtree_dis ==> 0.5

[Ros_parameter]: fast_lio/maximum_res_dis ==> 0.3

[Ros_parameter]: fast_lio/planar_check_dis ==> 0.1

[Ros_parameter]: fast_lio/long_rang_pt_dis ==> 50

[Ros_parameter]: fast_lio/publish_feature_map ==> 0

/home/adit/catkin_ws/src/r2live/r2live/src/./fast_lio/fast_lio.hpp 816

/home/adit/catkin_ws/src/r2live/r2live/src/./fast_lio/fast_lio.hpp 829

/home/adit/catkin_ws/src/r2live/r2live/src/./fast_lio/fast_lio.hpp 831

[ WARN] [1625127750.923615584]: waiting for image and imu...

~~~~/home/adit/catkin_ws/src/r2live/r2live/ doesn't exist

[r2live-4] process has died [pid 33098, exit code -11, cmd /home/adit/catkin_ws/devel/lib/r2live/r2live __name:=r2live __log:=/home/adit/.ros/log/7a2ab4f8-da45-11eb-a931-3df647905bdb/r2live-4.log].

log file: /home/adit/.ros/log/7a2ab4f8-da45-11eb-a931-3df647905bdb/r2live-4*.log

Thank you very much for your contribution and work. How to use velodyne lidar data to test r2live? FAST_LIO supports velodyne data, how to modify it in this project? Please advise,thanks.

Hello, thank you for your great work! Now I want to save a global .pcd map, how to do it?

Hello, I make some changes in feature_tracker_node.cpp to receive and process sensor_msgs/CompressedImage.

cv::Mat show_img = cv::imdecode(cv::Mat(img_msg->data),1); Note that img_msg is a type of sensor_msgs::CompressedImageConstPtr.

However, error occured when program uses this cv::Mat show_img to do feature tracking. Error is as follows.

OpenCV Error: Assertion failed (src.type() == CV_8UC1 || src.type() == CV_32FC1) in cornerEigenValsVecs, file /build/opencv-L2vuMj/opencv-3.2.0+dfsg/modules/imgproc/src/corner.cpp, line 287

terminate called after throwing an instance of 'cv::Exception'

what(): /build/opencv-L2vuMj/opencv-3.2.0+dfsg/modules/imgproc/src/corner.cpp:287: error: (-215) src.type() == CV_8UC1 || src.type() == CV_32FC1 in function cornerEigenValsVecs

Any suggestions to this opencv type error?

Is the current code base compatible with FAST-LIO2?

and #include <vins_estimator/Pose6D.h>

Thanks for sharing such amazing work. I saw the hardware requirements it needs IMU, Lidar, and RGB data. As per my knowledge all these things are available in realsense L515. Is there any way I can the project with bag file of l515?

Thank you

It's really great, but can you please tell me how to integrate mid40 with external imu, because I have done it according to your algorithm a month ago, according to the original loam version (although your algorithm has been upgraded many times ) I want to upgrade now. It is too extravagant for me to buy a lidar with imu built-in again. I have a nine-axis imu. How can I upgrade? Thanks, the poor from the northeast of the mainland, thank you again

HI:

thanks for your work,it is awesome!

can you provide the Approximate time when release code?we need it badly @ziv-lin

hello, I can‘t find the source codes, and where can I download it from?

Hello, I used my livox_horizon radar and realsenseD435 camera to reproduce your code. I did not calibrate imu and set estimate_extrinsic: 2 in filr_cam.yaml. The following problems occurred:

setting /run_id to bd46673e-ead8-11eb-951f-00e93a01d3ad

process[rosout-1]: started with pid [23836]

started core service [/rosout]

process[feature_tracker-2]: started with pid [23843]

process[lio_feat_extract-3]: started with pid [23844]

process[r2live-4]: started with pid [23845]

process[rvizvisualisation-5]: started with pid [23851]

[INFO] [1626950218.102325585]: init begins

[INFO] [1626950218.105416167]: Loaded config_file: /home/nvidia/r2live/src/r2live/r2live/../config/filr_cam.yaml

result path /vins_result_no_loop.csv

not exists, trying to create it

Failed to create folder

[INFO] [1626950218.106288960]: ROW: 480.000000 COL: 640.000000

[WARN] [1626950218.106301497]: have no prior about extrinsic param, calibrate extrinsic param

[INFO] [1626950218.106328359]: Unsynchronized sensors, online estimate time offset, initial td: 0

[r2live-4] process has died [pid 23845, exit code -11, cmd /home/nvidia/r2live/devel/lib/r2live/r2live __name:=r2live __log:=/home/nvidia/.ros/log/bd46673e -ead8-11eb-951f-00e93a01d3ad/r2live-4.log].

log file: /home/nvidia/.ros/log/bd46673e-ead8-11eb-951f-00e93a01d3ad/r2live-4*.log

How can I solve it?

I don't see anything in this paper about dynamic scene processing, but in the demonstration to see a lot of dynamic objects in the scene can also get better results, want to ask why?

Hi sir,

Could you provide datasets in BaiduYunPan?

Thanks. :)

What is the unit of linear acceleration in the IMU topic?

In livox _ros _driver, the unit of acceleration in the IMU topic is [g] by default. (Livox-SDK/livox_ros_driver#63)

But in your sample bag file(harbor.bag), the unit of acceleration in your IMU topic(/livox/imu) looks like [m/s^2] for me.

Best regards,

Hello,

Thanks for sharing such amazing work. I am experiencing large drift when I am running demo.launch on live stream. I have changed the calibration params in config file.

For livox, I am using the following driver (https://github.com/ziv-lin/livox_ros_driver_for_R2LIVE)

The following is the set of errors I am gettting:

/opt/catkin_ws/src/r2live/src/fast_lio/IMU_Processing.cpp, 400, dt = 0.105697 /opt/catkin_ws/src/r2live/src/fast_lio/IMU_Processing.cpp, 135, check_state fail !!!! 5.55778 -9.90081 -10.1357 /opt/catkin_ws/src/r2live/src/fast_lio/IMU_Processing.cpp, 135, check_state fail !!!! 5.55778 -9.90081 -10.1357 /opt/catkin_ws/src/r2live/src/fast_lio/IMU_Processing.cpp, 135, check_state fail !!!! 5.55778 -9.90081 -10.1357 /opt/catkin_ws/src/r2live/src/fast_lio/IMU_Processing.cpp, 135, check_state fail !!!! 5.55778 -9.90081 -10.1357 /opt/catkin_ws/src/r2live/src/fast_lio/IMU_Processing.cpp, 135, check_state fail !!!! 5.55778 -9.90081 -10.1357 /opt/catkin_ws/src/r2live/src/fast_lio/IMU_Processing.cpp, 135, check_state fail !!!! 5.55778 -9.90081 -10.1357 /opt/catkin_ws/src/r2live/src/fast_lio/IMU_Processing.cpp, 135, check_state fail !!!! 5.55778 -9.90081 -10.1357 /opt/catkin_ws/src/r2live/src/fast_lio/IMU_Processing.cpp, 135, check_state fail !!!! 5.55778 -9.90081 -10.1357 /opt/catkin_ws/src/r2live/src/fast_lio/IMU_Processing.cpp, 135, check_state fail !!!! 5.55778 -9.90081 -10.1357 /opt/catkin_ws/src/r2live/src/fast_lio/IMU_Processing.cpp, 135, check_state fail !!!! 5.55778 -9.90081 -10.1357 state_inout |[673.69852] | (-12.992, -47.860, 54.054) | (18.039, -1.217, -9.484) | (5.558, -9.901, 0.000) | (-0.150, -1.614, 0.984) | (-1.413, 3.676, -6.752) state_in |[673.69852] | (-12.992, -47.860, 54.054) | (18.039, -1.217, -9.484) | (5.558, -9.901, -10.136) | (-0.150, -1.614, 0.984) | (-1.413, 3.676, -6.752) /opt/catkin_ws/src/r2live/src/fast_lio/IMU_Processing.cpp, 135, check_state fail !!!! 5.31588 -10.1107 -0.17032

Everytime after the following error I am getting large drift.

[ INFO] [1632737692.276809736]: big translation [ WARN] [1632737692.277026159]: failure detection! [ WARN] [1632737692.281113827]: system reboot!

Hi guys what a great work!

Could you please give us more accurate information about the hardware used?

Especially

Thank you!

It's really a great job!

I have two questions about the camera here.

Question 1: Do the rolling shutter and the global shutter of the camera (the camera FLIR Blackfly you used is the global shutter) have a great influence on the experimental results? (Theoretically speaking, the shutter at low speed will not have too much deformation deformation will not be too large). Question 2: does the dark environment have a great impact on the construction of the map, and if so, how can it be set to reduce the impact? thank you

问题1: 视觉eskf计算结果没有使用 (estimator_node.cpp的prcoess函数,740行开始)

g_lio_state = state_before_esikf;

t_s.tic();

estimator.solve_image_pose(img_msg->header);

g_lio_state = state_before_esikf; -->使得视觉的eskf计算结果后面没有作用

solve_image_pose -->计算也没有也没有使用视觉的eskf计算结果

问题2 视觉初始化后第一次solveOdometry使用的m_lio_state_prediction_vec为默认值 :

在视觉初始化成功后的第一次solveOdometry中

if( ESTIMATE_EXTRINSIC != 2 && (header.stamp.toSec() - initial_timestamp) > 0.1)

{

result = initialStructure();

}

if(result)

{

solver_flag = NON_LINEAR;

**solveOdometry();**

slideWindow();

}

内部会使用m_lio_state_prediction_vec,这时全为默认值

for (int i = 0; i <= WINDOW_SIZE; i++)

{

---------------------------------------------------------------------------------------------------------------------

LiDAR_prior_factor_15 *lidar_prior_factor = new LiDAR_prior_factor_15(&m_lio_state_prediction_vec[i]);

----------------------------------------------------------------------------------------------------------------------

L_prior_factor l_prior_factor;

l_prior_factor.add_lidar_prior_factor(this, lidar_prior_factor, i);

lidar_prior_factor_vec.push_back(l_prior_factor);

}

问题3 直接强行更新Vs:

void sync_lio_to_vio(Estimator &estimator)

{

check_state(g_lio_state);

int frame_idx = estimator.frame_count;

frame_idx = WINDOW_SIZE;

if (abs(g_camera_lidar_queue.m_last_visual_time - g_lio_state.last_update_time) < 1.0)

{

if (g_lio_state.bias_a.norm() < 0.5 && g_lio_state.bias_g.norm() < 1.0)

{

estimator.Bas[frame_idx] = g_lio_state.bias_a;

estimator.Bgs[frame_idx] = g_lio_state.bias_g;

---------------------------------------------------------------------------------------------------------------------

estimator.Vs[frame_idx] = diff_vins_lio_q.toRotationMatrix().inverse() * g_lio_state.vel_end;

---------------------------------------------------------------------------------------------------------------------

estimator.m_gravity = g_lio_state.gravity;

G_gravity = estimator.m_gravity;

update();

}

}

}

1秒时间速度可以变化很大,特别是方向,直接更新觉得很粗暴

estimator_node.cpp的prcoess函数()756行开始

if ((g_lio_state.last_update_time - g_camera_lidar_queue.m_visual_init_time > g_camera_lidar_queue.m_lidar_drag_cam_tim))

{

StatesGroup state_before_esikf = g_lio_state;

if(estimator.Bas[WINDOW_SIZE].norm() < 0.5)

{

g_lio_state.bias_a = estimator.Bas[WINDOW_SIZE];

}

g_lio_state.bias_g = estimator.Bgs[WINDOW_SIZE];

---------------------------------------------------------------------------------------------------------------------

g_lio_state.vel_end = diff_vins_lio_q.toRotationMatrix() * estimator.Vs[WINDOW_SIZE];

---------------------------------------------------------------------------------------------------------------------

g_lio_state.cov = state_aft_integration.cov;

Eigen::Matrix3d temp_R = estimator.Rs[WINDOW_SIZE] * diff_vins_lio_q.toRotationMatrix();

Eigen::Vector3d temp_T = estimator.Ps[WINDOW_SIZE] + diff_vins_lio_t;

eigen_q q_I = eigen_q(1.0, 0, 0, 0);

double angular_diff = eigen_q(temp_R.transpose() * state_before_esikf.rot_end).angularDistance(q_I) * 57.3;

double t_diff = (temp_T - state_before_esikf.pos_end).norm();

if ((t_diff < 0.2) && (angular_diff < 2.0))

{

g_lio_state.cov = state_aft_integration.cov;

g_lio_state.last_update_time = cam_update_tim;

g_lio_state.rot_end = temp_R;

g_lio_state.pos_end = temp_T;

}

unlock_lio(estimator);

}

位姿解算不对时,所以位姿不用,但速度使用了(位姿不对时,速度不会差很多吗),这个行吗?

As in Fast_lio2 , Is there a way to save the trajectory of the camera/lidar? Thanks!

Dear sir ,

Thank you for opensourcing the code but I can't seem to find the ekf based vio you mentioned in paper.

Thank you.

A declarative, efficient, and flexible JavaScript library for building user interfaces.

🖖 Vue.js is a progressive, incrementally-adoptable JavaScript framework for building UI on the web.

TypeScript is a superset of JavaScript that compiles to clean JavaScript output.

An Open Source Machine Learning Framework for Everyone

The Web framework for perfectionists with deadlines.

A PHP framework for web artisans

Bring data to life with SVG, Canvas and HTML. 📊📈🎉

JavaScript (JS) is a lightweight interpreted programming language with first-class functions.

Some thing interesting about web. New door for the world.

A server is a program made to process requests and deliver data to clients.

Machine learning is a way of modeling and interpreting data that allows a piece of software to respond intelligently.

Some thing interesting about visualization, use data art

Some thing interesting about game, make everyone happy.

We are working to build community through open source technology. NB: members must have two-factor auth.

Open source projects and samples from Microsoft.

Google ❤️ Open Source for everyone.

Alibaba Open Source for everyone

Data-Driven Documents codes.

China tencent open source team.