opendrivelab / openlane

[ECCV 2022 Oral] OpenLane: Large-scale Realistic 3D Lane Dataset

License: Apache License 2.0

Hi! Thank you for the great work.

I have a question regarding step b of the pipeline below:

What is the exact meaning of the sentence "The position of points on the 2D annotation are interpolated to get a dense point set"?

Is the interpolation done in 3D (using the matched 3D points of step a), or is it done in the 2D plane (somehow using the remaining 2D points that were not matched with any projected 3D points in step a)?

Thanks for your clarification!
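One possible reading of the quoted sentence is plain 2D interpolation along the annotated polyline. The sketch below shows that reading only. It is not taken from the OpenLane pipeline, and `densify_polyline` and its `step` parameter are hypothetical names introduced for illustration.

```python
import numpy as np

def densify_polyline(uv, step=1.0):
    """Resample a 2D polyline at roughly `step`-pixel spacing along its arc length."""
    uv = np.asarray(uv, dtype=float)               # shape (N, 2)
    seg = np.linalg.norm(np.diff(uv, axis=0), axis=1)
    s = np.concatenate([[0.0], np.cumsum(seg)])    # cumulative arc length
    n = max(int(np.ceil(s[-1] / step)) + 1, 2)
    s_new = np.linspace(0.0, s[-1], n)
    u = np.interp(s_new, s, uv[:, 0])
    v = np.interp(s_new, s, uv[:, 1])
    return np.stack([u, v], axis=1)

# Toy annotation with three vertices (hypothetical values).
dense = densify_polyline([[0, 0], [10, 0], [10, 10]], step=1.0)
```

Interpolating in 3D instead would use the same idea with the matched 3D points as vertices; which of the two the authors use is exactly what the question asks.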

Hi! Thank you for your great work. I am trying to map your 3D points onto the corresponding point clouds of the Waymo dataset. I noticed a problem:

Certain lanes seem to have very jagged curves, especially curbs, as shown in purple.

I have checked the raw data, and the z values are indeed not smooth from point to point. I am not sure whether any post-processing is required, or whether certain types of lanes are not smooth by nature.

Hello,

I am trying to download the dataset. Unfortunately, I could not download images.tar. I have tried several times from Google Drive. Is there any chance that the file has become corrupt?

Thanks for your help

Esat

hi,

As far as I understand from the documentation and discussions, the lane labels are expressed in the camera coordinate system. So by multiplying them by the extrinsic matrix of the camera (as it appears in the file), I should get them in the vehicle coordinate system.
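The transform described above can be sketched as a homogeneous matrix multiplication. This is a minimal illustration, assuming the extrinsic is a 4x4 camera-to-vehicle matrix and that the points use the same camera axis convention the matrix expects. The function name `camera_to_vehicle` and the toy matrix are hypothetical.

```python
import numpy as np

def camera_to_vehicle(points_cam, extrinsic):
    """Apply a 4x4 camera-to-vehicle transform to an (N, 3) array of points."""
    points_cam = np.asarray(points_cam, dtype=float)
    ones = np.ones((points_cam.shape[0], 1))
    homo = np.hstack([points_cam, ones])           # (N, 4) homogeneous coordinates
    return (np.asarray(extrinsic, dtype=float) @ homo.T).T[:, :3]

# Toy extrinsic: camera mounted 2 m above the vehicle origin, no rotation.
T = np.eye(4)
T[2, 3] = 2.0
pts_vehicle = camera_to_vehicle([[10.0, 0.0, -2.0]], T)   # [[10., 0., 0.]]
```

If the labels end up offset from the point cloud after this step, a mismatch in axis conventions between the stored points and the matrix is one thing worth checking.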

I've attempted to plot the point cloud (pcd) as retrieved from Waymo (Cartesian representation in the vehicle coordinate system), using:

frame_utils.parse_range_image_and_camera_projection
frame_utils.convert_range_image_to_point_cloud

What I see is:

(1) Projecting the pcd and the labels onto the image seems to fit nicely. The high-intensity lane-marking points from the pcd appear to fit their respective pixels in the image.

(2) Plotting them in Open3D indicates that the labels (in vehicle coordinates) appear slightly below the point cloud.

--> As such, while the intensities of the pcd are projected onto the image in (1) and seem to fit the picture, the 3D representation of the labels appears below them and to the side.

Here are examples referring to 'segment-10770759614217273359_1465_000_1485_000_with_camera_labels/152271424044879700' in the training set. The projection onto the image is here

(lane line annotations projected onto the image are magenta, the point cloud is colored by intensity, and green/yellow points are of high intensity and seem to correspond to reflective lane lines).

On the other hand, looking at them from the side in open 3d, we see:

And also (the gray/black line is on z = 0 plane, and magenta is still the lane lines color).

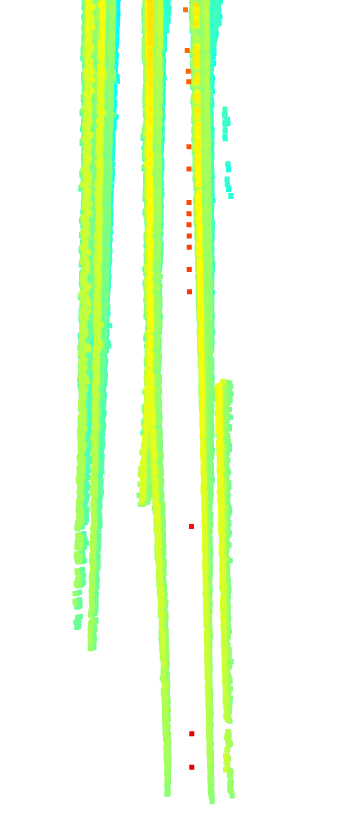

Looking from BEV, we get the following (here we draw on the x,y plane; red is the color of the annotations, and the pcd gets darker as its intensity increases). The intensities indicate lane lines, and the lane annotations seem to deviate from them.

This seems like a discrepancy in the labeling procedure described in the paper. What am I missing here?

Why do some JSON labels in this dataset have no corresponding images, and why do some images have no corresponding JSON labels?
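To list exactly which files are unpaired, the two directory trees can be compared by relative path. The sketch below assumes images end in `.jpg`, labels end in `.json`, and both trees share the same `segment-.../timestamp` layout. The function name `find_unpaired` and the directory arguments are hypothetical.

```python
from pathlib import Path

def find_unpaired(image_root, label_root):
    """Return (images without labels, labels without images) as sets of relative paths."""
    image_root, label_root = Path(image_root), Path(label_root)
    images = {p.relative_to(image_root).with_suffix("")
              for p in image_root.rglob("*.jpg")}
    labels = {p.relative_to(label_root).with_suffix("")
              for p in label_root.rglob("*.json")}
    return images - labels, labels - images
```

Calling `find_unpaired("images/training", "lane3d_1000/training")` (paths are examples) returns two sets that can be printed or counted before reporting the mismatch.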

Excuse me, I would like to know how to generate labels from the JSON file.

I've drawn the 2D labels in sequence, but the lanes are not smooth.

Hi, thanks for your nice work. When I downloaded OpenLane, I found that lane3d_1000_v1.2.zip cannot be unzipped. Maybe the file is damaged.

hi, as the title.

Hi @zihanding819 ,

I notice that gt_lanes = [prune_3d_lane_by_visibility(np.array(gt_lane), np.array(gt_visibility[k])) for k, gt_lane in enumerate(gt_lanes)] was removed in v1.1 and gt_visibility is not used in bench. Does this mean we should not use the visibility labels in OpenLane?

First of all, thanks for your open-source dataset. I found a problem when using it.

The Lane Annotation Criterion mentions "attribute" and "track_id" fields in the lane annotation, but these two fields are not found in the downloaded annotation files.

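Hello! Thank you very much for your excellent work and dataset. I visualized the annotation data using the evaluation code you provided and found results like the following, which do not seem to match what I expected. Could you please confirm whether there is something wrong with my processing pipeline? Looking forward to your reply, thanks.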

Hi,

Do you have a tool to visualize the ground-truth labels on the images before training or validation? I just want to browse through the OpenLane dataset with the projected labels (GT) on the images.

Thank you!

Thank you in advance!

Hi @dyfcalid, this one is related to the cam_representation question. Why are the extrinsics recomputed? What are the original extrinsics defined with respect to? The following is the piece of code that I am referring to:

cam_extrinsics = np.array(info_dict['extrinsic'])
# Re-calculate extrinsic matrix based on ground coordinate
R_vg = np.array([[0, 1, 0],
                 [-1, 0, 0],
                 [0, 0, 1]], dtype=float)
R_gc = np.array([[1, 0, 0],
                 [0, 0, 1],
                 [0, -1, 0]], dtype=float)
cam_extrinsics[:3, :3] = np.matmul(np.matmul(
    np.matmul(np.linalg.inv(R_vg), cam_extrinsics[:3, :3]),
    R_vg), R_gc)
cam_extrinsics[0:2, 3] = 0.0
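As a sanity check on the two constant matrices in the snippet, the sketch below verifies that both are proper rotations and shows where they send a few unit vectors. The axis names in the comments (an x-right, y-down, z-forward camera; a ground frame with y forward and z up; a vehicle frame with x forward) are an interpretation consistent with the arithmetic, not a statement from the authors.

```python
import numpy as np

R_vg = np.array([[0, 1, 0], [-1, 0, 0], [0, 0, 1]], dtype=float)
R_gc = np.array([[1, 0, 0], [0, 0, 1], [0, -1, 0]], dtype=float)

# Both are proper rotations: orthonormal with determinant +1.
for R in (R_vg, R_gc):
    assert np.allclose(R @ R.T, np.eye(3))
    assert np.isclose(np.linalg.det(R), 1.0)

cam_forward = np.array([0.0, 0.0, 1.0])    # +z of the assumed camera convention
cam_down = np.array([0.0, 1.0, 0.0])       # +y of the assumed camera convention
forward_in_ground = R_gc @ cam_forward     # [0, 1, 0]: camera forward becomes +y
down_in_ground = R_gc @ cam_down           # [0, 0, -1]: camera down becomes -z
ground_y_in_vehicle = R_vg @ np.array([0.0, 1.0, 0.0])   # [1, 0, 0]
```

Read this way, the product in the snippet only re-expresses the camera rotation in different axis conventions. It does not change the physical orientation of the camera.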

Thank you for releasing this excellent work by your team!

Could you provide more information about your labeling pipeline for 3D lanes? Are they labeled by human labelers or by PersFormer? If they are labeled by PersFormer, how did you incorporate the LiDAR data?

Hi @ChonghaoSima @zihanding819, I found some bugs in v1.1:

both_invisible_indices are counted in num_match_mat, but the denominator (https://github.com/OpenPerceptionX/OpenLane/blob/main/eval/LANE_evaluation/lane3d/eval_3D_lane.py#L236) only counts visible points.

-1s should be removed before computing the average. I got this with your released PersFormer checkpoint:

===> Evaluation laneline F-measure: 0.78679105

===> Evaluation laneline Recall: 0.69620109

===> Evaluation laneline Precision: 0.90448399

===> Evaluation laneline Category Accuracy: 0.87950062

===> Evaluation laneline x error (close): 0.18658032 m

===> Evaluation laneline x error (far): -0.22041094 m

===> Evaluation laneline z error (close): -0.02688632 m

===> Evaluation laneline z error (far): -0.34050375 m

pred_lanes should be converted to ndarray before https://github.com/OpenPerceptionX/OpenLane/blob/main/eval/LANE_evaluation/lane3d/eval_3D_lane.py#L95
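The remark about removing -1 entries before averaging can be illustrated with a toy array. This sketch assumes -1 is used as a sentinel for "no valid sample", as the issue text implies. The function name `mean_ignoring_sentinel` and the values are hypothetical.

```python
import numpy as np

def mean_ignoring_sentinel(errors, sentinel=-1.0):
    """Average only the entries that are not equal to the sentinel value."""
    errors = np.asarray(errors, dtype=float)
    valid = errors != sentinel
    return float(errors[valid].mean()) if valid.any() else float("nan")

x_err = [0.2, -1.0, 0.4, -1.0]
naive = float(np.mean(x_err))              # about -0.35, pulled negative by the sentinels
filtered = mean_ignoring_sentinel(x_err)   # about 0.3
```

A negative mean for a quantity that should be a non-negative distance, as in the "far" errors listed above, is the kind of symptom this would produce.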

I downloaded the OpenLane dataset via your released Python client and visualized several cases. Noticeable label noise is found in almost all images; take the figures below as examples (red and yellow lines indicate lane2d and lane3d). lane2d and lane3d are simply drawn onto the image by coordinate mapping, with no other filtering. Looking forward to your reply, thanks.

Hi,

I am writing with regard to the reference frame documentation and the transformations between the different frames.

In particular, in:

cam_extrinsics[:3, :3] = np.matmul(np.matmul(
    np.matmul(np.linalg.inv(R_vg), cam_extrinsics[:3, :3]),
    R_vg), R_gc)
gt_cam_height = cam_extrinsics[2, 3]
gt_cam_pitch = 0
cam_extrinsics[0:2, 3] = 0.0

I have read in previous issues that x and y are set to 0 so that, when lanes are transformed into the Lanelet reference frame, their x and y coincide with those of the Waymo camera coordinate system, right?

I am trying to project the lane points into BEV and obtain both the homography and the 3D Affine transformation that would transform the image and the lane points respectively. I am using the method of https://github.com/yuliangguo/Pytorch_Generalized_3D_Lane_Detection.

For transforming lanes onto the plane z = 0, they perform the following transformation:

For the image, it uses a homography that depends on the height of the camera with respect to the ground and on the pitch of the camera. When I apply these transformations, which require cam_pitch and the camera height, the transformation to BEV is wrong.

Here are my questions:

cam_extrinsics[2, 3] = 0.0, then lanes in Lanelet notation should have the same height as in the Waymo camera coordinate system, right?

Thank you very much for the dataset and your attention!

Thanks for your nice paper. Your OpenLane dataset is helpful for the lane detection task! I wonder if you could provide the lidar data corresponding to the image data you already provide? Thanks!

In the original Waymo dataset, there is a global pose for every frame. It is very useful for studying or evaluating work on temporal models, such as temporal segmentation, semantic mapping and so on.

Do you have any plan to add this attribute to your label JSON? I think it would be very beneficial to many communities!

Hi, thanks for your nice paper. Could you please share the code for the 3D lane generation pipeline? It was used to produce the ground truth, if I understood correctly?

I have OpenCV 4 installed, and most of the time it just "worked".

But for this project, we need to set OPENCV_INCLUDE and OPENCV_LIB_PATH for OpenCV 3.4.

Therefore I downloaded the source code and built it without installing it to the system path.

Then I tried different paths, but none worked.

Here are the paths I have tried:

OPENCV_INCLUDE = /home/derek/OpenLane/opencv34/build/include
OPENCV_LIB_PATH = /home/derek/OpenLane/opencv34/build/lib

OPENCV_INCLUDE = /home/derek/OpenLane/opencv34/include/
OPENCV_LIB_PATH = /home/derek/OpenLane/opencv34/modules/

OPENCV_INCLUDE = /usr/local/include
OPENCV_LIB_PATH = /usr/local/lib

Any suggestions on how to set the path correctly?
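An OpenCV build tree that was never installed usually does not gather all module headers under one include directory, so pointing the two variables at an install prefix (the result of `make install` with a custom `CMAKE_INSTALL_PREFIX`) tends to be simpler. The sketch below checks whether a candidate pair of directories looks like such a prefix. It assumes the Makefile expects `OPENCV_INCLUDE` to contain an `opencv2/` folder, which should be verified against the project's Makefile. `check_opencv_paths` is a hypothetical helper.

```python
from pathlib import Path

def check_opencv_paths(include_dir, lib_dir):
    """Report whether the two directories look like an installed OpenCV tree."""
    include_dir, lib_dir = Path(include_dir), Path(lib_dir)
    has_headers = (include_dir / "opencv2" / "core.hpp").is_file()
    has_libs = any(lib_dir.glob("libopencv_core.*"))
    return has_headers, has_libs
```

Running it on each of the pairs listed above shows quickly which half of a pair (headers or libraries) is missing.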

Hi, thanks for open-sourcing this awesome dataset!

Since OpenLane is extracted from the Waymo dataset, which has lidar point clouds, do you think the OpenLane dataset can be used for a lidar-camera fusion curb/lane detection task?

A reminder, it seems zip files for cipo & scene are named as xxx.tar.

If I force the prediction to be equal to the ground truth, I can't get an F-score of 1.

I added this code before the "self.bench" call:

pred_lanes = gt_lanes
pred_category = gt_category

However, I get the following result for the night case.

===> Evaluation on validation set:

laneline F-measure 0.84169872

laneline Recall 0.99912995

laneline Precision 0.72712661

laneline Category Accuracy 0.99956497

laneline x error (close) 0.0017959209 m

laneline x error (far) 0.003160552 m

laneline z error (close) 0.00084101858 m

laneline z error (far) 0.0027049253 m
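The reported F-measure is consistent with the harmonic mean of the reported precision and recall, so the shortfall from 1 comes from precision rather than recall. The check below only reproduces that arithmetic from the numbers above. It says nothing about why precision is low.

```python
def f_measure(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2.0 * precision * recall / (precision + recall)

# Precision and recall copied from the result listing above.
f = f_measure(0.72712661, 0.99912995)   # about 0.8417
```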

hi,

Thank you for releasing the new version of the labels. After extracting it, I noticed that many JSON files in the training set are missing their lane annotations:

For example:

segment-6142170920525844857_2080_000_2100_000_with_camera_labels/155010091827345700.json

segment-2224716024428969146_1420_000_1440_000_with_camera_labels/155803393634753600.json

segment-17782258508241656695_1354_000_1374_000_with_camera_labels/151547637346155800.json

Can you please advise?

Thanks

Thanks for your great work. I have some questions about the lane annotation, as follows:

(1) What does "attribute:0" mean? I found that most of the time it equals 0.

(2) How do I project 3D points in the xyz camera coordinate system to uv image coordinates? I tried [x,y,1]^T=K[X,Y,Z,1], where XYZ comes from the 3D points ["xyz"] in the JSON and K comes from ["intrinsic"], but the generated points seem wrong.

k1 = np.c_[np.array(json["intrinsic"]),np.zeros(3)]
t = np.array(XYZ.reshape(-1,1))
xyz = np.matmul(k1,t)
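Two things are commonly missing from an attempt like the one above: the perspective divide by depth, and a check of the axis convention. The sketch below is a generic pinhole projection, assuming the points are already in x-right, y-down, z-forward camera axes and `K` is a 3x3 intrinsic matrix. If the stored `xyz` uses a different axis order, a fixed axis permutation has to be applied first. Whether that applies here should be verified against the repository's evaluation code. `project_to_image` and the toy `K` are hypothetical.

```python
import numpy as np

def project_to_image(points_cam, K):
    """Project (N, 3) points in x-right, y-down, z-forward camera axes to pixels."""
    points_cam = np.asarray(points_cam, dtype=float)
    K = np.asarray(K, dtype=float)                 # 3x3 intrinsic matrix
    in_front = points_cam[:, 2] > 0                # keep points in front of the camera
    pts = points_cam[in_front]
    uvw = (K @ pts.T).T                            # rows are (u*z, v*z, z)
    return uvw[:, :2] / uvw[:, 2:3]                # perspective divide

# Toy intrinsics: focal length 1000 px, principal point (960, 640).
K = np.array([[1000.0, 0.0, 960.0],
              [0.0, 1000.0, 640.0],
              [0.0, 0.0, 1.0]])
uv = project_to_image([[1.0, 0.5, 10.0]], K)       # [[1060., 690.]]
```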

Hi, you don't provide 3D CIPO data in your database, right?

Only the 2D bounding box of CIPO in the image is provided, right?

If I want to get the 3D position of CIPO in your dataset, can I get it through Waymo?

I noticed this operation in the code:

gt_cam_height = cam_extrinsics[2, 3]
gt_cam_pitch = 0
cam_extrinsics[0:2, 3] = 0.0

I do not understand why "cam_extrinsics[0:2, 3] = 0.0" is needed. cam_extrinsics is used to transform points from the normal camera coordinate system to the LaneNet ego coordinate system. Setting the first two entries of the last column to 0 removes the x and y offset. Why is this done?
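Numerically, zeroing the first two translation entries places the origin of the target frame directly below the camera while keeping the camera height. The toy example below shows only that effect. Whether this is the authors' motivation is not stated here, and the matrix values are made up.

```python
import numpy as np

E = np.eye(4)
E[:3, 3] = [1.5, 0.1, 2.0]         # toy camera position: x, y offsets and height

E_ground = E.copy()
E_ground[0:2, 3] = 0.0             # drop the horizontal offset, keep the height

cam_origin = np.array([0.0, 0.0, 0.0, 1.0])
before = (E @ cam_origin)[:3]          # [1.5, 0.1, 2.0]
after = (E_ground @ cam_origin)[:3]    # [0.0, 0.0, 2.0]
```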

Please share the link to be able to download from google drive

As you mentioned that

"Then for each frame, the point clouds are first filtered with the original 3D object bounding boxes and then projected back into the corresponding image. We further keep those points related to 2D lanes only with a certain threshold."

I wonder :

Hoping for your answer, thanks.

Hi guys, thanks for the amazing dataset!

However, my colleagues and I have encountered several issues with your evaluation script, which prevented us from getting 100% accuracy when testing GT against GT:

dist_th:https://github.com/OpenPerceptionX/OpenLane/blob/f74ecca299e032e100c0ca200a3299c1745de084/eval/LANE_evaluation/lane3d/eval_3D_lane.py#L159

https://github.com/OpenPerceptionX/OpenLane/blob/f74ecca299e032e100c0ca200a3299c1745de084/eval/LANE_evaluation/lane3d/eval_3D_lane.py#L179

https://github.com/OpenPerceptionX/OpenLane/blob/f74ecca299e032e100c0ca200a3299c1745de084/eval/LANE_evaluation/lane3d/eval_3D_lane.py#L190

So the x & z error counting will be off: the errors will be at least dist_th = 1.5 for invisible points. I'm guessing these distances should be ignored here.

dist_th = 1.5, then it won't pass the initial check here:

division by 0 later in:

Anyway, this problem should not appear, because the script filters lines to have at least 2 visible points. However, the x range filtering is inconsistent between:

https://github.com/OpenPerceptionX/OpenLane/blob/f74ecca299e032e100c0ca200a3299c1745de084/eval/LANE_evaluation/lane3d/eval_3D_lane.py#L104

and

Also, there is no filtering after interpolation: if a line has 2 visible points before interpolation but not afterwards, it will also produce entirely invisible lines. For example, if a line has y coordinates [23.5 23.8] and is valid, but y_samples are only integers, it won't be valid after (ex)interpolation.
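The [23.5 23.8] example can be checked directly. The sketch assumes integer `y_samples` over [3, 103], as the issue does. The actual sampling in the evaluation script may differ.

```python
import numpy as np

y_samples = np.arange(3, 104, dtype=float)      # integer samples, as assumed in the issue
lane_y = np.array([23.5, 23.8])                 # two visible points on the original lane

# A sample counts as inside the lane only if it lies within the lane's y extent.
inside = (y_samples >= lane_y.min()) & (y_samples <= lane_y.max())
num_valid_after_resampling = int(inside.sum())  # 0
```

With no sample inside the lane's extent, the resampled lane has no visible points even though the original had two.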

Btw, by testing GT against GT, I can only get around 87% F1. I saved GT after the coordinate transform and filtering. If you could clarify the ignore mechanism, I can make a pull request to fix this for you. There are two popular ignore mechanisms in metrics, I think the first one sounds better and aligns more with your original metric (only suggestions here):

I think these issues could have been inherited from the synthetic benchmark. And they could non-trivially influence your already evaluated results.

https://github.com/OpenPerceptionX/PersFormer_3DLane

47.8 and 52.9. what's the difference between them?

Hello,

I just installed the Caravel user project on a remote server (CentOS 7). make setup was successful, but make user_proj_example gives me:

flow.tcl: command not found

The OpenLane and PDK roots are correctly exported.

Could you please tell me what is causing this error?

Thank you.

% pwd

/local/data0/Students/AS/CARAVEL/caravel_user_project

% make openlane

cd openlane && make openlane

make[1]: Entering directory '/local/data0/Students/AS/CARAVEL/caravel_user_project/openlane'

if [ -d "/local/data0/Students/AS/CARAVEL/caravel_user_project/dependencies/openlane_src" ]; then

echo "Deleting exisiting /local/data0/Students/AS/CARAVEL/caravel_user_project/dependencies/openlane_src" &&

rm -rf /local/data0/Students/AS/CARAVEL/caravel_user_project/dependencies/openlane_src && sleep 2;

fi

Deleting exisiting /local/data0/Students/AS/CARAVEL/caravel_user_project/dependencies/openlane_src

git clone https://github.com/The-OpenROAD-Project/OpenLane --branch=2022.07.02_01.38.08 --depth=1 /local/data0/Students/AS/CARAVEL/caravel_user_project/dependencies/openlane_src &&

cd /local/data0/Students/AS/CARAVEL/caravel_user_project/dependencies/openlane_src &&

export OPENLANE_IMAGE_NAME=efabless/openlane:2022.07.02_01.38.08 &&

export IMAGE_NAME=efabless/openlane:2022.07.02_01.38.08 &&

make pull-openlane

Cloning into '/local/data0/Students/AS/CARAVEL/caravel_user_project/dependencies/openlane_src'...

remote: Enumerating objects: 508, done.

remote: Counting objects: 100% (508/508), done.

remote: Compressing objects: 100% (428/428), done.

remote: Total 508 (delta 101), reused 254 (delta 35), pack-reused 0

Receiving objects: 100% (508/508), 2.77 MiB | 13.49 MiB/s, done.

Resolving deltas: 100% (101/101), done.

Note: switching to 'f9b5781f5ef0bbdf39ab1c2bbd78be8db11b27f2'.

You are in 'detached HEAD' state. You can look around, make experimental

changes and commit them, and you can discard any commits you make in this

state without impacting any branches by switching back to a branch.

If you want to create a new branch to retain commits you create, you may

do so (now or later) by using -c with the switch command. Example:

git switch -c

Or undo this operation with:

git switch -

Turn off this advice by setting config variable advice.detachedHead to false

make[2]: Entering directory '/local/data0/Students/AS/CARAVEL/caravel_user_project/dependencies/openlane_src'

Makefile:23: warning: undefined variable 'DOCKER_SWAP'

Makefile:26: warning: undefined variable 'DOCKER_MEMORY'

Makefile:42: warning: undefined variable 'ROUTING_CORES'

Trying to pull registry.access.redhat.com/efabless/openlane:2022.07.02_01.38.08...

name unknown: Repo not found

Trying to pull registry.redhat.io/efabless/openlane:2022.07.02_01.38.08...

unable to retrieve auth token: invalid username/password: unauthorized: Please login to the Red Hat Registry using your Customer Portal credentials. Further instructions can be found here: https://access.redhat.com/RegistryAuthentication

Trying to pull docker.io/efabless/openlane:2022.07.02_01.38.08...

Getting image source signatures

Copying blob e23e0ddb585d [--------------------------------------] 0b / 0b

Copying blob d4d650a92807 [--------------------------------------] 0b / 0b

Copying blob 00da99f6da93 [--------------------------------------] 0b / 0b

Copying blob 6288ff22c326 [--------------------------------------] 0b / 0b

Copying blob 4273e1cebc47 [--------------------------------------] 0b / 0b

Copying blob 2d473b07cdd5 [--------------------------------------] 0b / 0b

Copying blob 0bb3fd49f294 [--------------------------------------] 0b / 0b

Copying blob e23e0ddb585d skipped: already exists

Copying blob d4d650a92807 skipped: already exists

Copying blob 00da99f6da93 skipped: already exists

Copying blob 6288ff22c326 skipped: already exists

Copying blob 4273e1cebc47 skipped: already exists

Copying blob 2d473b07cdd5 skipped: already exists

Copying blob 0bb3fd49f294 skipped: already exists

Copying blob 0046c99aea39 skipped: already exists

Copying blob 5c2567a679fb skipped: already exists

Copying blob a7cc087578da skipped: already exists

Copying blob 2fe7c73cb171 skipped: already exists

Copying blob 4a454693240e skipped: already exists

Copying blob bdfdcd774996 skipped: already exists

Copying blob b8f4e67850a8 skipped: already exists

Copying blob c8f71bfe3963 skipped: already exists

Copying blob 89f0b07d31a4 skipped: already exists

Copying blob a4337c6d6c3b skipped: already exists

Copying blob d6266388c01d skipped: already exists

Copying blob dbc522c54251 skipped: already exists

Copying blob 7d94296f04fd skipped: already exists

Copying blob 2c637aa86889 skipped: already exists

Copying blob 9000a623b0a2 skipped: already exists

Copying blob f4886a0ced97 skipped: already exists

Copying blob e3e05bf88f0f skipped: already exists

Copying blob ecd6b35221ba skipped: already exists

Copying blob de892deb34c8 skipped: already exists

Copying blob 75688db7a987 skipped: already exists

Copying blob bcd756ce1ebf skipped: already exists

Copying blob 72ee0d5243a6 skipped: already exists

Copying blob 4f4fb700ef54 skipped: already exists

Copying config 195c88fbb8 done

Writing manifest to image destination

Storing signatures

195c88fbb8ec91aa12e2182070c62744ab03d47ffb2fde61745e5bb8ad31f05a

make[2]: Leaving directory '/local/data0/Students/AS/CARAVEL/caravel_user_project/dependencies/openlane_src'

make[1]: Leaving directory '/local/data0/Students/AS/CARAVEL/caravel_user_project/openlane'

% make user_proj_example

make -C openlane user_proj_example

make[1]: Entering directory '/local/data0/Students/AS/CARAVEL/caravel_user_project/openlane'

mkdir -p ./user_proj_example/runs/22_09_01_13_15

rm -rf ./user_proj_example/runs/user_proj_example

ln -s $(realpath ./user_proj_example/runs/22_09_01_13_15) ./user_proj_example/runs/user_proj_example

docker run -it -v

efabless/openlane:2022.07.02_01.38.08 sh -c "flow.tcl -design

sh: flow.tcl: command not found

make[1]: *** [Makefile:72: user_proj_example] Error 127

make[1]: Leaving directory '/local/data0/Students/AS/CARAVEL/caravel_user_project/openlane'

make: *** [Makefile:73: user_proj_example] Error 2

#--------------------------------------------------------------------------------------------------------------

And the caravel/dependencies/ includes these:

% ls dependencies/*

dependencies/openlane_src:

AUTHORS.md docker Makefile run_designs.py

configuration docs README.md run_issue_regressions.py

conf.py env.py regression_results scripts

CONTRIBUTING.md flow.tcl requirements_dev.txt tests

dependencies Jenkinsfile requirements_lint.txt

designs LICENSE requirements.txt

dependencies/pdks:

sky130A sky130B volare

Hello, and thanks for this useful code.

I have a question regarding the matching score check.

For points that are invisible in both gt and pred, the matching distance is set to 0:

euclidean_dist[both_invisible_indices] = 0

and the cost for matching is set as:

cost_ = np.sum(euclidean_dist)

However, the matching score threshold is set to the number of all points multiplied by dist_th,

while the distance of invisible points is set to zero, which effectively gives shorter lanes a higher threshold:

match_results[i, 2] < self.dist_th * self.y_samples.shape[0]

What have I missed here?
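The effect described above can be reproduced with a simplified model of the three quoted lines. This sketch is not the evaluation script. It only assumes that invisible samples contribute 0 to the cost and that the threshold scales with the total number of samples. The sample count of 100 and the error values are made up.

```python
import numpy as np

dist_th = 1.5
num_samples = 100

def passes_threshold(num_visible, per_point_error):
    """Simplified model: invisible samples cost 0, visible ones cost per_point_error."""
    euclidean_dist = np.zeros(num_samples)
    euclidean_dist[:num_visible] = per_point_error
    cost = euclidean_dist.sum()
    return bool(cost < dist_th * num_samples)

short_lane = passes_threshold(num_visible=10, per_point_error=5.0)    # True: 50 < 150
long_lane = passes_threshold(num_visible=100, per_point_error=5.0)    # False: 500 >= 150
```

Under this model, a short lane whose every visible point is 5 m off still passes, while a full-length lane with the same per-point error does not. The real script may apply further checks that this sketch leaves out.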

Hi,

you might want to point to https://github.com/The-OpenROAD-Project/OpenLane in the README/docs and say that this is a different project.

There are two pictures selected from segment-9350921499281634194_2403_251_2423_251_with_camera_labels.

The first picture shows the raw image, while the second shows the GT lanes, whose colours represent their categories.

As you can see in the second picture, from left to right there are left-curbside, white-solid, white-dashed, yellow, white-solid, white-dashed, white-solid and right-curbside.

For the point range, you select [3, 103] as your y-axis range, while the original Waymo point cloud only reaches 75 meters. However, there are points beyond 75 m in your labels, and you also select 103 m (> 75 m) as your valid range. This does not seem to make sense, in my opinion. Instead, it introduces noise for far points. Although you adopt (3D) interpolation to generate labels, points should not be generated beyond the end points of each 3D lane.

To sum up, I wonder why you include points that are farther than the Waymo lidar point cloud range (i.e., 75 m) in your labels? (They are unlikely to be accurate.)
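For anyone who wants to restrict labels to the range discussed above, a simple mask on the forward coordinate is enough. The sketch assumes (N, 3) points with the forward direction on axis 1, matching the y-axis range mentioned in the issue. `keep_within_range` and the sample points are hypothetical.

```python
import numpy as np

def keep_within_range(points, max_forward=75.0, forward_axis=1):
    """Keep only points whose forward coordinate does not exceed max_forward."""
    points = np.asarray(points, dtype=float)
    return points[points[:, forward_axis] <= max_forward]

lane = np.array([[1.8, 10.0, 0.0],
                 [1.9, 74.0, 0.1],
                 [2.0, 90.0, 0.3]])
near = keep_within_range(lane)     # keeps the first two rows
```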

could you update the dataset link?

Hi, thanks for releasing v1.2 label files. I have one question about the relationship among "xyz", "visibility" and "uv".

In v1.1, for one single lane, sum(visibility) = len(xyz[visibility]) = len(uv) (pseudo python code). Therefore, each 3D point has its corresponding 2D point.

However, in v1.2, this relationship no longer exists. I found that sum(visibility) = len(xyz[visibility]) <= len(uv). If I want to find corresponding 3D and 2D points, should I use uv and reproject them to 3D coord, or use xyz and project them to 2D coord? Thanks!

Hi,

there is a "44" category in this label file lane3d_1000/training/segment-1926967104529174124_5214_780_5234_780_with_camera_labels/150914294030533900.json. Is it correct?

Thanks!

I accumulated the 3D lane GT using the poses provided by the original Waymo dataset. I found serious blur in the accumulated 3D submap of one segment (segment-9568394837328971633_466_365_486_365_with_camera_labels).

Let's look at the first small section of this submap. There are even some outliers, such as the red points.

For easy understanding, the corresponding images are as follows

I think there are two ways to improve the quality of map:

First, use only the closer lane points, for example those within 20 m.

Second, fit the 3D lanes again in the submap and project them to every frame.
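The first suggestion can be sketched as follows: keep only the points within a given horizontal distance of the vehicle, then move them into a common frame with the per-frame pose. The sketch assumes the lane points are in the vehicle frame and each pose is a 4x4 vehicle-to-global matrix. `accumulate_lanes` and the toy poses are hypothetical.

```python
import numpy as np

def accumulate_lanes(frames, max_range=20.0):
    """frames: iterable of (pose, points); pose is 4x4 vehicle-to-global, points is (N, 3)."""
    chunks = []
    for pose, points in frames:
        points = np.asarray(points, dtype=float)
        near = points[np.linalg.norm(points[:, :2], axis=1) <= max_range]
        homo = np.hstack([near, np.ones((near.shape[0], 1))])
        chunks.append((np.asarray(pose, dtype=float) @ homo.T).T[:, :3])
    return np.vstack(chunks) if chunks else np.empty((0, 3))

pose0 = np.eye(4)
pose1 = np.eye(4)
pose1[0, 3] = 5.0                  # vehicle moved 5 m along global x
submap = accumulate_lanes([
    (pose0, [[10.0, 1.8, 0.0], [40.0, 1.8, 0.0]]),   # the 40 m point is dropped
    (pose1, [[5.0, 1.8, 0.0]]),
])
```

In this toy case both surviving points land on the same global position, which is what a consistent submap should look like.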

Thanks for the awesome work!

May I know how to download OpenLane v1.2? It seems Google Drive only has the original version of the annotations. Also, do we need to download a new images.tar for OpenLane v1.2?

Thank you again!

Thanks for your great work. I have downloaded the dataset but found that some JSON files do not exist:

lane3d_1000/training/segment-1191788760630624072_3880_000_3900_000_with_camera_labels/155381187479907700.json

lane3d_1000/training/segment-12212767626682531382_2100_150_2120_150_with_camera_labels/150863260385562800.json

..........

I want to figure out whether my download is broken.
