This repo is an implementation of PointNet (https://arxiv.org/abs/1612.00593) in PyTorch. The model is defined in pointnet/model.py.

This code has been tested with PyTorch 1.0.
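The key idea in the PointNet paper linked above is to run the same small network on every point independently and then combine the per-point features with a symmetric function (max pooling). Because of this, the resulting global feature does not depend on the order of the points. Below is a minimal, framework-free sketch of that idea. The single linear + ReLU layer, the names `shared_mlp` and `global_feature`, and the weights are hypothetical illustrations, not code from pointnet/model.py.

```python
def shared_mlp(point, weights):
    """Apply one linear + ReLU layer to a single (x, y, z) point.

    `weights` is a hypothetical list of (w_x, w_y, w_z, bias) rows,
    one row per output feature; the same rows are used for every point.
    """
    x, y, z = point
    return [max(0.0, wx * x + wy * y + wz * z + b) for wx, wy, wz, b in weights]


def global_feature(points, weights):
    """Max-pool the per-point features into one order-independent vector."""
    per_point = [shared_mlp(p, weights) for p in points]
    # zip(*per_point) groups the values of each feature across all points.
    return [max(values) for values in zip(*per_point)]


weights = [(1.0, 0.0, 0.0, 0.0), (0.0, 1.0, -1.0, 0.5), (0.5, 0.5, 0.5, -0.2)]
cloud = [(0.1, 0.2, 0.3), (-0.4, 0.9, 0.0), (0.7, -0.1, 0.2)]

# Reordering the points leaves the global feature unchanged.
print(global_feature(cloud, weights) == global_feature(list(reversed(cloud)), weights))  # True
```

Other symmetric functions, such as sum or mean, would also be order-independent. The paper compares several options and uses max pooling.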

git clone https://github.com/fxia22/pointnet.pytorch

cd pointnet.pytorch

pip install -e .

Download the dataset and build the visualization tool:

cd scripts

bash build.sh # build the C++ code for visualization

bash download.sh # download the dataset

Training

cd utils

python train_classification.py --dataset <dataset path> --nepoch=<number of epochs> --dataset_type <modelnet40 | shapenet>

python train_segmentation.py --dataset <dataset path> --nepoch=<number of epochs>

Pass --feature_transform to enable the feature transform.
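In the PointNet paper, the feature transform is a small network that predicts a k x k alignment matrix A, which is applied to the per-point features. Training then adds the regularization term ||I - A A^T||_F^2, which pushes A toward an orthogonal matrix. The sketch below computes that term in pure Python. The names `orthogonality_loss`, `matmul`, and `transpose` are hypothetical. The regularizer in this repo may differ in detail, for example in batching, scaling, or whether the norm is squared.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(a):
    """Return the transpose of a matrix given as a list of rows."""
    return [list(row) for row in zip(*a)]


def orthogonality_loss(a):
    """Squared Frobenius norm ||I - A A^T||_F^2 for a square k x k matrix A."""
    k = len(a)
    aat = matmul(a, transpose(a))
    return sum(((1.0 if i == j else 0.0) - aat[i][j]) ** 2
               for i in range(k) for j in range(k))


identity = [[1.0, 0.0], [0.0, 1.0]]
rotation = [[0.0, -1.0], [1.0, 0.0]]  # orthogonal, so no penalty
scaled = [[2.0, 0.0], [0.0, 1.0]]     # stretches one axis, so it is penalized

print(orthogonality_loss(identity))  # 0.0
print(orthogonality_loss(rotation))  # 0.0
print(orthogonality_loss(scaled))    # 9.0
```

Orthogonal matrices such as rotations cost nothing. Scaling one axis by 2 gives A A^T = diag(4, 1), so the penalty is (1 - 4)^2 = 9.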

On ModelNet40:

| | Overall Acc |
|---|---|
| Original implementation | 89.2 |
| This implementation (w/o feature transform) | 86.4 |
| This implementation (w/ feature transform) | 87.0 |

On a subset of ShapeNet:

| | Overall Acc |
|---|---|
| Original implementation | N/A |
| This implementation (w/o feature transform) | 98.1 |
| This implementation (w/ feature transform) | 97.7 |
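Overall accuracy, as reported in the tables above, is the fraction of test shapes assigned the correct class. The PointNet paper also reports average per-class accuracy, which weights every class equally, so the two metrics can differ on imbalanced test sets. The sketch below contrasts them. The function names and toy labels are hypothetical.

```python
from collections import defaultdict


def overall_accuracy(predicted, actual):
    """Fraction of all test shapes whose predicted label is correct."""
    if not actual or len(predicted) != len(actual):
        raise ValueError("expected two non-empty label lists of equal length")
    correct = sum(p == a for p, a in zip(predicted, actual))
    return correct / len(actual)


def mean_class_accuracy(predicted, actual):
    """Accuracy computed per ground-truth class, then averaged over classes."""
    if not actual or len(predicted) != len(actual):
        raise ValueError("expected two non-empty label lists of equal length")
    hits, totals = defaultdict(int), defaultdict(int)
    for p, a in zip(predicted, actual):
        totals[a] += 1
        hits[a] += int(p == a)
    return sum(hits[c] / totals[c] for c in totals) / len(totals)


actual = ["chair", "chair", "chair", "lamp"]
predicted = ["chair", "chair", "chair", "chair"]
print(overall_accuracy(predicted, actual))     # 0.75
print(mean_class_accuracy(predicted, actual))  # 0.5
```

Multiplying by 100 gives percentages on the same scale as the tables.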

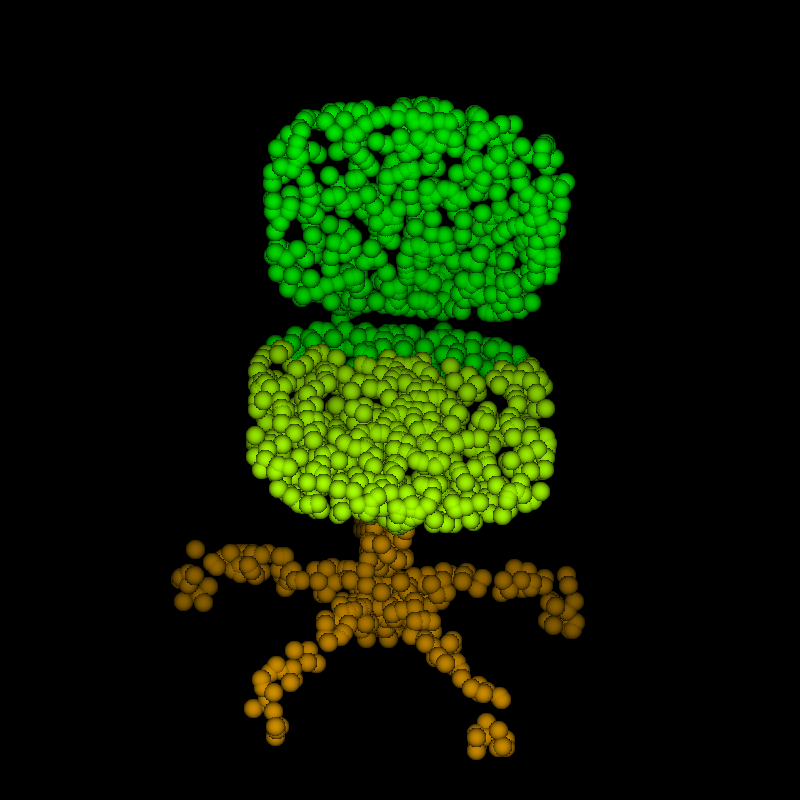

Segmentation on a subset of ShapeNet:

| Class (mIoU) | Airplane | Bag | Cap | Car | Chair | Earphone | Guitar | Knife | Lamp | Laptop | Motorbike | Mug | Pistol | Rocket | Skateboard | Table |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Original implementation | 83.4 | 78.7 | 82.5 | 74.9 | 89.6 | 73.0 | 91.5 | 85.9 | 80.8 | 95.3 | 65.2 | 93.0 | 81.2 | 57.9 | 72.8 | 80.6 |
| This implementation (w/o feature transform) | 73.5 | 71.3 | 64.3 | 61.1 | 87.2 | 69.5 | 86.1 | 81.6 | 77.4 | 92.7 | 41.3 | 86.5 | 78.2 | 41.2 | 61.0 | 81.1 |
| This implementation (w/ feature transform) | | | | | 87.6 | | | | | | | | | | | 81.0 |
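The PointNet paper defines per-category part-segmentation mIoU as follows:

1. For each shape, compute the IoU of every part type in its category. A part that is absent from both the prediction and the ground truth counts as IoU 1.
2. Average those part IoUs to get the shape's mIoU.
3. Average the shape mIoUs over all shapes of the category.

The sketch below implements that protocol. The names `shape_miou` and `category_miou` and the toy labels are hypothetical, and this README does not say whether the scripts here compute the metric exactly this way.

```python
def shape_miou(predicted, actual, part_ids):
    """Mean IoU over the part types of one shape's category.

    `predicted` and `actual` hold one part label per point; `part_ids`
    lists every part type of the shape's category.
    """
    ious = []
    for part in part_ids:
        pred = {i for i, label in enumerate(predicted) if label == part}
        gt = {i for i, label in enumerate(actual) if label == part}
        union = pred | gt
        # A part missing from both prediction and ground truth counts as IoU 1.
        ious.append(1.0 if not union else len(pred & gt) / len(union))
    return sum(ious) / len(ious)


def category_miou(shapes, part_ids):
    """Average the per-shape mIoU over all (predicted, actual) shapes of a category."""
    return sum(shape_miou(p, a, part_ids) for p, a in shapes) / len(shapes)


part_ids = [0, 1, 2]  # hypothetical part types, e.g. seat / back / legs
shapes = [
    ([0, 0, 1, 1], [0, 0, 1, 1]),  # perfect prediction; part 2 absent -> IoU 1
    ([0, 1, 1, 1], [0, 0, 1, 1]),  # part IoUs 1/2, 2/3, 1
]
print(round(category_miou(shapes, part_ids), 4))  # 0.8611
```

Multiplying by 100 gives values on the same scale as the table.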

Note that this implementation trains each class separately, so classes with less training data perform worse than in the reference implementation.