dora-rs / dora

Low latency, composable, and distributed dataflow for AI and robotic applications

Home Page: https://dora.carsmos.ai

License: Apache License 2.0


Environment: Ubuntu 22.04

Following the README and using the Rust template to build the Rust example (the default), I get the following error (why is there an iceoryx dependency?):

(base) demo@:~/src/dora-rs/releases/0.1.0/rust_proj$ cargo build -p node_1

warning: skipping duplicate package `rust-dataflow-example-sink` found at `/home/demo/.cargo/git/checkouts/dora-865db9b4eb79312d/c9f4a1a/examples/rust-dataflow/sink`

Compiling iceoryx-sys v0.1.0 (https://github.com/eclipse-iceoryx/iceoryx-rs.git#68fbd034)

error: failed to run custom build command for `iceoryx-sys v0.1.0 (https://github.com/eclipse-iceoryx/iceoryx-rs.git#68fbd034)`

Caused by:

process didn't exit successfully: `/home/demo/src/dora-rs/releases/0.1.0/rust_proj/target/debug/build/iceoryx-sys-6b251d9e411e136f/build-script-build` (exit status: 1)

--- stdout

Output { status: ExitStatus(unix_wait_status(0)), stdout: "", stderr: "HEAD is now at f756b7c99 Merge pull request #1327 from ApexAI/iox-#1323-set-version-to-2.0.2\n" }

--- stderr

CMake Error: The current CMakeCache.txt directory /home/demo/src/dora-rs/releases/0.1.0/rust_proj/target/debug/build/iceoryx-sys-ac30c768c6b445f0/out/iceoryx-build/iceoryx_hoofs/CMakeCache.txt is different than the directory /home/demo/src/dora-rs/releases/0.1.0/c_proj/target/debug/build/iceoryx-sys-ac30c768c6b445f0/out/iceoryx-build/iceoryx_hoofs where CMakeCache.txt was created. This may result in binaries being created in the wrong place. If you are not sure, reedit the CMakeCache.txt

CMake Error: The source "/home/demo/src/dora-rs/releases/0.1.0/rust_proj/target/debug/build/iceoryx-sys-ac30c768c6b445f0/out/iceoryx-git/iceoryx_hoofs/CMakeLists.txt" does not match the source "/home/demo/src/dora-rs/releases/0.1.0/c_proj/target/debug/build/iceoryx-sys-ac30c768c6b445f0/out/iceoryx-git/iceoryx_hoofs/CMakeLists.txt" used to generate cache. Re-run cmake with a different source directory.

Error: Custom { kind: Other, error: "Could not run cmake for 'iceoryx_hoofs'!" }

I have cleaned the .cargo/git cache with the `cargo cache -a` command, but I don't know why the build still pulls in the iceoryx and examples/rust-dataflow/sink dependencies.

This should considerably improve performance for large messages, compared to zenoh.

iceoryx as an alternative to zenoh

Describe the bug

Could not validate yaml: Dataflow could not be validated.: no node exists at ../../target/debug/rust-dataflow-url-example-sink: No such file or directory (os error 2)

(dora3.7) jarvis@jia:~/coding/dora_home/dora/binaries/cli/src$ cargo run start ../../../examples/rust-dataflow-url/dataflow.yml --attach --hot-reload

Compiling dora-operator-api-c v0.2.3-rc6 (/home/jarvis/coding/dora_home/dora/apis/c/operator)

Compiling dora-cli v0.2.3-rc6 (/home/jarvis/coding/dora_home/dora/binaries/cli)

Finished dev [unoptimized + debuginfo] target(s) in 3.97s

Running `/home/jarvis/coding/dora_home/dora/target/debug/dora-cli start ../../../examples/rust-dataflow-url/dataflow.yml --attach --hot-reload`

Could not validate yaml: Dataflow could not be validated.: no node exists at `../../target/debug/rust-dataflow-url-example-sink`: No such file or directory (os error 2)

(dora3.7) jarvis@jia:~/coding/dora_home/dora/binaries/cli/src$

To Reproduce

Steps to reproduce the behavior:

dora up

dora start examples/rust-dataflow-url/dataflow.yml --attach --hot-reload

Environments (please complete the following information):

Is your feature request related to a problem? Please describe.

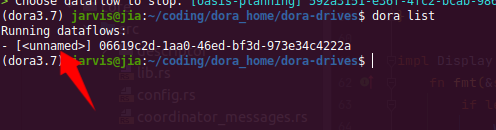

A dataflow without a specified name is displayed as `<unnamed>`, as follows:

```rust
impl Display for DataflowId {
    fn fmt(&self, f: &mut std::fmt::Formatter<'_>) -> std::fmt::Result {
        if let Some(name) = &self.name {
            write!(f, "[{name}] {}", self.uuid)
        } else {
            write!(f, "[<unnamed>] {}", self.uuid)
        }
    }
}
```

Describe the solution you'd like

Would it be possible to automatically generate a random, unique name for a dataflow that has no name?
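A minimal sketch of one way this could work, assuming a hypothetical pair of word lists and deriving the name from the dataflow's UUID (simplified here to a `u128`, so no `uuid` crate is needed). With short word lists the generated names are not guaranteed to be unique, which is why the UUID is still printed next to them. This is an illustration, not dora's actual implementation.

```rust
use std::fmt;

// Hypothetical word lists; a real implementation would use much longer ones.
const ADJECTIVES: &[&str] = &["nappy", "brave", "calm", "eager", "quiet"];
const NOUNS: &[&str] = &["back", "river", "falcon", "meadow", "stone"];

/// Simplified stand-in for dora's `DataflowId` (UUID stored as a `u128`).
struct DataflowId {
    uuid: u128,
    name: Option<String>,
}

/// Derives an "adjective-noun" name from the UUID: the UUID modulo the list
/// length picks the adjective, and its upper 64 bits pick the noun.
fn generate_name(uuid: u128) -> String {
    let adjective = ADJECTIVES[(uuid % ADJECTIVES.len() as u128) as usize];
    let noun = NOUNS[((uuid >> 64) % NOUNS.len() as u128) as usize];
    format!("{adjective}-{noun}")
}

impl DataflowId {
    /// Keeps the user-given name, or generates one when none was specified.
    fn new(uuid: u128, name: Option<String>) -> Self {
        let name = name.unwrap_or_else(|| generate_name(uuid));
        Self { uuid, name: Some(name) }
    }
}

impl fmt::Display for DataflowId {
    fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {
        match &self.name {
            Some(name) => write!(f, "[{name}] {:032x}", self.uuid),
            None => write!(f, "[<unnamed>] {:032x}", self.uuid),
        }
    }
}

fn main() {
    let unnamed = DataflowId::new(0, None);
    let named = DataflowId::new(1, Some("dpt_midas".to_string()));
    println!("{unnamed}");
    println!("{named}");
}
```

Deriving the name from the UUID (rather than drawing fresh random numbers) keeps the name stable for the lifetime of the dataflow without storing extra state.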

Requested by @haixuanTao

This problem came up while porting the NDT algorithm from the ROS2 ecosystem to the DORA framework.

In ROS2, the ndt_scan_matcher package uses a multithreading mechanism. As its code shows, the two callbacks (callbackMapPoints and callbackSensorPoints) and the serviceNDTAlign service, which directly set up and run the NDT algorithm, share one mutually exclusive callback group, so they never run concurrently. callbackInitialPose is in a separate callback group and can therefore run in parallel on another thread of the multi-threaded executor. The relevant code is excerpted below:

```cpp
// ndt_scan_matcher_core.cpp
NDTScanMatcher::NDTScanMatcher()
{
  //...
  rclcpp::CallbackGroup::SharedPtr initial_pose_callback_group;
  initial_pose_callback_group =
    this->create_callback_group(rclcpp::CallbackGroupType::MutuallyExclusive);

  rclcpp::CallbackGroup::SharedPtr main_callback_group;
  main_callback_group = this->create_callback_group(rclcpp::CallbackGroupType::MutuallyExclusive);

  auto initial_pose_sub_opt = rclcpp::SubscriptionOptions();
  initial_pose_sub_opt.callback_group = initial_pose_callback_group;

  auto main_sub_opt = rclcpp::SubscriptionOptions();
  main_sub_opt.callback_group = main_callback_group;

  initial_pose_sub_ = this->create_subscription<geometry_msgs::msg::PoseWithCovarianceStamped>(
    "ekf_pose_with_covariance", 100,
    std::bind(&NDTScanMatcher::callbackInitialPose, this, std::placeholders::_1),
    initial_pose_sub_opt);

  map_points_sub_ = this->create_subscription<sensor_msgs::msg::PointCloud2>(
    "pointcloud_map", rclcpp::QoS{1}.transient_local(),
    std::bind(&NDTScanMatcher::callbackMapPoints, this, std::placeholders::_1), main_sub_opt);

  sensor_points_sub_ = this->create_subscription<sensor_msgs::msg::PointCloud2>(
    "points_raw", rclcpp::SensorDataQoS().keep_last(points_queue_size),
    std::bind(&NDTScanMatcher::callbackSensorPoints, this, std::placeholders::_1), main_sub_opt);

  service_ = this->create_service<tier4_localization_msgs::srv::PoseWithCovarianceStamped>(
    "ndt_align_srv",
    std::bind(&NDTScanMatcher::serviceNDTAlign, this, std::placeholders::_1, std::placeholders::_2),
    rclcpp::ServicesQoS().get_rmw_qos_profile(), main_callback_group);
}
```

```cpp
// ndt_scan_matcher_node.cpp
int main(int argc, char ** argv)
{
  rclcpp::init(argc, argv);
  auto ndt_scan_matcher = std::make_shared<NDTScanMatcher>();
  rclcpp::executors::MultiThreadedExecutor exec;
  exec.add_node(ndt_scan_matcher);
  exec.spin();
  rclcpp::shutdown();
  return 0;
}
```

During the migration of the NDT algorithm to DORA, the on_input function is temporarily implemented as follows:

```cpp
// ndt_scan_matcher.cpp
OnInputResult on_input(NDTScanMatcher &op, rust::Str id, rust::Slice<const uint8_t> data, OutputSender &output_sender)
{
  if (id == "initial_pose") {
    // Corresponding to the original callbackInitialPose callback function
  } else if (id == "tf_baselink2lidar") {
    // ...
  }

  if (id == "map_points") {
    // Corresponding to the original callbackMapPoints callback function
  } else if (id == "align_request_pose") {
    // Corresponding to the original serviceNDTAlign service
  } else if (id == "downsampled_pointcloud") {
    // Corresponding to the original callbackSensorPoints callback function
  }
}
```

How can a multithreading mechanism be implemented in a DORA operator?
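One common pattern, sketched here in Rust with only the standard library, is to let the operator own a worker thread for the long-running work and to exchange requests and results through channels, so that the input handler never blocks. `NdtOperator`, `AlignRequest`, `slow_align`, and this `on_input` signature are hypothetical stand-ins, not dora's operator API; in the C++ operator the same idea would use `std::thread` plus a thread-safe queue.

```rust
use std::sync::mpsc::{self, Receiver, Sender};
use std::thread::{self, JoinHandle};
use std::time::Duration;

/// Hypothetical request/response messages for the alignment "service".
struct AlignRequest { pose: [f64; 3] }
struct AlignResponse { pose: [f64; 3] }

/// Placeholder for the slow particle filter + NDT alignment (hypothetical).
fn slow_align(pose: [f64; 3]) -> [f64; 3] {
    thread::sleep(Duration::from_millis(50));
    [pose[0] + 0.1, pose[1] + 0.1, pose[2]]
}

struct NdtOperator {
    requests: Option<Sender<AlignRequest>>,
    responses: Receiver<AlignResponse>,
    worker: Option<JoinHandle<()>>,
}

impl NdtOperator {
    fn new() -> Self {
        let (req_tx, req_rx) = mpsc::channel::<AlignRequest>();
        let (resp_tx, resp_rx) = mpsc::channel::<AlignResponse>();
        let worker = thread::spawn(move || {
            // The loop ends once the request sender is dropped.
            for req in req_rx {
                let pose = slow_align(req.pose);
                if resp_tx.send(AlignResponse { pose }).is_err() {
                    break; // the operator side is gone
                }
            }
        });
        Self { requests: Some(req_tx), responses: resp_rx, worker: Some(worker) }
    }

    /// Handles one input without waiting for the alignment, and returns any
    /// alignment results that finished in the meantime.
    fn on_input(&mut self, id: &str, pose: [f64; 3]) -> Vec<AlignResponse> {
        match id {
            "align_request_pose" => {
                if let Some(tx) = &self.requests {
                    let _ = tx.send(AlignRequest { pose });
                }
            }
            "initial_pose" | "downsampled_pointcloud" => {
                // Fast, time-sensitive processing would go here.
            }
            _ => {}
        }
        self.responses.try_iter().collect()
    }
}

impl Drop for NdtOperator {
    fn drop(&mut self) {
        // Close the request channel so the worker loop exits, then join it.
        self.requests.take();
        if let Some(worker) = self.worker.take() {
            let _ = worker.join();
        }
    }
}

fn main() {
    let mut op = NdtOperator::new();
    // Returns immediately even though the alignment takes ~50 ms.
    let ready = op.on_input("align_request_pose", [1.0, 2.0, 0.0]);
    println!("results right after the request: {}", ready.len());
    op.on_input("initial_pose", [0.0; 3]); // still handled promptly
    thread::sleep(Duration::from_millis(200));
    for res in op.on_input("downsampled_pointcloud", [0.0; 3]) {
        println!("aligned pose: {:?}", res.pose);
    }
}
```

A limitation of this design is that finished results are only picked up when the next input arrives, so a steady input (for example a timer) is needed to flush them promptly. Any state shared between the fast path and the worker would also need synchronization, which the mutually exclusive callback groups handle for you in ROS2.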

Describe the bug

`dora stop <uuid>` is unable to stop a running dataflow. The `dora destroy` command works, but it reports an error, and that error is not handled correctly.

To Reproduce

Steps to reproduce the behavior:

dora up

dora start graphs/tutorials/webcam_yolov5.yaml --attach

Environments (please complete the following information):

Describe the bug

Compiling dora emits a warning that the ntapi dependency contains code that will be rejected by a future version of Rust.

(py310) D:\coding\rusthome\github.com\meua\dora>cargo build --all

Compiling dora-operator-api-c v0.2.2 (D:\coding\rusthome\github.com\meua\dora\apis\c\operator)

Compiling dora-cli v0.2.2 (D:\coding\rusthome\github.com\meua\dora\binaries\cli)

Finished dev [unoptimized + debuginfo] target(s) in 28.36s

warning: the following packages contain code that will be rejected by a future version of Rust: ntapi v0.3.7

note: to see what the problems were, use the option `--future-incompat-report`, or run `cargo report future-incompatibilities --id 4`

To Reproduce

Steps to reproduce the behavior:

cargo build --all

Expected behavior

The dependencies should be updated so that no such warnings are reported.

Environments (please complete the following information):

Describe the bug

The variable `m` has no value (it is an empty string), so the error reports an empty machine name.

(dora3.7) jarvis@jia:~/coding/dora_home/dora/binaries/cli/src$ RUST_LOG=info cargo run start /home/jarvis/coding/rust_home/github.com/meua/dora-drives/graphs/tutorials/webcam_midas_frame.yaml --attach --hot-reload

Compiling dora-operator-api-c v0.2.3-rc6 (/home/jarvis/coding/dora_home/dora/apis/c/operator)

Compiling dora-cli v0.2.3-rc6 (/home/jarvis/coding/dora_home/dora/binaries/cli)

Finished dev [unoptimized + debuginfo] target(s) in 4.08s

Running `/home/jarvis/coding/dora_home/dora/target/debug/dora-cli start /home/jarvis/coding/rust_home/github.com/meua/dora-drives/graphs/tutorials/webcam_midas_frame.yaml --attach --hot-reload`

no daemon listen port for machine ``

(dora3.7) jarvis@jia:~/coding/dora_home/dora/binaries/cli/src$

To Reproduce

Steps to reproduce the behavior:

cd dora/binaries/coordinator/src && cargo run

RUST_LOG=info cargo run start /home/jarvis/coding/rust_home/github.com/meua/dora-drives/graphs/tutorials/webcam_midas_frame.yaml --attach --hot-reload

Expected behavior

A clear and concise description of what you expected to happen.

Environments (please complete the following information):

Is your feature request related to a problem? Please describe.

After I run `dora up`, an error occurs once a dataflow is started, and I cannot tell whether dora itself is running abnormally. I would like to check the status of the dora services through dora-cli.

Describe the solution you'd like

A dora-cli command to view the status of the dora services, for example `dora status`.

This problem came up while porting the NDT algorithm from the ROS2 ecosystem to the DORA framework.

While debugging, I found that if one function of a DORA operator takes a long time to execute, other input messages cannot be processed in time.

For example, take the serviceNDTAlign() function in the ndt_scan_matcher operator (see the settings in dataflow.yml). Because particle filtering is needed to find a more accurate initial position, handling the align_request_pose input takes a long time. (In ROS2 this takes about 3~5 s, but in DORA it takes tens of seconds; I am still looking into why and will discuss it separately.) Meanwhile, other inputs such as downsampled_pointcloud and initial_pose are not processed in time and accumulate in memory. These messages are time sensitive: after a certain period they become meaningless, so there is no need to cache all of them. In ROS2, the maximum number of cached messages can be set through QoS, as follows:

```cpp
initial_pose_sub_ = this->create_subscription<geometry_msgs::msg::PoseWithCovarianceStamped>(
  "ekf_pose_with_covariance", 100,
  std::bind(&NDTScanMatcher::callbackInitialPose, this, std::placeholders::_1),
  initial_pose_sub_opt);

sensor_points_sub_ = this->create_subscription<sensor_msgs::msg::PointCloud2>(
  "points_raw", rclcpp::SensorDataQoS().keep_last(points_queue_size),
  std::bind(&NDTScanMatcher::callbackSensorPoints, this, std::placeholders::_1), main_sub_opt);
```

Is there a similar mechanism in Dora, or do we need one as well?
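The ROS2 behaviour referred to here amounts to a per-input bounded queue that drops the oldest message when it is full. The `KeepLastQueue` type below is a hypothetical Rust illustration of what such a mechanism might look like on the receiving side; it is not an existing dora API.

```rust
use std::collections::VecDeque;

/// Bounded queue with "keep last N" semantics, similar in spirit to the ROS2
/// `keep_last(depth)` history setting: when full, the oldest message is dropped.
struct KeepLastQueue<T> {
    depth: usize,
    buf: VecDeque<T>,
    dropped: usize,
}

impl<T> KeepLastQueue<T> {
    fn new(depth: usize) -> Self {
        assert!(depth > 0, "depth must be at least 1");
        Self { depth, buf: VecDeque::with_capacity(depth), dropped: 0 }
    }

    /// Enqueues a message, evicting the oldest one if the queue is full.
    fn push(&mut self, msg: T) {
        if self.buf.len() == self.depth {
            self.buf.pop_front();
            self.dropped += 1;
        }
        self.buf.push_back(msg);
    }

    /// Takes the oldest message that is still queued.
    fn pop(&mut self) -> Option<T> {
        self.buf.pop_front()
    }

    fn len(&self) -> usize {
        self.buf.len()
    }
}

fn main() {
    // While a slow align request is being handled, 5 point clouds arrive,
    // but only the 2 most recent ones are kept.
    let mut clouds = KeepLastQueue::new(2);
    for frame in 1..=5 {
        clouds.push(frame);
    }
    println!("kept {} frames, dropped {}", clouds.len(), clouds.dropped);
    while let Some(frame) = clouds.pop() {
        println!("processing frame {frame}");
    }
}
```

Dropping the oldest entry (rather than rejecting the newest) matches the time-sensitive nature of point clouds and poses: when the operator catches up, it sees the freshest data.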

Describe the bug

(dora3.7) jarvis@jia:~/coding/dora_home/dora-drives$ proxychains dora start graphs/tutorials/webcam_single_dpt_frame.yaml --attach --hot-reload --name dpt_midas

ProxyChains-3.1 (http://proxychains.sf.net)

|S-chain|-<>-127.0.0.1:1080-<><>-127.0.0.1:6012-<><>-OK

Error: failed to send start dataflow message

Caused by:

failed to fill whole buffer

Location:

binaries/cli/src/main.rs:214:10

(dora3.7) jarvis@jia:~/coding/dora_home/dora-drives$

To Reproduce

Steps to reproduce the behavior:

dora up

proxychains dora start graphs/tutorials/webcam_single_dpt_frame.yaml --attach --hot-reload --name dpt_midas

Expected behavior

dora should work when run through a proxy, so that algorithm models hosted abroad can be downloaded quickly.

Environments (please complete the following information):

Linux jia 5.15.0-70-generic #77~20.04.1-Ubuntu SMP Wed Apr 5 09:38:34 UTC 2023 x86_64 x86_64 x86_64 GNU/Linux

Describe the bug

Occasionally, the dora command becomes unresponsive and hangs.

To Reproduce

Steps to reproduce the behavior:

dora up, dora start dataflow.yaml, dora stop, dora destroy

Environments (please complete the following information):

Describe the bug

`sh -c 'conda activate python_virtual_env'` does not work, although all conda operations work normally in an Ubuntu terminal.

The custom node for the algorithm is configured as shown under Additional context below.

jarvis@jia:~/coding/dora_home/dora/binaries/cli/src$ dora-cli logs 6382d842-7471-44d7-8b76-2e5ae8b30d45 midas_op

│ Logs from midas_op.

1 │ sh: 1: conda: not found

jarvis@jia:~/coding/dora_home/dora/binaries/cli/src$

To Reproduce

Steps to reproduce the behavior:

dora up

RUST_LOG=info RUST_BACKTRACE=full cargo run start /path/to/your_rust_home/github.com/meua/dora-drives/graphs/tutorials/webcam_midas_frame_custom_node.yaml --attach --hot-reload

Expected behavior

The Python environments of different algorithms should be isolated from each other.

Environments (please complete the following information):

Additional context

```yaml
- id: midas_op
  custom:
    source: shell
    args: conda run -n midas-py310 python ../../operators/midas_op.py
    # args: source /home/jarvis/anaconda3/etc/profile.d/conda.sh && conda activate midas-py310 && python ../../operators/midas_op.py
    # args: conda env create -f ../../dependencies/MiDaS/environment.yaml && conda activate midas-py310 && python ../../operators/midas_op.py
    # args: conda activate midas-py310 && python ../../operators/midas_op.py
    # args: conda info -e && python ../../operators/midas_op.py
    outputs:
      - depth_frame
    inputs:
      image: webcam/image
```

···

When I use Ctrl+C or `kill` to exit dora, I run into unexpected problems, for example:

the ego vehicle fails to be created, etc.

Tracking issue for a Pylot MVP on the Dora Python API. Pylot will be used to validate and benchmark the design choices made by DORA.

These implementations are currently committed in #18.

Describe the bug

Steps to reproduce the behavior:

run pkg_config fail: "`\"pkg-config\" \"--libs\" \"--cflags\" \"openssl\"` did not exit successfully: exit status: 1\nerror: could not find system library 'openssl' required by the 'openssl-sys' crate\n\n--- stderr\nPackage openssl was not found in the pkg-config search path.\nPerhaps you should add the directory containing `openssl.pc'\nto the PKG_CONFIG_PATH environment variable\nNo package 'openssl' found\n"

--- stderr

thread 'main' panicked at '

Could not find directory of OpenSSL installation, and this `-sys` crate cannot

proceed without this knowledge. If OpenSSL is installed and this crate had

trouble finding it, you can set the `OPENSSL_DIR` environment variable for the

compilation process.

Make sure you also have the development packages of openssl installed.

For example, `libssl-dev` on Ubuntu or `openssl-devel` on Fedora.

If you're in a situation where you think the directory *should* be found

automatically, please open a bug at https://github.com/sfackler/rust-openssl

and include information about your system as well as this message.

$HOST = x86_64-unknown-linux-gnu

$TARGET = x86_64-unknown-linux-gnu

openssl-sys = 0.9.76

', /home/navy/.cargo/registry/src/github.com-1ecc6299db9ec823/openssl-sys-0.9.76/build/find_normal.rs:191:5

note: run with `RUST_BACKTRACE=1` environment variable to display a backtrace

**Expected behavior**

OpenSSL should be installed automatically.

**Screenshots or Video**

**Environments (please complete the following information):**

- System info: Linux jia 5.15.0-67-generic #74~20.04.1-Ubuntu SMP Wed Feb 22 14:52:34 UTC 2023 x86_64 x86_64 x86_64 GNU/Linux

- Dora version: dora-cli 0.2.0

**Additional context**

https://dora.carsmos.ai/dora/installation.html

This error is triggered when running `cargo build --all --release`.

There is a maximum number of open file handles per process (typically 1024). We currently allocate a separate shared memory region per output message, which can quickly exhaust this limit if the receiver buffers received inputs or when large queue sizes are used.

To avoid this problem, we should allocate larger shared memory buffers and use the same buffer for multiple messages.
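A minimal sketch of that direction, assuming one large buffer is split into fixed-size slots. A plain `Vec<u8>` stands in for the shared-memory region, and `SlotRegion`/`SlotHandle` are hypothetical names. Because every message lives in the same region, only one file handle would be needed no matter how many messages are buffered.

```rust
/// Identifies one message stored inside the shared region.
#[derive(Debug, Clone, Copy, PartialEq)]
struct SlotHandle {
    index: usize,
    len: usize,
}

/// One large buffer (stand-in for a single shared-memory region) divided
/// into equally sized slots, with a free list of unused slot indices.
struct SlotRegion {
    buf: Vec<u8>,
    slot_size: usize,
    free: Vec<usize>,
}

impl SlotRegion {
    fn new(slot_size: usize, slots: usize) -> Self {
        Self {
            buf: vec![0; slot_size * slots],
            slot_size,
            // Reversed so that `pop` hands out slot 0 first.
            free: (0..slots).rev().collect(),
        }
    }

    /// Copies a message into a free slot; `None` if it is too large or all slots are taken.
    fn write(&mut self, data: &[u8]) -> Option<SlotHandle> {
        if data.len() > self.slot_size {
            return None; // would need a fallback path (e.g. a dedicated region)
        }
        let index = self.free.pop()?;
        let start = index * self.slot_size;
        self.buf[start..start + data.len()].copy_from_slice(data);
        Some(SlotHandle { index, len: data.len() })
    }

    fn read(&self, handle: SlotHandle) -> &[u8] {
        let start = handle.index * self.slot_size;
        &self.buf[start..start + handle.len]
    }

    /// Returns the slot to the free list once every receiver is done with it.
    fn release(&mut self, handle: SlotHandle) {
        self.free.push(handle.index);
    }
}

fn main() {
    // One region for up to 4 messages of at most 16 bytes each.
    let mut region = SlotRegion::new(16, 4);
    let a = region.write(b"image frame 1").expect("free slot");
    let b = region.write(b"image frame 2").expect("free slot");
    println!("{:?} -> {}", a, String::from_utf8_lossy(region.read(a)));
    println!("{:?} -> {}", b, String::from_utf8_lossy(region.read(b)));
    region.release(a); // slot 0 becomes available again
    let c = region.write(b"image frame 3").expect("free slot");
    println!("{:?} reuses slot {}", c, c.index);
}
```

Fixed-size slots keep allocation and release O(1) but waste space when message sizes vary widely; a variable-size allocator avoids the waste at the cost of more bookkeeping. In either case a slot may only be reused after all receivers have dropped their reference to the message.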

Describe the bug

At first, dora-daemon and dora-coordinator run normally. When a dataflow is started, dora-coordinator responds slowly, and as a result the daemon exits on its own and cannot be restarted.

/home/jarvis/coding/dora_home/dora/target/debug/dora-daemon

Error: lost connection to coordinator

Location:

/home/jarvis/coding/dora_home/dora/binaries/daemon/src/lib.rs:247:29

Process finished with exit code 1

To Reproduce

Steps to reproduce the behavior:

dora up

RUST_LOG=info cargo run start /home/jarvis/coding/rust_home/github.com/meua/dora-drives/graphs/tutorials/webcam_midas_frame.yaml --attach --hot-reload

Expected behavior

After `dora up` is executed, dora-coordinator and dora-daemon are expected to run as system daemon processes and not exit unexpectedly.

Environments (please complete the following information):

Additional context

(dora3.7) jarvis@jia:~/coding/dora_home/dora/binaries/cli/src$ RUST_LOG=info cargo run start /home/jarvis/coding/rust_home/github.com/meua/dora-drives/graphs/tutorials/webcam_midas_frame.yaml --attach --hot-reload

Compiling rustix v0.36.13

Compiling dora-operator-api-c v0.2.3-rc6 (/home/jarvis/coding/dora_home/dora/apis/c/operator)

Compiling is-terminal v0.4.4

Compiling clap v4.1.11

Compiling bat v0.23.0

Compiling dora-cli v0.2.3-rc6 (/home/jarvis/coding/dora_home/dora/binaries/cli)

Finished dev [unoptimized + debuginfo] target(s) in 7.92s

Running `/home/jarvis/coding/dora_home/dora/target/debug/dora-cli start /home/jarvis/coding/rust_home/github.com/meua/dora-drives/graphs/tutorials/webcam_midas_frame.yaml --attach --hot-reload`

failed to spawn dataflow on machine ``

(dora3.7) jarvis@jia:~/coding/dora_home/dora/binaries/cli/src$

Describe the bug

dora-daemon dies because of a heartbeat timeout while dora-coordinator keeps running normally. After I restart dora-daemon, the dataflow cannot be closed with `dora stop <uuid>`.

(dora3.7) jarvis@jia:~/coding/dora_home/dora$ conda activate py310

(py310) jarvis@jia:~/coding/dora_home/dora$ dora-cli up

started dora coordinator

started dora daemon

(py310) jarvis@jia:~/coding/dora_home/dora$ dora-cli -V

dora-cli 0.2.3-rc6

(py310) jarvis@jia:~/coding/dora_home/dora$ dora-cli check

Dora Coordinator: ok

Dora Daemon: ok

(py310) jarvis@jia:~/coding/dora_home/dora$ dora-cli start examples/python-operator-dataflow/dataflow.yml --attach --hot-reload

10af7c98-604d-4808-b48a-7e028cb3d733

2023-05-19T03:53:57.743423Z WARN dora_coordinator: daemon at `` did not react as expected to watchdog message

Caused by:

0: failed to send watchdog message to daemon

1: Broken pipe (os error 32)

Location:

/home/jarvis/coding/dora_home/dora/binaries/coordinator/src/lib.rs:550:10

at binaries/coordinator/src/lib.rs:468

Open a new terminal and kill dora-daemon to simulate the daemon process dying unexpectedly:

(py310) jarvis@jia:~/coding/dora_home/dora$ dora-cli list

Running dataflows:

- [nappy-back] 10af7c98-604d-4808-b48a-7e028cb3d733

(py310) jarvis@jia:~/coding/dora_home/dora$ ps -ef | grep dora

jarvis 22117 1 0 11:41 pts/12 00:00:00 dora-coordinator

jarvis 22131 1 0 11:41 pts/12 00:00:01 dora-daemon

jarvis 24461 18206 0 11:53 pts/12 00:00:00 dora-cli start dataflow.yml --attach --hot-reload

jarvis 24464 22131 7 11:53 pts/12 00:00:01 python3 -c import dora; dora.start_runtime() # webcam

jarvis 24467 22131 8 11:53 pts/12 00:00:01 python3 -c import dora; dora.start_runtime() # plot

jarvis 24598 22333 0 11:53 pts/3 00:00:00 grep --color=auto dora

(py310) jarvis@jia:~/coding/dora_home/dora$ kill -15 22131

(py310) jarvis@jia:~/coding/dora_home/dora$ dora-cli list

Running dataflows:

- [nappy-back] 10af7c98-604d-4808-b48a-7e028cb3d733

(py310) jarvis@jia:~/coding/dora_home/dora$ dora-cli stop

> Choose dataflow to stop: [nappy-back] 10af7c98-604d-4808-b48a-7e028cb3d733

no daemon connection

(py310) jarvis@jia:~/coding/dora_home/dora$ dora-cli up

started dora daemon

(py310) jarvis@jia:~/coding/dora_home/dora$ dora-cli check

Dora Coordinator: ok

Dora Daemon: ok

(py310) jarvis@jia:~/coding/dora_home/dora$ dora-cli list

Running dataflows:

- [nappy-back] 10af7c98-604d-4808-b48a-7e028cb3d733

(py310) jarvis@jia:~/coding/dora_home/dora$ dora-cli stop

> Choose dataflow to stop: [nappy-back] 10af7c98-604d-4808-b48a-7e028cb3d733

failed to stop dataflow

(py310) jarvis@jia:~/coding/dora_home/dora$ dora-cli list

Running dataflows:

- [nappy-back] 10af7c98-604d-4808-b48a-7e028cb3d733

(py310) jarvis@jia:~/coding/dora_home/dora$ dora-cli -V

dora-cli 0.2.3-rc6

(py310) jarvis@jia:~/coding/dora_home/dora$

To Reproduce

Steps to reproduce the behavior:

dora-cli up

dora-cli start examples/python-operator-dataflow/dataflow.yml --attach --hot-reload

kill -15 pid_dora_daemon

dora-cli up

dora-cli stop uuid_your_dataflow

Expected behavior

I expect dora-coordinator and dora-daemon to live and die together, and to restart automatically when the heartbeat times out. Alternatively, when dora-daemon dies, its dataflows should be destroyed as well.
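The second expectation (dataflows are destroyed when their daemon dies) could look roughly like the following coordinator-side Rust sketch. `Coordinator`, `heartbeat`, `start_dataflow`, and `reap_dead_daemons` are hypothetical names, not dora's actual code, and a real implementation would also have to kill or notify the orphaned node processes.

```rust
use std::collections::HashMap;
use std::time::{Duration, Instant};

/// Hypothetical coordinator bookkeeping: a daemon that misses its heartbeat
/// for longer than `timeout` is considered dead, together with its dataflows.
struct Coordinator {
    timeout: Duration,
    last_heartbeat: HashMap<String, Instant>, // machine id -> last heartbeat
    dataflows: HashMap<String, Vec<String>>,  // machine id -> dataflow UUIDs
}

impl Coordinator {
    fn new(timeout: Duration) -> Self {
        Self { timeout, last_heartbeat: HashMap::new(), dataflows: HashMap::new() }
    }

    fn heartbeat(&mut self, machine: &str, now: Instant) {
        self.last_heartbeat.insert(machine.to_string(), now);
    }

    fn start_dataflow(&mut self, machine: &str, uuid: &str) {
        self.dataflows.entry(machine.to_string()).or_default().push(uuid.to_string());
    }

    /// Forgets daemons whose heartbeat timed out and returns the UUIDs of the
    /// dataflows that ran on them, so they can be removed from the running list.
    fn reap_dead_daemons(&mut self, now: Instant) -> Vec<String> {
        let timeout = self.timeout;
        let dead: Vec<String> = self
            .last_heartbeat
            .iter()
            .filter(|(_, last)| now.saturating_duration_since(**last) > timeout)
            .map(|(machine, _)| machine.clone())
            .collect();
        let mut destroyed = Vec::new();
        for machine in dead {
            self.last_heartbeat.remove(&machine);
            if let Some(flows) = self.dataflows.remove(&machine) {
                destroyed.extend(flows);
            }
        }
        destroyed
    }
}

fn main() {
    let mut coordinator = Coordinator::new(Duration::from_secs(5));
    let t0 = Instant::now();
    coordinator.heartbeat("machine-a", t0);
    coordinator.start_dataflow("machine-a", "10af7c98-604d-4808-b48a-7e028cb3d733");
    // One second later the daemon is still considered alive.
    assert!(coordinator.reap_dead_daemons(t0 + Duration::from_secs(1)).is_empty());
    // Ten seconds without a heartbeat: the daemon and its dataflow are cleaned up.
    let destroyed = coordinator.reap_dead_daemons(t0 + Duration::from_secs(10));
    println!("destroyed dataflows: {destroyed:?}");
}
```

Passing `now` explicitly instead of calling `Instant::now()` inside the method keeps the timeout logic deterministic and easy to test.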

Environments (please complete the following information):

We are planning a dora command line tool with the following subcommands:

For v0.1:

- help: Help about any command (generated by clap)
- check:
  - dora-coordinator is running
  - iox-roudi daemon is running
- build: Build the dora nodes/operators (similar to run.rs in current examples)
- graph: Generate the dataflow graph from the YAML spec
- templates: Dora node/operator templates
- start: Run a dora dataflow
- stop: Stop a dora dataflow
- destroy: Clean up and destroy all dora-related processes
- list: List running dora entities (resources)
- up: Install all dora dependencies and start the dora coordinator, iceoryx daemon and/or zenoh router, etc.
- upgrade: Upgrade dora

Later:

- dashboard: Open the dora dashboard in a web browser (future)
- logs: Tail logs from custom nodes/operators
- metrics: Fetch metrics from custom nodes/operators
- stats: Display dora/traffic statistics
- get: Get info about a dora entity (in the future, CRUD on dora entities may be needed)
- graph: Visualize the graph as an image directly (e.g. as SVG)

Other ideas:

- package: Create an archive file containing all compiled nodes for easier distribution
  - cargo package

See the discussion in #81 for more context.

Implementation PRs:

Env: MacOS

I followed the README to test the v0.1.0 release and got the following errors. Please take a look, @haixuanTao.

(base) ~/GitHub/dora-rs/bin/test1 $ dora start dataflow.yml

dyld[35552]: Library not loaded: /Library/Frameworks/Python.framework/Versions/3.11/Python

Referenced from: <49D90856-1186-3169-BACA-0F128A85669B> /Users/yonghe/GitHub/dora-rs/bin/dora-runtime

Reason: tried: '/Library/Frameworks/Python.framework/Versions/3.11/Python' (no such file), '/System/Volumes/Preboot/Cryptexes/OS/Library/Frameworks/Python.framework/Versions/3.11/Python' (no such file), '/Library/Frameworks/Python.framework/Versions/3.11/Python' (no such file), '/System/Library/Frameworks/Python.framework/Versions/3.11/Python' (no such file, not in dyld cache)

dyld[35551]: Library not loaded: /Library/Frameworks/Python.framework/Versions/3.11/Python

Referenced from: <49D90856-1186-3169-BACA-0F128A85669B> /Users/yonghe/GitHub/dora-rs/bin/dora-runtime

Reason: tried: '/Library/Frameworks/Python.framework/Versions/3.11/Python' (no such file), '/System/Volumes/Preboot/Cryptexes/OS/Library/Frameworks/Python.framework/Versions/3.11/Python' (no such file), '/Library/Frameworks/Python.framework/Versions/3.11/Python' (no such file), '/System/Library/Frameworks/Python.framework/Versions/3.11/Python' (no such file, not in dyld cache)

Traceback (most recent call last):

File "/Users/yonghe/GitHub/dora-rs/bin/test1/./node_1/node_1.py", line 4, in <module>

from dora import Node

ModuleNotFoundError: No module named 'dora'

2022-11-16T17:37:32.302484Z ERROR dora_coordinator: error occured in dataflow `48b40fe9-3a44-4a63-b952-122583f5fb4c`

Caused by:

0: failed to run dataflow at /Users/yonghe/GitHub/dora-rs/bin/test1/dataflow.yml

1: custom node failed

2: runtime node op_1 failed (unknown exit code)

Location:

binaries/coordinator/src/run/runtime.rs:55:17

at binaries/coordinator/src/lib.rs:90

48b40fe9-3a44-4a63-b952-122583f5fb4c

(base) ~/GitHub/dora-rs/bin/test1 $ ls ../

dataflow.yml dora-coordinator dora-v0.1.0-x86_64-macOS.zip test1

dora dora-runtime iceoryx

(base) ~/GitHub/dora-rs/bin/test1 $ python --version

Python 3.9.7

(base) ~/GitHub/dora-rs/bin/test1 $ pip --version

pip 21.2.4 from /usr/local/lib/python3.9/site-packages/pip (python 3.9)

(base) ~/GitHub/dora-rs/bin/test1 $

When running the following dataflow:

```yaml
communication:
  zenoh:
    prefix: /foo

nodes:
  - id: timer
    custom:
      run: ../target/release/examples/example_source_timer
      outputs:
        - time

  - id: rate-limited-timer
    custom:
      run: ../target/release/examples/rate_limit --seconds 0.5
      inputs:
        data: timer/time
      outputs:
        - rate_limited

  - id: random
    custom:
      run: ../target/release/examples/random_number
      inputs:
        timestamp: rate-limited-timer/rate_limited
      outputs:
        - number

  - id: logger
    custom:
      run: ../target/release/examples/example_sink_logger
      inputs:
        random: random/number
        time: timer/time
        timestamped-random: runtime-node/op-1/timestamped-random
        # c-counter: runtime-node/op-2/counter
        python-counter: runtime-node/op-4/counter

  - id: runtime-node
    operators:
      - id: op-1
        shared-library: ../target/release/libexample_operator.so
        inputs:
          random: random/number
          time: timer/time
        outputs:
          - timestamped-random

      # - id: op-2
      #   shared-library: ../runtime/examples/c-operator/operator.so
      #   inputs:
      #     time: timer/time
      #   outputs:
      #     - counter

      - id: op-3
        python: ../runtime/examples/python-operator/op.py
        inputs:
          time-python: timer/time
        outputs:
          - counter

      - id: op-4
        python: ../runtime/examples/python-operator/op.py
        inputs:
          counter: runtime-node/op-3/counter
        outputs:
          - counter
```

with the recommended command `cargo run --release -- run examples/mini-dataflow.yml`, I am able to lock one of the two Python operators in such a way that its queue never drains.

I think we have to look at how to enable multithreading for pyo3.

I will investigate

Proposed by @haixuanTao

Describe the bug

When the dora service is not started, running `dora list` reports an error.

To Reproduce

Steps to reproduce the behavior:

Environments (please complete the following information):

Linux jia 5.15.0-69-generic #76~20.04.1-Ubuntu SMP Mon Mar 20 15:54:19 UTC 2023 x86_64 x86_64 x86_64 GNU/Linux

Describe the bug

The processes cannot be destroyed with the `dora destroy` command.

To Reproduce

Steps to reproduce the behavior:

dora up

dora destroy

Environments (please complete the following information):

This problem came up while porting the NDT algorithm from the ROS2 ecosystem to the DORA framework.

The service (SRV) mechanism provided by ROS2 accepts a request sent by a client and sends back a response.

The NDT algorithm needs a GNSS signal or a manually assigned initial position before it can work, and it is sensitive to errors in that initial position. Since a GNSS signal (without RTK) or a manual initial position typically has an error of 2-10 meters, a particle filter + NDT approach is used to find an accurate position near the given initial position.

In the localization module of autoware.universe, the exact initial pose is obtained through an SRV mechanism, namely the serviceNDTAlign service in ndt_scan_matcher_core.cpp, which is called from the pose_initializer package. The relevant code is excerpted below:

```cpp
// pose_initializer_core.cpp
void PoseInitializer::on_initialize(
  const Initialize::Service::Request::SharedPtr req,
  const Initialize::Service::Response::SharedPtr res)
{
  // ...
  try {
    change_state(State::Message::INITIALIZING);
    auto pose = req->pose.empty() ? get_gnss_pose() : req->pose.front();
    if (ndt_) {
      pose = ndt_->align_pose(pose);
    }
    pose.pose.covariance = output_pose_covariance_;
    // ...
  }
}
```

```cpp
// ndt_module.cpp
NdtModule::NdtModule(rclcpp::Node * node) : logger_(node->get_logger())
{
  cli_align_ = node->create_client<RequestPoseAlignment>("ndt_align");
}

PoseWithCovarianceStamped NdtModule::align_pose(const PoseWithCovarianceStamped & pose)
{
  const auto req = std::make_shared<RequestPoseAlignment::Request>();
  req->pose_with_covariance = pose;

  if (!cli_align_->service_is_ready()) {
    throw component_interface_utils::ServiceUnready("NDT align server is not ready.");
  }

  RCLCPP_INFO(logger_, "Call NDT align server.");
  const auto res = cli_align_->async_send_request(req).get();
  if (!res->success) {
    RCLCPP_INFO(logger_, "NDT align server failed.");
    throw ServiceException(
      Initialize::Service::Response::ERROR_ESTIMATION, "NDT align server failed.");
  }
  RCLCPP_INFO(logger_, "NDT align server succeeded.");

  // Overwrite the covariance.
  return res->pose_with_covariance;
}
```

The implementation of the align_pose() function in ndt_module.cpp shows that the ROS2 SRV mechanism has two characteristics: first, before calling the service, one can check whether it is ready via `if (!cli_align_->service_is_ready())`; second, service calls are executed asynchronously.

At present, the DORA framework has no SRV mechanism, so Pub/Sub is temporarily used in its place. As dataflow.yml shows, the pose_initializer operator first sends an align_request_pose request message, and the ndt_scan_matcher operator then returns the response message.

```yaml
communication:
  zenoh:
    prefix: /localization-dataflow

nodes:
  - id: runtime-node
    operators:
      - id: pose_initializer
        shared-library: ../../../target/debug/pose_initializer
        inputs:
          manual_pose: manual_pose/initial_pose
          fitted_gnss_pose: runtime-node/map_height_fitter/fitted_gnss_pose
          aligned_response_pose: runtime-node/ndt_scan_matcher/aligned_response_pose
        outputs:
          - align_request_pose
          - initialpose3d

      - id: ndt_scan_matcher
        shared-library: ../../../target/debug/ndt_scan_matcher
        inputs:
          map_points: pointcloud_map_loader/map_points
          align_request_pose: runtime-node/pose_initializer/align_request_pose
          downsampled_pointcloud: runtime-node/pointcloud_downsample/downsampled_pointcloud
          regularization_pose: runtime-node/gnss_poser/gnss_pose_cov
          initial_pose: runtime-node/ekf_localizer/ekf_biased_pose_with_cov
          tf_baselink2lidar: tf_publisher/tf_baselink2lidar
        outputs:
          - ndt_pose_with_cov
          - aligned_response_pose
```

This approximate alternative cannot provide the two SRV characteristics mentioned above. First, it is impossible to check whether the service is ready before calling it: the ndt_align service provided by ndt_scan_matcher is actually ready only after the operator has received both a map_points and a downsampled_pointcloud input message. Since both ROS2 and Dora use a Pub/Sub distributed communication model, the node/operator startup order cannot be specified, so in theory there is no guarantee that the ndt_scan_matcher operator receives the map_points and downsampled_pointcloud messages before the align_request_pose request. Second, there is no asynchronous call mode, or at least I do not know how to use an asynchronous mechanism when implementing a DORA operator.

So the current alternative will have performance bottlenecks. Is there a better alternative to SRV? Looking forward to your reply.
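One possible workaround for both points is to correlate requests and responses by an ID and have the responder queue requests until its prerequisite inputs have arrived; the requester then does not block, but matches each response to its pending request when it arrives. The sketch below shows that idea in plain Rust, independent of the dora API. `Request`, `Response`, `NdtAlignService`, and its methods are hypothetical names, and the alignment itself is a placeholder.

```rust
use std::collections::VecDeque;

/// A request carrying a caller-chosen ID so the response can be matched to it.
#[derive(Debug, Clone, PartialEq)]
pub struct Request {
    pub id: u64,
    pub initial_pose: [f64; 3],
}

/// A response that echoes the ID of the request it answers.
#[derive(Debug, Clone, PartialEq)]
pub struct Response {
    pub id: u64,
    pub aligned_pose: [f64; 3],
}

/// Hypothetical responder: it only becomes "ready" once both prerequisite
/// inputs (map points and a downsampled point cloud) have been received.
/// Requests that arrive earlier are queued instead of being dropped.
#[derive(Default)]
pub struct NdtAlignService {
    has_map: bool,
    has_cloud: bool,
    pending: VecDeque<Request>,
}

impl NdtAlignService {
    pub fn is_ready(&self) -> bool {
        self.has_map && self.has_cloud
    }

    /// Called when a map_points input arrives; may flush queued requests.
    pub fn on_map_points(&mut self) -> Vec<Response> {
        self.has_map = true;
        self.drain()
    }

    /// Called when a downsampled_pointcloud input arrives; may flush queued requests.
    pub fn on_downsampled_pointcloud(&mut self) -> Vec<Response> {
        self.has_cloud = true;
        self.drain()
    }

    /// Handle an incoming request: answered immediately if ready, otherwise queued.
    pub fn on_request(&mut self, req: Request) -> Vec<Response> {
        self.pending.push_back(req);
        self.drain()
    }

    fn drain(&mut self) -> Vec<Response> {
        if !self.is_ready() {
            return Vec::new();
        }
        self.pending
            .drain(..)
            .map(|req| Response {
                id: req.id,
                // Placeholder for the actual NDT alignment.
                aligned_pose: req.initial_pose,
            })
            .collect()
    }
}
```

On the requester side, pose_initializer would keep its own map from outstanding request IDs to whatever state it needs, and complete the matching entry when an aligned_response_pose message arrives, instead of waiting synchronously.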

Describe the bug

warning: variant `AllocateOutputSample` is never constructed
  --> binaries\runtime\src\operator\mod.rs:71:5
   |
70 | pub enum OperatorEvent {
   |          ------------- variant in this enum
71 |     AllocateOutputSample {
   |     ^^^^^^^^^^^^^^^^^^^^
   |
   = note: `OperatorEvent` has a derived impl for the trait `Debug`, but this is intentionally ignored during dead code analysis
   = note: `#[warn(dead_code)]` on by default

To Reproduce

Steps to reproduce the behavior:

git checkout -b v0.2.3-rc6 v0.2.3-rc6
cd binaries/daemon && cargo run

Environments (please complete the following information):

Zenoh channels are sometimes closed, which causes stopping a dataflow to fail.

This can be problematic when a dataflow needs to be stopped urgently.

(Reported by @haixuanTao)

Some goals that we try to achieve with this:

Describe the bug

jarvis@jia:~/coding/dora_home/dora/examples/python-dataflow$ ./run.sh

Looking in indexes: https://pypi.tuna.tsinghua.edu.cn/simple

Requirement already satisfied: maturin in ./.env/lib/python3.10/site-packages (0.15.1)

Requirement already satisfied: tomli>=1.1.0 in ./.env/lib/python3.10/site-packages (from maturin) (2.0.1)

🍹 Building a mixed python/rust project

🔗 Found pyo3 bindings with abi3 support for Python ≥ 3.7

🐍 Not using a specific python interpreter

📡 Using build options features from pyproject.toml

Finished dev [unoptimized + debuginfo] target(s) in 0.11s

⚠️ Warning: Failed to set rpath for /home/jarvis/coding/dora_home/dora/target/debug/libdora.so: Failed to execute 'patchelf', did you install it? Hint: Try `pip install maturin[patchelf]` (or just `pip install patchelf`)

📦 Built wheel for abi3 Python ≥ 3.7 to /tmp/.tmpeaEO15/dora_rs-0.2.3rc6-cp37-abi3-linux_x86_64.whl

🛠 Installed dora-rs-0.2.3rc6

Looking in indexes: https://pypi.tuna.tsinghua.edu.cn/simple

Requirement already satisfied: pip in ./.env/lib/python3.10/site-packages (23.1.2)

Looking in indexes: https://pypi.tuna.tsinghua.edu.cn/simple

Requirement already satisfied: gitpython in ./.env/lib/python3.10/site-packages (from -r requirements.txt (line 5)) (3.1.31)

Requirement already satisfied: ipython in ./.env/lib/python3.10/site-packages (from -r requirements.txt (line 6)) (8.13.2)

Requirement already satisfied: matplotlib>=3.2.2 in ./.env/lib/python3.10/site-packages (from -r requirements.txt (line 7)) (3.7.1)

Requirement already satisfied: numpy>=1.18.5 in ./.env/lib/python3.10/site-packages (from -r requirements.txt (line 8)) (1.24.3)

Requirement already satisfied: opencv-python>=4.1.1 in ./.env/lib/python3.10/site-packages (from -r requirements.txt (line 9)) (4.7.0.72)

Requirement already satisfied: Pillow>=7.1.2 in ./.env/lib/python3.10/site-packages (from -r requirements.txt (line 10)) (9.5.0)

Requirement already satisfied: psutil in ./.env/lib/python3.10/site-packages (from -r requirements.txt (line 11)) (5.9.5)

Requirement already satisfied: PyYAML>=5.3.1 in ./.env/lib/python3.10/site-packages (from -r requirements.txt (line 12)) (6.0)

Requirement already satisfied: requests>=2.23.0 in ./.env/lib/python3.10/site-packages (from -r requirements.txt (line 13)) (2.30.0)

Requirement already satisfied: scipy>=1.4.1 in ./.env/lib/python3.10/site-packages (from -r requirements.txt (line 14)) (1.10.1)

Requirement already satisfied: thop>=0.1.1 in ./.env/lib/python3.10/site-packages (from -r requirements.txt (line 15)) (0.1.1.post2209072238)

Requirement already satisfied: torch>=1.7.0 in ./.env/lib/python3.10/site-packages (from -r requirements.txt (line 16)) (2.0.1)

Requirement already satisfied: torchvision>=0.8.1 in ./.env/lib/python3.10/site-packages (from -r requirements.txt (line 17)) (0.15.2)

Requirement already satisfied: tqdm>=4.64.0 in ./.env/lib/python3.10/site-packages (from -r requirements.txt (line 18)) (4.65.0)

Requirement already satisfied: tensorboard>=2.4.1 in ./.env/lib/python3.10/site-packages (from -r requirements.txt (line 21)) (2.13.0)

Requirement already satisfied: pandas>=1.1.4 in ./.env/lib/python3.10/site-packages (from -r requirements.txt (line 26)) (2.0.1)

Requirement already satisfied: seaborn>=0.11.0 in ./.env/lib/python3.10/site-packages (from -r requirements.txt (line 27)) (0.12.2)

Requirement already satisfied: gitdb<5,>=4.0.1 in ./.env/lib/python3.10/site-packages (from gitpython->-r requirements.txt (line 5)) (4.0.10)

Requirement already satisfied: backcall in ./.env/lib/python3.10/site-packages (from ipython->-r requirements.txt (line 6)) (0.2.0)

Requirement already satisfied: decorator in ./.env/lib/python3.10/site-packages (from ipython->-r requirements.txt (line 6)) (5.1.1)

Requirement already satisfied: jedi>=0.16 in ./.env/lib/python3.10/site-packages (from ipython->-r requirements.txt (line 6)) (0.18.2)

Requirement already satisfied: matplotlib-inline in ./.env/lib/python3.10/site-packages (from ipython->-r requirements.txt (line 6)) (0.1.6)

Requirement already satisfied: pickleshare in ./.env/lib/python3.10/site-packages (from ipython->-r requirements.txt (line 6)) (0.7.5)

Requirement already satisfied: prompt-toolkit!=3.0.37,<3.1.0,>=3.0.30 in ./.env/lib/python3.10/site-packages (from ipython->-r requirements.txt (line 6)) (3.0.38)

Requirement already satisfied: pygments>=2.4.0 in ./.env/lib/python3.10/site-packages (from ipython->-r requirements.txt (line 6)) (2.15.1)

Requirement already satisfied: stack-data in ./.env/lib/python3.10/site-packages (from ipython->-r requirements.txt (line 6)) (0.6.2)

Requirement already satisfied: traitlets>=5 in ./.env/lib/python3.10/site-packages (from ipython->-r requirements.txt (line 6)) (5.9.0)

Requirement already satisfied: pexpect>4.3 in ./.env/lib/python3.10/site-packages (from ipython->-r requirements.txt (line 6)) (4.8.0)

Requirement already satisfied: contourpy>=1.0.1 in ./.env/lib/python3.10/site-packages (from matplotlib>=3.2.2->-r requirements.txt (line 7)) (1.0.7)

Requirement already satisfied: cycler>=0.10 in ./.env/lib/python3.10/site-packages (from matplotlib>=3.2.2->-r requirements.txt (line 7)) (0.11.0)

Requirement already satisfied: fonttools>=4.22.0 in ./.env/lib/python3.10/site-packages (from matplotlib>=3.2.2->-r requirements.txt (line 7)) (4.39.4)

Requirement already satisfied: kiwisolver>=1.0.1 in ./.env/lib/python3.10/site-packages (from matplotlib>=3.2.2->-r requirements.txt (line 7)) (1.4.4)

Requirement already satisfied: packaging>=20.0 in ./.env/lib/python3.10/site-packages (from matplotlib>=3.2.2->-r requirements.txt (line 7)) (23.1)

Requirement already satisfied: pyparsing>=2.3.1 in ./.env/lib/python3.10/site-packages (from matplotlib>=3.2.2->-r requirements.txt (line 7)) (3.0.9)

Requirement already satisfied: python-dateutil>=2.7 in ./.env/lib/python3.10/site-packages (from matplotlib>=3.2.2->-r requirements.txt (line 7)) (2.8.2)

Requirement already satisfied: charset-normalizer<4,>=2 in ./.env/lib/python3.10/site-packages (from requests>=2.23.0->-r requirements.txt (line 13)) (3.1.0)

Requirement already satisfied: idna<4,>=2.5 in ./.env/lib/python3.10/site-packages (from requests>=2.23.0->-r requirements.txt (line 13)) (3.4)

Requirement already satisfied: urllib3<3,>=1.21.1 in ./.env/lib/python3.10/site-packages (from requests>=2.23.0->-r requirements.txt (line 13)) (1.26.15)

Requirement already satisfied: certifi>=2017.4.17 in ./.env/lib/python3.10/site-packages (from requests>=2.23.0->-r requirements.txt (line 13)) (2023.5.7)

Requirement already satisfied: filelock in ./.env/lib/python3.10/site-packages (from torch>=1.7.0->-r requirements.txt (line 16)) (3.12.0)

Requirement already satisfied: typing-extensions in ./.env/lib/python3.10/site-packages (from torch>=1.7.0->-r requirements.txt (line 16)) (4.5.0)

Requirement already satisfied: sympy in ./.env/lib/python3.10/site-packages (from torch>=1.7.0->-r requirements.txt (line 16)) (1.12)

Requirement already satisfied: networkx in ./.env/lib/python3.10/site-packages (from torch>=1.7.0->-r requirements.txt (line 16)) (3.1)

Requirement already satisfied: jinja2 in ./.env/lib/python3.10/site-packages (from torch>=1.7.0->-r requirements.txt (line 16)) (3.1.2)

Requirement already satisfied: nvidia-cuda-nvrtc-cu11==11.7.99 in ./.env/lib/python3.10/site-packages (from torch>=1.7.0->-r requirements.txt (line 16)) (11.7.99)

Requirement already satisfied: nvidia-cuda-runtime-cu11==11.7.99 in ./.env/lib/python3.10/site-packages (from torch>=1.7.0->-r requirements.txt (line 16)) (11.7.99)

Requirement already satisfied: nvidia-cuda-cupti-cu11==11.7.101 in ./.env/lib/python3.10/site-packages (from torch>=1.7.0->-r requirements.txt (line 16)) (11.7.101)

Requirement already satisfied: nvidia-cudnn-cu11==8.5.0.96 in ./.env/lib/python3.10/site-packages (from torch>=1.7.0->-r requirements.txt (line 16)) (8.5.0.96)

Requirement already satisfied: nvidia-cublas-cu11==11.10.3.66 in ./.env/lib/python3.10/site-packages (from torch>=1.7.0->-r requirements.txt (line 16)) (11.10.3.66)

Requirement already satisfied: nvidia-cufft-cu11==10.9.0.58 in ./.env/lib/python3.10/site-packages (from torch>=1.7.0->-r requirements.txt (line 16)) (10.9.0.58)

Requirement already satisfied: nvidia-curand-cu11==10.2.10.91 in ./.env/lib/python3.10/site-packages (from torch>=1.7.0->-r requirements.txt (line 16)) (10.2.10.91)

Requirement already satisfied: nvidia-cusolver-cu11==11.4.0.1 in ./.env/lib/python3.10/site-packages (from torch>=1.7.0->-r requirements.txt (line 16)) (11.4.0.1)

Requirement already satisfied: nvidia-cusparse-cu11==11.7.4.91 in ./.env/lib/python3.10/site-packages (from torch>=1.7.0->-r requirements.txt (line 16)) (11.7.4.91)

Requirement already satisfied: nvidia-nccl-cu11==2.14.3 in ./.env/lib/python3.10/site-packages (from torch>=1.7.0->-r requirements.txt (line 16)) (2.14.3)

Requirement already satisfied: nvidia-nvtx-cu11==11.7.91 in ./.env/lib/python3.10/site-packages (from torch>=1.7.0->-r requirements.txt (line 16)) (11.7.91)

Requirement already satisfied: triton==2.0.0 in ./.env/lib/python3.10/site-packages (from torch>=1.7.0->-r requirements.txt (line 16)) (2.0.0)

Requirement already satisfied: setuptools in ./.env/lib/python3.10/site-packages (from nvidia-cublas-cu11==11.10.3.66->torch>=1.7.0->-r requirements.txt (line 16)) (67.7.2)

Requirement already satisfied: wheel in ./.env/lib/python3.10/site-packages (from nvidia-cublas-cu11==11.10.3.66->torch>=1.7.0->-r requirements.txt (line 16)) (0.40.0)

Requirement already satisfied: cmake in ./.env/lib/python3.10/site-packages (from triton==2.0.0->torch>=1.7.0->-r requirements.txt (line 16)) (3.26.3)

Requirement already satisfied: lit in ./.env/lib/python3.10/site-packages (from triton==2.0.0->torch>=1.7.0->-r requirements.txt (line 16)) (16.0.3)

Requirement already satisfied: absl-py>=0.4 in ./.env/lib/python3.10/site-packages (from tensorboard>=2.4.1->-r requirements.txt (line 21)) (1.4.0)

Requirement already satisfied: grpcio>=1.48.2 in ./.env/lib/python3.10/site-packages (from tensorboard>=2.4.1->-r requirements.txt (line 21)) (1.54.0)

Requirement already satisfied: google-auth<3,>=1.6.3 in ./.env/lib/python3.10/site-packages (from tensorboard>=2.4.1->-r requirements.txt (line 21)) (2.18.0)

Requirement already satisfied: google-auth-oauthlib<1.1,>=0.5 in ./.env/lib/python3.10/site-packages (from tensorboard>=2.4.1->-r requirements.txt (line 21)) (1.0.0)

Requirement already satisfied: markdown>=2.6.8 in ./.env/lib/python3.10/site-packages (from tensorboard>=2.4.1->-r requirements.txt (line 21)) (3.4.3)

Requirement already satisfied: protobuf>=3.19.6 in ./.env/lib/python3.10/site-packages (from tensorboard>=2.4.1->-r requirements.txt (line 21)) (4.23.0)

Requirement already satisfied: tensorboard-data-server<0.8.0,>=0.7.0 in ./.env/lib/python3.10/site-packages (from tensorboard>=2.4.1->-r requirements.txt (line 21)) (0.7.0)

Requirement already satisfied: werkzeug>=1.0.1 in ./.env/lib/python3.10/site-packages (from tensorboard>=2.4.1->-r requirements.txt (line 21)) (2.3.4)

Requirement already satisfied: pytz>=2020.1 in ./.env/lib/python3.10/site-packages (from pandas>=1.1.4->-r requirements.txt (line 26)) (2023.3)

Requirement already satisfied: tzdata>=2022.1 in ./.env/lib/python3.10/site-packages (from pandas>=1.1.4->-r requirements.txt (line 26)) (2023.3)

Requirement already satisfied: smmap<6,>=3.0.1 in ./.env/lib/python3.10/site-packages (from gitdb<5,>=4.0.1->gitpython->-r requirements.txt (line 5)) (5.0.0)

Requirement already satisfied: cachetools<6.0,>=2.0.0 in ./.env/lib/python3.10/site-packages (from google-auth<3,>=1.6.3->tensorboard>=2.4.1->-r requirements.txt (line 21)) (5.3.0)

Requirement already satisfied: pyasn1-modules>=0.2.1 in ./.env/lib/python3.10/site-packages (from google-auth<3,>=1.6.3->tensorboard>=2.4.1->-r requirements.txt (line 21)) (0.3.0)

Requirement already satisfied: six>=1.9.0 in ./.env/lib/python3.10/site-packages (from google-auth<3,>=1.6.3->tensorboard>=2.4.1->-r requirements.txt (line 21)) (1.16.0)

Requirement already satisfied: rsa<5,>=3.1.4 in ./.env/lib/python3.10/site-packages (from google-auth<3,>=1.6.3->tensorboard>=2.4.1->-r requirements.txt (line 21)) (4.9)

Requirement already satisfied: requests-oauthlib>=0.7.0 in ./.env/lib/python3.10/site-packages (from google-auth-oauthlib<1.1,>=0.5->tensorboard>=2.4.1->-r requirements.txt (line 21)) (1.3.1)

Requirement already satisfied: parso<0.9.0,>=0.8.0 in ./.env/lib/python3.10/site-packages (from jedi>=0.16->ipython->-r requirements.txt (line 6)) (0.8.3)

Requirement already satisfied: ptyprocess>=0.5 in ./.env/lib/python3.10/site-packages (from pexpect>4.3->ipython->-r requirements.txt (line 6)) (0.7.0)

Requirement already satisfied: wcwidth in ./.env/lib/python3.10/site-packages (from prompt-toolkit!=3.0.37,<3.1.0,>=3.0.30->ipython->-r requirements.txt (line 6)) (0.2.6)

Requirement already satisfied: MarkupSafe>=2.1.1 in ./.env/lib/python3.10/site-packages (from werkzeug>=1.0.1->tensorboard>=2.4.1->-r requirements.txt (line 21)) (2.1.2)

Requirement already satisfied: executing>=1.2.0 in ./.env/lib/python3.10/site-packages (from stack-data->ipython->-r requirements.txt (line 6)) (1.2.0)

Requirement already satisfied: asttokens>=2.1.0 in ./.env/lib/python3.10/site-packages (from stack-data->ipython->-r requirements.txt (line 6)) (2.2.1)

Requirement already satisfied: pure-eval in ./.env/lib/python3.10/site-packages (from stack-data->ipython->-r requirements.txt (line 6)) (0.2.2)

Requirement already satisfied: mpmath>=0.19 in ./.env/lib/python3.10/site-packages (from sympy->torch>=1.7.0->-r requirements.txt (line 16)) (1.3.0)

Requirement already satisfied: pyasn1<0.6.0,>=0.4.6 in ./.env/lib/python3.10/site-packages (from pyasn1-modules>=0.2.1->google-auth<3,>=1.6.3->tensorboard>=2.4.1->-r requirements.txt (line 21)) (0.5.0)

Requirement already satisfied: oauthlib>=3.0.0 in ./.env/lib/python3.10/site-packages (from requests-oauthlib>=0.7.0->google-auth-oauthlib<1.1,>=0.5->tensorboard>=2.4.1->-r requirements.txt (line 21)) (3.2.2)

Compiling dora-operator-api-c v0.2.3-rc6 (/home/jarvis/coding/dora_home/dora/apis/c/operator)

Compiling dora-cli v0.2.3-rc6 (/home/jarvis/coding/dora_home/dora/binaries/cli)

Finished dev [unoptimized + debuginfo] target(s) in 4.51s

Running `/home/jarvis/coding/dora_home/dora/target/debug/dora-cli up`

Finished dev [unoptimized + debuginfo] target(s) in 0.11s

Running `/home/jarvis/coding/dora_home/dora/target/debug/dora-daemon --run-dataflow dataflow_without_webcam.yml`

2023-05-23T09:19:39.129115Z ERROR dora_daemon:

ae86c2e2-49b1-4af2-b750-e9672d5abdf2/no_webcam failed with exit code 1.

Check logs using: dora logs ae86c2e2-49b1-4af2-b750-e9672d5abdf2 no_webcam

at binaries/daemon/src/lib.rs:906

2023-05-23T09:19:39.129165Z WARN dora_daemon::pending: node `no_webcam` exited before initializing dora connection

at binaries/daemon/src/pending.rs:76

2023-05-23T09:21:24.222078Z ERROR dora_daemon:

ae86c2e2-49b1-4af2-b750-e9672d5abdf2/object_detection failed with exit code 1.

Check logs using: dora logs ae86c2e2-49b1-4af2-b750-e9672d5abdf2 object_detection

at binaries/daemon/src/lib.rs:906

2023-05-23T09:21:24.222120Z WARN dora_daemon::pending: node `object_detection` exited before initializing dora connection

at binaries/daemon/src/pending.rs:76

2023-05-23T09:21:24.234340Z ERROR dora_daemon:

ae86c2e2-49b1-4af2-b750-e9672d5abdf2/plot failed with exit code 1.

Check logs using: dora logs ae86c2e2-49b1-4af2-b750-e9672d5abdf2 plot

at binaries/daemon/src/lib.rs:906

Error: some nodes failed:

- ae86c2e2-49b1-4af2-b750-e9672d5abdf2/no_webcam:

ae86c2e2-49b1-4af2-b750-e9672d5abdf2/no_webcam failed with exit code 1.

Check logs using: dora logs ae86c2e2-49b1-4af2-b750-e9672d5abdf2 no_webcam

- ae86c2e2-49b1-4af2-b750-e9672d5abdf2/object_detection:

ae86c2e2-49b1-4af2-b750-e9672d5abdf2/object_detection failed with exit code 1.

Check logs using: dora logs ae86c2e2-49b1-4af2-b750-e9672d5abdf2 object_detection

- ae86c2e2-49b1-4af2-b750-e9672d5abdf2/plot:

ae86c2e2-49b1-4af2-b750-e9672d5abdf2/plot failed with exit code 1.

Check logs using: dora logs ae86c2e2-49b1-4af2-b750-e9672d5abdf2 plot

Location:

/home/jarvis/coding/dora_home/dora/binaries/daemon/src/lib.rs:163:13

jarvis@jia:~/coding/dora_home/dora/examples/python-dataflow$

To Reproduce

Steps to reproduce the behavior:

cd examples/python-dataflow && ./run.sh

Environments (please complete the following information):

Describe the bug

(dora3.7) jarvis@jia:~/coding/rust_home/github.com/dora-rs/dora/binaries/daemon/src$ cargo run

Compiling pyo3-build-config v0.18.2

Compiling clap v4.1.11

Compiling pyo3-ffi v0.18.2

Compiling pyo3 v0.18.2

Compiling arrow v35.0.0

Compiling dora-node-api v0.2.2 (/home/jarvis/coding/rust_home/github.com/dora-rs/dora/apis/rust/node)

Compiling dora-operator-api-python v0.2.2 (/home/jarvis/coding/rust_home/github.com/dora-rs/dora/apis/python/operator)

Compiling dora-runtime v0.2.2 (/home/jarvis/coding/rust_home/github.com/dora-rs/dora/binaries/runtime)

Compiling dora-daemon v0.2.2 (/home/jarvis/coding/rust_home/github.com/dora-rs/dora/binaries/daemon)

Finished dev [unoptimized + debuginfo] target(s) in 9.55s

Running `/home/jarvis/coding/rust_home/github.com/dora-rs/dora/target/debug/dora-daemon main.rs`

/home/jarvis/coding/rust_home/github.com/dora-rs/dora/target/debug/dora-daemon: error while loading shared libraries: libpython3.7m.so.1.0: cannot open shared object file: No such file or directory

To Reproduce

Steps to reproduce the behavior:

cd /path/to/dora-rs/dora
cargo run

Environments (please complete the following information):

As performance is very important for us, I think we should design a benchmark harness.

I want to use criterion.rs, as it is a full-featured benchmarking suite with async support, although this will come with some added complexity.

If you're OK with that, @phil-opp, I will go down this road.

Good read on benchmarking: https://nnethercote.github.io/perf-book/benchmarking.html
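criterion.rs is an external crate, so as a dependency-free illustration of the core loop any benchmark harness runs (warm-up, repeated timed samples, summary statistics), here is a small stdlib-only Rust sketch. It is not criterion's API; `bench` and `BenchStats` are hypothetical names.

```rust
use std::hint::black_box;
use std::time::{Duration, Instant};

/// Summary of repeated timing samples.
#[derive(Debug)]
pub struct BenchStats {
    pub samples: usize,
    pub min: Duration,
    /// Upper median for even sample counts.
    pub median: Duration,
    pub max: Duration,
}

/// Run `f` `warmup` times untimed, then time `samples` runs and summarize.
/// `black_box` keeps the optimizer from discarding the measured work.
pub fn bench<T, F: FnMut() -> T>(warmup: usize, samples: usize, mut f: F) -> BenchStats {
    assert!(samples > 0, "need at least one sample");
    for _ in 0..warmup {
        let _ = black_box(f());
    }
    let mut times: Vec<Duration> = (0..samples)
        .map(|_| {
            let start = Instant::now();
            let _ = black_box(f());
            start.elapsed()
        })
        .collect();
    times.sort();
    BenchStats {
        samples,
        min: times[0],
        median: times[samples / 2],
        max: times[samples - 1],
    }
}
```

criterion builds on this basic loop with statistical outlier analysis, comparison against saved baselines, and async support, which is where the added complexity comes from.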

We need to implement MLA as a separate crate that provides a middleware abstraction layer enabling scalable, high-performance communication between async tasks, within a process (across OS threads), between processes on a single computer node, and between different nodes in a computer network. MLA needs to support different communication patterns:

The MLA needs to abstract the following details:

The Rust ecosystem has abundant crates that provide the underlying communication, e.g.:

MLA also needs to provide high level APIs:
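As a rough illustration of such an abstraction layer (not a design the MLA proposal specifies), the sketch below defines a transport-agnostic publisher/subscriber trait pair and one intra-process backend built on `std::sync::mpsc`. The trait names and methods are hypothetical; shared-memory or network backends (for example bindings to iceoryx or zenoh) would implement the same traits so application code does not change.

```rust
use std::sync::mpsc::{channel, Receiver, Sender};

/// Transport-agnostic sending half (hypothetical MLA interface).
pub trait Publisher<T> {
    fn publish(&self, msg: T) -> Result<(), String>;
}

/// Transport-agnostic receiving half; `None` means the channel is closed.
pub trait Subscriber<T> {
    fn recv(&self) -> Option<T>;
}

/// Intra-process backend: one producer and one consumer over an mpsc channel.
pub struct InProcPublisher<T>(Sender<T>);
pub struct InProcSubscriber<T>(Receiver<T>);

pub fn in_proc_channel<T>() -> (InProcPublisher<T>, InProcSubscriber<T>) {
    let (tx, rx) = channel();
    (InProcPublisher(tx), InProcSubscriber(rx))
}

impl<T> Publisher<T> for InProcPublisher<T> {
    fn publish(&self, msg: T) -> Result<(), String> {
        self.0.send(msg).map_err(|_| "subscriber dropped".to_string())
    }
}

impl<T> Subscriber<T> for InProcSubscriber<T> {
    fn recv(&self) -> Option<T> {
        // Blocks until a message arrives or every sender has been dropped.
        self.0.recv().ok()
    }
}

/// Application code depends only on the trait, not on the backend.
pub fn forward<P: Publisher<u32>>(publisher: &P, values: &[u32]) -> Result<(), String> {
    for &v in values {
        publisher.publish(v)?;
    }
    Ok(())
}
```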

After porting the NDT algorithm from the ROS2 ecosystem to the DORA framework, I ran some tests on its performance. The results show that NDT execution is very time-consuming. I've been trying to find the reason for the past few days but haven't been able to solve the problem so far. The following is the detailed debugging process.

NDT operator execution time on Dora side

First, I added the necessary print statements to ndt_scan_matcher.cpp to measure the time required for NDT execution.

// ndt_scan_matcher.cpp
geometry_msgs::msg::PoseWithCovarianceStamped NDTScanMatcher::alignUsingMonteCarlo(
  const std::shared_ptr<NormalDistributionsTransformBase<PointSource, PointTarget>> & ndt_ptr,
  const geometry_msgs::msg::PoseWithCovarianceStamped & initial_pose_with_cov)
{
  // ...
  for (unsigned int i = 0; i < initial_poses.size(); i++) {
    const auto exe_start_time = std::chrono::system_clock::now();  // for debug
    // ...
    ndt_ptr->align(*output_cloud, initial_pose_matrix);
    // ...
    const auto exe_end_time = std::chrono::system_clock::now();  // for debug
    const double exe_time =
      std::chrono::duration_cast<std::chrono::microseconds>(exe_end_time - exe_start_time).count() / 1000.0;
    std::cout << "The alignUsingMonteCarlo execute time is: " << exe_time << " ms, "
              << "and NumIteration is: " << num_iteration << std::endl;  // for debug
    // ...
  }
}

Here, ndt_ptr->align(*output_cloud, initial_pose_matrix); calls a multi-core version of the NDT algorithm. This multi-core implementation uses OpenMP-based multi-threading. The corresponding parallel settings are in ndt_omp_impl.hpp, extracted as follows.

// ndt_omp_impl.hpp
template<typename PointSource, typename PointTarget>
double pclomp::NormalDistributionsTransform<PointSource, PointTarget>::computeDerivatives(
  Eigen::Matrix<double, 6, 1> & score_gradient,
  Eigen::Matrix<double, 6, 6> & hessian,
  PointCloudSource & trans_cloud,
  Eigen::Matrix<double, 6, 1> & p,
  bool compute_hessian)
{
  // ...
  // const auto parallel_start_time = std::chrono::system_clock::now();  // for debug
#pragma omp parallel for num_threads(num_threads_) schedule(guided, 8)
  for (std::size_t idx = 0; idx < input_->points.size(); idx++)
  {
    int thread_n = omp_get_thread_num();
    // ...
  }
  // const auto parallel_end_time = std::chrono::system_clock::now();  // for debug
  // const double parallel_exe_time = std::chrono::duration_cast<std::chrono::microseconds>(parallel_end_time - parallel_start_time).count() / 1000.0;
  // printf("---- computeDerivatives parallel part execute time is %f ms ----\n", parallel_exe_time);  // for debug

  // const auto serial_start_time = std::chrono::system_clock::now();  // for debug
  for (std::size_t i = 0; i < input_->points.size(); i++) {
    // ...
  }
  if (regularization_pose_) {
    // ...
  }
  // const auto serial_end_time = std::chrono::system_clock::now();  // for debug
  // const double serial_exe_time = std::chrono::duration_cast<std::chrono::microseconds>(serial_end_time - serial_start_time).count() / 1000.0;
  // printf("---- computeDerivatives serial part execute time is %f ms ----\n", serial_exe_time);  // for debug
}

The test results are shown in the figures below. The NDT execution time is usually above 400 ms, far from meeting the real-time requirements of localization. The htop and pstree commands indicate that the multi-threading mechanism itself works properly.
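The timing code in this report uses std::chrono::system_clock, which is a wall clock and can jump if the system time is adjusted; for measuring intervals a monotonic clock (std::chrono::steady_clock in C++) is the usual choice. The same measure-and-report pattern looks like this in Rust with std::time::Instant, which is monotonic; `time_ms` is a hypothetical helper name.

```rust
use std::time::Instant;

/// Run `f`, returning its result together with the elapsed time in milliseconds.
/// `Instant` is monotonic, so the measurement is unaffected by wall-clock changes.
pub fn time_ms<T, F: FnOnce() -> T>(f: F) -> (T, f64) {
    let start = Instant::now();
    let out = f();
    let elapsed_ms = start.elapsed().as_secs_f64() * 1000.0;
    (out, elapsed_ms)
}
```

Wrapping only the align call, and separately only the parallel and serial parts of computeDerivatives, in such a helper gives directly comparable numbers on both the ROS2 and Dora sides.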

To find out which code is time-consuming, I added more print statements to ndt_omp_impl.hpp (see the lines commented out with "//" in the code above). The result shows that the computeDerivatives function takes about 70 ms per call, and this function is called many times each time the NDT algorithm runs. Below is the corresponding screenshot.
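computeDerivatives follows a "parallel loop, then serial pass" structure. The sketch below is a loose Rust analogue of that pattern, not the pclomp code: each worker thread accumulates into its own partial result with no shared writes, and the partials are combined serially afterwards. Unlike OpenMP's schedule(guided, 8), the work here is split into equal static chunks; `parallel_sum` is a hypothetical name.

```rust
use std::thread;

/// Sum `f(x)` over `points` using up to `num_threads` scoped worker threads.
/// Each worker sums its own chunk; the per-thread partials are then reduced serially.
pub fn parallel_sum<F>(points: &[f64], num_threads: usize, f: F) -> f64
where
    F: Fn(f64) -> f64 + Sync,
{
    let num_threads = num_threads.max(1);
    // Static chunking: ceil(len / num_threads), at least 1 so `chunks` never panics.
    let chunk_len = ((points.len() + num_threads - 1) / num_threads).max(1);
    let f = &f; // shared reference so every worker can call the same closure
    let partials: Vec<f64> = thread::scope(|s| {
        let handles: Vec<_> = points
            .chunks(chunk_len)
            .map(|chunk| s.spawn(move || chunk.iter().map(|&p| f(p)).sum::<f64>()))
            .collect();
        handles.into_iter().map(|h| h.join().unwrap()).collect()
    });
    // Serial reduction over the per-thread partial results.
    partials.iter().sum()
}
```

Timing the parallel and serial parts separately, as the commented-out lines above do, shows which half of this structure is responsible for a slowdown.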

NDT execution time on ROS2 side

For comparison, the screenshots of NDT execution time on the ROS2 side are as follows. The computeDerivatives function takes only about 0.7 ms, and the total NDT execution time is about 20 ms.

NDT node execution time on Dora side

Since a Dora operator itself runs as a thread, I rewrote ndt_scan_matcher as a Dora node to rule out any influence of this on NDT's multi-threading, and recorded its execution time. The results are as follows:

They are similar to the results with ndt_scan_matcher ported as a Dora operator.

I carefully analyzed the code in ndt_omp_impl.hpp, and there seems to be nothing special about it. So it is strange that the same code takes only about 20 ms on the ROS2 side but about 1000 ms on the Dora side. Looking forward to your advice.

This ROS2-Rust project https://github.com/ros2-rust/ros2_rust is interesting for Dora to support some ROS2 APIs (rclrs)

Describe the bug

error[E0554]: `#![feature]` may not be used on the stable release channel
  --> /home/jarvis/.cargo/registry/src/github.com-1ecc6299db9ec823/rustix-0.36.9/src/lib.rs:99:26
   |
99 | #![cfg_attr(rustc_attrs, feature(rustc_attrs))]
   |                          ^^^^^^^^^^^^^^^^^^^^

To Reproduce

Steps to reproduce the behavior:

Environments (please complete the following information):

While we already have support for zero-copy inputs in Rust nodes, we still require copying the data for Python nodes. This can lead to decreased performance when using messages with large data. To avoid these slowdowns, we want to support zero-copy inputs for Python nodes too.

The fundamental challenge is that Python normally operates on owned data. So we have to use a special type such as the memoryview object to make the data accessible to Python without copying. What makes things more complex is that we need a custom freeing logic because we need to unmap the shared memory and report a drop event back to the sender node.
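A common way to attach custom freeing logic to memory handed across an FFI boundary is a release callback paired with an opaque private-data pointer; this is also how the Arrow C data interface handles ownership. The stdlib-only Rust sketch below imitates that pattern with a deliberately reduced struct: `RawBuffer`, `Owner`, `release_owner`, and `export` are hypothetical, and the real ArrowArray struct has more fields (length, null_count, offset, buffers, children, and so on). The drop counter stands in for "unmap the shared memory and report a drop event".

```rust
use std::ffi::c_void;
use std::sync::atomic::{AtomicUsize, Ordering};
use std::sync::Arc;

/// Reduced, C-compatible stand-in for an exported array: a data pointer,
/// a length, a release callback, and an opaque `private_data` pointer.
#[repr(C)]
pub struct RawBuffer {
    pub data: *const u8,
    pub len: usize,
    pub release: Option<unsafe extern "C" fn(*mut RawBuffer)>,
    pub private_data: *mut c_void,
}

/// Producer-side owner of the memory. Its `Drop` impl is where custom cleanup
/// would go; here it only bumps a counter so the effect is observable.
struct Owner {
    bytes: Vec<u8>,
    drops: Arc<AtomicUsize>,
}

impl Drop for Owner {
    fn drop(&mut self) {
        self.drops.fetch_add(1, Ordering::SeqCst);
    }
}

/// Release callback: reclaims and drops the boxed owner, then marks the struct released.
unsafe extern "C" fn release_owner(buf: *mut RawBuffer) {
    unsafe {
        let buf = &mut *buf;
        if !buf.private_data.is_null() {
            drop(Box::from_raw(buf.private_data as *mut Owner));
            buf.private_data = std::ptr::null_mut();
        }
        buf.release = None;
    }
}

/// Export `bytes` without copying: the consumer gets a pointer into the owner's
/// buffer, and cleanup runs only when the consumer calls `release`.
pub fn export(bytes: Vec<u8>, drops: Arc<AtomicUsize>) -> RawBuffer {
    let owner = Box::new(Owner { bytes, drops });
    // Moving the Box below does not move the Vec's heap buffer, so `data` stays valid.
    let data = owner.bytes.as_ptr();
    let len = owner.bytes.len();
    RawBuffer {
        data,
        len,
        release: Some(release_owner),
        private_data: Box::into_raw(owner) as *mut c_void,
    }
}
```

If the consumer never calls `release`, the owner is leaked rather than freed, which is why the Python side would need to call it from its own deallocation path.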

Using a non-standard data type makes it more complex to interact with the data. For example, special functions might be needed for reading, cloning, and slicing the data. Also, it is common to convert the data to other types (e.g. numpy arrays), which requires special conversion functions.

To keep the Python API simple and easy to use, it's a good idea to use some mature existing data format rather than creating our own custom data format. The Apache Arrow project provides such a data format that supports zero-copy data transfer across language boundaries. It's already quite popular and used in many projects and tools, so it looks like a good candidate for dora.

arrow provides two useful features for us:

Array datatype

release callback.

private_data pointer field

pyarrow:

use pyo3::ffi::Py_uintptr_t;

let pa = py.import("pyarrow")?;
let array = pa.getattr("Array")?.call_method1(
    "_import_from_c",
    (array_ptr as Py_uintptr_t, schema_ptr as Py_uintptr_t),
)?;

ArrowArray from Rust with custom drop semantics.

arrow2 crate

PrimitiveArray and the arrow2::ffi::mmap::slice function.

arrow2::array::Array trait for a custom type

arrow2::ffi::export_array_to_c function to create the Arrow array

arrow2 library automatically fills in a release implementation that calls the drop handler of our custom type

export_array_to_c function only works with the predefined array types (via downcasting)

Arrow array with custom drop logic using the arrow2 crate

Arrow arrays is specified, so we could do the creation manually

arrow crate

Buffer::from_custom_allocation looks quite promising for our use case

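The Buffer can then be converted to ArrayData using new_unchecked:

use arrow::array::ArrayData;
use arrow::datatypes::DataType;

// `new_unchecked` is `unsafe`: the caller must guarantee that `buffer`
// holds at least `len` valid u8 values.
let data = unsafe {
    ArrayData::new_unchecked(
        DataType::UInt8, // the DataType value, not the `UInt8Type` marker type
        len,             // number of elements
        Some(0),         // null count
        None,            // no validity bitmap
        0,               // offset
        vec![buffer],    // value buffer
        vec![],          // no child data
    )
};

The ArrayData struct can then be used to construct an FFI_ArrowArray through the new constructor.

FFI_ArrowSchema type: FFI_ArrowSchema::try_from(data.data_type())?

Env: Ubuntu 22.04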

Dora Release: 0.1.1 (0.1.0 on macOS has a similar issue)

Python example:

Rust example (using 0.1.1, I can build the example created by dora new rust_proj):

This issue is about why, after dora stop stops the dataflow, dora-coordinator has such high CPU usage. Furthermore, as a control-plane component, dora-coordinator should not have high CPU usage at all.

@phil-opp please take a look

Is your feature request related to a problem? Please describe.

Make sure that when outputs are sent, the receiving nodes or operators are ready to receive them as inputs.

Current solution

The current solution is quite hacky and requires creating a loop, like:

nodes:
  - id: oasis_agent
    custom:
      inputs:
        carla_gps_ready: carla_gps_op/ready
      outputs:
        - check
      source: shell
  - id: carla_gps_op
    operator:
      python: ../../carla/carla_gps_op.py
      outputs:
        - ready
      inputs:
        check: oasis_agent/check

Describe the solution you'd like

Event::OutputReady and Event::AllOutputsReady to our event stream

NodeEvent::OutputReady { id } to signal to a node that an output is ready

NodeEvent::AllOutputsReady to signal that all outputs are ready (similar to NodeEvent::AllInputsClosed)

Ready event to the daemon when it has finished its initialization method (e.g. __init__ for Python). Note: this is transparent to the user.

Ready event to the daemon when the dora node object is initialized (i.e. node = Node() in Python). Note: this is going to be transparent to the user.
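A minimal sketch of how the proposed events could be derived from individual Ready reports: track which expected outputs have reported ready, emit OutputReady for each new one, and emit AllOutputsReady exactly once when the set is complete. `ReadinessTracker` is hypothetical, and the `NodeEvent` enum here is a simplified stand-in, not dora's actual type.

```rust
use std::collections::HashSet;

/// Simplified stand-in for the proposed node events.
#[derive(Debug, Clone, PartialEq)]
pub enum NodeEvent {
    OutputReady { id: String },
    AllOutputsReady,
}

/// Tracks readiness of a node's expected outputs (hypothetical helper).
pub struct ReadinessTracker {
    expected: HashSet<String>,
    ready: HashSet<String>,
    all_sent: bool,
}

impl ReadinessTracker {
    pub fn new<I: IntoIterator<Item = String>>(expected: I) -> Self {
        Self {
            expected: expected.into_iter().collect(),
            ready: HashSet::new(),
            all_sent: false,
        }
    }

    /// Record that output `id` is ready; returns the events to forward to the node.
    /// Unknown or duplicate reports produce no OutputReady event.
    pub fn mark_ready(&mut self, id: &str) -> Vec<NodeEvent> {
        let mut events = Vec::new();
        if self.expected.contains(id) && self.ready.insert(id.to_string()) {
            events.push(NodeEvent::OutputReady { id: id.to_string() });
        }
        if !self.all_sent && self.ready.len() == self.expected.len() {
            self.all_sent = true;
            events.push(NodeEvent::AllOutputsReady);
        }
        events
    }
}
```

With this in place, oasis_agent in the example above could wait for AllOutputsReady instead of relying on the check/ready loop.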

We disabled that example on the CI for now in #162. Now that we merged that PR, we should create new test releases for some example nodes and enable the example on the CI again.

It seems that we have a memory leak in the Python operator API. I am not able to reproduce it with the Rust operator API, but it might also be present there.

If you unzip the following Python dataflow and run it with:

dora-coordinator --run-dataflow dataflow.yml

you will see the memory usage grow.

I found this issue while running the Carla leaderboard: after about 2 hours, I ran out of memory. So we might want to fix this issue for the Carla Leaderboard.

I'm currently investigating the different causes of this leak. It seems that pyo3 can easily create copies of Python objects. See: https://pyo3.rs/v0.15.1/memory.html . What I know so far:

iceoryx and zenoh.

Describe the bug

The Python installation package of dora 0.2.2 does not work correctly on Windows, and dora-drives cannot recognize the installed dora package.

To Reproduce

Steps to reproduce the behavior:

cd apis/python/node
dora start dataflow.yaml
pip install maturin
maturin develop
dora check --dataflow graphs/tutorials/webcam.yaml

Expected behavior

The dora Python package supports Windows 10.

Environments (please complete the following information):

To Reproduce

Steps to reproduce the behavior:

dora upgrade

RUST_BACKTRACE=1 environment variable to display a backtrace

Environments (please complete the following information):

Is your feature request related to a problem? Please describe.

Dora currently only has English documentation and no Chinese documentation, which is not very friendly to users in China.

Describe the solution you'd like

The Dora documentation can be switched between Chinese and English.

Describe alternatives you've considered

Dora provides Chinese documentation.

Describe the bug

The dora and dora-cli commands cannot be executed when dora is installed with conda on macOS.

To Reproduce

Steps to reproduce the behavior:

Expected behavior

dora runs successfully.

Describe the bug

If one operator's initialization fails, the other operators wait forever for it.

To Reproduce

Steps to reproduce the behavior:

dora up
dora start dataflow.yaml # with an operator whose initialization fails

P.S.: I've only tested this with a Python operator.
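One way to keep such a wait from hanging is to make it observe the failure. In the stdlib-only Rust sketch below (not dora's runtime code), each operator reports its initialization result over a channel; if the operator thread exits without reporting (for example because it panicked), its sender is dropped and the waiting side sees a disconnect instead of blocking indefinitely. `spawn_operator`, `wait_for_init`, and `InitError` are hypothetical names. Note this only covers operators that exit; one that hangs during initialization would still need a timeout.

```rust
use std::sync::mpsc::{channel, Receiver};
use std::thread;

#[derive(Debug, PartialEq)]
pub enum InitError {
    /// The operator reported an initialization error.
    Failed(String),
    /// The operator exited without reporting (its sender was dropped).
    Disconnected,
}

/// Wait for one initialization report. `recv` returns an error once the
/// sending side has been dropped, so an exited operator cannot block this forever.
pub fn wait_for_init(rx: &Receiver<Result<(), String>>) -> Result<(), InitError> {
    match rx.recv() {
        Ok(Ok(())) => Ok(()),
        Ok(Err(msg)) => Err(InitError::Failed(msg)),
        Err(_) => Err(InitError::Disconnected),
    }
}

/// Spawn an operator thread that runs `init` and reports its outcome.
/// If `init` panics, the thread unwinds, the sender is dropped, and the
/// waiter gets `Disconnected`.
pub fn spawn_operator<F>(init: F) -> Receiver<Result<(), String>>
where
    F: FnOnce() -> Result<(), String> + Send + 'static,
{
    let (tx, rx) = channel();
    thread::spawn(move || {
        let result = init();
        let _ = tx.send(result);
    });
    rx
}
```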

Is your feature request related to a problem? Please describe.

At present, dora supports C++, C, Python, and Rust, which covers several commonly used languages. However, Go has become the mainstream language for cloud-native development, so some AI algorithms are wrapped in Go.

Describe the solution you'd like

dora supports Go for defining operators/nodes.

Describe alternatives you've considered

Use Go to implement a dora operator or node and run it successfully.
