Hi,

I am writing because the way Smilei calculates the sum of weights in Screen diagnostics, and how to obtain a physical number of particles from this diagnostic, is still not clear to me, even after reading the documentation.

For context, I am currently running 1D simulations of laser-solid interaction. I record the electron weights as a function of kinetic energy on a Screen diagnostic inside the target, then use this as a source term in a Monte Carlo code to obtain results that I want to compare with experiments.

For this I need to understand how to normalise the Screen output to get a number of electrons rather than a density, but I don't know which length to use in the longitudinal direction (in the transverse directions I take the area of the beam spot as a typical surface).

Some people also told me that for 1D PIC simulations, the diagnostic outputs should be in number of particles per unit surface, because there is no information about the two transverse directions. For 2D, the outputs should be in number of particles per unit length, because two directions are resolved and one is unknown.

The weight calculation is probably different in their case, and to get to these units I would only need to multiply my output by a length in the longitudinal direction.

I think I understand that if the diagnostic is the sum of weights as a function of one spatial direction (so a density as a function of position), the bin size is the length to choose: the sum is taken over the whole bin, so the number of particles in the bin depends on its size.

However, I can't use the cell length as the longitudinal length with a Screen diagnostic, because it sums the weights of all particles passing through a single location. My normalisation would then depend strongly on the spatial resolution I choose for the simulation, which is clearly not physical.

I did some tests using the simulation length as the longitudinal length. I ran my simulation with different simulation lengths (adapting the simulation time to keep the same interaction time) and got these results:

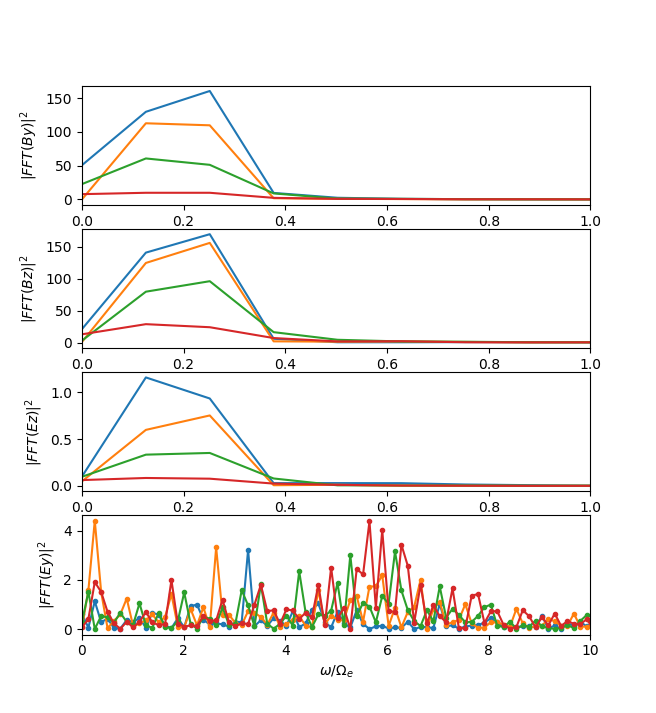

Output in $N_r/MeV$:

At first I was surprised that the results differed between simulation lengths, but I probably shouldn't have been.

After that I tried multiplying the output by the critical density, my simulation length (in meters), and the focal spot area (in square meters), and got this result:

Output in number of e-/MeV:

This looks much better.
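For concreteness, here is a minimal sketch of the scaling I applied to the Screen output. It only illustrates the arithmetic described above, and whether this is the correct normalisation is exactly my question. The wavelength, simulation length, spot radius, and spectrum values are placeholders, not my real parameters, and the function names are just for illustration (they are not part of Smilei or happi).

```python
import math

# Physical constants in SI units
EPSILON_0 = 8.8541878128e-12   # vacuum permittivity [F/m]
M_E = 9.1093837015e-31         # electron mass [kg]
Q_E = 1.602176634e-19          # elementary charge [C]
C = 299792458.0                # speed of light [m/s]


def critical_density(wavelength_m):
    """Critical density n_c = eps0 * m_e * omega^2 / e^2, in m^-3."""
    omega = 2.0 * math.pi * C / wavelength_m
    return EPSILON_0 * M_E * omega**2 / Q_E**2


def to_electrons_per_mev(spectrum_nr_per_mev, wavelength_m,
                         sim_length_m, spot_area_m2):
    """Scale a spectrum given in N_r/MeV by n_c * L_sim * S.

    This is the scaling I tried, not a confirmed normalisation.
    """
    scale = critical_density(wavelength_m) * sim_length_m * spot_area_m2
    return [value * scale for value in spectrum_nr_per_mev]


# Placeholder inputs (assumptions for illustration only)
wavelength = 0.8e-6                    # laser wavelength [m]
sim_length = 20e-6                     # simulation length [m]
spot_radius = 5e-6                     # focal spot radius [m]
spot_area = math.pi * spot_radius**2   # focal spot area [m^2]
spectrum = [1.0e-3, 5.0e-4, 1.0e-4]    # made-up Screen output [N_r/MeV]

electrons = to_electrons_per_mev(spectrum, wavelength, sim_length, spot_area)
```

The scale factor has units of m^-3 x m x m^2, so it is dimensionless in SI, which is why the result comes out as a plain number of electrons per MeV.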

If I multiplied by the total number of cells instead, I would get the same agreement between these 3 curves (because that amounts to dividing all outputs by the same cell length in meters), but then I have a problem with units. So I need confirmation that multiplying by the simulation length is the right thing to do, and I would like to understand why.
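Written out, with $\Delta x$ the cell length (the same in all three runs), $L_{sim}$ the simulation length, and $N_{cells}$ the total number of cells (these symbols are my own notation, not Smilei's):

$$N_{cells} = \frac{L_{sim}}{\Delta x} \quad\Rightarrow\quad \text{output} \times N_{cells} = \frac{\text{output} \times L_{sim}}{\Delta x}$$

So the two scalings differ only by the constant factor $1/\Delta x$. That is why the curves would agree equally well in both cases, while only the version with $L_{sim}$ gives the units I expect.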

Apart from this, I also did some tests on the particle_per_cell number, and found that the neutrality condition between electrons and ions does not have to be respected for the number of particles per cell to get good results, as long as it is respected for the density. I did not find this information in the doc (which now looks great!), and I think it would be nice to include it because it's a good feature of Smilei :)

Thank you,

L.