tusen-ai / simpledet

A Simple and Versatile Framework for Object Detection and Instance Recognition

License: Apache License 2.0

I'm reading the TridentNet paper and its code implementation, and I'm confused by `kept_rois[i].push_back(gt_tmp);` in proposal_target_v2-inl.h, Line 204.

I wonder whether gt_tmp should be appended to kept_rois[i] at all. As I understand it, kept_rois[i] holds the proposal boxes (generated by the RPN, etc.) that are valid at the current scale (valid range) i, so GT boxes perhaps should not be added to it. I also wonder whether GT boxes that are invalid at scale i should be removed from kept_gtbboxes[i]; the implementation does not seem to remove them.

Could this lead to incorrect overlap values when BBoxOverlap(all_rois, gt_boxes, IOUs) is invoked? The tensor all_rois may contain GT boxes, and the tensor gt_boxes may contain GT boxes that are invalid at the current scale (valid range) i.

I wonder whether this is an issue or my misunderstanding. Thanks very much.
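To make the question concrete, here is a minimal NumPy sketch of the two steps being asked about. It is not simpledet's `proposal_target_v2` code: the helper names, the `sqrt(w * h)` valid-range criterion and the example range `[0, 50]` are assumptions for illustration. It filters GT boxes by one branch's valid range, then appends the surviving GT boxes to the proposals before computing IoU, which is what py-faster-rcnn does so that every GT box has at least one perfectly overlapping ROI.

```python
import numpy as np

def box_area(boxes):
    """Area of (x1, y1, x2, y2) boxes, continuous convention (no +1)."""
    return (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])

def filter_by_valid_range(gt_boxes, lo, hi):
    """Hypothetical helper: keep GT boxes whose sqrt(area) lies in [lo, hi]."""
    scale = np.sqrt(box_area(gt_boxes))
    return gt_boxes[(scale >= lo) & (scale <= hi)]

def iou_matrix(rois, gts):
    """Pairwise IoU between N rois and M GT boxes, shape (N, M)."""
    x1 = np.maximum(rois[:, None, 0], gts[None, :, 0])
    y1 = np.maximum(rois[:, None, 1], gts[None, :, 1])
    x2 = np.minimum(rois[:, None, 2], gts[None, :, 2])
    y2 = np.minimum(rois[:, None, 3], gts[None, :, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    union = box_area(rois)[:, None] + box_area(gts)[None, :] - inter
    return inter / union

proposals = np.array([[0, 0, 10, 10], [50, 50, 150, 150]], dtype=np.float32)
gt_boxes = np.array([[0, 0, 12, 12], [40, 40, 160, 160]], dtype=np.float32)

# Hypothetical branch range [0, 50]: only the small GT (sqrt(area) = 12) survives.
valid_gt = filter_by_valid_range(gt_boxes, 0, 50)
# py-faster-rcnn-style step: append the (already filtered) GT boxes to the proposals.
all_rois = np.concatenate([proposals, valid_gt], axis=0)
ious = iou_matrix(all_rois, valid_gt)
print(ious)
```

Because the appended GT boxes come from the already-filtered set, every GT column the IoU is computed against is valid for this branch; whether proposal_target_v2 filters kept_gtbboxes[i] the same way is exactly what the issue asks.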

Hi!

Really great job! Thank you!

I wonder whether you could provide a Pascal VOC format data loader. I think it would also be very useful. Thanks again.

After `import mxnext`, I get:

[Warning] Your mxnet does not support ProposalTarget

[Warning] Your mxnet does not support DecodeBBox

[Warning] Your mxnet does not support BBoxNorm

[Warning] Your mxnet does not support FocalLoss

But my folder /home/lc/work/simpledet/operator_cxx/contrib does contain focal_loss.cu, focal_loss.cc, focal_loss.o ...

I would like to know the meaning of the parameters proposal_wo_gt, fg_fraction, fg_thr, bg_thr_hi and bg_thr_lo in RpnParam.subsample_proposal.

Additionally, what does class Neck mean? It doesn't seem to do anything in the code.

    class Neck(object):
        def __init__(self, pNeck):
            self.pNeck = pNeck

        def get_rpn_feature(self, rpn_feat):
            return rpn_feat

        def get_rcnn_feature(self, rcnn_feat):
            return rcnn_feat

Is it a structure in Faster R-CNN?
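In the standard Faster R-CNN proposal sampler (py-faster-rcnn's `FG_FRACTION`, `FG_THRESH`, `BG_THRESH_HI`, `BG_THRESH_LO`), these parameters mean: at most `fg_fraction` of the sampled ROIs are foreground, a ROI is foreground if its best IoU with any GT box is at least `fg_thr`, and background if that IoU lies in `[bg_thr_lo, bg_thr_hi)`. The sketch below assumes simpledet's parameters follow that convention; it is not simpledet's implementation, and `rois_per_image` and the default values are hypothetical. `proposal_wo_gt` is not covered: from its name it presumably controls whether GT boxes are appended to the proposals, but that is a guess.

```python
import numpy as np

def subsample_proposals(max_overlaps, rois_per_image=512, fg_fraction=0.25,
                        fg_thr=0.5, bg_thr_hi=0.5, bg_thr_lo=0.0, rng=None):
    """Pick foreground/background ROI indices in the py-faster-rcnn style.

    max_overlaps[i] is the best IoU of proposal i with any GT box.
    """
    rng = np.random.default_rng() if rng is None else rng
    # Foreground: proposals overlapping some GT box by at least fg_thr.
    fg_inds = np.where(max_overlaps >= fg_thr)[0]
    fg_quota = int(round(fg_fraction * rois_per_image))
    num_fg = min(fg_quota, fg_inds.size)
    if num_fg < fg_inds.size:
        fg_inds = rng.choice(fg_inds, size=num_fg, replace=False)
    # Background: proposals whose best IoU lies in [bg_thr_lo, bg_thr_hi).
    bg_inds = np.where((max_overlaps < bg_thr_hi) & (max_overlaps >= bg_thr_lo))[0]
    # Background fills whatever the foreground did not use.
    num_bg = min(rois_per_image - num_fg, bg_inds.size)
    if num_bg < bg_inds.size:
        bg_inds = rng.choice(bg_inds, size=num_bg, replace=False)
    return fg_inds, bg_inds

overlaps = np.array([0.9, 0.7, 0.55, 0.3, 0.1, 0.05])
fg, bg = subsample_proposals(overlaps, rois_per_image=4, fg_fraction=0.25,
                             bg_thr_lo=0.1, rng=np.random.default_rng(0))
print(fg, bg)
```

With 4 ROIs and `fg_fraction=0.25`, only one of the three foreground candidates is kept, and the remaining slots go to the two proposals whose IoU is in `[0.1, 0.5)`; the proposal at 0.05 is neither.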

Thanks for open-sourcing this. How fast is TridentNet, and how does it compare with YOLO?

Hi,

Does SimpleDet support Ubuntu 18? My current workstation runs Ubuntu 18.

When I train Faster R-CNN on my own dataset, the RCNN accuracy drops from 0.9 to 0.5. This is very strange; what do you think the problem could be? Any advice would be appreciated, thanks.

In config/tridentnet_r101v2c4_c5_addminival_2x.py, DatasetParam.image_set is defined as follows:

https://github.com/TuSimple/simpledet/blob/30b658cb4ae38b77b14bb27135157d09ab34fa9d/config/tridentnet_r101v2c4_c5_addminival_2x.py#L119

but utils/generate_roidb.py has no mapping for testdev2017_gt_roidb,

https://github.com/TuSimple/simpledet/blob/7d1b95901997912f10c99eb2e4b4b39ff028509e/utils/generate_roidb.py#L15

so you can't generate COCO_testdev2017_gt_roidb.roidb.

This causes an error when you use config/tridentnet_r101v2c4_c5_addminival_2x.py to train/test, at the point where the roidb is looked up:

https://github.com/TuSimple/simpledet/blob/7d1b95901997912f10c99eb2e4b4b39ff028509e/detection_test.py#L39

So I think simply changing it to

image_set = ("coco_test-dev2017", )

can solve this problem.

Hi, thanks for the excellent work. Could you share Python versions of the C++ operators in the operator_cxx folder? Thanks.

Hi,

Do simpledet's C++ operators (cxx ops) support Windows?

Thanks.

The output of the command "git submodule init" is below:

yyf@tower-pc:~/simpledet-master/mxnet$ git submodule init

Submodule '3rdparty/cub' (https://github.com/dmlc/cub) not registered for path '3rdparty/cub'

Submodule '3rdparty/dlpack' (https://github.com/dmlc/dlpack) not registered for path '3rdparty/dlpack'

Submodule '3rdparty/dmlc-core' (https://github.com/dmlc/dmlc-core.git) not registered for path '3rdparty/dmlc-core'

Submodule '3rdparty/googletest' (https://github.com/google/googletest.git) not registered for path '3rdparty/googletest'

Submodule '3rdparty/mkldnn' (https://github.com/intel/mkl-dnn.git) not registered for path '3rdparty/mkldnn'

Submodule '3rdparty/mshadow' (https://github.com/dmlc/mshadow.git) not registered for path '3rdparty/mshadow'

Submodule '3rdparty/onnx-tensorrt' (https://github.com/onnx/onnx-tensorrt.git) not registered for path '3rdparty/onnx-tensorrt'

Submodule '3rdparty/openmp' (https://github.com/llvm-mirror/openmp) not registered for path '3rdparty/openmp'

Submodule '3rdparty/ps-lite' (https://github.com/dmlc/ps-lite) not registered for path '3rdparty/ps-lite'

Submodule '3rdparty/tvm' (https://github.com/dmlc/tvm) not registered for path '3rdparty/tvm'

Is this wrong behavior?

Then, after finishing the "cp" command, I'm wondering what went wrong.

Just a couple of files are missing.

I got mxnet using the command in your GitHub repo. How can I solve this?

Thanks.

After finishing the setup, I began to train the model, but an error arose.

How can i address this?

The log follows.

Traceback (most recent call last):

File "detection_train.py", line 12, in <module>

from core.detection_module import DetModule

File "/home/yyf/simpledet/core/detection_module.py", line 29, in <module>

from mxnet import metric

ImportError: cannot import name 'metric'

TridentNet is a backbone; YOLO is a detector. You can apply the trident block to YOLO to get the best of both worlds.

Originally posted by @RogerChern in #3 (comment)

Thanks for your remarkable work.

I want to make sure I understand how to combine Trident with YOLO.

Take YOLOv3 (Darknet-53 backbone) as an example: the final 4 conv blocks, with downsample rate 32, could be replaced with your trident blocks, and the three branches could each have their own independent YOLO head for detecting objects at different scales.

The original FPN-style upsampling would be removed. Whereas the original YOLOv3 detects large objects on small feature maps and small objects on large feature maps, this method detects objects of all sizes (small, medium, large) on feature maps of the same size, specifically 8x8.

Is that right?
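In the TridentNet paper the branches share weights and differ only in dilation rate (1, 2 and 3). The arithmetic below is a sketch, not simpledet code, of why that fits the description above: with padding equal to the dilation, a 3x3 conv leaves the spatial size unchanged on every branch, while the area each branch covers grows with the dilation. The 8x8 map and the stack of four stride-1 convs are hypothetical.

```python
def effective_kernel(k, dilation):
    """Extent of a k x k kernel once dilation - 1 gaps are inserted between taps."""
    return dilation * (k - 1) + 1

def output_size(n, k, stride, padding, dilation):
    """Spatial size after a dilated convolution (standard formula)."""
    return (n + 2 * padding - effective_kernel(k, dilation)) // stride + 1

def receptive_field(layers):
    """Receptive field, in input-map cells, of stacked (kernel, stride, dilation) convs."""
    rf, jump = 1, 1
    for k, s, d in layers:
        rf += (effective_kernel(k, d) - 1) * jump
        jump *= s
    return rf

for d in (1, 2, 3):
    size = output_size(8, 3, 1, padding=d, dilation=d)
    rf = receptive_field([(3, 1, d)] * 4)
    print(f"dilation={d}: output {size}x{size}, receptive field {rf} cells")
```

So every branch would produce a map of the same size, but each one "sees" a differently sized region, which is what lets separate heads specialize by object scale.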

Does anybody know whether the valid ranges refer to the object size in the original image or in the resized image (say, 800x1200)?

Also, in class RpnParam, what does image_anchor mean?

Many thanks!

Different models produce a high-frequency electrical noise from my GPUs. I have tried two GPU cards, but when I run Darknet for comparison there is no such noise.

flake8 testing of https://github.com/TuSimple/simpledet on Python 3.7.1

$ flake8 . --count --select=E901,E999,F821,F822,F823 --show-source --statistics

./symbol/builder.py:50:24: F821 undefined name 'RPNParam'

self.p = pRpn # type: RPNParam

^

./symbol/builder.py:240:25: F821 undefined name 'BboxParam'

self.p = pBbox # type: BboxParam

^

./symbol/builder.py:582:24: F821 undefined name 'RoiParam'

self.p = pRoi # type: RoiParam

^

./models/tridentnet/builder.py:74:24: F821 undefined name 'RPNParam'

self.p = pRpn # type: RPNParam

^

./models/maskrcnn/input.py:207:49: F821 undefined name '_WHITE'

cv2.drawContours(img, contours, -1, _WHITE, border_thick, cv2.LINE_AA)

^

./models/retinanet/input.py:20:25: F821 undefined name 'NormParam'

self.p = pNorm # type: NormParam

^

./models/retinanet/builder.py:43:24: F821 undefined name 'RPNParam'

self.p = pRpn # type: RPNParam

^

./models/FPN/builder.py:33:24: F821 undefined name 'RPNParam'

self.p = pRpn # type: RPNParam

^

./models/FPN/builder.py:419:24: F821 undefined name 'RoiParam'

self.p = pRoi # type: RoiParam

^

./core/detection_input.py:56:25: F821 undefined name 'NormParam'

self.p = pNorm # type: NormParam

^

./core/detection_input.py:80:27: F821 undefined name 'ResizeParam'

self.p = pResize # type: ResizeParam

^

./core/detection_input.py:269:24: F821 undefined name 'PadParam'

self.p = pPad # type: PadParam

^

./core/detection_input.py:309:27: F821 undefined name 'AnchorTarget2DParam'

self.p = pAnchor # type: AnchorTarget2DParam

^

13

E901, E999, F821, F822 and F823 are the "showstopper" flake8 issues that can halt the runtime with a SyntaxError, NameError, etc. These 5 differ from most other flake8 issues, which are merely "style violations": useful for readability, but they do not affect runtime safety.

Ubuntu 16.04

Python 3.5

Device 0: "GeForce GTX 1070"

CUDA Driver Version / Runtime Version 9.2 / 9.2

CUDA Capability Major/Minor version number: 6.1

echo "USE_OPENCV = 0" >> ./config.mk

echo "USE_BLAS = openblas" >> ./config.mk

echo "USE_CUDA = 1" >> ./config.mk

echo "USE_CUDA_PATH = /usr/local/cuda" >> ./config.mk

echo "USE_CUDNN = 1" >> ./config.mk

echo "USE_NCCL = 1" >> ./config.mk

echo "USE_DIST_KVSTORE = 1" >> ./config.mk

After all these steps, I only got

Segmentation fault (core dumped)

when running python3 detection_train.py --config config/detection_config.py

sudo singularity shell --no-home --nv -s /usr/bin/zsh --bind $WORKDIR /path/to/simpledet.img

What does $WORKDIR mean?

When I expand the anchor ratios, the RCNN loss becomes bigger. Can you help me reduce it? Any advice would be appreciated, thanks.

I couldn't find any records for TridentNet Faster R-CNN with fp16. Could you provide them? Is there a large performance difference between TridentNet Faster R-CNN with and without fp16? Thanks.

It seems that training requires a pretrained model.

I'm new to MXNet and don't know how to get this pretrained model.

Would you mind giving the download link for this pretrained model?

How can I run end-to-end detection on a single image? Is there a quick example?

I have trained several Faster R-CNN models with different backbones and want to ensemble them for better performance, but I am totally confused about how to do this. Could you give me some suggestions or references? Thank you anyway.

Hello, I'd like to know how many GPUs you used and how long you trained this network on the COCO dataset.

Hi! Have you ever tried adding or concatenating the multi-branch outputs? How did it work? Thanks!
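One common box-level approach, sketched here with NumPy and not a simpledet feature: run every model on the same image, pool the detections of each class, and apply NMS again over the pooled set. All function names are hypothetical, and the boxes are assumed to be per-class `(x1, y1, x2, y2)` arrays. Alternatives such as weighted box fusion are also used; which works best on a given dataset is an empirical question.

```python
import numpy as np

def nms(boxes, scores, iou_thr=0.5):
    """Greedy non-maximum suppression; returns kept indices in descending score order."""
    x1, y1, x2, y2 = boxes.T
    areas = (x2 - x1) * (y2 - y1)
    order = scores.argsort()[::-1]
    keep = []
    while order.size > 0:
        i = order[0]
        keep.append(i)
        rest = order[1:]
        xx1 = np.maximum(x1[i], x1[rest])
        yy1 = np.maximum(y1[i], y1[rest])
        xx2 = np.minimum(x2[i], x2[rest])
        yy2 = np.minimum(y2[i], y2[rest])
        inter = np.clip(xx2 - xx1, 0, None) * np.clip(yy2 - yy1, 0, None)
        iou = inter / (areas[i] + areas[rest] - inter)
        order = rest[iou <= iou_thr]  # drop boxes that overlap the kept one too much
    return np.array(keep, dtype=np.int64)

def ensemble_detections(per_model, iou_thr=0.5):
    """Merge one class's (boxes, scores) pairs from several models, then re-run NMS."""
    boxes = np.concatenate([b for b, _ in per_model], axis=0)
    scores = np.concatenate([s for _, s in per_model], axis=0)
    keep = nms(boxes, scores, iou_thr)
    return boxes[keep], scores[keep]

model_a = (np.array([[0, 0, 10, 10], [20, 20, 30, 30]], dtype=float), np.array([0.9, 0.6]))
model_b = (np.array([[1, 1, 10, 10]], dtype=float), np.array([0.8]))
merged_boxes, merged_scores = ensemble_detections([model_a, model_b])
print(merged_boxes, merged_scores)
```

If the models' scores are calibrated differently, one model can dominate the merged NMS; normalizing or weighting scores per model before pooling is one way to compensate.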

Thanks for the good work. I have a question about the "scale_loss_shift" parameter. I found it used as follows:

    scale_loss_shift = 128.0 if p.fp16 else 1.0
    cls_loss = mx.sym.SoftmaxOutput(data=cls_logit_reshape,
                                    label=cls_label,
                                    multi_output=True,
                                    normalization='valid',
                                    use_ignore=True,
                                    ignore_label=-1,
                                    grad_scale=1.0 * scale_loss_shift,
                                    name='rpn_cls_loss')

Question: why should fp16 vs. fp32 affect the grad_scale of the softmax loss? Any advice would be appreciated, thanks.
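A plausible reason, assuming `scale_loss_shift` is the usual fp16 loss-scaling trick: float16 cannot represent nonzero magnitudes below about 6e-8, so small gradients flowing back from the loss round to zero. Multiplying the loss gradient by a constant (128 here) shifts them into float16's representable range, and the same factor has to be divided out again before the weight update. Where simpledet divides it out (for example through the optimizer's `rescale_grad`) is an assumption not confirmed from the source. This NumPy snippet shows the underflow and the recovery:

```python
import numpy as np

# The smallest positive float16 value is 2**-24 (about 6e-8); anything below
# roughly half of that rounds to zero when cast to float16.
tiny_grad = 1e-8
scale = 128.0  # the scale_loss_shift value used when p.fp16 is set

unscaled_fp16 = np.float16(tiny_grad)          # underflows to 0.0
scaled_fp16 = np.float16(tiny_grad * scale)    # representable (a subnormal)
recovered = np.float32(scaled_fp16) / scale    # undo the scale in higher precision

print(unscaled_fp16, scaled_fp16, recovered)
```

The recovered value is only approximate because subnormal float16 numbers are coarsely spaced, but it is no longer zero, which is the point of the shift.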

When I install from scratch, I get this problem:

I use Anaconda 3 and have installed CUDA 9.2 and cuDNN 7.3.1.

When I run make -j in my Anaconda env,

something goes wrong:

In file included from /home/zty/Documents/simpledet/mxnet/3rdparty/mshadow/mshadow/tensor.h:16:0,

from include/mxnet/./base.h:32,

from include/mxnet/operator_util.h:43,

from src/operator/contrib/./quadratic_op-inl.h:29,

from src/operator/contrib/quadratic_op.cc:24:

/home/zty/Documents/simpledet/mxnet/3rdparty/mshadow/mshadow/./base.h:179:21: fatal error: cudnn.h: No such file or directory

When I set gpus in the config to a list that does not start from 0:

    class KvstoreParam:
        kvstore = "local"
        batch_image = General.batch_image
        gpus = [0, 1, 2, 3, 4, 5, 6, 7]
        fp16 = General.fp16

for example [1], [2, 3] or others, it raises this error during training:

Check failed: e == cudaSuccess || e == cudaErrorCudartUnloading CUDA: invalid device ordinal

Could you please help to fix it? Thank you.

02-03 11:03:56 lr 0.01125, lr_iters [320000, 426666]

02-03 11:03:56 warmup lr 0.0, warmup step 5333

Traceback (most recent call last):

File "/home/simple/anaconda2/envs/py3/lib/python3.6/site-packages/mxnet-1.3.1-py3.6.egg/mxnet/symbol/symbol.py", line 1522, in simple_bind

ctypes.byref(exe_handle)))

File "/home/simple/anaconda2/envs/py3/lib/python3.6/site-packages/mxnet-1.3.1-py3.6.egg/mxnet/base.py", line 251, in check_call

raise MXNetError(py_str(_LIB.MXGetLastError()))

mxnet.base.MXNetError: [18:04:07] src/storage/storage.cc:65: Check failed: e == cudaSuccess || e == cudaErrorCudartUnloading CUDA: invalid device ordinal

Stack trace returned 10 entries:

[bt] (0) /home/simple/anaconda2/envs/py3/lib/python3.6/site-packages/mxnet-1.3.1-py3.6.egg/mxnet/libmxnet.so(dmlc::StackTrace[abi:cxx11]()+0x5b) [0x7fdc758f9adb]

[bt] (1) /home/simple/anaconda2/envs/py3/lib/python3.6/site-packages/mxnet-1.3.1-py3.6.egg/mxnet/libmxnet.so(dmlc::LogMessageFatal::~LogMessageFatal()+0x28) [0x7fdc758fa648]

[bt] (2) /home/simple/anaconda2/envs/py3/lib/python3.6/site-packages/mxnet-1.3.1-py3.6.egg/mxnet/libmxnet.so(mxnet::StorageImpl::ActivateDevice(mxnet::Context)+0x5f) [0x7fdc77e7639f]

[bt] (3) /home/simple/anaconda2/envs/py3/lib/python3.6/site-packages/mxnet-1.3.1-py3.6.egg/mxnet/libmxnet.so(mxnet::StorageImpl::Alloc(mxnet::Storage::Handle*)+0x50) [0x7fdc77e718f0]

[bt] (4) /home/simple/anaconda2/envs/py3/lib/python3.6/site-packages/mxnet-1.3.1-py3.6.egg/mxnet/libmxnet.so(mxnet::common::InitZeros(mxnet::NDArrayStorageType, nnvm::TShape const&, mxnet::Context const&, int)+0x73f) [0x7fdc77f0020f]

[bt] (5) /home/simple/anaconda2/envs/py3/lib/python3.6/site-packages/mxnet-1.3.1-py3.6.egg/mxnet/libmxnet.so(mxnet::common::ReshapeOrCreate(std::__cxx11::basic_string<char, std::char_traits, std::allocator > const&, nnvm::TShape const&, int, mxnet::NDArrayStorageType, mxnet::Context const&, std::unordered_map<std::__cxx11::basic_string<char, std::char_traits, std::allocator >, mxnet::NDArray, std::hash<std::__cxx11::basic_string<char, std::char_traits, std::allocator > >, std::equal_to<std::__cxx11::basic_string<char, std::char_traits, std::allocator > >, std::allocator<std::pair<std::__cxx11::basic_string<char, std::char_traits, std::allocator > const, mxnet::NDArray> > >, bool)+0xa26) [0x7fdc77f093a6]

[bt] (6) /home/simple/anaconda2/envs/py3/lib/python3.6/site-packages/mxnet-1.3.1-py3.6.egg/mxnet/libmxnet.so(mxnet::exec::GraphExecutor::InitArguments(nnvm::IndexedGraph const&, std::vector<nnvm::TShape, std::allocatornnvm::TShape > const&, std::vector<int, std::allocator > const&, std::vector<int, std::allocator > const&, std::vector<mxnet::Context, std::allocatormxnet::Context > const&, std::vector<mxnet::Context, std::allocatormxnet::Context > const&, std::vector<mxnet::Context, std::allocatormxnet::Context > const&, std::vector<mxnet::OpReqType, std::allocatormxnet::OpReqType > const&, std::unordered_set<std::__cxx11::basic_string<char, std::char_traits, std::allocator >, std::hash<std::__cxx11::basic_string<char, std::char_traits, std::allocator > >, std::equal_to<std::__cxx11::basic_string<char, std::char_traits, std::allocator > >, std::allocator<std::__cxx11::basic_string<char, std::char_traits, std::allocator > > > const&, mxnet::Executor const, std::unordered_map<std::__cxx11::basic_string<char, std::char_traits, std::allocator >, mxnet::NDArray, std::hash<std::__cxx11::basic_string<char, std::char_traits, std::allocator > >, std::equal_to<std::__cxx11::basic_string<char, std::char_traits, std::allocator > >, std::allocator<std::pair<std::__cxx11::basic_string<char, std::char_traits, std::allocator > const, mxnet::NDArray> > >, std::vector<mxnet::NDArray, std::allocatormxnet::NDArray >, std::vector<mxnet::NDArray, std::allocatormxnet::NDArray >, std::vector<mxnet::NDArray, std::allocatormxnet::NDArray >)+0xd47) [0x7fdc77ef1ff7]

[bt] (7) /home/simple/anaconda2/envs/py3/lib/python3.6/site-packages/mxnet-1.3.1-py3.6.egg/mxnet/libmxnet.so(mxnet::exec::GraphExecutor::Init(nnvm::Symbol, mxnet::Context const&, std::map<std::__cxx11::basic_string<char, std::char_traits, std::allocator >, mxnet::Context, std::less<std::__cxx11::basic_string<char, std::char_traits, std::allocator > >, std::allocator<std::pair<std::__cxx11::basic_string<char, std::char_traits, std::allocator > const, mxnet::Context> > > const&, std::vector<mxnet::Context, std::allocatormxnet::Context > const&, std::vector<mxnet::Context, std::allocatormxnet::Context > const&, std::vector<mxnet::Context, std::allocatormxnet::Context > const&, std::unordered_map<std::__cxx11::basic_string<char, std::char_traits, std::allocator >, nnvm::TShape, std::hash<std::__cxx11::basic_string<char, std::char_traits, std::allocator > >, std::equal_to<std::__cxx11::basic_string<char, std::char_traits, std::allocator > >, std::allocator<std::pair<std::__cxx11::basic_string<char, std::char_traits, std::allocator > const, nnvm::TShape> > > const&, std::unordered_map<std::__cxx11::basic_string<char, std::char_traits, std::allocator >, int, std::hash<std::__cxx11::basic_string<char, std::char_traits, std::allocator > >, std::equal_to<std::__cxx11::basic_string<char, std::char_traits, std::allocator > >, std::allocator<std::pair<std::__cxx11::basic_string<char, std::char_traits, std::allocator > const, int> > > const&, std::unordered_map<std::__cxx11::basic_string<char, std::char_traits, std::allocator >, int, std::hash<std::__cxx11::basic_string<char, std::char_traits, std::allocator > >, std::equal_to<std::__cxx11::basic_string<char, std::char_traits, std::allocator > >, std::allocator<std::pair<std::__cxx11::basic_string<char, std::char_traits, std::allocator > const, int> > > const&, std::vector<mxnet::OpReqType, std::allocatormxnet::OpReqType > const&, std::unordered_set<std::__cxx11::basic_string<char, std::char_traits, std::allocator >, 
std::hash<std::__cxx11::basic_string<char, std::char_traits, std::allocator > >, std::equal_to<std::__cxx11::basic_string<char, std::char_traits, std::allocator > >, std::allocator<std::__cxx11::basic_string<char, std::char_traits, std::allocator > > > const&, std::vector<mxnet::NDArray, std::allocatormxnet::NDArray >, std::vector<mxnet::NDArray, std::allocatormxnet::NDArray >, std::vector<mxnet::NDArray, std::allocatormxnet::NDArray >, std::unordered_map<std::__cxx11::basic_string<char, std::char_traits, std::allocator >, mxnet::NDArray, std::hash<std::__cxx11::basic_string<char, std::char_traits, std::allocator > >, std::equal_to<std::__cxx11::basic_string<char, std::char_traits, std::allocator > >, std::allocator<std::pair<std::__cxx11::basic_string<char, std::char_traits, std::allocator > const, mxnet::NDArray> > >, mxnet::Executor*, std::unordered_map<nnvm::NodeEntry, mxnet::NDArray, nnvm::NodeEntryHash, nnvm::NodeEntryEqual, std::allocator<std::pair<nnvm::NodeEntry const, mxnet::NDArray> > > const&)+0xa6b) [0x7fdc77efc94b]

[bt] (8) /home/simple/anaconda2/envs/py3/lib/python3.6/site-packages/mxnet-1.3.1-py3.6.egg/mxnet/libmxnet.so(mxnet::Executor::SimpleBind(nnvm::Symbol, mxnet::Context const&, std::map<std::__cxx11::basic_string<char, std::char_traits, std::allocator >, mxnet::Context, std::less<std::__cxx11::basic_string<char, std::char_traits, std::allocator > >, std::allocator<std::pair<std::__cxx11::basic_string<char, std::char_traits, std::allocator > const, mxnet::Context> > > const&, std::vector<mxnet::Context, std::allocatormxnet::Context > const&, std::vector<mxnet::Context, std::allocatormxnet::Context > const&, std::vector<mxnet::Context, std::allocatormxnet::Context > const&, std::unordered_map<std::__cxx11::basic_string<char, std::char_traits, std::allocator >, nnvm::TShape, std::hash<std::__cxx11::basic_string<char, std::char_traits, std::allocator > >, std::equal_to<std::__cxx11::basic_string<char, std::char_traits, std::allocator > >, std::allocator<std::pair<std::__cxx11::basic_string<char, std::char_traits, std::allocator > const, nnvm::TShape> > > const&, std::unordered_map<std::__cxx11::basic_string<char, std::char_traits, std::allocator >, int, std::hash<std::__cxx11::basic_string<char, std::char_traits, std::allocator > >, std::equal_to<std::__cxx11::basic_string<char, std::char_traits, std::allocator > >, std::allocator<std::pair<std::__cxx11::basic_string<char, std::char_traits, std::allocator > const, int> > > const&, std::unordered_map<std::__cxx11::basic_string<char, std::char_traits, std::allocator >, int, std::hash<std::__cxx11::basic_string<char, std::char_traits, std::allocator > >, std::equal_to<std::__cxx11::basic_string<char, std::char_traits, std::allocator > >, std::allocator<std::pair<std::__cxx11::basic_string<char, std::char_traits, std::allocator > const, int> > > const&, std::vector<mxnet::OpReqType, std::allocatormxnet::OpReqType > const&, std::unordered_set<std::__cxx11::basic_string<char, std::char_traits, std::allocator >, 
std::hash<std::__cxx11::basic_string<char, std::char_traits, std::allocator > >, std::equal_to<std::__cxx11::basic_string<char, std::char_traits, std::allocator > >, std::allocator<std::__cxx11::basic_string<char, std::char_traits, std::allocator > > > const&, std::vector<mxnet::NDArray, std::allocatormxnet::NDArray >, std::vector<mxnet::NDArray, std::allocatormxnet::NDArray >, std::vector<mxnet::NDArray, std::allocatormxnet::NDArray >, std::unordered_map<std::__cxx11::basic_string<char, std::char_traits, std::allocator >, mxnet::NDArray, std::hash<std::__cxx11::basic_string<char, std::char_traits, std::allocator > >, std::equal_to<std::__cxx11::basic_string<char, std::char_traits, std::allocator > >, std::allocator<std::pair<std::__cxx11::basic_string<char, std::char_traits, std::allocator > const, mxnet::NDArray> > >, mxnet::Executor*)+0x169) [0x7fdc77efd099]

[bt] (9) /home/simple/anaconda2/envs/py3/lib/python3.6/site-packages/mxnet-1.3.1-py3.6.egg/mxnet/libmxnet.so(MXExecutorSimpleBind+0x2c99) [0x7fdc77e85559]

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "detection_train.py", line 226, in <module>

train_net(parse_args())

File "detection_train.py", line 209, in train_net

num_epoch=end_epoch

File "/data/simple/simpledet/core/detection_module.py", line 959, in fit

for_training=True, force_rebind=force_rebind)

File "/data/simple/simpledet/core/detection_module.py", line 440, in bind

state_names=self._state_names)

File "/home/simple/anaconda2/envs/py3/lib/python3.6/site-packages/mxnet-1.3.1-py3.6.egg/mxnet/module/executor_group.py", line 279, in __init__

self.bind_exec(data_shapes, label_shapes, shared_group)

File "/home/simple/anaconda2/envs/py3/lib/python3.6/site-packages/mxnet-1.3.1-py3.6.egg/mxnet/module/executor_group.py", line 375, in bind_exec

shared_group))

File "/home/simple/anaconda2/envs/py3/lib/python3.6/site-packages/mxnet-1.3.1-py3.6.egg/mxnet/module/executor_group.py", line 662, in _bind_ith_exec

shared_buffer=shared_data_arrays, **input_shapes)

File "/home/simple/anaconda2/envs/py3/lib/python3.6/site-packages/mxnet-1.3.1-py3.6.egg/mxnet/symbol/symbol.py", line 1528, in simple_bind

raise RuntimeError(error_msg)

RuntimeError: simple_bind error. Arguments:

data: (3, 3, 800, 1200)

im_info: (3, 3)

gt_bbox: (3, 300, 5)

valid_ranges: (3, 3, 2)

rpn_cls_label: (3, 3, 56250)

rpn_reg_target: (3, 3, 60, 50, 75)

rpn_reg_weight: (3, 3, 60, 50, 75)

[11:04:07] src/storage/storage.cc:65: Check failed: e == cudaSuccess || e == cudaErrorCudartUnloading CUDA: invalid device ordinal

Hi,

I am using the pretrained faster_r50v1_fpn_1x weights and want to fine-tune on my dataset, which has 1+2 classes. When I change the config from 1+80 to 1+2, the code hits an "infer_shape error". How do I specify that the last layer should change, or how should I change the config instead? Thank you.

Sometimes RcnnL1 is NaN for the first 100 epochs; after that the loss is normal. Why does this happen?
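If the error comes from loading 81-class classifier weights into a 3-class head (an assumption; the log is not shown), a common workaround is to drop every pretrained array whose shape no longer matches the new model, so only the mismatched layers start from scratch. The sketch uses NumPy arrays as stand-ins for MXNet `arg_params`; the parameter names `conv1_weight` and `bbox_cls_logit_weight` are hypothetical and not taken from simpledet.

```python
import numpy as np

def drop_mismatched_params(pretrained, expected_shapes):
    """Keep pretrained arrays whose name and shape match the new model.

    pretrained: dict name -> np.ndarray (stand-in for MXNet arg_params)
    expected_shapes: dict name -> shape tuple of the new (e.g. 1+2 class) model
    """
    kept, dropped = {}, []
    for name, value in pretrained.items():
        if expected_shapes.get(name) == value.shape:
            kept[name] = value
        else:
            dropped.append(name)  # missing from the new model or wrong shape
    return kept, dropped

# Hypothetical names: the backbone layer matches, the 81-way classifier does not.
pretrained = {"conv1_weight": np.zeros((64, 3, 7, 7)),
              "bbox_cls_logit_weight": np.zeros((81, 1024))}
expected = {"conv1_weight": (64, 3, 7, 7),
            "bbox_cls_logit_weight": (3, 1024)}
kept, dropped = drop_mismatched_params(pretrained, expected)
print(sorted(kept), dropped)
```

In plain MXNet, the filtered dict could then be passed as `arg_params` to `Module.init_params(initializer=..., arg_params=kept, allow_missing=True)`, so the dropped layers are filled by the initializer; how simpledet's own training script exposes this is not something the sketch assumes.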

sudo nvidia-docker run -it -v $HOST-SIMPLEDET-DIR:$CONTAINER-WORKDIR rogerchen/simpledet /usr/bin/zsh

[sudo] password for koufeitian:

docker: Error response from daemon: invalid volume specification: '-SIMPLEDET-DIR:-WORKDIR': invalid mount config for type "volume": invalid mount path: '-WORKDIR' mount path must be absolute.

See 'docker run --help'.

For the scheduler setting, what is the meaning of '16' in

    lr_iter = [60000 * 16 // (len(KvstoreParam.gpus) * KvstoreParam.batch_image),
               80000 * 16 // (len(KvstoreParam.gpus) * KvstoreParam.batch_image)]

Why is it 16? Thanks.

    class OptimizeParam:
        class optimizer:
            type = "sgd"
            lr = 0.01 / 8 * len(KvstoreParam.gpus) * KvstoreParam.batch_image
            momentum = 0.9
            wd = 0.0001
            clip_gradient = 35

        class schedule:
            begin_epoch = 0
            end_epoch = 6
            lr_iter = [60000 * 16 // (len(KvstoreParam.gpus) * KvstoreParam.batch_image),
                       80000 * 16 // (len(KvstoreParam.gpus) * KvstoreParam.batch_image)]

        class warmup:
            type = "gradual"
            lr = 0.0
            iter = 750 * 16 // (len(KvstoreParam.gpus) * KvstoreParam.batch_image)
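Reading the config, 16 appears to be the reference total batch size: 8 GPUs x 2 images per GPU, which is also the setting where lr = 0.01 / 8 x 16 = 0.02. Dividing by the actual total batch keeps the number of images seen before each LR drop (and during warmup) constant, while the LR scales linearly with the batch. This is an interpretation of the config, not documented behavior; the helper below is a hypothetical re-implementation of the same formulas.

```python
def schedule(num_gpus, batch_image, base_lr=0.01, ref_batch=16):
    """Re-derive the config's values: LR grows with total batch, iterations shrink."""
    total_batch = num_gpus * batch_image
    lr = base_lr / 8 * total_batch
    lr_iter = [60000 * ref_batch // total_batch, 80000 * ref_batch // total_batch]
    warmup_iter = 750 * ref_batch // total_batch
    return lr, lr_iter, warmup_iter

for gpus, imgs in [(8, 2), (4, 2)]:
    lr, lr_iter, warmup = schedule(gpus, imgs)
    # Images processed before the first LR drop stays 60000 * 16 in every setting.
    print(gpus, imgs, round(lr, 6), lr_iter, warmup, lr_iter[0] * gpus * imgs)
```

With 4 GPUs instead of 8, the LR halves and every iteration count doubles, so the training covers the same number of images.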

Hi,

I want to test tridentnet and I run:

python3 detection_test.py --config config/tridentnet_r101v2c4_c5_multiscale_addminival_3x_fp16.py

I get this error:

Traceback (most recent call last):

File "detection_test.py", line 4, in <module>

from core.detection_input import Loader

File "/media/jack/code/simpledet/core/detection_input.py", line 10, in <module>

from operator_py.cython.bbox import bbox_overlaps_cython

ImportError: No module named 'operator_py.cython.bbox'

How should I use TridentNet? Can you help me?

I used nvidia-docker to run the code, and this is the output:

02-17 23:40:30 Epoch[0] Batch [9540] Speed: 18.53 samples/sec Train-RpnAcc=0.958210, RpnL1=0.420432, RcnnAcc=0.871656, RcnnL1=1.303369,

02-17 23:40:42 Epoch[0] Batch [9550] Speed: 13.73 samples/sec Train-RpnAcc=0.958218, RpnL1=0.420353, RcnnAcc=0.871662, RcnnL1=1.303065,

02-17 23:41:00 Epoch[0] Batch [9560] Speed: 8.70 samples/sec Train-RpnAcc=0.958227, RpnL1=0.420287, RcnnAcc=0.871672, RcnnL1=1.302830,

02-17 23:41:09 Epoch[0] Batch [9570] Speed: 17.01 samples/sec Train-RpnAcc=0.958238, RpnL1=0.420242, RcnnAcc=0.871690, RcnnL1=1.302588,

root@1e2726dafa6a /o/i/u/d/simpledet#

My training used the config tridentnet_r50v2c4_c5_1x, which was set to train for 6 epochs on COCO 2014, but it stopped at Epoch[0] without printing any information.

Additionally, I found that in ./detection_train.py: 213, the mod.fit call passes num_epoch=end_epoch. Is that configured correctly?

Hi, I am new to object detection. I noticed that you published a tutorial for training on the COCO dataset. Is there a way to fine-tune on my own dataset with a pretrained ImageNet/COCO model? Also, is there a tutorial on running inference on a single new image for deployment?


ModuleNotFoundError: No module named 'mxnext'

What is mxnext?

@RogerChern hi, thanks for your great work.

I ran the code recently.

Without any modifications to the config files (except reducing the number of GPUs from 8 to 4), my results are:

| Method | Backbone | Head | Result | Config file |
| --- | --- | --- | --- | --- |
| Faster | R50v2-C4 | C5-256ROI | 33.9 | faster_r50v2c4_c5_256roi_1x.py |
| Faster | R101v2-C4 | C5-256ROI | 38.9 | faster_r101v2c4_c5_256roi_1x.py |

These are quite different from the accuracy you report in MODEL_ZOO.md (see below).

Moreover, they also differ from the numbers in your TridentNet paper (I believe they are 33.2 and 37.9 respectively).

Could you please give some clarification? Thank you.


good job

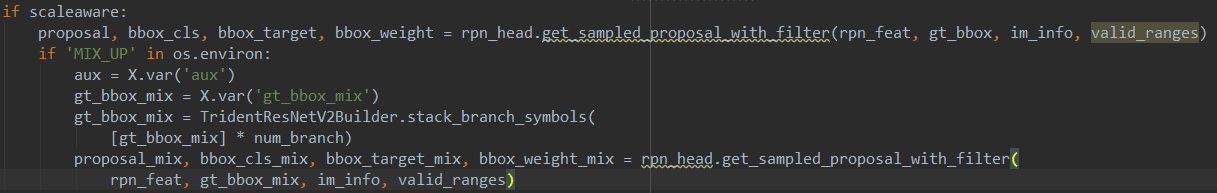

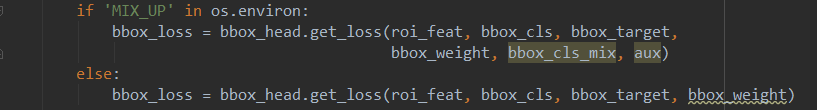

Thanks for the excellent work. I got an error from the proposal_target_v2 op when adding mixup to training. Could you please give me some advice?

Thanks.

My code (models/tridentnet/builder.py):

I added gt_bbox_mix and aux to input_record to record the mixup GT boxes and the mix weight, computed the loss for both the normal gt_bbox label and the gt_bbox_mix label, and finally combined the losses by weight.

When computing the gt_bbox_mix label, this error occurred:

File "/ML/tian/simpledet/simpledet_code/core/detection_metric.py", line 50, in update

pred_label = mx.ndarray.argmax_channel(pred).astype('int32').asnumpy().reshape(-1)

File "/ML/tian/venv_mxnet/lib/python3.6/site-packages/mxnet-1.3.1-py3.6.egg/mxnet/ndarray/ndarray.py", line 1972, in asnumpy

ctypes.c_size_t(data.size)))

File "/ML/tian/venv_mxnet/lib/python3.6/site-packages/mxnet-1.3.1-py3.6.egg/mxnet/base.py", line 251, in check_call

raise MXNetError(py_str(_LIB.MXGetLastError()))

mxnet.base.MXNetError: [06:52:50] src/operator/./proposal_target_v2-inl.h:138: Check failed: req[proposal_target_v2_enum::kRoiOutput] == kWriteTo (0 vs. 1)

import mxnet as mx

import mxnext as X

Where is the package 'mxnext'?

The code in 'incubator-mxnet-master' was recompiled successfully following 'Setup from Scratch' in INSTALL.md; after "make -j4", libmxnet.so and libmxnet.a were created. But after running

cd python

python3 setup.py install

and then running the simpledet code, it shows

"AttributeError: module 'mxnet.symbol.contrib' has no attribute 'ROIAlign_v2'"

I ran the command "grep -r contrib.ROIAlign_v2", and it was found in libmxnet.so and libmxnet.a.

Is any modification needed in "incubator-mxnet-master/python"?

d/src/kvstore/kvstore.o

In file included from src/kvstore/kvstore.cc:35:0:

src/kvstore/./kvstore_nccl.h:30:18: fatal error: nccl.h: No such file or directory

compilation terminated.

Makefile:451: recipe for target 'build/src/kvstore/kvstore.o' failed

make: *** [build/src/kvstore/kvstore.o] Error 1

This error happened when I tried to run make.

First, thank you for sharing this excellent work. Could you share some logs from training Faster R-CNN, Mask R-CNN or TridentNet? I want to train the model on our own dataset, and the log information would help me tune the parameters. Thanks.

Hi,

The module does not implement any validation; eval_data is never used. Will there be an update for this?

https://github.com/TuSimple/simpledet/blob/master/core/detection_module.py

python3 detection_train.py --config config/retina_r50v1_fpn_1x.py

[Warning] Your mxnet does not support ProposalTarget

[Warning] Your mxnet does not support DecodeBBox

[Warning] Your mxnet does not support BBoxNorm

[Warning] Your mxnet does not support FocalLoss

Traceback (most recent call last):

File "detection_train.py", line 229, in <module>

train_net(parse_args())

File "detection_train.py", line 21, in train_net

transform, data_name, label_name, metric_list = config.get_config(is_train=True)

File "/home/lc/work/simpledet/config/retina_r50v1_fpn_1x.py", line 112, in get_config

train_sym = detector.get_train_symbol(backbone, neck, rpn_head)

File "/home/lc/work/simpledet/models/retinanet/builder.py", line 23, in get_train_symbol

loss = head.get_loss(feat, rpn_cls_label, rpn_reg_target, rpn_reg_weight)

File "/home/lc/work/simpledet/models/retinanet/builder.py", line 275, in get_loss

cls_loss = X.focal_loss(

AttributeError: module 'mxnext' has no attribute 'focal_loss'

But my folder /home/lc/work/simpledet/operator_cxx/contrib does contain focal_loss.cc, focal_loss.cu and focal_loss.o.

For plain Faster R-CNN, when I fit my dataset with my own Faster R-CNN and with the Faster R-CNN implemented in this project, using the same parameter settings and the same network structure, the results differ significantly. Do you think I should set different parameters? Do you have any advice? Thanks.
