This might be related to another issue here but I seem to be getting enough differences that I'm doing this as a new issue.

I am very new to all of this Python and coding stuff so I might have messed something up with the instillation, but it was all followed correctly to my knowledge. This is all on windows 10. I was also running all of the commands in command prompt because when I tried in Python it kept giving me syntax errors. I also made my virtual environment with a different command than what is shown in the installation guide. py -m venv (environment name) was the command I used in command prompt. When I tried what was suggested, both in and out of python, this is what I got:

C:\video editing\Storytoolkit>python3.9 virtualenv -p python3.9 venv 'python3.9' is not recognized as an internal or external command, operable program or batch file.

C:\video editing\Storytoolkit>py Python 3.9.13 (tags/v3.9.13:6de2ca5, May 17 2022, 16:36:42) [MSC v.1929 64 bit (AMD64)] on win32 Type "help", "copyright", "credits" or "license" for more information.

>>> python3.9 virtualenv -p python3.9 venv

File "<stdin>", line 1

python3.9 virtualenv -p python3.9 venv

^

SyntaxError: invalid syntax

Not sure if any of the above is actually responsible for the this issue or not but I included it to be safe

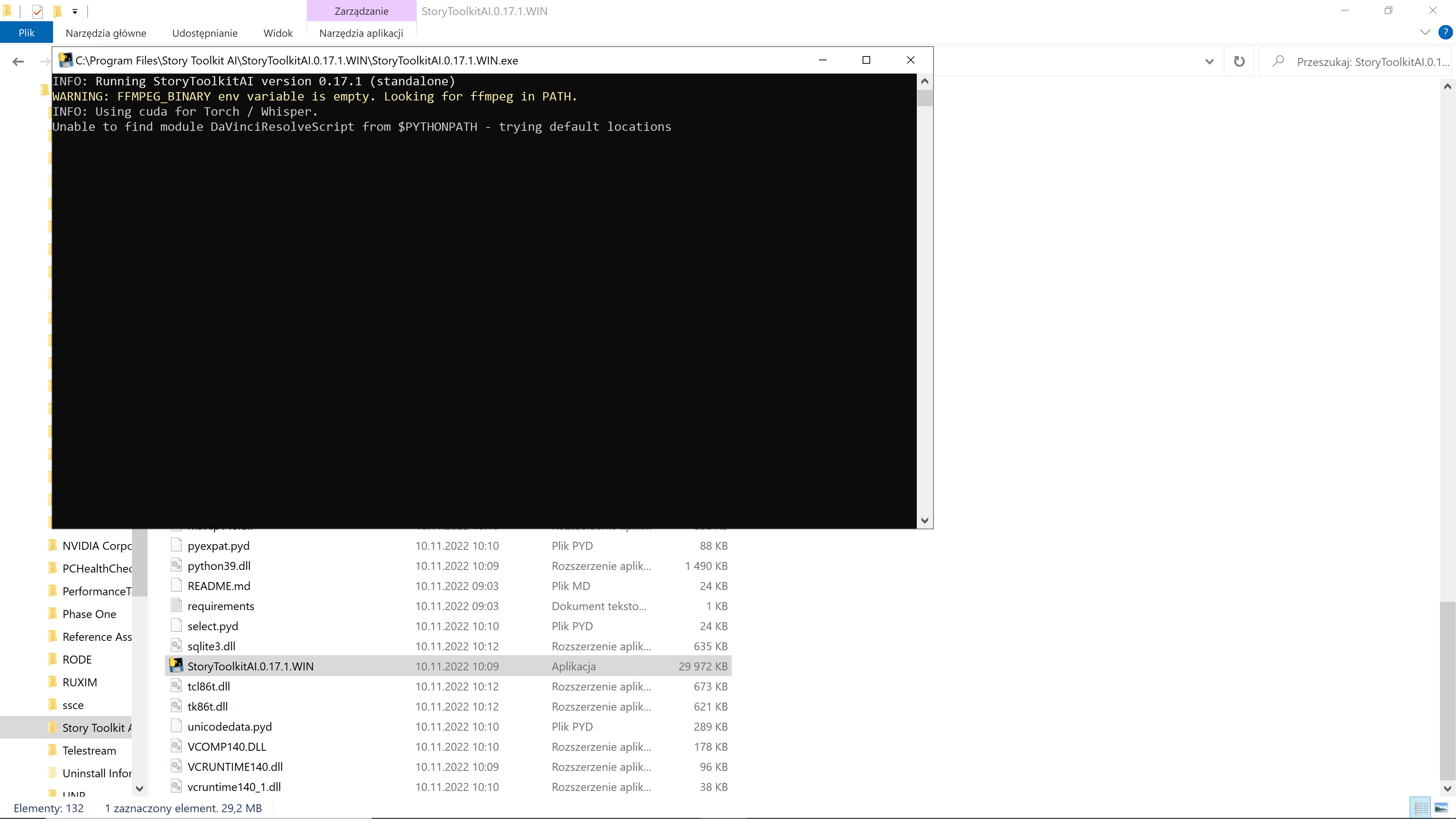

For the issue itself, whenever I run the command python StoryToolkitAI/app.py in my virtual environment, I get this:

(transcript) C:\video editing\Storytoolkit>python StoryToolkitAI/app.py

INFO: Running StoryToolkitAI version 0.16.16

INFO: Using cpu for Torch / Whisper.

Unable to find module DaVinciResolveScript from $PYTHONPATH - trying default locations

Unable to find module DaVinciResolveScript - please ensure that the module DaVinciResolveScript is discoverable by python

For a default DaVinci Resolve installation, the module is expected to be located in: C:\ProgramData\Blackmagic Design\DaVinci Resolve\Support\Developer\Scripting\Modules\

Traceback (most recent call last):

File "C:\video editing\Storytoolkit\StoryToolkitAI\python_get_resolve.py", line 13, in GetResolve import DaVinciResolveScript as bmd

ModuleNotFoundError: No module named 'DaVinciResolveScript'

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "C:\video editing\Storytoolkit\StoryToolkitAI\python_get_resolve.py", line 27, in GetResolve bmd = imp.load_source('DaVinciResolveScript', expectedPath+"DaVinciResolveScript.py")

File "C:\Users\username\AppData\Local\Programs\Python\Python39\lib\imp.py", line 171, in load_source module = _load(spec)

File "<frozen importlib._bootstrap>", line 711, in _load

File "<frozen importlib._bootstrap>", line 680, in _load_unlocked

File "<frozen importlib._bootstrap_external>", line 850, in exec_module

File "<frozen importlib._bootstrap>", line 228, in _call_with_frames_removed

File "C:\ProgramData\Blackmagic Design\DaVinci Resolve\Support\Developer\Scripting\Modules\DaVinciResolveScript.py", line 36, in <module>

raise ImportError("Could not locate module dependencies")

ImportError: Could not locate module dependencies

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "C:\video editing\Storytoolkit\StoryToolkitAI\app.py", line 3877, in poll_resolve_data resolve_data = mots_resolve.get_resolve_data(silent=True)

File "C:\video editing\Storytoolkit\StoryToolkitAI\mots_resolve.py", line 89, in get_resolve_data = initialize_resolve(silent=silent)

File "C:\video editing\Storytoolkit\StoryToolkitAI\mots_resolve.py", line 34, in initialize_resolve resolve = GetResolve()

File "C:\video editing\Storytoolkit\StoryToolkitAI\python_get_resolve.py", line 32, in GetResolve sys.exit()

SystemExit

WARNING: Resolve is not reachable.

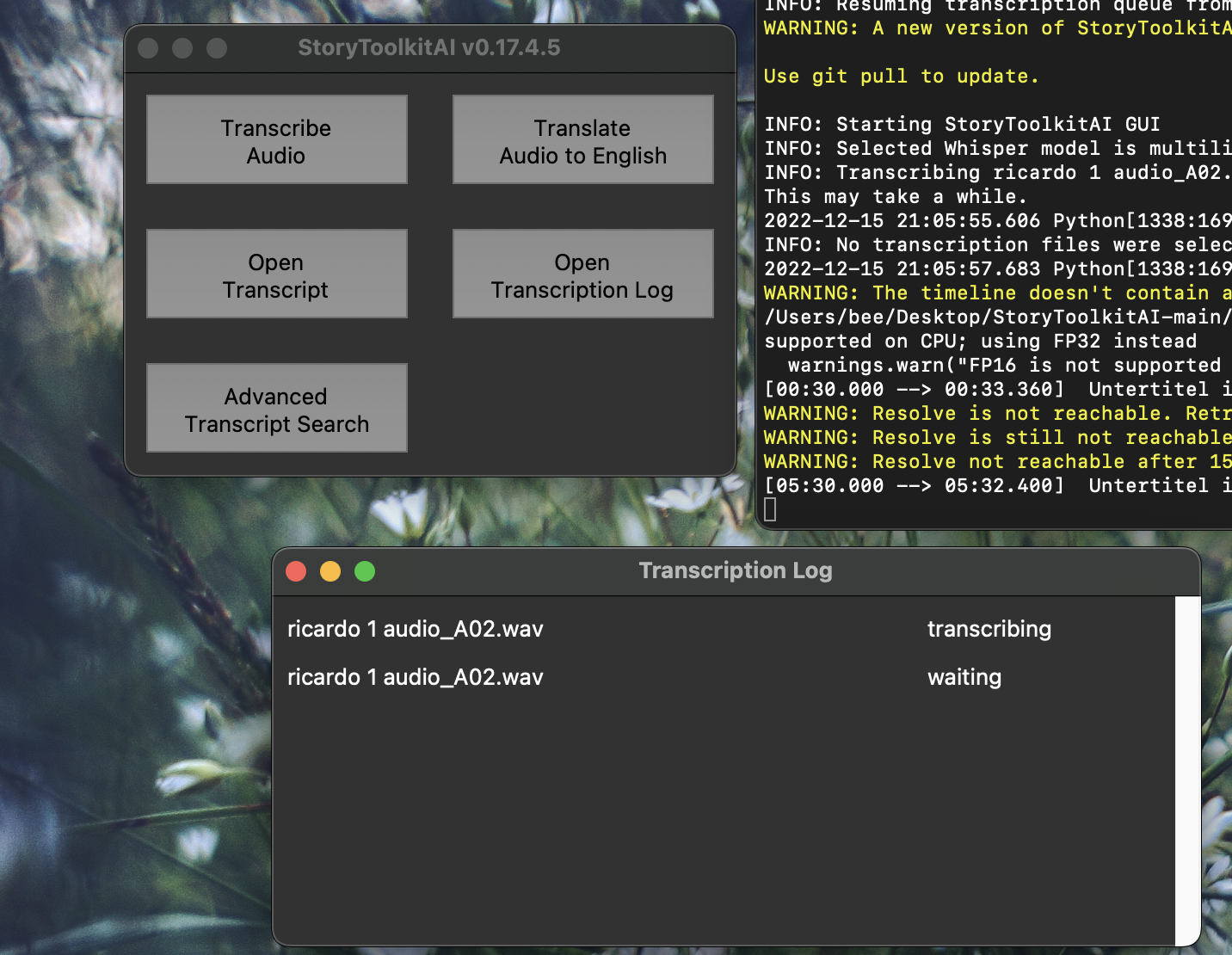

INFO: Starting StoryToolkitAI GUI

Sorry if this is a lot of info, but I'm not sure what is important and what isn't.