Welcome to the landing page of Opaque SQL! Opaque SQL is a package for Apache Spark SQL that enables processing over encrypted DataFrames using the OpenEnclave framework.

Note that Opaque SQL requires the MC2 Client in order to securely run an encrypted query. For a quickstart on that end-to-end workflow, please see the README in the MC2 Client repo.

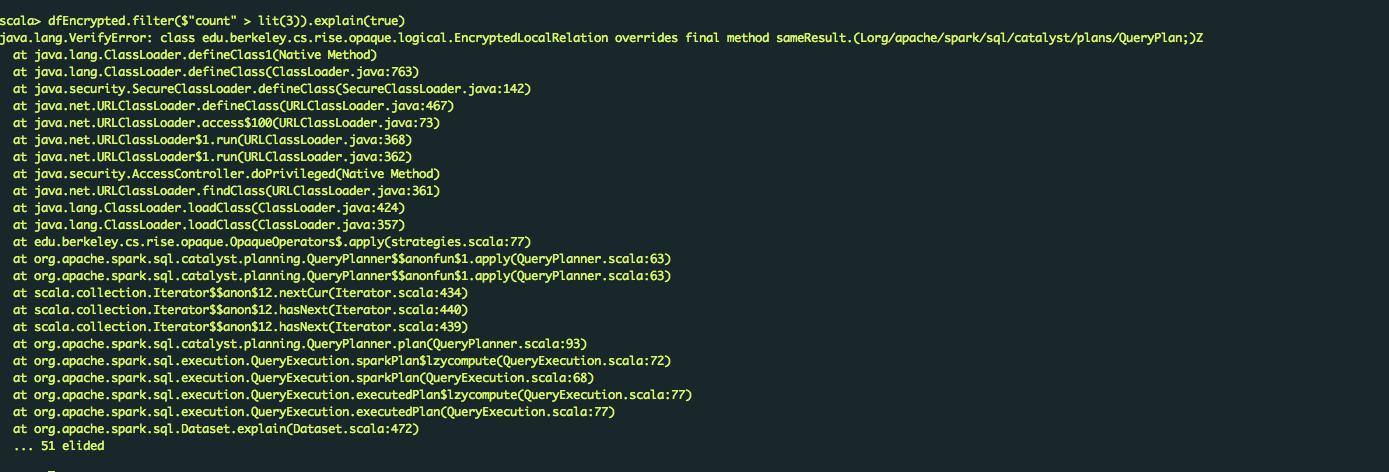

Similar to Apache Spark SQL, Opaque SQL offers an encrypted DataFrame abstraction. Users familiar with the Spark API can run queries on encrypted DataFrames using the same API. The main difference is that we support saving and loading encrypted DataFrames, but not actions such as `.collect` or `.show`. Here is an example script:

```scala
// Import hooks to Opaque SQL
import edu.berkeley.cs.rise.opaque.implicits._

import org.apache.spark.sql.types._
import org.apache.spark.sql.functions.lit // for lit(...); auto-imported in spark-shell
import spark.implicits._                  // for the $"column" syntax; auto-imported in spark-shell

// Load an encrypted DataFrame (saved using the MC2 Client)
val df_enc = spark.read.format("edu.berkeley.cs.rise.opaque.EncryptedSource").load("/tmp/opaquesql.csv.enc")

// Run a filter query on the encrypted DataFrame
val result = df_enc.filter($"Age" < lit(30))

// Save the encrypted result to the result directory on the cloud
result.write.format("edu.berkeley.cs.rise.opaque.EncryptedSource").save("/tmp/opaque_sql_result")
```

For more details on how to use Opaque SQL, please refer to this section.

For more details on building, using, and contributing, please see our documentation.

The open-source code is based on our NSDI 2017 paper.

Join the discussion on Slack or email us at [email protected].