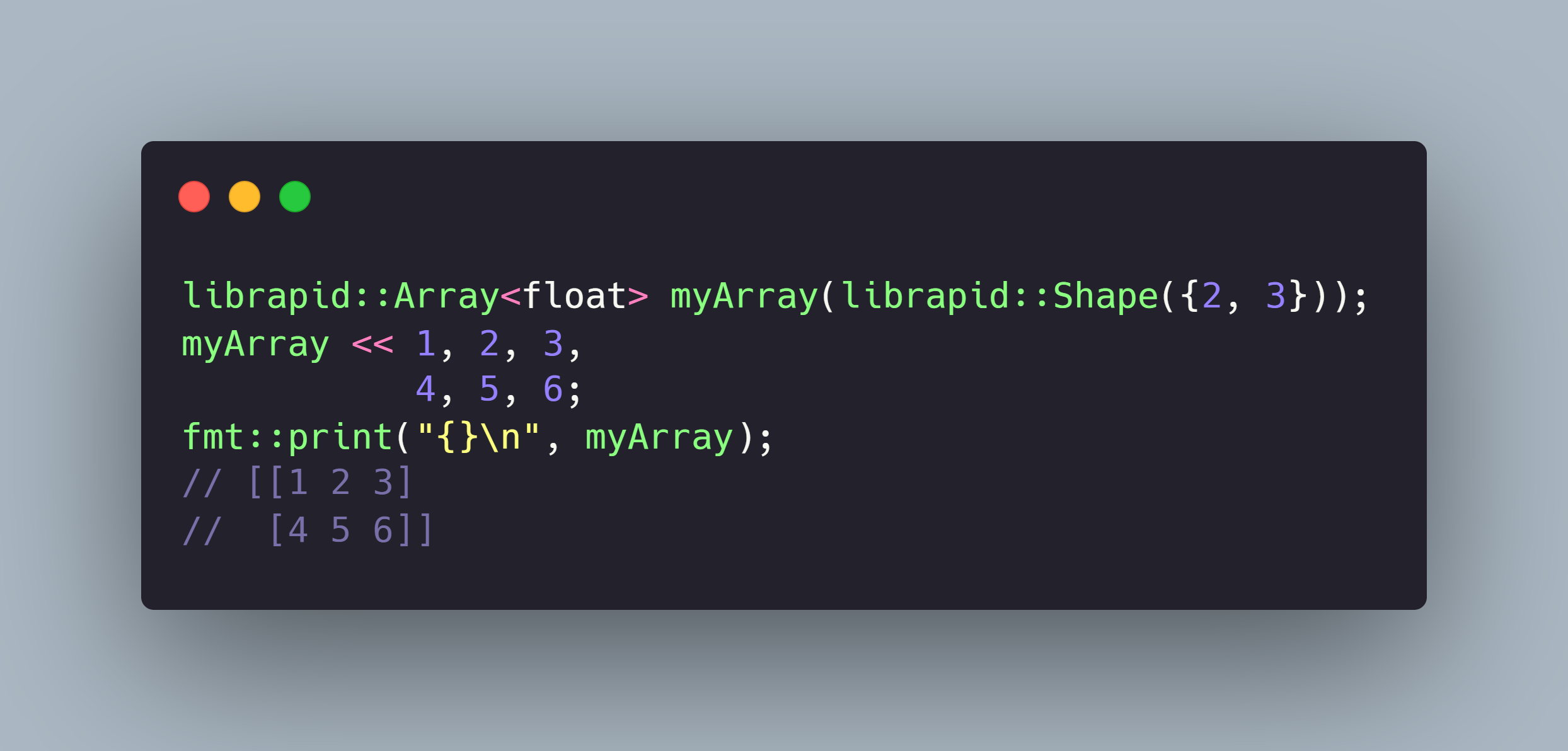

LibRapid is an extremely fast, highly optimised and easy-to-use C++ library for mathematics, linear algebra and more, with a powerful multidimensional array class at its core. Every part of LibRapid is designed to provide the best possible performance without the sacrifices in usability or functionality that other libraries often make.

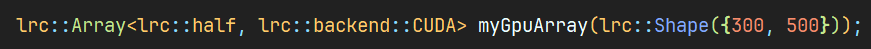

Everything in LibRapid is templated, so it works with almost any datatype you give it. In addition, LibRapid is engineered with compute power in mind, making it easy to get the most out of the hardware you have. All array operations are vectorised with SIMD instructions, parallelised via OpenMP, and can even be run on external devices via CUDA and OpenCL. LibRapid also supports a range of BLAS libraries to make linear algebra operations even faster.

What's more, LibRapid provides lazy evaluation of expressions, allowing us to perform optimisations at compile time to further improve performance. For example, `dot(3 * a, 2 * transpose(b))` will be compiled into a single GEMM call, with `alpha=6`, `beta=0`, `transA=false` and `transB=true`.

If you need the best possible performance and an intuitive interface that doesn't sacrifice functionality, LibRapid is for you. You can fine-tune LibRapid's performance via the CMake configuration and change the device used for a computation by changing a single template parameter (e.g. `librapid::backend::CUDA` for CUDA compute).

Additionally, LibRapid provides highly optimised vectors, complex numbers, multiprecision arithmetic (via custom forks of MPIR and MPFR) and a huge range of mathematical functions that operate on all of these types. It also provides a range of linear algebra functions, machine learning activation functions, and more.

LibRapid is a good fit:

- When you need the best possible performance

- When you want to write one program that can run on multiple devices

- When you want to use a single library for all of your mathematical needs

- When you want a simple interface to develop with

LibRapid may not be the right choice:

- When you need a rigorously tested and documented library

  - LibRapid is still in early development, so it's not yet ready for production use. That said, we have a wide range of tests that run on every push to the repository, and we're working on improving the documentation.

- When you need a well-established library

  - LibRapid hasn't been around for long, and we have a very small community.

- When you need a wider range of functionality

  - While LibRapid implements a lot of functions, some features are not yet present in the library. If you need these features, you may want to look elsewhere. If you would still like to use LibRapid, feel free to open an issue and I'll do my best to implement it.

Documentation

LibRapid uses Doxygen to parse the source code and extract documentation information. We then use a combination of Breathe, Exhale and Sphinx to generate a website from this data. The final website is hosted on Read the Docs.

The documentation is rebuilt every time the source code changes, so it is always up to date.

At present, LibRapid C++ is developed solely by me (pencilcaseman).

I'm currently a first-year university student, so time and money are both tight. I work on LibRapid in my spare time and can't spend as much time on it as I'd like.

If you like the library and would like to support its development, feel free to create issues or pull requests, or reach out to me via Discord and we can chat about new features. Any support is massively appreciated.

The roadmap is a rough outline of what I want to implement in the library and by when, but please don't count on features arriving quickly. I can't promise I'll have time to implement everything as soon as I'd like (I'll try my best, though!).

If you have any feature requests or suggestions, feel free to create an issue describing them, and I'll try to get them working as soon as possible. If you really need something implemented quickly, a small donation would be appreciated and would allow me to bump it to the top of my to-do list.

LibRapid has a few dependencies that improve functionality and performance. Some of these are optional and can be configured with a CMake option. The following dependencies are submodules of the library, so you don't need to install them manually:

- fmt - Advanced string formatting

- doxygen-awesome-css - A theme for the Doxygen docs

- CLBlast - An OpenCL BLAS library

- Vc - SIMD primitives for C++

- Jitify - A CUDA JIT compiler

- pocketfft - A fast, lightweight FFT library

- scnlib - Advanced string parsing

LibRapid can also make use of the following libraries:

- OpenMP - Multi-threading library

- CUDA - GPU computing library

- OpenCL - Multi-device computing library

- OpenBLAS - Highly optimised BLAS library

- MPIR - Arbitrary precision integer arithmetic

- MPFR - Arbitrary precision real arithmetic

- FFTW - Fast(est) Fourier Transform library

Thanks to JetBrains for providing LibRapid with free licenses for their amazing tools!