This is the official implementation of our ICCV 2021 paper, PlaneTR: Structure-Guided Transformers for 3D Plane Recovery.

- 2023.02.09: Uploaded the depth evaluation code for the NYUV2 dataset.

- 2021.08.08: The visualization code is now available. You can find it in 'disp.py'. A simple example of how to visualize the results is shown in 'eval_planeTR.py'.

Supplement 2D/3D visualization code.

Clone the repository:

git clone https://github.com/IceTTTb/PlaneTR3D.git

We use Python 3.6 and PyTorch 1.6.0 in our implementation. Please install the dependencies:

conda create -n planeTR python=3.6

conda activate planeTR

conda install pytorch=1.6.0 torchvision=0.7.0 torchaudio cudatoolkit=10.2 -c pytorch

pip install -r requirements.txt

We train and test our network on the plane dataset created by PlaneNet. We follow PlaneAE to convert the .tfrecords to .npz files. Please refer to PlaneAE for more details.

We generate line segments using the state-of-the-art line segment detection algorithm HAWP with its pretrained model. The processed line segment data we used can be downloaded here.

The structure of the data folder should be

plane_data/

--train/*.npz

--train_img/*

--val/*.npz

--val_img/*

--train.txt

--val.txt
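Before training, it can help to confirm that the folder matches the layout above. The following is a minimal sketch and not part of the repository: the helper name `find_missing_entries` is hypothetical, and it only checks that the listed entries exist and that 'train/' and 'val/' each contain at least one .npz file.

```python
from pathlib import Path

# Entries expected directly under plane_data/, taken from the layout above.
EXPECTED_DIRS = ("train", "train_img", "val", "val_img")
EXPECTED_FILES = ("train.txt", "val.txt")


def find_missing_entries(root_dir):
    """Return a list of human-readable problems found under root_dir.

    Hypothetical helper for illustration only. An empty list means the
    folder matches the structure described above.
    """
    root = Path(root_dir)
    problems = []
    for name in EXPECTED_DIRS:
        if not (root / name).is_dir():
            problems.append("missing folder: " + name + "/")
    for name in EXPECTED_FILES:
        if not (root / name).is_file():
            problems.append("missing file: " + name)
    # train/ and val/ should hold the converted .npz samples.
    for name in ("train", "val"):
        folder = root / name
        if folder.is_dir() and not any(folder.glob("*.npz")):
            problems.append("no .npz files in: " + name + "/")
    return problems
```

Calling `find_missing_entries("plane_data")` from the repository root returns an empty list when everything is in place; otherwise each returned string names one missing item.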

Download the pretrained model of HRNet and place it under the 'ckpts/' folder.

Change the 'root_dir' in config files to the path where you save the data.

Run the following command to train our network on one GPU:

CUDA_VISIBLE_DEVICES=0 python train_planeTR.py

Run the following command to train our network on multiple GPUs:

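CUDA_VISIBLE_DEVICES=0,1,2 python -m torch.distributed.launch --nproc_per_node=3 --master_port 295025 train_planeTR.py

Note that '--master_port' should be a free TCP port. Valid port numbers do not exceed 65535, so adjust the value above if the launcher rejects it.

Download the pretrained model here and place it under the 'ckpts/' folder.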

Change the 'resume_dir' in 'config_planeTR_eval.yaml' to the path where you save the weight file.

Change the 'root_dir' in config files to the path where you save the data.
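The config edits above can also be scripted. The sketch below is not part of the repository: the helper `set_yaml_value` is hypothetical, and it assumes each key sits on its own line in the form `key: value`, which should be checked against the actual config files. It rewrites only the matching lines as text instead of parsing YAML, so all other lines stay untouched. The paths in the example are placeholders.

```python
import re


def set_yaml_value(text, key, value):
    """Return text with every `key: ...` line set to the given value.

    Hypothetical helper: matches plain `key: value` lines at any
    indentation and keeps that indentation. Anything after the colon on a
    matching line (including a trailing comment) is replaced. Raises
    KeyError if the key is not found, so a typo does not pass silently.
    """
    pattern = re.compile(
        r"^([ \t]*)" + re.escape(key) + r"[ \t]*:.*$", re.MULTILINE
    )
    # A function is used as the replacement so that backslashes in the
    # value (e.g. Windows paths) are not treated as regex escapes.
    new_text, count = pattern.subn(
        lambda m: "{}{}: '{}'".format(m.group(1), key, value), text
    )
    if count == 0:
        raise KeyError(key)
    return new_text


# Example on an in-memory string; the layout and paths are placeholders.
example = "dataset:\n  root_dir: ''\nresume_dir: ''\n"
example = set_yaml_value(example, "root_dir", "/path/to/plane_data")
example = set_yaml_value(example, "resume_dir", "ckpts/your_checkpoint.pt")
print(example)
```

To apply this to a real file, read 'config_planeTR_eval.yaml' into a string, pass it through the helper, and write the result back. Values containing a single quote would need extra escaping that this sketch does not handle.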

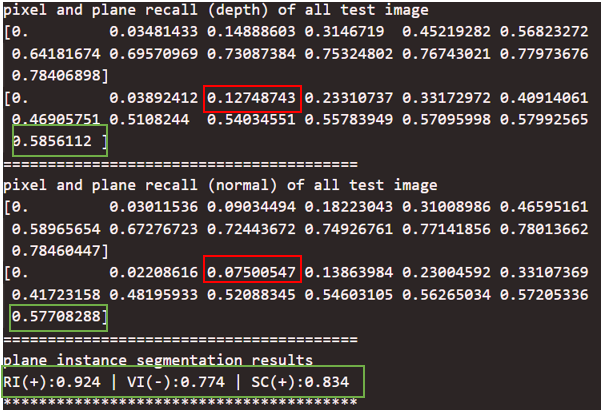

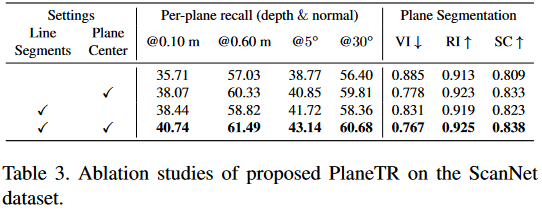

Run the following command to evaluate the performance:

CUDA_VISIBLE_DEVICES=0 python eval_planeTR.py

To evaluate depth on the NYUV2 dataset, first download the original data from here and the official train/test split from here. Then download our line detection results from here. After downloading all the data, place it under the 'nyudata/' folder. The structure of the data folder should be:

planeTR3D/

--nyudata/

----nyu_depth_v2_labeled.mat

----splits.mat

----line_info/

------*.txt

Run the following command to evaluate the depth performance:

CUDA_VISIBLE_DEVICES=0 python eval_nyudepth.py

Unfortunately, I lost the original detected line segments used in the paper. Thus, I regenerated the line segments on the NYUV2 dataset with the latest HAWPV3. The depth metrics with these regenerated line segments are:

| Rel | log10 | RMSE | d1 | d2 | d3 |
|---|---|---|---|---|---|
| 0.196 | 0.096 | 0.812 | 63.8 | 88.3 | 96.0 |
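For readers unfamiliar with the column names, the sketch below computes these metrics using their commonly used definitions from the depth estimation literature. It is an illustration in plain Python and not the code in 'eval_nyudepth.py': the function name `depth_metrics` and the flat-list inputs are assumptions, and details such as valid-pixel masking or depth clipping in the actual evaluation may differ.

```python
import math


def depth_metrics(pred, gt):
    """Compute Rel, log10, RMSE and the d1/d2/d3 accuracies.

    pred and gt are equal-length sequences of positive depth values in
    the same unit. d1, d2 and d3 are returned as percentages of values
    whose ratio max(pred/gt, gt/pred) is below 1.25, 1.25**2 and 1.25**3.
    """
    if len(pred) != len(gt) or len(pred) == 0:
        raise ValueError("pred and gt must be non-empty and of equal length")
    n = len(pred)
    pairs = list(zip(pred, gt))
    # Mean absolute relative error.
    rel = sum(abs(p - g) / g for p, g in pairs) / n
    # Mean absolute error in log10 space.
    log10 = sum(abs(math.log10(p) - math.log10(g)) for p, g in pairs) / n
    # Root mean squared error.
    rmse = math.sqrt(sum((p - g) ** 2 for p, g in pairs) / n)
    result = {"Rel": rel, "log10": log10, "RMSE": rmse}
    # Threshold accuracies on the symmetric ratio.
    ratios = [max(p / g, g / p) for p, g in pairs]
    for i in (1, 2, 3):
        hits = sum(1 for r in ratios if r < 1.25 ** i)
        result["d" + str(i)] = 100.0 * hits / n
    return result
```

Lower is better for Rel, log10 and RMSE, while higher is better for d1, d2 and d3.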

If you find our work useful in your research, please consider citing:

@inproceedings{tan2021planeTR,

title={PlaneTR: Structure-Guided Transformers for 3D Plane Recovery},

author={Tan, Bin and Xue, Nan and Bai, Song and Wu, Tianfu and Xia, Gui-Song},

booktitle = {International Conference on Computer Vision},

year={2021}

}

We thank the authors of PlaneAE, PlaneRCNN, interplane and DETR. Our implementation builds heavily on their code.