hvianna / audiomotion-analyzer

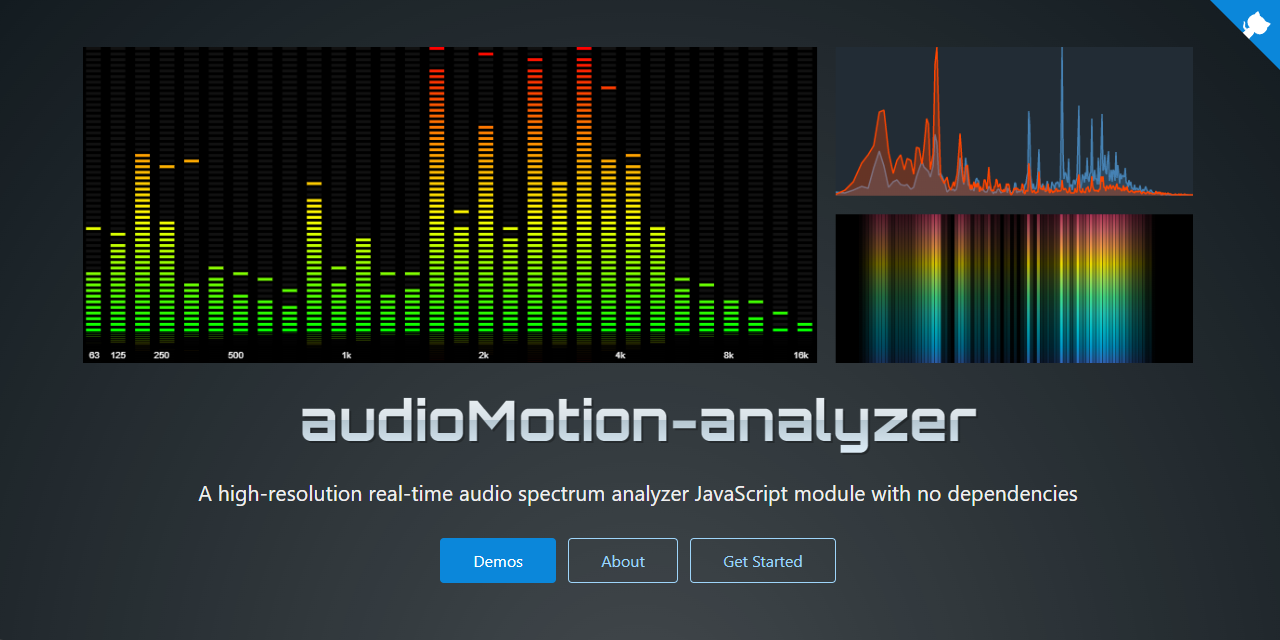

High-resolution real-time graphic audio spectrum analyzer JavaScript module with no dependencies.

Home Page: https://audioMotion.dev

License: GNU Affero General Public License v3.0