I'm an open source developer from Germany working on tooling for web-first game development. Here's an overview of my active projects. Follow my public roadmap to see what I'm working on right now, and what I will be working on next.

If you enjoy my work, please consider sponsoring me on GitHub!

- 🤖 Miniplex, an Entity Component System library designed for ease of use and developer ergonomics. Includes React bindings, but can also be used without a framework.

- 🚜 State Composer, a high-level finite state machine library for macro state in React applications.

- 🎃 Eventery, a lightweight, dependency-free, typed publish-subscribe event emitter for JavaScript/TypeScript.

- 🍍 Statery, a simple proxy-based state container for React.

- ⏰ Timeline Composer, a small collection of React components for orchestrating timelines.

- 🌈 Shader Composer, a library for creating GLSL shaders from a tree of JS primitives (think ShaderGraph et al., but in code).

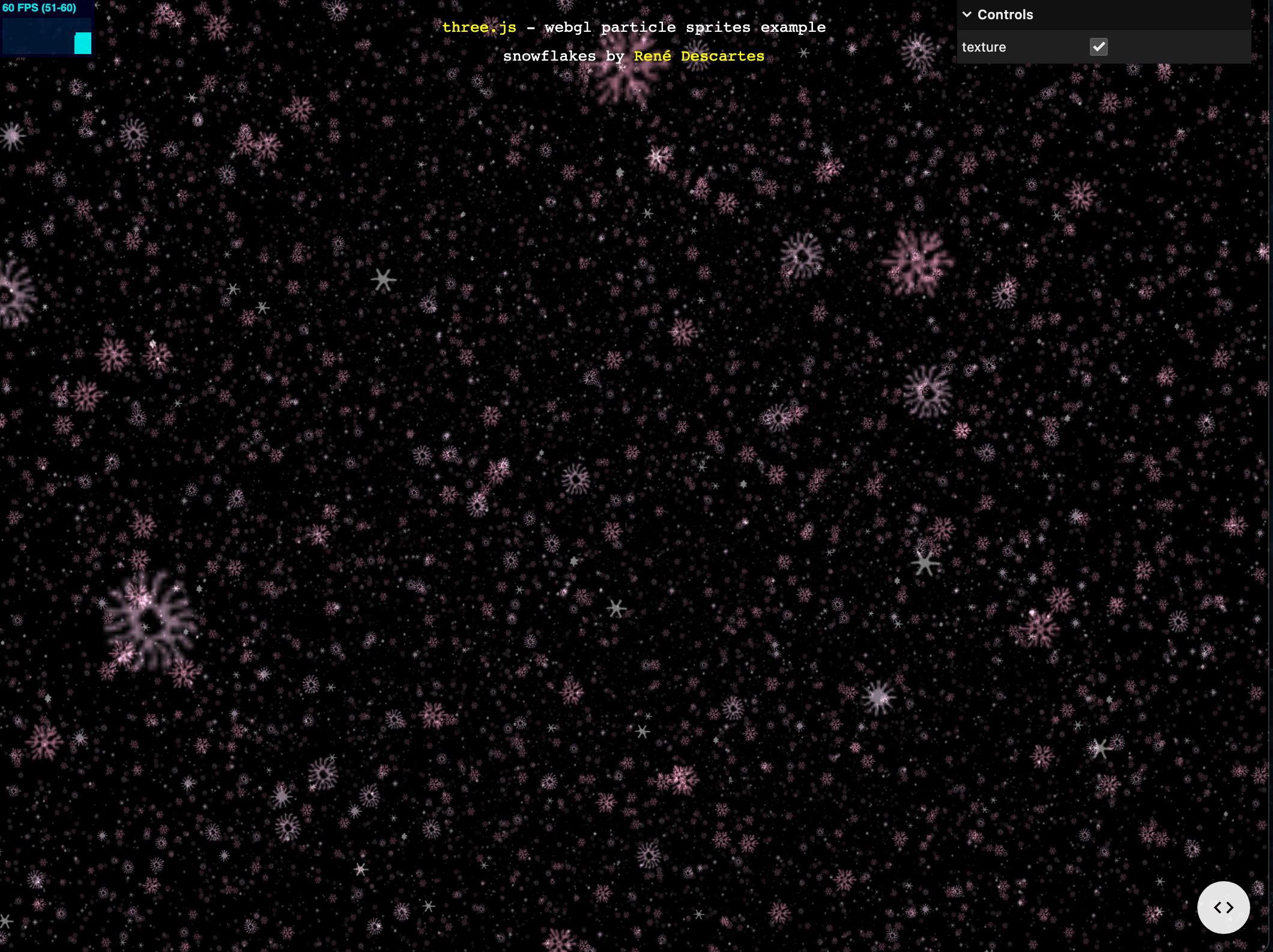

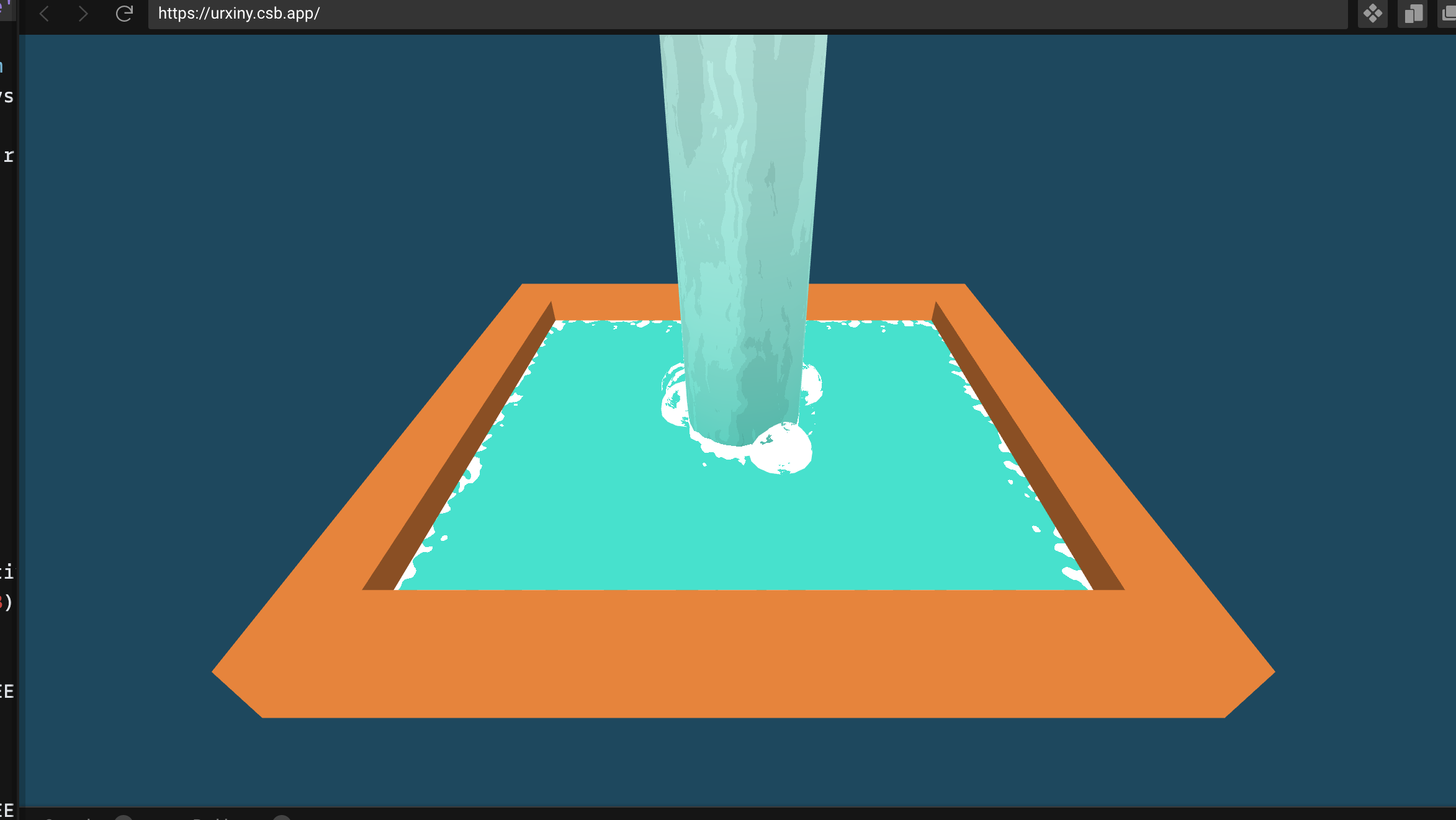

- 🎆 VFX Composer, a high-performance, game-ready visual effects library for Three.js and react-three-fiber.

- 🖼 Render Composer, a preconfigured, customizable render pipeline for react-three-fiber games.

- three-elements, Web Components wrappers for Three.js.

There are a bunch of ways you can get in touch with me:

- [email protected] for some old-school email

- @[email protected] on the Fediverse

- Via LinkedIn (I'm not even kidding)

- Find me on the Web Game Dev and Poimandres Discord servers