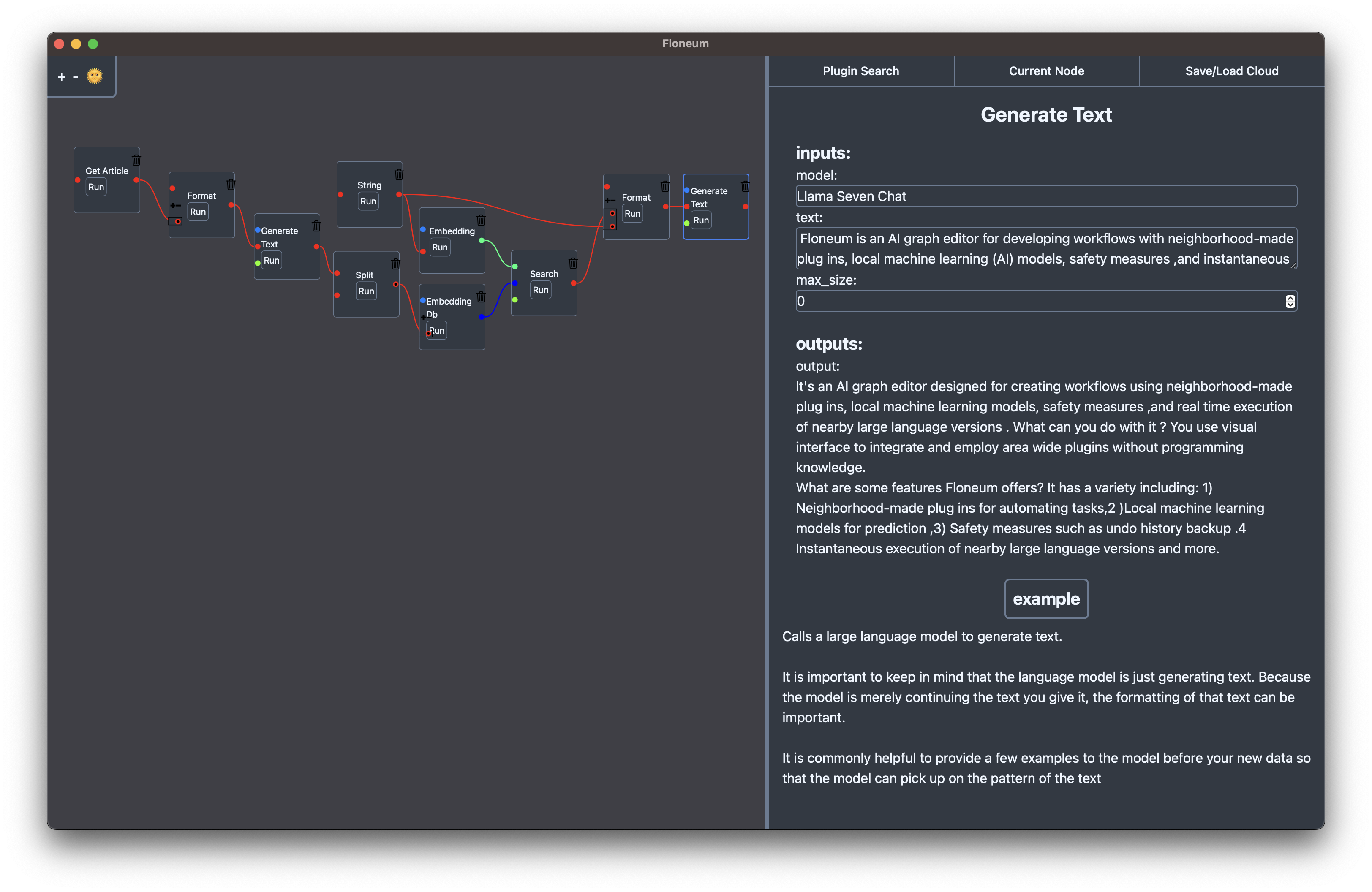

Floneum is a graph editor that makes it easy to develop your own AI workflows

- Visual interface: You can use Floneum without any knowledge of programming. The visual graph editor makes it easy to combine community-made plugins with local AI models

- Instantly run local large language models: Floneum does not require any external dependencies or even a GPU to run. It uses the `llm` library to run large language models locally, so you can run Floneum on your own data without worrying about privacy

- Plugins: By combining large language models with plugins, you can improve their performance and make models work better for your specific use case. All plugins run in an isolated environment so you don't need to trust any plugins you load. Plugins can only interact with their environment in a safe way

- Multi-language plugins: Plugins can be written in any language that compiles to WebAssembly. In addition to the API that can be accessed from any language, Floneum has a Rust wrapper with ergonomic macros that make it simple to create plugins

- Controlled text generation: Plugins can control the output of the large language models with a process similar to JSONformer or guidance. This allows plugins to force models to output valid JSON, or any other structure they define. This can be useful when communicating between a language model and a typed API
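To make the idea of controlled text generation concrete, here is a minimal, self-contained sketch in plain Rust. It is not Floneum's plugin API: the names `is_valid_prefix`, `pick_token`, and `generate`, the fixed `{"age": <digits>}` structure, and the hard-coded candidate scores are all assumptions made for illustration. At each step the "model" proposes scored tokens, and the constraint discards any token that would make the output an invalid prefix of the target structure.

```rust
// Toy constrained decoding: force output of the form {"age": <digits>}.
// All names here are hypothetical; this is not Floneum's or Kalosm's API.

const HEAD: &str = "{\"age\": ";

/// Returns true if `text` can still be extended into `{"age": <digits>}`.
fn is_valid_prefix(text: &str) -> bool {
    if text.len() <= HEAD.len() {
        return HEAD.starts_with(text);
    }
    if !text.starts_with(HEAD) {
        return false;
    }
    // After the head: one or more digits, then at most one closing brace.
    let rest = &text[HEAD.len()..];
    let digits = rest.trim_end_matches('}');
    let closers = rest.len() - digits.len();
    !digits.is_empty() && digits.bytes().all(|b| b.is_ascii_digit()) && closers <= 1
}

/// Returns true once the structure has been closed.
fn is_complete(text: &str) -> bool {
    text.ends_with('}') && is_valid_prefix(text)
}

/// Picks the highest-scoring candidate that keeps the output valid.
fn pick_token<'a>(so_far: &str, candidates: &[(&'a str, f32)]) -> Option<&'a str> {
    candidates
        .iter()
        .filter(|(tok, _)| is_valid_prefix(&format!("{so_far}{tok}")))
        .max_by(|a, b| a.1.total_cmp(&b.1))
        .map(|(tok, _)| *tok)
}

/// Runs the constrained loop over pre-scored candidates (a stand-in for a model).
fn generate(steps: &[Vec<(&str, f32)>]) -> String {
    let mut out = String::new();
    for candidates in steps {
        if is_complete(&out) {
            break;
        }
        match pick_token(&out, candidates) {
            Some(tok) => out.push_str(tok),
            None => break, // no candidate satisfies the constraint
        }
    }
    out
}

fn main() {
    // The unconstrained favourite at each step is not valid for the structure.
    let steps = vec![
        vec![("Sure! ", 0.9), ("{\"age\": ", 0.1)],
        vec![("forty-two", 0.8), ("42", 0.2)],
        vec![(" years", 0.7), ("}", 0.3)],
    ];
    println!("{}", generate(&steps)); // prints {"age": 42}
}
```

Even though the stand-in model prefers free-form text at every step, filtering the candidates guarantees that the result parses as the expected structure, which is what makes this approach useful when passing model output to a typed API.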

Download the latest release, run the binary, wait a few seconds for all of the plugins to download, and start building!

- If you are looking to use Floneum, you can read the User Documentation.

- If you are looking to develop plugins for Floneum, you can read the Developer Documentation.

Kalosm is a simple interface for pre-trained models in Rust that backs Floneum. It makes it easy to interact with pre-trained language, audio, and image models.

There are three different packages in Kalosm:

- `kalosm::language` - A simple interface for text generation and embedding models and surrounding tools. It includes support for search databases, and text collection from websites, RSS feeds, and search engines.

- `kalosm::audio` - A simple interface for audio transcription and surrounding tools. It includes support for microphone input and the `whisper` model.

- `kalosm::vision` - A simple interface for image generation and segmentation models and surrounding tools. It includes support for the `wuerstchen` and `segment-anything` models and integration with the image crate.

A complete guide for Kalosm is available on the Kalosm website, and examples are available in the examples folder.


- Install Rust

- Create a new project:

```sh
cargo new next-gen-ai
cd ./next-gen-ai
```

- Add Kalosm as a dependency:

```sh
cargo add kalosm
cargo add tokio --features full
```

- Add this code to your `main.rs` file:

```rust
use std::io::Write;

use kalosm::{*, language::*};

#[tokio::main]
async fn main() {
    // Start the Phi language model.
    let mut llm = Phi::start().await;
    let prompt = "The following is a 300 word essay about Paris:";
    print!("{}", prompt);

    // Stream the generated text, capping the output length at 1000.
    let stream = llm.stream_text(prompt).with_max_length(1000).await.unwrap();

    // Print the output word by word as it is generated.
    let mut words = stream.words();
    while let Some(text) = words.next().await {
        print!("{}", text);
        // Flush so each word appears immediately instead of waiting for a newline.
        std::io::stdout().flush().unwrap();
    }
}
```

- Run your application with:

```sh
cargo run --release
```

If you are interested in either project, you can join the Discord to discuss the project and get help.

- Report issues on our issue tracker.

- Help other users in the Discord.

- If you are interested in contributing, feel free to reach out on Discord.