Proof of concept of a mixed reality application for the Microsoft HoloLens 2 integrating object recognition using cloud computing and real-time on-device markerless object tracking. The augmented objects provide interaction using hand input, eye-gaze, and voice commands to verify the tracking result visually.

This work is part of the thesis "Mixed Reality Task Assistance for Pharmaceutical Laboratories using Markerless Object Tracking and Cloud Services" submitted to the University of Applied Sciences Northwestern Switzerland at the Institute for Interactive Technologies (IIT).

Author: Severin Pereto

Supervisor: Prof. Dr. Doris Agotai

As I no longer have access to a HoloLens 2 for active maintenance, this repository remains archived.

Lab Assist Vision provides a showcase for markerless tracking in pharmaceutical laboratories using the Microsoft HoloLens 2. When the user says "Detect", objects are recognized by a cloud service, and the results initialize markerless tracking on the device. A tracked laboratory item can be confirmed by looking at it and saying "Okay", which changes the color of its tooltip.

A full-length demonstration video is available at https://youtu.be/-usFovY6nD8. Note that the video's quality is limited because the color camera is used simultaneously for tracking and for mixed reality capture.

- Provides a camera service using the color camera of the Microsoft HoloLens 2 in Unity

- Object detection using Custom Vision as a cloud service

- Provides tracker initialization for markerless tracking

- Experimental: Run on repeat to provide tracking by detection

- Experimental: Run the object detection network (ONNX) locally on the device using Barracuda

- Markerless object tracking using OpenCV Tracking API

- Implemented Trackers: MOSSE, TLD, Boosting, Median Flow, MIL, CSRT, KCF

- Real-time tracking (30 FPS) of more than five concurrently tracked items is achieved using MOSSE at a resolution of 760x428 @ 30 FPS in grayscale and synchronous mode.

- Mapping of 2D tracked objects into 3D space using the Spatial Mesh.

- Interaction using hand input and eye gaze combined with voice commands (e.g., "Detect", "Okay"), provided by MRTK.

- Developer Console to change settings (e.g. video profile, color format, tracker, scenario) at runtime.

- Video display of the camera stream, including a debug view of the bounding boxes of tracked objects.
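Mapping a 2D tracking result into 3D can be pictured as casting a ray from the camera through the center of the tracked bounding box and intersecting it with the environment. The following Python sketch assumes a pinhole camera model (focal lengths fx, fy and principal point cx, cy as intrinsics; a rotation matrix and the camera position as extrinsics) and uses a single plane as a stand-in for the Spatial Mesh. All function names are hypothetical; the application itself performs this step in Unity against the real mesh.

```python
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = List[List[float]]


def box_center(x: float, y: float, w: float, h: float) -> Tuple[float, float]:
    """Pixel center of a tracked bounding box (x, y = top-left corner)."""
    return (x + w / 2.0, y + h / 2.0)


def pixel_to_camera_ray(u: float, v: float, fx: float, fy: float,
                        cx: float, cy: float) -> Vec3:
    """Unproject pixel (u, v) through a pinhole model into a camera-space
    direction with depth 1 (OpenCV convention: x right, y down, z forward)."""
    return ((u - cx) / fx, (v - cy) / fy, 1.0)


def camera_ray_to_world(rotation: Mat3, camera_position: Vec3,
                        direction: Vec3) -> Tuple[Vec3, Vec3]:
    """Rotate the camera-space direction into world space; the ray starts
    at the camera position (together these are the extrinsics)."""
    world_dir = tuple(sum(rotation[i][j] * direction[j] for j in range(3))
                      for i in range(3))
    return camera_position, world_dir


def intersect_plane(origin: Vec3, direction: Vec3, plane_point: Vec3,
                    plane_normal: Vec3) -> Optional[Vec3]:
    """Ray-plane intersection, a stand-in for a raycast against the Spatial
    Mesh. Returns None if the ray is parallel to the plane or it lies behind."""
    denom = sum(n * d for n, d in zip(plane_normal, direction))
    if abs(denom) < 1e-9:
        return None
    s = sum(n * (p - o) for n, p, o in zip(plane_normal, plane_point, origin)) / denom
    if s < 0:
        return None
    return tuple(o + s * d for o, d in zip(origin, direction))
```

For example, a box centered on the principal point yields the ray (0, 0, 1), which, with an identity rotation and the camera at the origin, hits a plane at depth 2 straight ahead at (0, 0, 2).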

Lab Assist Vision requires OpenCVForUnity and a Custom Vision network, including its prediction endpoint and key. The following steps set up the project:

-

Open the project (e.g., in the Unity Hub) with Unity Version 2019.4.15f1 and Universal Windows Platform. Note: Another Unity version may require adjustments or API updates (e.g. XR Plugin System)

-

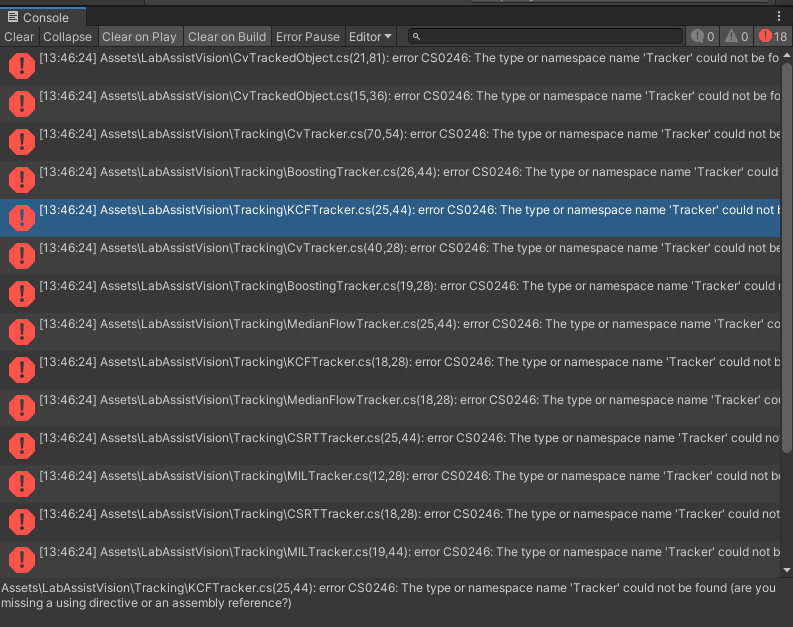

Import OpenCVForUnity version 2.4.1 (e.g., using the Unity Package Manager). Only the OpenCVForUnity Wrapper API and prebuilt OpenCV for Windows and UWP are required.

-

Open the LabAssistVision scene.

-

Change the following in Build Settings (File > Build Settings) if needed:

- Platform: Universal Windows Platform

- Target Device: HoloLens

-

Verify the project settings (see Project Configuration)

-

Add the Custom Vision prediction key and prediction endpoint obtained from the portal to the CustomVisionObjectDetectionService profile. This setting can be found in the Game Object "MixedRealityToolkit" under the tab Extensions > Configurations > CustomVisionObjectDetectionService > DefaultObjectDetectionServiceProfile. Note: If no profile is assigned, assign the default or create a new one.
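Once the endpoint is configured, the Custom Vision object detection endpoint returns each prediction with a tagName, a probability, and a boundingBox whose left, top, width, and height are normalized to the image size. Before such a box can initialize a tracker, it has to be scaled to the pixel resolution of the camera frame. The following Python sketch does this for a response body; the 0.5 probability threshold, the clamping, and the helper names are assumptions for illustration and are not taken from Lab Assist Vision.

```python
import json
from typing import List, NamedTuple


class PixelRect(NamedTuple):
    tag: str
    probability: float
    x: int
    y: int
    width: int
    height: int


def to_pixel_rects(response_json: str, frame_width: int, frame_height: int,
                   min_probability: float = 0.5) -> List[PixelRect]:
    """Convert normalized Custom Vision bounding boxes into pixel rectangles
    suitable for initializing a tracker on a frame of the given size."""
    rects = []
    for p in json.loads(response_json).get("predictions", []):
        if p["probability"] < min_probability:
            continue  # hypothetical threshold: skip low-confidence boxes
        box = p["boundingBox"]
        x = round(box["left"] * frame_width)
        y = round(box["top"] * frame_height)
        w = round(box["width"] * frame_width)
        h = round(box["height"] * frame_height)
        # Clamp so the rectangle stays inside the frame and is never empty
        x = max(0, min(x, frame_width - 1))
        y = max(0, min(y, frame_height - 1))
        w = max(1, min(w, frame_width - x))
        h = max(1, min(h, frame_height - y))
        rects.append(PixelRect(p["tagName"], p["probability"], x, y, w, h))
    # Highest probability first, e.g. to pick the top prediction
    return sorted(rects, key=lambda r: r.probability, reverse=True)
```

At 760x428, a box with left 0.25, top 0.5, width 0.5, and height 0.25 becomes the pixel rectangle (190, 214, 380, 107).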

The following table highlights specific dependencies in this project:

| Dependency | Version | Resolution | Remark |
|---|---|---|---|
| OpenCVForUnity | 2.4.1 | Imported manually (see the setup steps above) | Paid Unity asset with precompiled OpenCV for UWP |
| NuGet for Unity | 2.0.1 | Included in the repository | Licensed under the MIT License |
| DotNetWinRT | 0.5.1049 | Included in the repository, resolved by NuGet for Unity | NuGet package |
| Mixed Reality Toolkit for Unity | 2.5.1 | Resolved by the Unity Package Manager | Licensed under the MIT License |
| Barracuda | 1.0.4 | Resolved by the Unity Package Manager | Required to run the Custom Vision network on the device (experimental feature) |
| Windows Mixed Reality | 4.2.3 | Resolved by the Unity Package Manager | The Unity legacy XR plugin is currently used. This package is deprecated and will be removed in Unity 2020.1 |

Ensure that the following settings are configured in Unity:

- Build Settings

- Platform: Universal Windows Platform

- Target Device: HoloLens

- Project Settings

- Player

- Scripting Define Symbols:

OPENCV_USE_UNSAFE_CODE;DOTNETWINRT_PRESENT - Allow 'unsafe' code: true

- Capabilities: InternetClient, InternetClientServer, PrivateNetworkClientServer, Webcam, Microphone, HumanInterfaceDevice, Spatial Perception, Gaze Input

- XR Settings

- Virtual Reality Supported: true

- Depth Format: 16-bit

- Scripting Define Symbols:

- Player

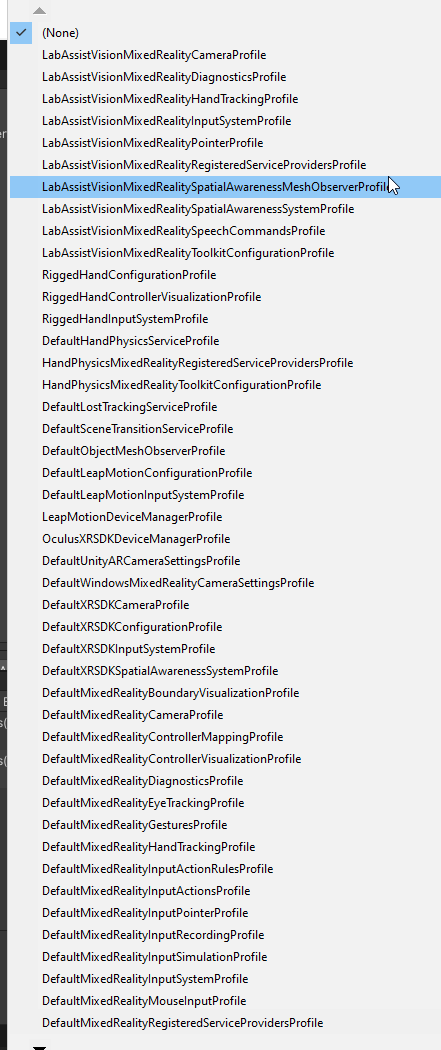

- Unity Editor: Ensure that all MRTK extension services have a profile assigned (MixedRealityToolkit game object > Extension Services).

- Package Manager: The project is built using legacy XR (Windows Mixed Reality).

- Build the project for ARM64 (Debug or Release). Note that running in Debug mode significantly reduces performance.

- It is recommended to disable the Video Display (in the Unity Editor) if it is not needed for development, as it reduces tracking performance.

- The application can also run in the Unity Editor in Play Mode for fast feedback during development. Object detection and tracking are supported there, while mapping to 3D is only partially possible (using Holographic Remoting) because the camera intrinsics and extrinsics are missing.

- The MonoCamera simulates the Locatable Camera of the device in the Unity Editor. It uses PhotoCapture in a loop, which delivers the WebCam image in NV12 format at a low frame rate. The camera extrinsics and intrinsics required for mapping to 3D positions are ignored.

- In Holographic Remoting, the computer's WebCam is used. A tracked object is then mapped to the collision point of the main camera's gaze with the Spatial Mesh.

- The video profile, color format, and tracker can be switched during runtime. Real-time tracking (30 FPS) is achieved using MOSSE at a resolution of 760x428 @ 30 FPS in grayscale and synchronous mode.
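Because NV12 stores the full-resolution luma (Y) plane first, followed by an interleaved UV plane subsampled by two in both directions, a grayscale image can be taken from an NV12 frame without any color conversion. This Python sketch assumes a tightly packed buffer with even dimensions and no row padding (real camera buffers may use a stride larger than the width); whether Lab Assist Vision extracts grayscale this way is not stated here.

```python
def nv12_size(width: int, height: int) -> int:
    """Bytes in a packed NV12 frame: a width*height Y plane plus a UV plane
    of half that size, i.e. 1.5 bytes per pixel."""
    return width * height * 3 // 2


def nv12_to_grayscale(frame: bytes, width: int, height: int) -> bytes:
    """Return the Y plane, which already is an 8-bit grayscale image."""
    if len(frame) < nv12_size(width, height):
        raise ValueError("buffer too small for an NV12 frame of this size")
    return frame[: width * height]


def gray_at(gray: bytes, width: int, x: int, y: int) -> int:
    """Intensity of pixel (x, y) in a row-major grayscale buffer."""
    return gray[y * width + x]
```

At 760x428, a packed NV12 frame holds 487,920 bytes, of which the first 325,280 form the grayscale image.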

The developer console provides a tool to change parameters at runtime (e.g., video parameters, color format, trackers). In the Video Profile Selection, every resolution and frame rate provided by the Locatable Camera can be selected for each color format (RGB/grayscale).

The Tracker Selection allows switching between the trackers of the OpenCV Tracking API implemented by OpenCVForUnity. Currently, these are MOSSE, TLD, Boosting, Median Flow, MIL, CSRT, and KCF. Note that some trackers support only a specific color format or convert the image format internally, which reduces performance.
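The cost of such a format conversion grows with pixel count and frame rate. As a rough illustration, converting RGB to grayscale with the BT.601 luma weights (0.299, 0.587, 0.114, the weights OpenCV documents for its RGB-to-gray conversion) at 760x428 and 30 FPS touches 9,758,400 pixels per second. The hypothetical Python helper below converts only when the frame format and the format a tracker expects differ; it does not reflect which OpenCV trackers actually require which format.

```python
from typing import List, Tuple

RGB = Tuple[int, int, int]


def rgb_to_gray(pixels: List[RGB]) -> List[int]:
    """BT.601 luma, rounded to the nearest integer per pixel."""
    return [round(0.299 * r + 0.587 * g + 0.114 * b) for r, g, b in pixels]


def prepare_frame(pixels: list, frame_format: str,
                  tracker_format: str) -> Tuple[list, bool]:
    """Return the frame in the tracker's format and whether a conversion
    (the extra per-frame cost) was needed. Format names are hypothetical."""
    if frame_format == tracker_format:
        return pixels, False
    if frame_format == "RGB" and tracker_format == "GRAY":
        return rgb_to_gray(pixels), True
    raise ValueError(f"unsupported conversion {frame_format} -> {tracker_format}")


def pixels_converted_per_second(width: int, height: int, fps: int) -> int:
    """Pixels that pass through the conversion each second."""
    return width * height * fps
```

Selecting a grayscale camera profile for a grayscale tracker avoids the conversion entirely, which is consistent with grayscale being the fastest configuration mentioned above.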

The following lists configurable scenarios and their purpose:

- Process Frame Sync: The camera frame is processed synchronously. If processing takes longer than the frame time budget (about 16.7 ms at 60 FPS, 33.3 ms at 30 FPS), the application frame rate drops. If untoggled, frames are processed asynchronously, which can help evaluate trackers that require more processing time. In both cases, only one camera frame is processed at a time.

- Detect On Repeat: If enabled, tracking is skipped and detection is invoked repeatedly. The Max Concurrent Detections slider sets the limit for concurrent requests.

- Force Fixed Tracker Count: This option initializes multiple trackers for the detected object with the highest prediction probability. All other items are ignored. The number of clones can be set using the Tracker Count slider. This option is used to compare performance across different trackers.
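The time budget mentioned for Process Frame Sync is 1000 ms divided by the frame rate. With more than five MOSSE trackers at 30 FPS, each tracker must therefore average under about 6.7 ms, assuming the trackers run one after another and nothing else consumes the budget. The following Python sketch shows this arithmetic and one way to enforce "only one camera frame at a time". The names are hypothetical, and dropping (rather than queuing) frames that arrive during processing is an assumption; the text does not say which policy Lab Assist Vision uses.

```python
import threading
from typing import Any, Callable


def frame_budget_ms(fps: float) -> float:
    """Time available per frame before the application frame rate drops."""
    return 1000.0 / fps


def per_tracker_budget_ms(fps: float, tracker_count: int) -> float:
    """Average time each tracker may take if all trackers run sequentially
    within one frame (a simplification that ignores all other work)."""
    return frame_budget_ms(fps) / tracker_count


class SingleFrameGate:
    """Admits at most one camera frame at a time; frames arriving while
    another is in flight are counted as dropped (assumed policy)."""

    def __init__(self) -> None:
        self._busy = threading.Lock()
        self.dropped = 0

    def try_begin(self) -> bool:
        # Non-blocking acquire fails immediately if a frame is in flight
        if self._busy.acquire(blocking=False):
            return True
        self.dropped += 1
        return False

    def end(self) -> None:
        self._busy.release()


def on_frame_arrived(gate: SingleFrameGate,
                     process: Callable[[Any], None], frame: Any) -> bool:
    """Runs inside the frame in synchronous mode, or on a worker thread in
    asynchronous mode. Returns whether the frame was processed."""
    if not gate.try_begin():
        return False
    try:
        process(frame)
    finally:
        gate.end()
    return True
```

In synchronous mode a slow `process` call stalls rendering; in asynchronous mode it only causes later frames to be skipped, which matches why the asynchronous option helps when evaluating slower trackers.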

The toolbar follows the user's field of view until its position is fixed. It allows the user to trigger object detection, reset the tracked objects, open the developer console, or quit the application.

Scripts for visualization, such as VideoDisplayManager, VisualizationManager, and the Controller, are implemented as MonoBehaviours so that they can be configured in the Unity Editor and can position game objects in the scene.

MRTK follows a modular approach: it provides a service locator component and centralizes as much of the required configuration as possible. This component is configurable in the Unity Editor and manages component lifespans and code behaviors. Extension services extend the functionality of MRTK; once registered, a service is accessible through the MRTK service registry. The MRTK documentation provides an introduction to the toolkit.

A CameraService, an ObjectDetectionService, and an ObjectTrackingService are introduced as MRTK extension services. The following list explains the purpose and different implementations of the services.

- CameraService: Provides the camera frame depending on the platform. On the Microsoft HoloLens 2, the Locatable Camera is used; in the Unity Editor, the WebCam is used.

- ObjectDetectionService: Provides asynchronous object detection for a camera frame. Currently, Custom Vision as a cloud service and an experimental local detection service using Barracuda are provided.

- ObjectTrackingService: Provides markerless object tracking using OpenCV.
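The service locator idea behind these extension services can be reduced to a registry that maps a service interface to one platform-specific implementation. The following Python sketch is only an analogy: MRTK itself is a C# toolkit configured through profiles, and ServiceRegistry, LocatableCameraService, WebCamCameraService, and create_camera_service are hypothetical names; only CameraService appears in the text above.

```python
from typing import Dict, Type, TypeVar, cast

T = TypeVar("T")


class ServiceRegistry:
    """Minimal service locator holding one instance per service interface."""

    def __init__(self) -> None:
        self._services: Dict[type, object] = {}

    def register(self, interface: Type[T], instance: T) -> None:
        if not isinstance(instance, interface):
            raise TypeError(f"{instance!r} does not implement {interface.__name__}")
        self._services[interface] = instance  # replaces any earlier instance

    def get(self, interface: Type[T]) -> T:
        return cast(T, self._services[interface])  # KeyError if not registered


class CameraService:
    def describe(self) -> str:
        raise NotImplementedError


class LocatableCameraService(CameraService):
    def describe(self) -> str:
        return "locatable camera"


class WebCamCameraService(CameraService):
    def describe(self) -> str:
        return "webcam"


def create_camera_service(on_device: bool) -> CameraService:
    """Choose the implementation per platform: device camera on the
    HoloLens 2, WebCam in the editor."""
    return LocatableCameraService() if on_device else WebCamCameraService()
```

Consumers ask the registry for CameraService and never need to know which implementation is active, which is what lets the same scene run on the device and in the Unity Editor.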

Lab Assist Vision is open for use in compliance with the MIT License. The grayscale shader for the video display and FPSUtils for measurement originate from HoloLensARToolKit and are licensed under the GNU Lesser General Public License v3.0.