VidGear is a High-Performance Video Processing Python Library that provides an easy-to-use, highly extensible, thoroughly optimised Multi-Threaded + Asyncio API Framework on top of many state-of-the-art specialized libraries like OpenCV, FFmpeg, ZeroMQ, picamera2, starlette, yt_dlp, pyscreenshot, dxcam, aiortc and python-mss serving at its backend, and enables us to flexibly exploit their internal parameters and methods, while silently delivering robust error-handling and real-time performance 🔥

VidGear primarily focuses on simplicity, and thereby lets programmers and software developers easily integrate and perform Complex Video Processing Tasks in just a few lines of code.

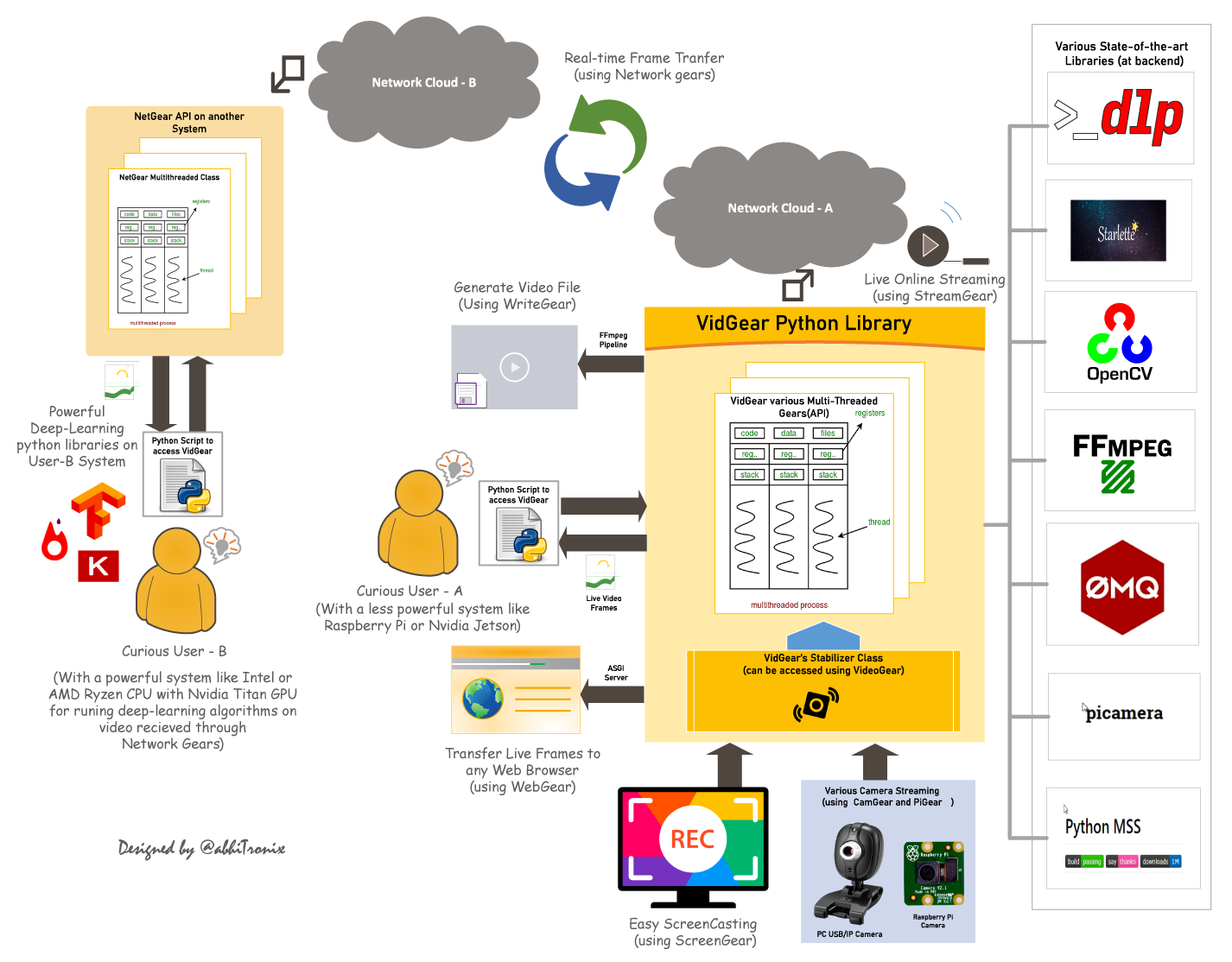

The following functional block diagram clearly depicts the generalized functioning of VidGear APIs:

"VidGear is a cross-platform High-Performance Framework that provides a one-stop Video-Processing solution for building complex real-time media applications in Python."

"VidGear can read, write, process, send & receive video files/frames/streams from/to various devices in real-time, and faster than the underlying libraries."

"Write Less and Accomplish More" — VidGear's Motto

"Built with simplicity in mind, VidGear lets programmers and software developers easily integrate and perform Complex Video-Processing Tasks in their existing or new applications without going through hefty documentation and in just a few lines of code. It is beneficial whether you're new to programming with Python or already a pro at it."

If this is your first time using VidGear, head straight to the Installation ➶ to install VidGear.

Once you have VidGear installed, check out its Well-Documented Function-Specific Gears ➶

Also, if you're already familiar with the OpenCV library, then see Switching from OpenCV Library ➶

Or, if you're just getting started with OpenCV-Python programming, then refer to this FAQ ➶

VidGear is built with multiple APIs a.k.a. Gears, each with some unique functionality.

Each API is designed exclusively to handle/control/process different data-specific & device-specific video streams, network streams, and media encoders/decoders. These APIs provide the user an easy-to-use, dynamic, extensible, and exposed Multi-Threaded + Asyncio optimized internal layer above state-of-the-art libraries to work with, while silently delivering robust error-handling.

These Gears can be classified as follows:

A. Video-Capture Gears:

- CamGear: Multi-Threaded API targeting various IP-USB-Cameras/Network-Streams/Streaming-Sites-URLs.

- PiGear: Multi-Threaded API targeting various Camera Modules and (limited) USB cameras on Raspberry Pis 🍇.

- ScreenGear: High-performance API targeting rapid Screencasting Capabilities.

- VideoGear: Common Video-Capture API with internal Video Stabilizer wrapper.

B. Video-Writer Gears:

- WriteGear: Handles Lossless Video-Writer for file/stream/frames Encoding and Compression.

C. Streaming Gears:

- StreamGear: Handles Transcoding of High-Quality, Dynamic & Adaptive Streaming Formats.

- Asynchronous I/O Streaming Gear:

  - WebGear: ASGI Video-Server that broadcasts Live MJPEG-Frames to any web-browser on the network.

  - WebGear_RTC: Real-time Asyncio WebRTC media server for streaming directly to peer clients over the network.

D. Network Gears:

- NetGear: Handles High-Performance Video-Frames & Data Transfer between interconnecting systems over the network.

- Asynchronous I/O Network Gear:

  - NetGear_Async: Immensely Memory-Efficient Asyncio Video-Frames Network Messaging Framework.

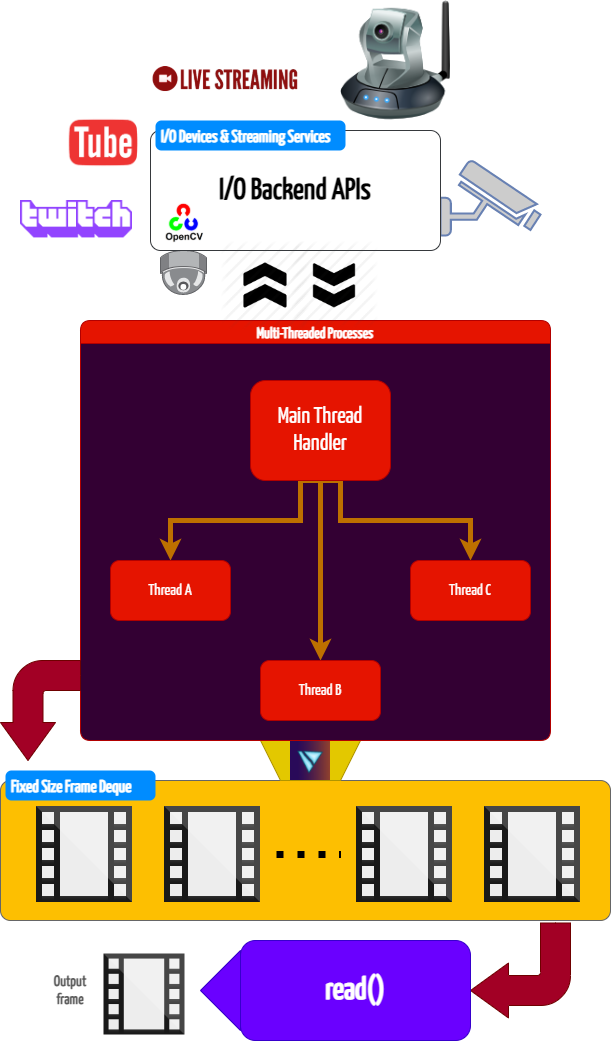

CamGear can grab ultra-fast frames from a diverse range of file-formats/devices/streams, including almost any IP-USB Cameras, multimedia video file-formats (up to 4k tested), various network stream protocols such as http(s), rtp, rtsp, rtmp, mms, etc., and GStreamer's pipelines, plus direct support for live video streaming sites like YouTube, Twitch, LiveStream, Dailymotion etc.

CamGear provides a flexible, high-level, multi-threaded framework around OpenCV's VideoCapture class with access to almost all of its available parameters. CamGear internally implements a yt_dlp backend class for seamlessly pipelining live video-frames and metadata from various streaming services like YouTube, Twitch, and many more ➶. Furthermore, its framework relies exclusively on Threaded Queue mode for ultra-fast, error-free, and synchronized video-frame handling.
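To make the Threaded Queue idea concrete, here is a minimal, stdlib-only sketch of the general producer/consumer pattern: a background thread pushes frames into a bounded `queue.Queue`, and `read()` returns `None` once the source is exhausted, mirroring the `stream.read()` loops used throughout this page. The `ThreadedReader` class, its arbitrary queue size of 96, and the integer "frames" are hypothetical stand-ins, not CamGear's actual internals.

```python
import queue
import threading


class ThreadedReader:
    """Hypothetical queue-backed reader: a background thread produces, read() consumes."""

    def __init__(self, source, maxsize=96):
        self._source = iter(source)                 # any iterable that yields frames
        self._queue = queue.Queue(maxsize=maxsize)  # bounded buffer between threads
        self._stop = threading.Event()
        self._thread = threading.Thread(target=self._update, daemon=True)

    def start(self):
        self._thread.start()
        return self                                 # enables ThreadedReader(...).start()

    def _put(self, item):
        # Retry with a short timeout so that a stop() request is noticed promptly.
        while not self._stop.is_set():
            try:
                self._queue.put(item, timeout=0.1)
                return True
            except queue.Full:
                pass
        return False

    def _update(self):
        # Producer thread: move frames from the source into the queue.
        for frame in self._source:
            if not self._put(frame):
                return                              # stopped early, no sentinel needed
        self._put(None)                             # sentinel: source exhausted

    def read(self):
        # Blocks until a frame is available; returns None at end of stream.
        return self._queue.get()

    def stop(self):
        self._stop.set()
        self._thread.join()


# Usage, mirroring the read loops on this page (integers stand in for frames)
reader = ThreadedReader(range(5)).start()
frames = []
while True:
    frame = reader.read()
    if frame is None:
        break
    frames.append(frame)
reader.stop()
print(frames)  # [0, 1, 2, 3, 4]
```

The bounded queue lets decoding run ahead of processing without unbounded memory growth, while the `None` sentinel gives the consumer a clean end-of-stream signal.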

VideoGear API provides a special internal wrapper around VidGear's exclusive Video Stabilizer class.

VideoGear also acts as a Common Video-Capture API that provides internal access for both CamGear and PiGear APIs and their parameters with an exclusive enablePiCamera boolean flag.

VideoGear is ideal when you need to switch to different video sources without changing your code much. It also enables easy stabilization for various video-streams (real-time or not) with minimal effort and far fewer lines of code.

Below is a snapshot of a VideoGear Stabilizer in action (See its detailed usage here):

Original Video Courtesy @SIGGRAPH2013

Code to generate the above result:

```python
# import required libraries
from vidgear.gears import VideoGear
import numpy as np
import cv2

# open any valid video stream with stabilization enabled (`stabilize = True`)
stream_stab = VideoGear(source="test.mp4", stabilize=True).start()

# open same stream without stabilization for comparison
stream_org = VideoGear(source="test.mp4").start()

# loop over
while True:

    # read stabilized frames
    frame_stab = stream_stab.read()

    # check for stabilized frame if Nonetype
    if frame_stab is None:
        break

    # read un-stabilized frame
    frame_org = stream_org.read()

    # stop if the un-stabilized stream has ended too (avoids concatenating None)
    if frame_org is None:
        break

    # concatenate both frames
    output_frame = np.concatenate((frame_org, frame_stab), axis=1)

    # put text over concatenated frame
    cv2.putText(
        output_frame,
        "Before",
        (10, output_frame.shape[0] - 10),
        cv2.FONT_HERSHEY_SIMPLEX,
        0.6,
        (0, 255, 0),
        2,
    )
    cv2.putText(
        output_frame,
        "After",
        (output_frame.shape[1] // 2 + 10, output_frame.shape[0] - 10),
        cv2.FONT_HERSHEY_SIMPLEX,
        0.6,
        (0, 255, 0),
        2,
    )

    # Show output window
    cv2.imshow("Stabilized Frame", output_frame)

    # check for 'q' key if pressed
    key = cv2.waitKey(1) & 0xFF
    if key == ord("q"):
        break

# close output window
cv2.destroyAllWindows()

# safely close both video streams
stream_org.stop()
stream_stab.stop()
```
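Stabilizers of this kind commonly work by estimating the frame-to-frame camera motion, accumulating it into a trajectory, smoothing that trajectory, and shifting each frame by the difference. The pure-Python sketch below shows only the smoothing step, using a simple moving average (box filter) over a 1-D horizontal trajectory. The function names and the `radius` values are illustrative assumptions, not VideoGear's Stabilizer code.

```python
def moving_average(values, radius):
    """Box-filter smoothing; the window is clipped at both ends of the sequence."""
    smoothed = []
    for i in range(len(values)):
        lo = max(0, i - radius)
        hi = min(len(values), i + radius + 1)
        window = values[lo:hi]
        smoothed.append(sum(window) / len(window))
    return smoothed


def stabilizing_offsets(motions, radius=2):
    """Per-frame correction that moves the raw camera path onto the smoothed path.

    `motions` holds the estimated horizontal shift (in pixels) between consecutive
    frames; a full stabilizer would also track vertical shift and rotation.
    """
    trajectory = []
    position = 0.0
    for dx in motions:
        position += dx                      # accumulate motion into a camera path
        trajectory.append(position)
    smooth = moving_average(trajectory, radius)
    return [s - t for s, t in zip(smooth, trajectory)]


# A sudden 4-pixel jolt at frame 1 that is undone at frame 2
print(stabilizing_offsets([0.0, 4.0, -4.0, 0.0, 0.0], radius=1))
```

In this example the largest correction (about -2.67 px) lands on the jolted frame, while a steady pan produces no correction away from the clipped edges of the window.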

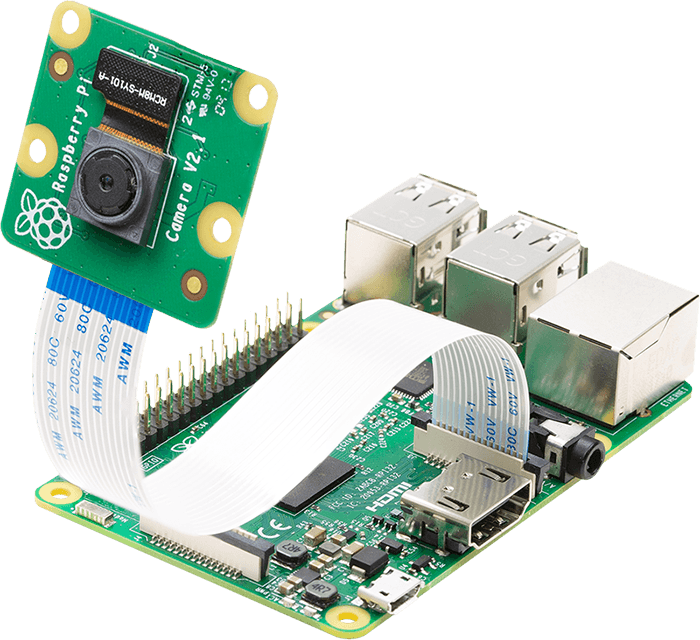

PiGear is a specialized API similar to the CamGear API but optimized for Raspberry Pi 🍇 Boards, offering comprehensive support for camera modules (e.g., OmniVision OV5647 Camera Module, Sony IMX219 Camera Module), along with limited compatibility for USB cameras.

PiGear implements a seamless and robust wrapper around the picamera2 python library, simplifying integration with minimal code changes and ensuring a smooth transition for developers already familiar with the Picamera2 API. PiGear leverages the libcamera API under the hood with multi-threading, providing high-performance 🔥, enhanced control and functionality for Raspberry Pi camera modules.

PiGear handles common configuration parameters and non-standard settings for various camera types, simplifying the integration process. PiGear currently supports Picamera2 API parameters such as sensor, controls, transform, and format, with internal type and sanity checks for robust performance.

While primarily focused on Raspberry Pi camera modules, PiGear also provides basic functionality for USB webcams (only with the Picamera2 API), along with the ability to accurately differentiate between USB and Raspberry Pi cameras using metadata.

PiGear automatically falls back to the legacy picamera library if the picamera2 library is unavailable, ensuring seamless backward compatibility. For this, PiGear also provides a flexible multi-threaded framework around the complete picamera API, allowing developers to effortlessly exploit a wide range of parameters, such as brightness, saturation, sensor_mode, iso, exposure, and more.

Furthermore, PiGear supports the use of multiple camera modules, including those found on Raspberry Pi Compute Module IO boards and USB cameras (only with Picamera2 API).

Best of all, PiGear contains a Threaded Internal Timer that silently keeps active track of any frozen threads or hardware failures and exits safely if either occurs. That means that if you're running the PiGear API in your script and someone accidentally pulls the Camera-Module cable out, instead of going into a possible kernel panic, the API will exit safely to save resources.
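The internal-timer idea can be illustrated with a small watchdog: the capture loop calls `feed()` every time a frame arrives, and a daemon thread flags a stall if no frame shows up within a timeout. This is a hypothetical stdlib sketch of the general watchdog pattern; the `FrameWatchdog` name, its methods, and the timeout values are assumptions, not PiGear's implementation.

```python
import threading
import time


class FrameWatchdog:
    """Hypothetical watchdog that flags a frame source which stops delivering frames."""

    def __init__(self, timeout=2.0, poll_interval=0.05):
        self.timeout = timeout                # seconds allowed between frames
        self.poll_interval = poll_interval    # how often the watchdog checks
        self._last_frame = time.monotonic()
        self._lock = threading.Lock()
        self.stalled = threading.Event()      # set once a stall is detected
        self._stop = threading.Event()
        self._thread = threading.Thread(target=self._watch, daemon=True)

    def start(self):
        self._thread.start()
        return self

    def feed(self):
        """Call from the capture loop every time a frame arrives."""
        with self._lock:
            self._last_frame = time.monotonic()

    def _watch(self):
        while not self._stop.is_set():
            with self._lock:
                idle = time.monotonic() - self._last_frame
            if idle > self.timeout:
                self.stalled.set()            # capture loop should now exit safely
                return
            self._stop.wait(self.poll_interval)

    def stop(self):
        self._stop.set()
        self._thread.join()


# Simulate a healthy camera, then a pulled cable.
watchdog = FrameWatchdog(timeout=0.5).start()
for _ in range(5):
    watchdog.feed()
    time.sleep(0.05)
healthy = not watchdog.stalled.is_set()
time.sleep(1.0)                               # no frames arrive any more
stalled = watchdog.stalled.is_set()
watchdog.stop()
```

A real capture loop would check `watchdog.stalled.is_set()` on each iteration and release the camera when it becomes true, instead of blocking forever on a dead device.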

Code to open picamera2 stream with variable parameters in PiGear API:

```python
# import required libraries
from vidgear.gears import PiGear
from libcamera import Transform
import cv2

# formulate various Picamera2 API
# configurational parameters
options = {
    "controls": {"Brightness": 0.5, "ExposureValue": 2.0},
    "transform": Transform(hflip=1),
    "sensor": {"output_size": (480, 320)},  # will override `resolution`
    "format": "RGB888",  # 8-bit BGR
}

# open pi video stream with defined parameters
stream = PiGear(resolution=(640, 480), framerate=60, logging=True, **options).start()

# loop over
while True:

    # read frames from stream
    frame = stream.read()

    # check for frame if Nonetype
    if frame is None:
        break

    # {do something with the frame here}

    # Show output window
    cv2.imshow("Output Frame", frame)

    # check for 'q' key if pressed
    key = cv2.waitKey(1) & 0xFF
    if key == ord("q"):
        break

# close output window
cv2.destroyAllWindows()

# safely close video stream
stream.stop()
```

ScreenGear is designed exclusively for rapid Screencasting Capabilities, which means it can grab frames from your monitor in real-time, either by defining an area on the computer screen or full-screen, with negligible latency. ScreenGear also seamlessly supports frame capturing from multiple monitors as well as multiple backends.

ScreenGear implements a Lightning-Fast API wrapper around dxcam, pyscreenshot & python-mss python libraries and also supports an easy and flexible direct internal parameters manipulation.
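Region capture boils down to handing the backend a rectangle on the screen. The sketch below clamps a requested region to a monitor's bounds before capture, using the `top`/`left`/`width`/`height` dictionary layout that python-mss accepts for its grab regions. The `clamp_region` helper and the monitor size are hypothetical, and the code does not call dxcam, pyscreenshot, or python-mss itself.

```python
def clamp_region(region, screen_width, screen_height):
    """Clip a {'top', 'left', 'width', 'height'} region so it lies inside the screen."""
    left = min(max(0, region["left"]), screen_width)
    top = min(max(0, region["top"]), screen_height)
    right = min(screen_width, region["left"] + region["width"])
    bottom = min(screen_height, region["top"] + region["height"])
    if right <= left or bottom <= top:
        raise ValueError("region does not overlap the screen")
    return {"top": top, "left": left, "width": right - left, "height": bottom - top}


# A 400x300 box starting near the bottom-right corner of a 1920x1080 screen
print(clamp_region({"top": 900, "left": 1700, "width": 400, "height": 300}, 1920, 1080))
# {'top': 900, 'left': 1700, 'width': 220, 'height': 180}
```

Clamping up front keeps an oversized or off-screen request from reaching the capture backend, where it might otherwise raise an error or return a partially blank frame.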

Below is a snapshot of a ScreenGear API in action:

Code to generate the above results:

```python
# import required libraries
from vidgear.gears import ScreenGear
import cv2

# open video stream with default parameters
stream = ScreenGear().start()

# loop over
while True:

    # read frames from stream
    frame = stream.read()

    # check for frame if Nonetype
    if frame is None:
        break

    # {do something with the frame here}

    # Show output window
    cv2.imshow("Output Frame", frame)

    # check for 'q' key if pressed
    key = cv2.waitKey(1) & 0xFF
    if key == ord("q"):
        break

# close output window
cv2.destroyAllWindows()

# safely close video stream
stream.stop()
```

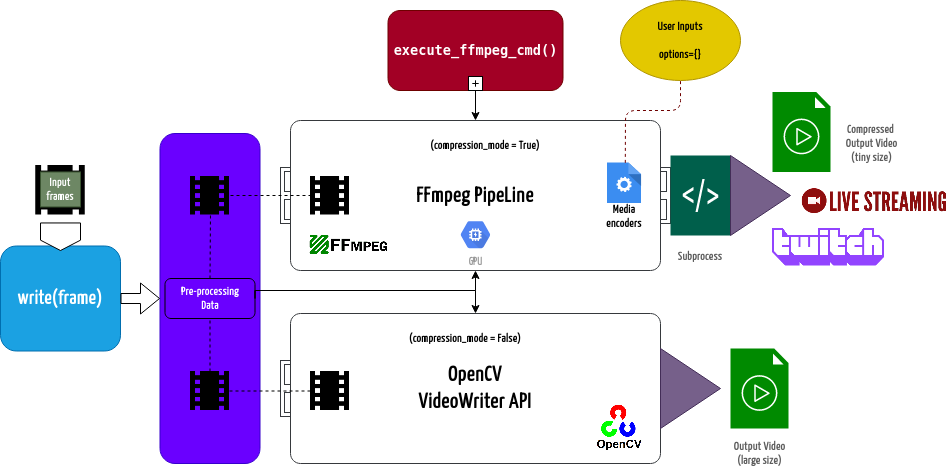

WriteGear handles various powerful Video-Writer Tools that provide us the freedom to do almost anything imaginable with multimedia data.

WriteGear API provides a complete, flexible, and robust wrapper around FFmpeg, a leading multimedia framework. WriteGear can process real-time frames into a lossless compressed video-file with any suitable specifications (such as bitrate, codec, framerate, resolution, subtitles, etc.).

WriteGear also supports streaming with traditional protocols such as RTSP/RTP and RTMP. It is powerful enough to perform complex tasks such as Live-Streaming (such as for Twitch, YouTube etc.) and Multiplexing Video-Audio with real-time frames in just a few lines of code.

Best of all, WriteGear grants users the complete freedom to play with any FFmpeg parameter with its exclusive Custom Commands function (see this doc) without relying on any third-party API.

In addition to this, WriteGear also provides flexible access to OpenCV's VideoWriter API tools for video-frames encoding without compression.

WriteGear primarily operates in the following two modes:

- Compression Mode: In this mode, WriteGear utilizes powerful FFmpeg inbuilt encoders to encode lossless multimedia files. This mode provides us the ability to exploit almost any parameter available within FFmpeg, effortlessly and flexibly, while robustly and quietly handling all errors/warnings. You can find more about this mode here ➶

- Non-Compression Mode: In this mode, WriteGear utilizes OpenCV's basic inbuilt VideoWriter API tools. This mode also supports all parameter transformations available within OpenCV's VideoWriter API, but it lacks the ability to manipulate encoding parameters and other important features like video compression, audio encoding, etc. You can learn about this mode here ➶
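In Compression Mode, the general technique is to pipe raw frames into an FFmpeg process over stdin. The sketch below only builds such a command line as an argument list, using standard FFmpeg options (`-f rawvideo`, `-pix_fmt`, `-s`, `-r`, `-i -`, `-c:v libx264`, `-crf`, `-preset`). The `build_ffmpeg_command` helper and its defaults are assumptions for illustration and are not WriteGear's internal command.

```python
def build_ffmpeg_command(width, height, fps, output, crf=18, preset="fast", ffmpeg="ffmpeg"):
    """Return an FFmpeg argv that reads raw BGR24 frames from stdin and encodes H.264."""
    return [
        ffmpeg,
        "-y",                       # overwrite the output file if it exists
        "-f", "rawvideo",           # input is uncompressed frames...
        "-pix_fmt", "bgr24",        # ...in OpenCV's default BGR byte order
        "-s", f"{width}x{height}",  # frame size must be given for raw input
        "-r", str(fps),             # input frame rate
        "-i", "-",                  # read frames from stdin
        "-c:v", "libx264",          # H.264 encoder
        "-crf", str(crf),           # constant rate factor: lower means higher quality
        "-preset", preset,          # speed/compression trade-off
        "-pix_fmt", "yuv420p",      # widely playable output pixel format
        output,
    ]


cmd = build_ffmpeg_command(640, 480, 30, "output.mp4")
print(" ".join(cmd))
```

To actually encode, one would start the process with `subprocess.Popen(cmd, stdin=subprocess.PIPE)` and write each frame's raw bytes (for a NumPy frame, `frame.tobytes()`) to `proc.stdin`, closing it at the end. Options placed before `-i` apply to the input, options after it apply to the output, which is why `-pix_fmt` appears twice.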

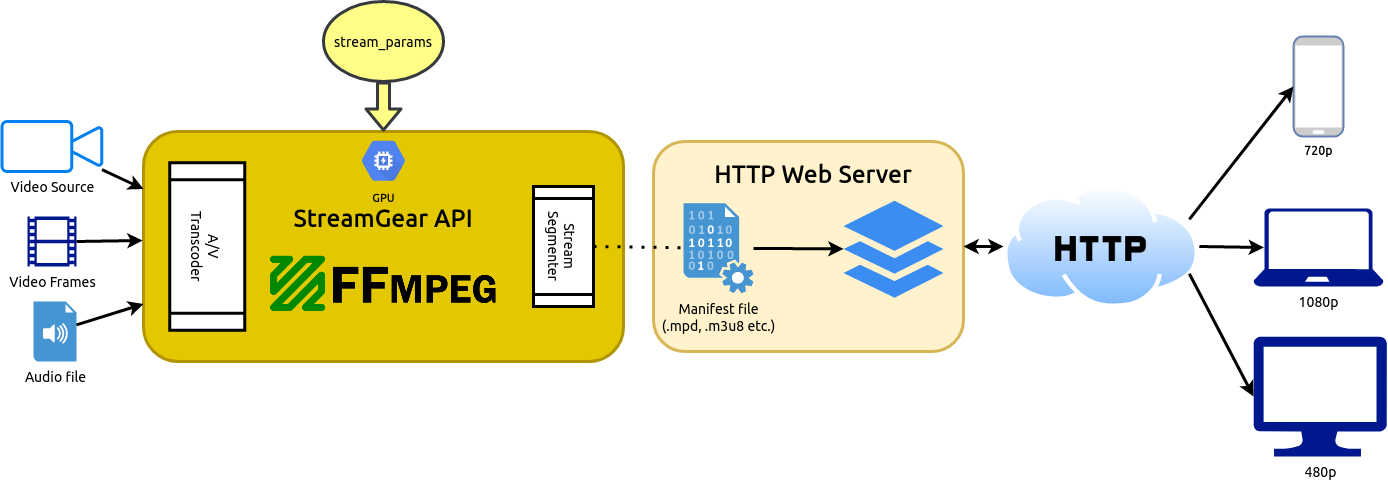

StreamGear streamlines and simplifies the transcoding workflow to generate Ultra-Low Latency, High-Quality, Dynamic & Adaptive Streaming Formats like MPEG-DASH and Apple HLS with just a few lines of Python code, allowing developers to focus on their application logic rather than dealing with the complexities of transcoding and chunking media files.

StreamGear API provides a standalone, highly extensible, and flexible wrapper around the FFmpeg multimedia framework for generating chunk-encoded media segments from your multimedia content effortlessly.

With StreamGear, you can transcode source video/audio files and real-time video frames into a sequence of multiple smaller chunks/segments of suitable lengths. These segments facilitate streaming at different quality levels (bitrates or spatial resolutions) and allow for seamless switching between quality levels during playback based on available bandwidth. You can serve these segments on a web server, making them easily accessible via standard HTTP GET requests.
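The chunking itself comes down to simple arithmetic: a source of duration D split into segments of target length L yields ceil(D / L) segments, the last one possibly shorter. The hypothetical helper below computes those boundaries. It is only an approximation of what real segmenters (FFmpeg's included) produce, since they cut on keyframes and actual segment lengths can differ from the target.

```python
import math


def segment_boundaries(duration, segment_length):
    """Return (start, end) times in seconds for each segment of a media file."""
    if duration <= 0 or segment_length <= 0:
        raise ValueError("duration and segment_length must be positive")
    count = math.ceil(duration / segment_length)
    return [
        (i * segment_length, min((i + 1) * segment_length, duration))
        for i in range(count)
    ]


print(segment_boundaries(20.5, 6))
# [(0, 6), (6, 12), (12, 18), (18, 20.5)]
```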

StreamGear currently supports both MPEG-DASH (Dynamic Adaptive Streaming over HTTP, ISO/IEC 23009-1) and Apple HLS (HTTP Live Streaming).

Additionally, StreamGear generates a manifest file (such as MPD for DASH) or a master playlist (such as M3U8 for Apple HLS) alongside the segments. These files contain essential segment information, including timing, URLs, and media characteristics like video resolution and adaptive bitrates. They are provided to the client before the streaming session begins.
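As an illustration of what a master playlist contains, the sketch below renders a minimal HLS master playlist from a list of variant streams, using the standard `#EXTM3U` and `#EXT-X-STREAM-INF` tags with `BANDWIDTH` and `RESOLUTION` attributes (RFC 8216). The variant file names and bitrates are made-up examples, and the `hls_master_playlist` helper is hypothetical; StreamGear generates its own manifests, so this is only for orientation.

```python
def hls_master_playlist(variants):
    """Render an HLS master playlist.

    `variants` is a list of (bandwidth_bps, (width, height), uri) tuples.
    """
    lines = ["#EXTM3U"]
    for bandwidth, (width, height), uri in variants:
        lines.append(f"#EXT-X-STREAM-INF:BANDWIDTH={bandwidth},RESOLUTION={width}x{height}")
        lines.append(uri)  # each variant points at its own media playlist
    return "\n".join(lines) + "\n"


playlist = hls_master_playlist([
    (5_000_000, (1920, 1080), "stream_0.m3u8"),
    (1_500_000, (1280, 720), "stream_1.m3u8"),
    (800_000, (640, 360), "stream_2.m3u8"),
])
print(playlist)
```

A player reads this file first, then picks one of the listed media playlists based on measured bandwidth, switching between them as network conditions change.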

StreamGear primarily works in two Independent Modes for transcoding which serves different purposes:

- Single-Source Mode 💿 : In this mode, StreamGear transcodes an entire video file (as opposed to frame-by-frame) into a sequence of multiple smaller chunks/segments for streaming. This mode works exceptionally well when you're transcoding long-duration lossless videos (with audio) for streaming that requires no interruptions. But on the downside, the provided source cannot be flexibly manipulated or transformed before being sent to the FFmpeg Pipeline for processing. Learn more about this mode here ➶

- Real-time Frames Mode 🎞️ : In this mode, StreamGear directly transcodes frame-by-frame (as opposed to an entire video file) into a sequence of multiple smaller chunks/segments for streaming. This mode works exceptionally well when you want to flexibly manipulate or transform `numpy.ndarray` frames in real-time before sending them to the FFmpeg Pipeline for processing. But on the downside, audio has to be added manually (as a separate source) for streams. Learn more about this mode here ➶

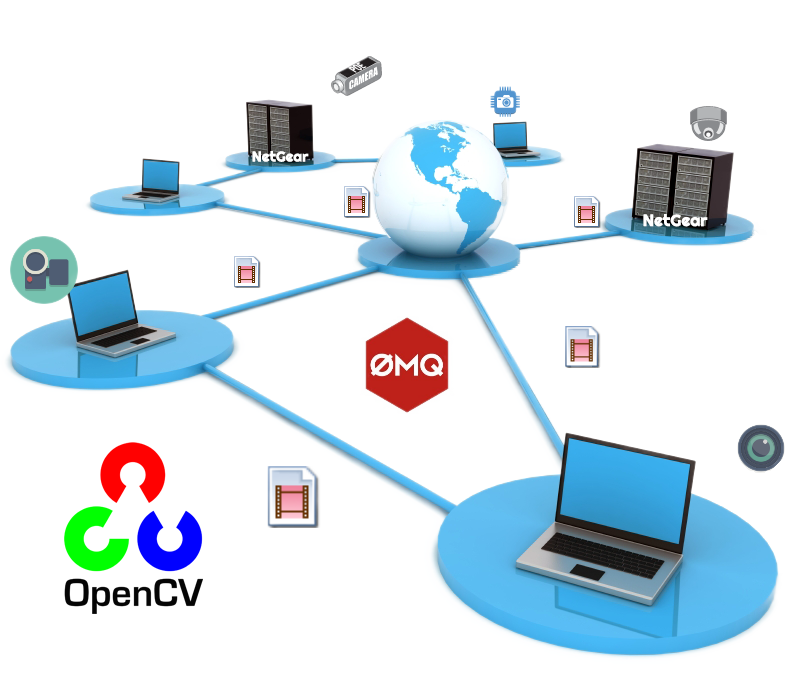

NetGear is exclusively designed to transfer video-frames & data synchronously between interconnecting systems over the network in real-time.

NetGear implements a high-level wrapper around the PyZMQ python library, which contains python bindings for ZeroMQ, a high-performance asynchronous distributed messaging library.

NetGear seamlessly supports additional bidirectional data transmission between receiver(client) and sender(server) while transferring video-frames all in real-time.

NetGear can also robustly handle Multiple Server-Systems and Multiple Client-Systems at once, thereby providing a seamless exchange of video-frames & data between multiple devices across the network at the same time.

NetGear allows remote connection over an SSH Tunnel, which lets us connect the NetGear client and server via a secure SSH connection over an untrusted network and access its intranet services across firewalls.

NetGear also enables real-time JPEG Frame Compression capabilities for boosting performance significantly while sending video-frames over the network in real-time.

For security, NetGear implements easy access to ZeroMQ's powerful, smart & secure Security Layers that enable strong encryption on data and robust authentication between the Server and the Client with the help of custom certificates.

NetGear as of now seamlessly supports three ZeroMQ messaging patterns:

- zmq.PAIR (ZMQ Pair Pattern)

- zmq.REQ/zmq.REP (ZMQ Request/Reply Pattern)

- zmq.PUB/zmq.SUB (ZMQ Publish/Subscribe Pattern)

Whereas supported protocols are: tcp and ipc.
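When frames travel over a message socket, the receiver needs the frame's shape and dtype as well as the raw pixel bytes, plus room for any extra data sent alongside. One common approach, sketched below with only the standard library, is to pack a small JSON header in front of the binary payload in a single length-prefixed message. The `pack_frame`/`unpack_frame` helpers and the header fields are hypothetical and do not describe NetGear's actual wire format.

```python
import json
import struct


def pack_frame(payload: bytes, shape, dtype="uint8", message=None) -> bytes:
    """Prefix raw frame bytes with a length-tagged JSON header."""
    header = json.dumps(
        {"shape": list(shape), "dtype": dtype, "message": message}
    ).encode("utf-8")
    # "!I": 4-byte unsigned int in network (big-endian) byte order
    return struct.pack("!I", len(header)) + header + payload


def unpack_frame(packet: bytes):
    """Inverse of pack_frame: returns (header_dict, payload_bytes)."""
    (header_len,) = struct.unpack("!I", packet[:4])
    header = json.loads(packet[4 : 4 + header_len].decode("utf-8"))
    payload = packet[4 + header_len :]
    return header, payload


fake_frame = bytes(range(12))  # stands in for a 2x2x3 uint8 image
packet = pack_frame(fake_frame, (2, 2, 3), message="hello")
header, payload = unpack_frame(packet)
print(header, payload == fake_frame)
# {'shape': [2, 2, 3], 'dtype': 'uint8', 'message': 'hello'} True
```

With NumPy, the sender would pass `frame.tobytes()` and the receiver would rebuild the image with `np.frombuffer(payload, dtype=header["dtype"]).reshape(header["shape"])`.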

WebGear is a powerful ASGI Video-Broadcaster API ideal for transmitting Motion-JPEG-frames from a single source to multiple recipients via the browser.

WebGear API works on Starlette's ASGI application and provides a highly extensible and flexible async wrapper around its complete framework. WebGear can flexibly interact with Starlette's ecosystem of shared middleware, mountable applications, Response classes, Routing tables, Static Files, Templating engine(with Jinja2), etc.

WebGear API uses an intraframe-only compression scheme under the hood, where the sequence of video-frames is first encoded as JPEG-DIB (JPEG with Device-Independent Bit compression) and then streamed over HTTP using Starlette's Multipart Streaming Response and a Uvicorn ASGI Server. This method imposes lower processing and memory requirements, but the quality is not the best, since JPEG compression is not very efficient for motion video.
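The Motion-JPEG-over-HTTP technique itself is easy to show: the response is declared as `multipart/x-mixed-replace` with a boundary string, and each JPEG frame is sent as one part that replaces the previous image in the browser. The generator below builds those parts from already-encoded JPEG bytes. The boundary name `frame` and the helper names are assumptions, and WebGear's own Starlette-based implementation may differ in detail.

```python
BOUNDARY = "frame"  # hypothetical boundary token
MEDIA_TYPE = f"multipart/x-mixed-replace; boundary={BOUNDARY}"


def mjpeg_parts(jpeg_frames):
    """Yield one multipart chunk per already-encoded JPEG frame."""
    for jpeg in jpeg_frames:
        yield (
            b"--" + BOUNDARY.encode("ascii") + b"\r\n"
            + b"Content-Type: image/jpeg\r\n"
            + b"Content-Length: " + str(len(jpeg)).encode("ascii") + b"\r\n\r\n"
            + jpeg
            + b"\r\n"
        )


# Two fake "JPEG" payloads (real ones would come from an encoder such as cv2.imencode)
chunks = list(mjpeg_parts([b"\xff\xd8AAA\xff\xd9", b"\xff\xd8BB\xff\xd9"]))
print(MEDIA_TYPE)
print(chunks[0])
```

In an ASGI app, such a generator could be handed to Starlette's `StreamingResponse(generator, media_type=MEDIA_TYPE)`; because every part is a self-contained JPEG, the browser needs no video decoder, which is also why the scheme is simple but bandwidth-hungry.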

In layman's terms, WebGear acts as a powerful Video Broadcaster that transmits live video-frames to any web-browser in the network. Additionally, WebGear API also provides a special internal wrapper around VideoGear, which itself provides internal access to both CamGear and PiGear APIs, thereby granting it exclusive power of broadcasting frames from any incoming stream. It also allows us to define our custom Server as source to transform frames easily before sending them across the network(see this doc example).

Below is a snapshot of a WebGear Video Server in action on Chrome browser:

WebGear Video Server at http://localhost:8000/ address.

Code to generate the above result:

```python
# import required libraries
import uvicorn
from vidgear.gears.asyncio import WebGear

# various performance tweaks
options = {
    "frame_size_reduction": 40,
    "jpeg_compression_quality": 80,
    "jpeg_compression_fastdct": True,
    "jpeg_compression_fastupsample": False,
}

# initialize WebGear app
web = WebGear(source="foo.mp4", logging=True, **options)

# run this app on Uvicorn server at address http://localhost:8000/
uvicorn.run(web(), host="localhost", port=8000)

# close app safely
web.shutdown()
```

WebGear_RTC is similar to the WebGear API in many aspects but utilizes WebRTC technology under the hood instead of Motion JPEG, which makes it suitable for building powerful video-streaming solutions for all modern browsers as well as native clients available on all major platforms.

WebGear_RTC is implemented with the help of the aiortc library, which provides Web Real-Time Communication (WebRTC) and Object Real-Time Communication (ORTC) on top of Python's asyncio framework and supports many features like SDP generation/parsing, Interactive Connectivity Establishment with half-trickle and mDNS support, DTLS key and certificate generation, DTLS handshake, etc.

WebGear_RTC can handle multiple consumers seamlessly and provides native support for ICE (Interactive Connectivity Establishment) protocol, STUN (Session Traversal Utilities for NAT), and TURN (Traversal Using Relays around NAT) servers that help us to seamlessly establish direct media connection with the remote peers for uninterrupted data flow. It also allows us to define our custom streaming class with suitable source to transform frames easily before sending them across the network(see this doc example).

WebGear_RTC API works in conjunction with Starlette's ASGI application and provides easy access to its complete framework. WebGear_RTC can also flexibly interact with Starlette's ecosystem of shared middleware, mountable applications, Response classes, Routing tables, Static Files, Templating engine(with Jinja2), etc.

Additionally, WebGear_RTC API also provides a special internal wrapper around VideoGear, which itself provides internal access to both CamGear and PiGear APIs.

Below is a snapshot of a WebGear_RTC Media Server in action on Chrome browser:

WebGear_RTC Video Server at http://localhost:8000/ address.

Code to generate the above result:

```python
# import required libraries
import uvicorn
from vidgear.gears.asyncio import WebGear_RTC

# various performance tweaks
options = {
    "frame_size_reduction": 30,
}

# initialize WebGear_RTC app
web = WebGear_RTC(source="foo.mp4", logging=True, **options)

# run this app on Uvicorn server at address http://localhost:8000/
uvicorn.run(web(), host="localhost", port=8000)

# close app safely
web.shutdown()
```


NetGear_Async can deliver the same performance as the NetGear API at about one-third the memory consumption, and also provides complete server-client handling with various options to use variable protocols/patterns similar to NetGear, but it is less flexible, as it supports only a few of NetGear's Exclusive Modes.

NetGear_Async is built on zmq.asyncio and powered by a high-performance asyncio event loop called uvloop to achieve high-speed, lag-free video streaming over the network with minimal resource usage. NetGear_Async can transfer thousands of frames in just a few seconds without causing any significant load on your system.

NetGear_Async provides complete server-client handling and options to use variable protocols/patterns similar to NetGear API. Furthermore, NetGear_Async allows us to define our custom Server as source to transform frames easily before sending them across the network(see this doc example).

NetGear_Async now supports additional bidirectional data transmission between receiver (client) and sender (server) while transferring video-frames. Users can easily build complex applications such as Real-Time Video Chat in just a few lines of code.

NetGear_Async as of now supports all four ZeroMQ messaging patterns:

- zmq.PAIR (ZMQ Pair Pattern)

- zmq.REQ/zmq.REP (ZMQ Request/Reply Pattern)

- zmq.PUB/zmq.SUB (ZMQ Publish/Subscribe Pattern)

- zmq.PUSH/zmq.PULL (ZMQ Push/Pull Pattern)

Whereas supported protocols are: tcp and ipc.
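The bidirectional, asyncio-driven exchange can be sketched without any networking: below, a server coroutine pushes frames into one `asyncio.Queue` and awaits a reply on another, while a client coroutine consumes each frame and answers it. The queues stand in for ZeroMQ sockets, and the coroutine names and reply format are hypothetical; this is not NetGear_Async's API.

```python
import asyncio


async def server(frames, to_client, from_client, replies):
    for frame in frames:
        await to_client.put(frame)                # send a frame...
        replies.append(await from_client.get())   # ...then wait for the client's data
    await to_client.put(None)                     # sentinel: end of stream


async def client(to_client, from_client, received):
    while True:
        frame = await to_client.get()
        if frame is None:
            break
        received.append(frame)
        await from_client.put(f"ack {frame}")     # data travelling back to the server


async def main():
    to_client, from_client = asyncio.Queue(), asyncio.Queue()
    replies, received = [], []
    # Run both ends concurrently on one event loop
    await asyncio.gather(
        server(["f0", "f1", "f2"], to_client, from_client, replies),
        client(to_client, from_client, received),
    )
    return received, replies


received, replies = asyncio.run(main())
print(received, replies)
# ['f0', 'f1', 'f2'] ['ack f0', 'ack f1', 'ack f2']
```

The lockstep "send, then await a reply" shape resembles a request/reply exchange; a publish/subscribe or push/pull design would instead let the server keep sending without waiting.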

We welcome your contributions to help us improve and extend this project. If you want to get involved with VidGear development, check out the Contribution Guidelines

We're offering support for VidGear on Gitter Community Channel. Come and join the conversation over there!

It is something I am doing in my own free time. But so much more needs to be done, and I need your help to do this. For just the price of a cup of coffee, you can make a difference 🙂

Here is a Bibtex entry you can use to cite this project in a publication:

```bibtex
@software{vidgear,
  author       = {Abhishek Thakur and
                  Zoe Papakipos and
                  Christian Clauss and
                  Christian Hollinger and
                  Ian Max Andolina and
                  Vincent Boivin and
                  Kyle Ahn and
                  freol35241 and
                  Benjamin Lowe and
                  Mickaël Schoentgen and
                  Renaud Bouckenooghe and
                  Ibtsam Ahmad},
  title        = {abhiTronix/vidgear: VidGear Stable v0.3.2},
  month        = sep,
  year         = 2023,
  publisher    = {Zenodo},
  version      = {vidgear-0.3.2},
  doi          = {10.5281/zenodo.8332548},
  url          = {https://doi.org/10.5281/zenodo.8332548}
}
```

Copyright © abhiTronix 2019

This library is released under the Apache 2.0 License.