Pretraining transformer-based Thai language models

thai2transformers provides customized scripts to pretrain transformer-based masked language models on Thai text with the following token types:

- spm: a subword-level token from the SentencePiece library.

- newmm: a dictionary-based Thai word tokenizer based on maximal matching from PyThaiNLP.

- syllable: a dictionary-based Thai syllable tokenizer based on maximal matching from PyThaiNLP. The list of syllables used is from pythainlp/corpus/syllables_th.txt.

- sefr: an ML-based Thai word tokenizer based on Stacked Ensemble Filter and Refine (SEFR) [Limkonchotiwat et al., 2020], which builds on probabilities from the CNN-based deepcut. The SEFR tokenizer is loaded with engine="best".
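To make "dictionary-based maximal matching" concrete, here is a minimal sketch in plain Python. It is not PyThaiNLP's newmm implementation (which also applies Thai-specific constraints); it only illustrates the core idea of choosing the segmentation with the fewest tokens given a word list. The function name `maximal_matching` and the toy vocabulary are hypothetical and used for illustration only.

```python
from typing import List, Set


def maximal_matching(text: str, vocab: Set[str]) -> List[str]:
    """Split text into the fewest tokens, preferring dictionary words.

    Illustrative sketch only: characters not covered by any dictionary
    word fall back to single-character tokens.
    """
    n = len(text)
    max_len = max((len(w) for w in vocab), default=1)
    inf = float("inf")
    cost = [0] + [inf] * n   # cost[i]: fewest tokens needed for text[:i]
    back = [0] * (n + 1)     # back[i]: start index of the last token in text[:i]

    for end in range(1, n + 1):
        for start in range(max(0, end - max_len), end):
            piece = text[start:end]
            # A dictionary word is always allowed; a single unknown
            # character is allowed as a fallback.
            if piece in vocab or end - start == 1:
                if cost[start] + 1 < cost[end]:
                    cost[end] = cost[start] + 1
                    back[end] = start

    # Walk the back-pointers from the end to recover the tokens.
    tokens: List[str] = []
    pos = n
    while pos > 0:
        tokens.append(text[back[pos]:pos])
        pos = back[pos]
    return tokens[::-1]


# Toy vocabulary (hypothetical, not taken from PyThaiNLP's dictionary).
toy_vocab = {"ฉัน", "รัก", "ภาษา", "ไทย", "ภาษาไทย"}
print(maximal_matching("ฉันรักภาษาไทย", toy_vocab))
# → ['ฉัน', 'รัก', 'ภาษาไทย']
```

The longer entry "ภาษาไทย" wins over "ภาษา" + "ไทย" because it yields fewer tokens. The same idea applies to the syllable tokenizer if the dictionary holds syllables instead of words.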

We curate a list of sources that can be used to pretrain language models. The statistics for each data source are listed on this page.

You can also download the current version of the cleaned datasets from here.

a) Instructions for pretraining a RoBERTa BASE model on the Thai Wikipedia dump:

In this example, we demonstrate how to pretrain a RoBERTa base model on the Thai Wikipedia dump from scratch.

-

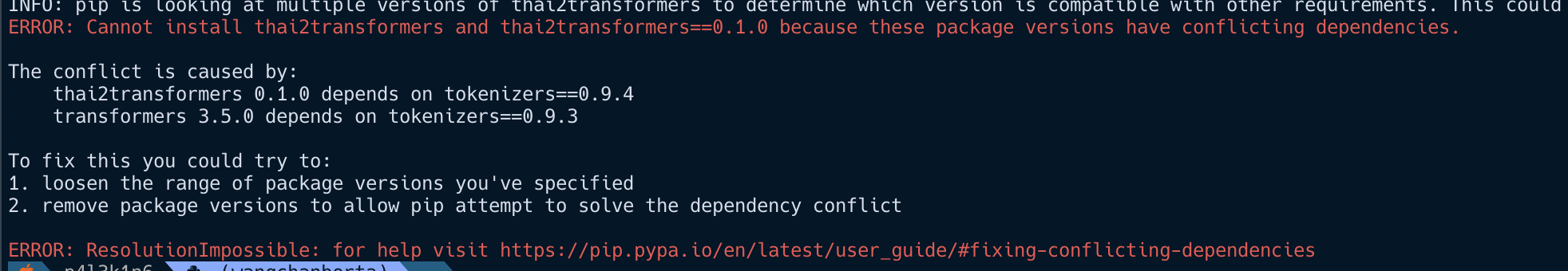

Install required libraries: 1_installation.md

-

Prepare

thwikidataset from Thai Wikipedia dump: 2_thwiki_data-preparation.md -

Tokenizer training and vocabulary building :

a) For SentencePiece BPE (

spm), word-level token (newmm), syllable-level token (syllable): 3_train_tokenizer.mdb) For word-level token from Limkonchotiwat et al., 2020 (

sefr-cut) : 3b_sefr-cut_pretokenize.md -

Pretrain a masked language model: 4_run_mlm.md
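For readers new to masked language modeling, the sketch below shows what the pretraining objective does to a tokenized sentence. It assumes the commonly used BERT-style scheme (select about 15% of tokens; of those, replace 80% with a mask token, 10% with a random token, and leave 10% unchanged). The function name `mask_tokens`, the `<mask>` string, and the probabilities are assumptions for illustration; the actual settings used by the scripts are documented in 4_run_mlm.md.

```python
import random
from typing import List, Optional, Sequence, Tuple


def mask_tokens(
    tokens: Sequence[str],
    vocab: Sequence[str],
    mask_token: str = "<mask>",   # assumed mask symbol
    mask_prob: float = 0.15,      # assumed selection rate
    rng: Optional[random.Random] = None,
) -> Tuple[List[str], List[Optional[str]]]:
    """Return (model inputs, labels) for one masked-LM training example.

    labels[i] holds the original token at selected positions and None
    elsewhere, so the loss is computed only on selected positions.
    """
    rng = rng or random.Random()
    inputs = list(tokens)
    labels: List[Optional[str]] = [None] * len(tokens)

    for i, tok in enumerate(tokens):
        if rng.random() >= mask_prob:
            continue  # position not selected for prediction
        labels[i] = tok
        roll = rng.random()
        if roll < 0.8:
            inputs[i] = mask_token          # 80%: replace with mask token
        elif roll < 0.9:
            inputs[i] = rng.choice(vocab)   # 10%: replace with a random token
        # remaining 10%: keep the original token

    return inputs, labels


# Example with a fixed seed so repeated runs give the same masking.
example = ["ฉัน", "รัก", "ภาษา", "ไทย"]
masked, targets = mask_tokens(example, vocab=example, rng=random.Random(0))
print(masked, targets)
```

Calling the function again for each epoch, with a fresh random state, gives a different mask every time the same sentence is seen, which is the dynamic masking idea associated with RoBERTa.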

b) Instructions for finetuning a RoBERTa model on existing Thai text classification and NER/POS tagging datasets:

In this example, we demonstrate how to finetune WangchanBERTa, a RoBERTa base model pretrained on the Thai Wikipedia dump and assorted Thai texts.

- Finetune the model for sequence classification tasks on existing datasets, including wisesight_sentiment, wongnai_reviews, generated_reviews_enth (review star prediction), and prachathai67k: 5a_finetune_sequence_classificaition.md

- Finetune the model for token classification tasks (NER and POS tagging) on existing datasets, including thainer and lst20: 5b_finetune_token_classificaition.md

@misc{lowphansirikul2021wangchanberta,
    title={WangchanBERTa: Pretraining transformer-based Thai Language Models},
    author={Lalita Lowphansirikul and Charin Polpanumas and Nawat Jantrakulchai and Sarana Nutanong},
    year={2021},
    eprint={2101.09635},
    archivePrefix={arXiv},
    primaryClass={cs.CL}
}