Visit prometheus.io for the full documentation, examples and guides.

Prometheus, a Cloud Native Computing Foundation project, is a systems and service monitoring system. It collects metrics from configured targets at given intervals, evaluates rule expressions, displays the results, and can trigger alerts when specified conditions are observed.

The features that distinguish Prometheus from other metrics and monitoring systems are:

- A multi-dimensional data model (time series defined by metric name and set of key/value dimensions)

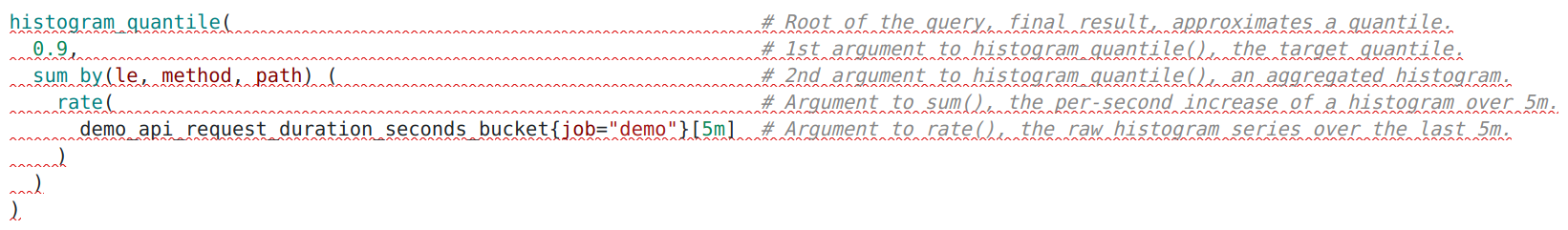

- PromQL, a powerful and flexible query language to leverage this dimensionality

- No dependency on distributed storage; single server nodes are autonomous

- An HTTP pull model for time series collection

- Pushing time series is supported via an intermediary gateway for batch jobs

- Targets are discovered via service discovery or static configuration

- Multiple modes of graphing and dashboarding support

- Support for hierarchical and horizontal federation
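The multi-dimensional data model in the first bullet means a series is identified by its metric name together with its full set of label pairs, so changing any single label value produces a different series. The Go sketch below illustrates only that identity rule. Labels and SeriesID are hypothetical names, not Prometheus' internal types, and the rendered string merely imitates the name{label="value"} notation used by PromQL selectors (Go's %q quoting only approximates Prometheus' own escaping).

```go
package main

import (
	"fmt"
	"sort"
	"strings"
)

// Labels is a hypothetical stand-in for a series' key/value dimensions.
type Labels map[string]string

// SeriesID renders a metric name plus its labels in a canonical form.
// Label names are sorted so that the same set of dimensions always yields
// the same identifier, regardless of Go's map iteration order.
func SeriesID(name string, labels Labels) string {
	keys := make([]string, 0, len(labels))
	for k := range labels {
		keys = append(keys, k)
	}
	sort.Strings(keys)

	parts := make([]string, 0, len(keys))
	for _, k := range keys {
		// %q uses Go string escaping, an approximation of Prometheus' escaping.
		parts = append(parts, fmt.Sprintf("%s=%q", k, labels[k]))
	}
	return name + "{" + strings.Join(parts, ",") + "}"
}

func main() {
	a := SeriesID("http_requests_total", Labels{"method": "GET", "code": "200"})
	b := SeriesID("http_requests_total", Labels{"code": "200", "method": "GET"})
	c := SeriesID("http_requests_total", Labels{"method": "POST", "code": "200"})
	fmt.Println(a)      // http_requests_total{code="200",method="GET"}
	fmt.Println(a == b) // true: same name and label set, so the same series
	fmt.Println(a == c) // false: one different label value, a different series
}
```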

There are various ways of installing Prometheus.

Precompiled binaries for released versions are available in the download section on prometheus.io. Using the latest production release binary is the recommended way of installing Prometheus. See the Installing chapter in the documentation for all the details.

Docker images are available on Quay.io or Docker Hub.

You can launch a Prometheus container for trying it out with:

docker run --name prometheus -d -p 127.0.0.1:9090:9090 prom/prometheus

Prometheus will now be reachable at http://localhost:9090/.

To build Prometheus from source code, you need:

- Go version 1.17 or greater.

- NodeJS version 16 or greater.

- npm version 7 or greater.

Start by cloning the repository:

git clone https://github.com/prometheus/prometheus.git

cd prometheus

You can use the go tool to build and install the prometheus and promtool binaries into your GOPATH:

GO111MODULE=on go install github.com/prometheus/prometheus/cmd/...

prometheus --config.file=your_config.yml

However, when using go install to build Prometheus, Prometheus will expect to be able to read its web assets from local filesystem directories under web/ui/static and web/ui/templates. In order for these assets to be found, you will have to run Prometheus from the root of the cloned repository. Note also that these directories do not include the React UI unless it has been built explicitly using make assets or make build.

An example of the above configuration file can be found here.

You can also build using make build, which will compile in the web assets so that Prometheus can be run from anywhere:

make build

./prometheus --config.file=your_config.yml

The Makefile provides several targets:

- build: build the prometheus and promtool binaries (includes building and compiling in web assets)

- test: run the tests

- test-short: run the short tests

- format: format the source code

- vet: check the source code for common errors

- assets: build the React UI

Prometheus is bundled with many service discovery plugins. When building Prometheus from source, you can edit the plugins.yml file to disable some service discoveries. The file is a YAML-formatted list of Go import paths that will be built into the Prometheus binary.

After you have changed the file, you need to run make build again.

If you are using another method to compile Prometheus, make plugins will generate the plugins file accordingly.

If you add out-of-tree plugins, which we do not endorse at the moment, additional steps might be needed to adjust the go.mod and go.sum files. As always, be extra careful when loading third-party code.

The make docker target is designed for use in our CI system.

You can build a docker image locally with the following commands:

make promu

promu crossbuild -p linux/amd64

make npm_licenses

make common-docker-amd64

We are publishing our Remote Write protobuf independently at buf.build.

You can use that as a library:

go get buf.build/gen/go/prometheus/prometheus/protocolbuffers/go@latest

This is experimental.

In order to comply with Go module rules, Prometheus release numbers do not exactly match Go module releases. For the Prometheus v2.y.z releases, we are publishing equivalent v0.y.z tags.

Therefore, a user who wants to use Prometheus v2.35.0 as a library could run:

go get github.com/prometheus/prometheus@v0.35.0

This solution makes it clear that we might break our internal Go APIs between minor user-facing releases, as breaking changes are allowed in major version zero.
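The v2.y.z to v0.y.z mapping is mechanical, so it can be expressed as a small function. The Go sketch below is illustrative only: goModuleVersion is a hypothetical helper, and it deliberately rejects anything that is not a plain v2.y.z release (for example, pre-release suffixes such as -rc.0), since the rule described above only covers those.

```go
package main

import (
	"fmt"
	"strings"
)

// isDigits reports whether s is a non-empty run of ASCII digits.
func isDigits(s string) bool {
	if s == "" {
		return false
	}
	for _, r := range s {
		if r < '0' || r > '9' {
			return false
		}
	}
	return true
}

// goModuleVersion maps a Prometheus v2.y.z release to the v0.y.z Go module
// tag. Anything other than a plain v2.y.z release is reported as an error.
func goModuleVersion(release string) (string, error) {
	if !strings.HasPrefix(release, "v2.") {
		return "", fmt.Errorf("%q is not a Prometheus v2.y.z release", release)
	}
	rest := strings.TrimPrefix(release, "v2.")
	parts := strings.Split(rest, ".")
	if len(parts) != 2 || !isDigits(parts[0]) || !isDigits(parts[1]) {
		return "", fmt.Errorf("%q is not of the form v2.y.z", release)
	}
	return "v0." + rest, nil
}

func main() {
	v, err := goModuleVersion("v2.35.0")
	if err != nil {
		fmt.Println(err)
		return
	}
	fmt.Println("go get github.com/prometheus/prometheus@" + v)
}
```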

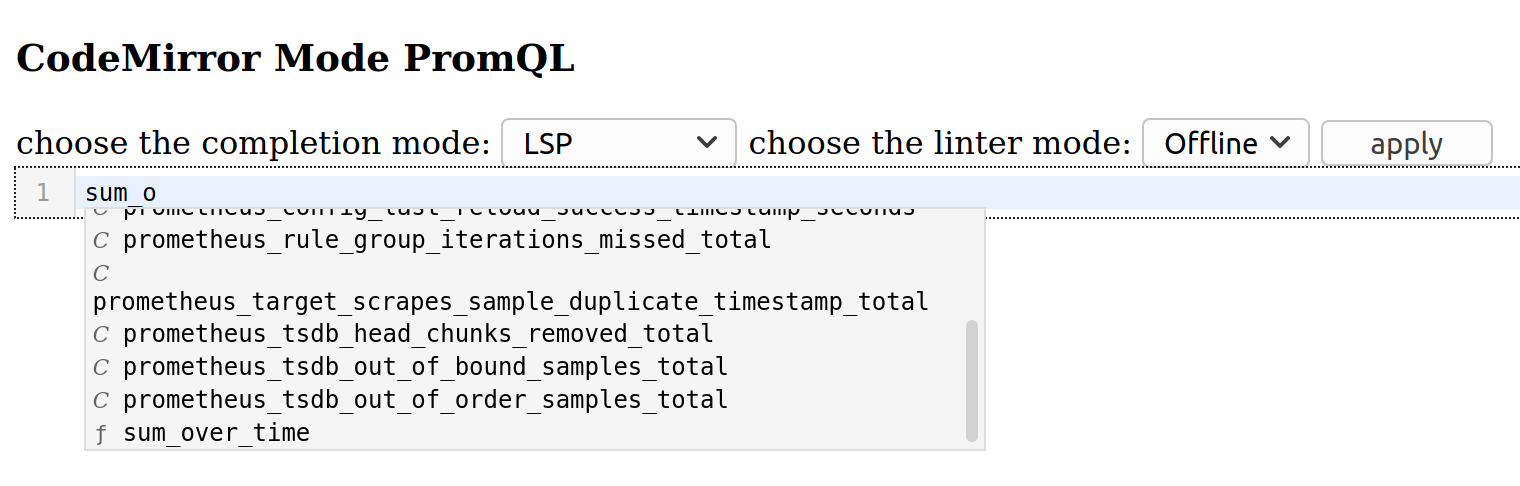

For more information on building, running, and developing on the React-based UI, see the React app's README.md.

- Godoc documentation is available via pkg.go.dev. Due to peculiarities of Go Modules, v2.x.y will be displayed as v0.x.y.

- See the Community page for how to reach the Prometheus developers and users on various communication channels.

Refer to CONTRIBUTING.md.

Apache License 2.0, see LICENSE.