Webhook payload delivery service

Usage • How it works • Deploying your own Smee.io • FAQ

Looking for probot/smee-client?

Smee is a webhook payload delivery service - it receives webhook payloads, and sends them to listening clients. You can generate a new channel by visiting https://smee.io, and get a unique URL to send payloads to.
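
To try a channel without configuring a real webhook sender, you can post a JSON body to the channel URL yourself. The sketch below is one way to do that using only Python's standard library (Python is used here purely for illustration). The helper names `build_webhook_request` and `send_test_payload` are made up for this example, and `https://smee.io/your-channel-id` is a placeholder for the URL you were given.

```python
import json
import urllib.request


def build_webhook_request(channel_url: str, payload: dict) -> urllib.request.Request:
    """Build a POST request that carries `payload` as a JSON body."""
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        channel_url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_test_payload(channel_url: str, payload: dict) -> int:
    """Send the payload to the channel and return the HTTP status code."""
    request = build_webhook_request(channel_url, payload)
    with urllib.request.urlopen(request) as response:
        return response.status
```

Calling `send_test_payload("https://smee.io/your-channel-id", {"hello": "world"})` with your own channel URL should make the payload appear for any client currently listening on that channel.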

Heads up! Smee.io is intended for use in development, not for production. It's a way to inspect payloads through a UI and receive them on a local machine, not as a proxy for production applications.

Smee works with two components: the public website smee.io and the smee-client. They talk to each other via Server-Sent Events, a type of connection that allows for messages to be sent from a source to any clients listening.
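
For readers unfamiliar with Server-Sent Events, the wire format is plain text: `field: value` lines, with a blank line marking the end of each event. The following is a minimal parsing sketch, not the code smee-client uses. The function name `parse_sse` and the sample stream are invented for illustration, and it ignores the `id` and `retry` fields.

```python
from typing import Iterable, Iterator


def parse_sse(lines: Iterable[str]) -> Iterator[dict]:
    """Yield one dict per event from an iterable of text lines."""
    event_type = "message"  # default event type when no "event:" field is sent
    data_lines = []
    for raw in lines:
        line = raw.rstrip("\r\n")
        if line == "":
            # A blank line ends the current event; events without data are skipped.
            if data_lines:
                yield {"event": event_type, "data": "\n".join(data_lines)}
            event_type, data_lines = "message", []
            continue
        if line.startswith(":"):
            continue  # comment line, often used as a keep-alive
        field, _, value = line.partition(":")
        if value.startswith(" "):
            value = value[1:]  # a single leading space after the colon is dropped
        if field == "data":
            data_lines.append(value)
        elif field == "event":
            event_type = value
        # other fields (id, retry) are ignored in this sketch
```

Multiple `data:` lines within one event are joined with newlines, which is how a multi-line body survives the line-oriented format.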

This means that channels are just an abstraction: all Smee does is receive a payload and send it to any actively connected clients.
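
The "channel as abstraction" idea can be modeled in a few lines. This is a hypothetical in-memory sketch, not Smee's actual implementation. The class name `ChannelHub` and its methods are assumptions made for the example, and clients are represented as plain callbacks.

```python
from collections import defaultdict


class ChannelHub:
    """Hypothetical fan-out: maps a channel name to its connected client callbacks."""

    def __init__(self):
        self._clients = defaultdict(list)

    def connect(self, channel, client):
        self._clients[channel].append(client)

    def disconnect(self, channel, client):
        self._clients[channel].remove(client)

    def deliver(self, channel, payload):
        """Send the payload to every client connected right now; return the count."""
        clients = list(self._clients.get(channel, []))
        for client in clients:
            client(payload)
        return len(clients)
```

Nothing is queued in this model: a payload delivered while no client is connected reaches nobody, which matches the pass-through behavior described above.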

Smee.io is a simple Node.js application. You can deploy it the same way you would deploy any other Node app. The easiest option is probably Heroku, or you can use Docker:

    docker run -p 3000:3000 ghcr.io/probot/smee.io

Don't forget to point smee-client to your instance of smee.io:

    smee --url https://your-smee.io/channel

If you need to run multiple instances of the web app, you need a way to share events across those instances. A client may be connected to instance A, so if a relevant event is sent to instance B, instance A needs to know about it too.

For that reason, Smee.io has built-in support for Redis as a message bus. To enable it, just set a REDIS_URL environment variable. That will tell the app to use Redis when receiving payloads, and to publish them from each instance of the app.
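
To illustrate why a shared bus solves the multi-instance problem, here is a small simulation. It is a sketch under assumptions: `InMemoryBus` stands in for Redis pub/sub, `AppInstance` is a hypothetical model of one copy of the web app, and none of the names reflect Smee.io's real code.

```python
class InMemoryBus:
    """Stand-in for a Redis pub/sub channel: every subscriber sees every message."""

    def __init__(self):
        self._subscribers = []

    def subscribe(self, handler):
        self._subscribers.append(handler)

    def publish(self, message):
        for handler in list(self._subscribers):
            handler(message)


class AppInstance:
    """One hypothetical copy of the web app with its own locally connected clients."""

    def __init__(self, name, bus):
        self.name = name
        self.bus = bus
        self.local_clients = {}  # channel name -> list of client callbacks
        bus.subscribe(self._on_bus_message)

    def connect(self, channel, client):
        self.local_clients.setdefault(channel, []).append(client)

    def receive_webhook(self, channel, payload):
        # Publish instead of delivering directly, so every instance
        # (including this one) hears about the payload.
        self.bus.publish({"channel": channel, "payload": payload})

    def _on_bus_message(self, message):
        for client in self.local_clients.get(message["channel"], []):
            client(message["payload"])
```

In this model a client connected to instance A still receives a payload that arrived at instance B, because B publishes to the bus and A forwards bus messages to its own clients.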

How long do channels live for?

Channels are always active - once a client is connected, Smee will send any payloads it gets at /:channel to those clients.

Should I use this in production?

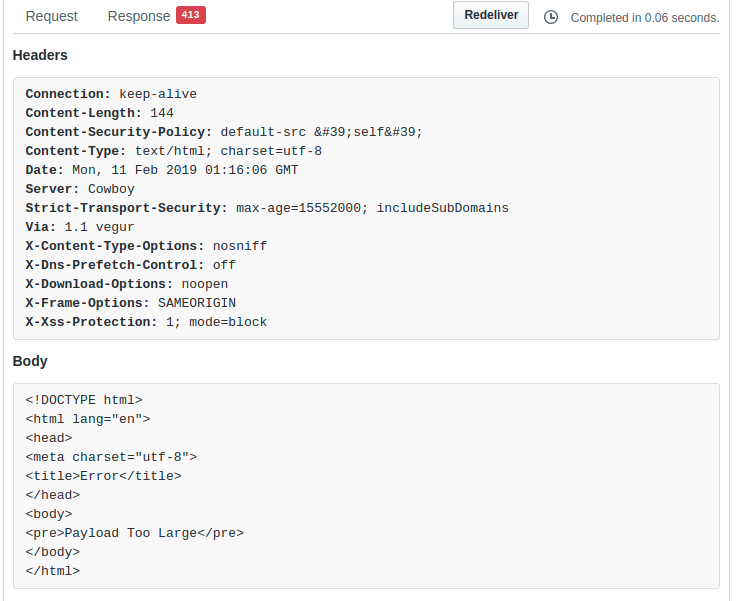

No! Smee is not designed for production use; it is a development and testing tool. Channels are not authenticated, so anyone who has your channel ID can see the payloads being sent to it.

Are payloads ever stored?

Webhook payloads are never stored on the server or in any database; the Smee.io server is simply a pass-through. However, we do store payloads in localStorage in your browser, so that when you revisit https://smee.io/:channel you still see the payloads you last saw there.