A high-performance and flexible authorization/permission engine built for developers and inspired by Google Zanzibar.

OpenFGA is designed to make it easy for developers to model their application permissions and to integrate fine-grained authorization into their applications.

It allows in-memory data storage for quick development, as well as pluggable database modules. It currently supports PostgreSQL 14 and MySQL 8.

It offers an HTTP API and a gRPC API. It has SDKs for Java, Node.js/JavaScript, Go, Python and .NET. Look in our Community section for third-party SDKs and tools. It can also be used as a library (see example).

The following section aims to help you get started quickly. Please look at our official documentation for in-depth information.

ℹ️ The following sections set up an OpenFGA server using the default configuration values. These are for rapid development, not for a production environment. Data written to an OpenFGA instance using the default configuration with the memory storage engine will not persist after the service is stopped.

For more information on how to configure the OpenFGA server, please take a look at our official documentation on Running in Production.
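To keep data across restarts, the server has to point at one of the supported databases (PostgreSQL or MySQL) instead of the in-memory store. The script below is a minimal sketch of that, assuming an openfga binary is available (the OPENFGA_BIN variable and the DRY_RUN switch are hypothetical helpers introduced here) and that the migrate command and the --datastore-engine / --datastore-uri flags behave as described in the Running in Production documentation. Treat the connection strings as placeholders.

```shell
#!/usr/bin/env bash
# Sketch: run OpenFGA against a persistent datastore instead of memory.
# Assumptions: OPENFGA_BIN (hypothetical, default ./openfga) points at the
# binary, the database is reachable, and `migrate` plus the --datastore-engine
# and --datastore-uri flags are available in your OpenFGA version.
# Set DRY_RUN=1 (hypothetical switch) to print the commands instead of running them.
set -euo pipefail

OPENFGA_BIN="${OPENFGA_BIN:-./openfga}"

# Either print a command (dry run) or execute it.
run_or_print() {
  if [[ -n "${DRY_RUN:-}" ]]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

start_with_datastore() {  # $1 = engine (postgres or mysql), $2 = datastore URI
  # Create or upgrade the database schema before starting the server.
  run_or_print "$OPENFGA_BIN" migrate --datastore-engine "$1" --datastore-uri "$2"
  # Start the server on the same datastore.
  run_or_print "$OPENFGA_BIN" run --datastore-engine "$1" --datastore-uri "$2"
}

# Example invocations (placeholder credentials):
#   start_with_datastore postgres 'postgres://postgres:password@localhost:5432/postgres?sslmode=disable'
#   start_with_datastore mysql 'root:secret@tcp(localhost:3306)/openfga?parseTime=true'
```

Running the migration as a separate step keeps schema changes explicit; the in-memory engine needs no migration at all.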

OpenFGA is available on Docker Hub, so you can quickly start it using the in-memory datastore by running the following commands:

docker pull openfga/openfga

docker run -p 8080:8080 -p 3000:3000 openfga/openfga run

Tip: The OPENFGA_HTTP_ADDR environment variable can be used to configure the address at which the Playground expects the OpenFGA server to be. For example, the following command starts the OpenFGA server on port 4000 and configures the Playground to use that address:

docker run -e OPENFGA_PLAYGROUND_ENABLED=true -e OPENFGA_HTTP_ADDR=0.0.0.0:4000 -p 4000:4000 -p 3000:3000 openfga/openfga run
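The environment variables in the docker run example mirror the run flags (--playground-enabled becomes OPENFGA_PLAYGROUND_ENABLED). The helper below is a small sketch that derives the variable name for a flag, assuming that naming convention (OPENFGA_ prefix, uppercase, dashes replaced by underscores) holds for every flag; flag_to_env is a hypothetical name, and the configuration docs remain the authority.

```shell
#!/usr/bin/env bash
# Sketch: map a `run` flag to its environment variable name.
# Assumes the convention seen in the docker run example:
# OPENFGA_ prefix, uppercase letters, dashes turned into underscores.
set -euo pipefail

flag_to_env() {  # $1 = flag such as --playground-port (hypothetical helper)
  local name="${1#--}"  # drop the leading dashes
  printf 'OPENFGA_%s\n' "$(printf '%s' "$name" | tr 'a-z-' 'A-Z_')"
}

# Example: pass the playground port to a container via the environment.
#   docker run -e "$(flag_to_env --playground-port)=3001" ... openfga/openfga run
```

Passing settings through the environment is convenient with containers, where editing the command line is less practical than adding -e options.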

The docker-compose.yaml file provides an example of how to launch OpenFGA with Postgres using Docker Compose.

- First, either clone this repo or download the docker-compose.yaml file with the following command:

  curl -LO https://openfga.dev/docker-compose.yaml

- Then, run the following command:

  docker compose up

If you are a Homebrew user, you can install OpenFGA with the following command:

brew install openfga

To use a precompiled binary instead, download your platform's latest release and extract it. Then run the binary with the command:

./openfga run

There are two recommended options for building OpenFGA from source code:

Make sure you have Go 1.20 or later installed. See the Go downloads page.

You can install from source using Go modules:

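- First, make sure $GOBIN is on your shell $PATH:

  export PATH=$PATH:$(go env GOBIN)

  If GOBIN is not set, go install places binaries in $(go env GOPATH)/bin instead, so add that directory to your $PATH.

- Then use the install command:

  go install github.com/openfga/openfga/cmd/openfga@latest

- Run the server with:

  openfga run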

Alternatively, you can build OpenFGA by cloning the project from this GitHub repo and then building it with the go build command:

- Clone the repo to a local directory, and navigate to that directory:

  git clone https://github.com/openfga/openfga.git && cd openfga

- Then use the build command:

  go build -o ./openfga ./cmd/openfga

- Run the server with:

  ./openfga run
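Whichever installation method you use, the server may need a moment before it accepts requests, which matters when the store-creation test is scripted. The function below is a sketch of a readiness loop; it assumes the HTTP API exposes a GET /healthz endpoint on the API port (an assumption to confirm against the API reference for your version) and that curl is installed. wait_for_openfga is a hypothetical name.

```shell
#!/usr/bin/env bash
# Sketch: poll the server until it responds, or give up after N attempts.
# Assumes GET <base-url>/healthz exists (verify for your OpenFGA version)
# and that curl is on the PATH.
set -euo pipefail

wait_for_openfga() {  # $1 = base URL, $2 = max attempts (default 30)
  local url="$1/healthz" attempts="${2:-30}" i
  for ((i = 1; i <= attempts; i++)); do
    # -f makes curl fail on HTTP errors, so only a 2xx answer counts as ready.
    if curl -fsS -o /dev/null "$url"; then
      return 0
    fi
    # Wait a second between attempts (no sleep after the last one).
    if (( i < attempts )); then
      sleep 1
    fi
  done
  echo "OpenFGA did not respond at $url after $attempts attempts" >&2
  return 1
}

# Example: wait_for_openfga http://localhost:8080 && echo "server is up"
```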

Now that you have set up and installed OpenFGA, you can test your installation by creating an OpenFGA store.

curl -X POST 'localhost:8080/stores' \
  --header 'Content-Type: application/json' \
  --data-raw '{
    "name": "openfga-demo"
  }'

If everything is running correctly, you should get a response with information about the newly created store, for example:

{
  "id": "01G3EMTKQRKJ93PFVDA1SJHWD2",
  "name": "openfga-demo",
  "created_at": "2022-05-19T17:11:12.888680Z",
  "updated_at": "2022-05-19T17:11:12.888680Z"
}

The Playground facilitates rapid development by allowing you to visualize and model your application's authorization model(s) and manage relationship tuples with a locally running OpenFGA instance.

To run OpenFGA with the Playground disabled, provide the --playground-enabled=false flag.

./openfga run --playground-enabled=false

By default, once OpenFGA is running, the Playground can be accessed at http://localhost:3000/playground.

To use a port other than the default, set the --playground-port flag. For example:

./openfga run --playground-enabled --playground-port 3001

Profiling through pprof can be enabled on the OpenFGA server by providing the --profiler-enabled flag.

./openfga run --profiler-enabled

This will start serving profiling data on port 3001. You can see that data by visiting http://localhost:3001/debug/pprof.

If you need to serve the profiler on a different address, you can do so by specifying the --profiler-addr flag. For example,

./openfga run --profiler-enabled --profiler-addr :3002

Once the OpenFGA server is running, in another window you can run the following command to generate a compressed CPU profile:

go tool pprof -proto -seconds 60 http://localhost:3001/debug/pprof/profile
# will collect data for 60 seconds and generate a file like pprof.samples.cpu.001.pb.gz

That file can be analyzed visually by running the following command and then visiting http://localhost:8084:

go tool pprof -http=localhost:8084 pprof.samples.cpu.001.pb.gz

Take a look at examples of how to:

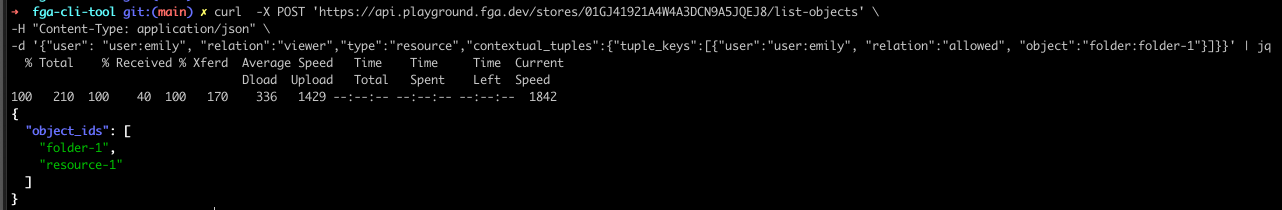

- Write an Authorization Model

- Write Relationship Tuples

- Perform Authorization Checks

- Add Authentication to your OpenFGA server
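As a rough sketch of the first three steps over the HTTP API, the script below creates a store, writes a minimal model, writes one tuple, and runs a check with curl. It assumes a server at FGA_API_URL (a hypothetical variable, default http://localhost:8080) with authentication disabled; the function names, the document/reader model, and the user:anne and document:roadmap values are illustrative only, and the linked examples and API Reference describe the full request shapes.

```shell
#!/usr/bin/env bash
# Sketch: store -> authorization model -> tuple -> check over the HTTP API.
# Assumes a local server without authentication and curl on the PATH.
# All type, relation, user, and object names are hypothetical examples.
set -euo pipefail

FGA_API_URL="${FGA_API_URL:-http://localhost:8080}"

# Print a top-level string field (e.g. "id") from a small, flat JSON body.
# A sed match is enough here; prefer jq for anything more complex.
json_field() {  # $1 = field name, $2 = JSON text
  printf '%s\n' "$2" | sed -n "s/.*\"$1\" *: *\"\([^\"]*\)\".*/\1/p"
}

tuple_key_json() {  # $1 = user, $2 = relation, $3 = object
  printf '{"user": "%s", "relation": "%s", "object": "%s"}' "$1" "$2" "$3"
}

write_body() {  # $1 = model id, $2 = user, $3 = relation, $4 = object
  printf '{"writes": {"tuple_keys": [%s]}, "authorization_model_id": "%s"}' \
    "$(tuple_key_json "$2" "$3" "$4")" "$1"
}

check_body() {  # same arguments as write_body
  printf '{"tuple_key": %s, "authorization_model_id": "%s"}' \
    "$(tuple_key_json "$2" "$3" "$4")" "$1"
}

# POST a JSON body to a path under FGA_API_URL and print the response body.
post_json() {  # $1 = path, $2 = JSON body
  curl -fsS -X POST "$FGA_API_URL$1" \
    --header 'Content-Type: application/json' \
    --data-raw "$2"
}

create_store() {  # $1 = store name; prints the new store id
  json_field id "$(post_json /stores "{\"name\": \"$1\"}")"
}

write_model() {  # $1 = store id; prints the new authorization model id
  # Schema 1.1 model: users can be directly related to documents as readers.
  json_field authorization_model_id "$(post_json "/stores/$1/authorization-models" '{
    "schema_version": "1.1",
    "type_definitions": [
      {"type": "user"},
      {"type": "document",
       "relations": {"reader": {"this": {}}},
       "metadata": {"relations": {"reader": {"directly_related_user_types": [{"type": "user"}]}}}}
    ]
  }')"
}

write_tuple() {  # $1 = store id, $2 = model id, $3 = user, $4 = relation, $5 = object
  post_json "/stores/$1/write" "$(write_body "$2" "$3" "$4" "$5")" >/dev/null
}

check_access() {  # same arguments as write_tuple; prints true or false
  local body re='"allowed" *: *true'
  body="$(post_json "/stores/$1/check" "$(check_body "$2" "$3" "$4" "$5")")"
  if [[ $body =~ $re ]]; then echo true; else echo false; fi
}

# Example flow against a running server:
#   store_id=$(create_store openfga-demo)
#   model_id=$(write_model "$store_id")
#   write_tuple "$store_id" "$model_id" user:anne reader document:roadmap
#   check_access "$store_id" "$model_id" user:anne reader document:roadmap
```

With this model, the final check should report true for user:anne, and false for a user who has no reader tuple on document:roadmap.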

Don't hesitate to browse the official Documentation and API Reference.

The MySQL storage engine has a lower length limit for some properties of a tuple compared with other storage backends. For more information see the docs.

OpenFGA's MySQL Storage Adapter was contributed to OpenFGA by @twintag. Thanks!

The core OpenFGA service has been in use by Okta FGA in production since December 2021.

OpenFGA's Memory Storage Adapter was built for development purposes only and is not recommended for a production environment, because it is not designed for scalable queries and has no support for persistence.

You can learn about more organizations using OpenFGA in production here. If your organization is using OpenFGA in production please consider adding it to the list.

The OpenFGA team will do its best to address all production issues with high priority.

See CONTRIBUTING.

We hold a monthly meeting to interact with the community, collaborate and receive/provide feedback. You can find more details, including the time, our agenda, and the meeting minutes here.