Hey Jacob,

I had a random idea about predicting satellite images... it's probably really silly!

Background

The idea is slightly inspired by watching Yannic Kilcher's video about AlphaFold 2 last night (whilst drinking a couple of glasses of wine, so I might have gotten over-excited!). It turns out that, when predicting protein structure, it's really useful to compare the amino acid sequence you're interested in with amino acid sequences from other species. (DeepMind don't take credit for this idea; it was known before DeepMind began work on AlphaFold.) By looking at which amino acids have been conserved by evolution, you can make some guesses about the 3D structure of the protein. So, at inference time, before AlphaFold does any clever ML, it searches through terabytes of genetic databases to find "similar" amino acid sequences, and then feeds those "multiple sequence alignments" (MSAs) into the ML model (along with the amino acid sequence you're interested in, of course). AlphaFold 1 extracted hand-crafted features from those MSAs. AlphaFold 2 instead uses self-attention to figure out what's most important in those other sequences (no hand-crafted features).

In forecasting, people use vaguely similar tricks. In the National Grid ESO control room today, they manually pick a couple of "similar" days from the recent past to help them predict national electricity demand (here's a screenshot from their "Demand Predictor", which National Grid ESO shared the day after the England vs Denmark Euro 2020 football game).

And the forecasting literature talks about "analog ensembles" (e.g. Delle Monache et al., 2013), which trawl through historical weather data to find "analogs" (similar past events) to the weather you're trying to forecast.
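For anyone unfamiliar with analog ensembles, here's a heavily simplified toy sketch of the core idea. It's an illustration only, not the method from the paper (whose similarity metric is more involved than the plain Euclidean distance used here). It assumes each past situation is summarised by a vector of predictors, and simply returns what was observed after the k nearest ones; `analog_ensemble` is a made-up name.

```python
import numpy as np

def analog_ensemble(hist_features, hist_outcomes, current_features, k=5):
    """Toy analog ensemble: return the outcomes of the k most similar past situations.

    hist_features:    (N, F) predictor vectors for past situations (e.g. NWP temperature, wind, humidity)
    hist_outcomes:    (N, ...) what was actually observed after each past situation
    current_features: (F,) predictor vector for the situation we want to forecast
    """
    # Standardise each predictor so no single variable dominates the distance.
    mean = hist_features.mean(axis=0)
    std = hist_features.std(axis=0) + 1e-8
    hist = (hist_features - mean) / std
    cur = (current_features - mean) / std
    # Euclidean distance from the current situation to every past situation.
    dists = np.linalg.norm(hist - cur, axis=1)
    # The k nearest "analogs", closest first; their outcomes form the ensemble.
    idx = np.argsort(dists)[:k]
    members = hist_outcomes[idx]
    return members, members.mean(axis=0)

# Tiny made-up example: four past situations with two predictors each.
hist = np.array([[0.0, 0.0], [1.0, 1.0], [5.0, 5.0], [10.0, 10.0]])
outcomes = np.array([1.0, 2.0, 3.0, 4.0])
members, forecast = analog_ensemble(hist, outcomes, np.array([1.0, 1.0]), k=2)
print(members, forecast)  # [2. 1.] 1.5
```

The ensemble members give a spread of plausible outcomes, and their mean gives a single point forecast.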

The idea

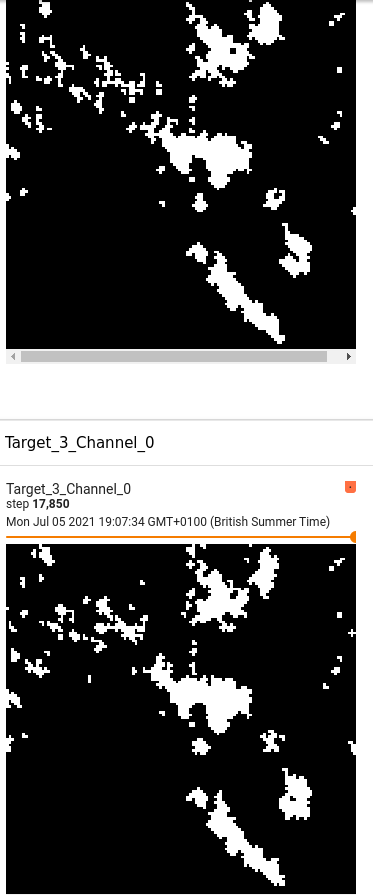

That got me thinking: maybe we could use a vaguely similar trick for predicting how clouds will move and change over the next few hours. Maybe there's a way, at inference time, to find "similar" image sequences in the entire historical dataset, and then extract useful information from those "similar" sequences to help the model predict the future.

But I have little idea how to actually do this!

The conceptually simplest approach might be to run self-attention over the entire historical dataset, but that's orders of magnitude more data than any attention mechanism can handle (100,000 timesteps × 128 pixels × 128 pixels × 12 channels ≈ 20 billion values)!
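To put rough numbers on that, here's some simple arithmetic using the figures above. Treating each timestep, or each pixel, as one attention "token" is just an illustrative assumption.

```python
# Rough size of the full historical dataset, using the numbers above.
timesteps, height, width, channels = 100_000, 128, 128, 12

values = timesteps * height * width * channels
print(f"{values:,} values")  # 19,660,800,000 values

# Vanilla self-attention scores every token against every other token,
# so its cost grows with the square of the number of tokens.
# Even if each whole timestep were a single token:
print(f"{timesteps**2:,} pairwise scores")  # 10,000,000,000 pairwise scores

# If every pixel of every timestep were a token:
pixel_tokens = timesteps * height * width
print(f"{pixel_tokens**2:.1e} pairwise scores")  # 2.7e+18 pairwise scores
```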

But maybe there's a middle ground... Maybe we hand-code filters to select, say, 5 "vaguely similar" sequences from the historical dataset (e.g. only considering historical data from the same season, the same geographical location and roughly the same time of day, and perhaps also comparing numerical weather prediction variables like mean temperature, wind speed and humidity). Or maybe there's a way to learn some or all of those filters. Or, like in self-attention, we could pre-compute a "key" (using a learnt neural network) for each historical image sequence, and compare those keys against a "query" computed from the current sequence at inference time.
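A minimal sketch combining the hand-coded filters and the key/query idea, under loudly hypothetical assumptions: the archive is random placeholder data, each historical sequence is summarised by a 64-number vector, the only metadata is day-of-year and hour, and the "learnt" key and query networks are stand-in random linear projections (in practice they'd be trained). All names here are made up for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# --- Hypothetical historical archive (random placeholders, not real data) ---
N, D_IN, D_KEY = 1000, 64, 16
day_of_year = rng.integers(1, 366, size=N)  # 1..365
hour_utc = rng.integers(0, 24, size=N)      # 0..23
summaries = rng.normal(size=(N, D_IN))      # e.g. downsampled, flattened frames

def circular_diff(a, b, period):
    """Smallest absolute difference on a circle (e.g. day 365 vs day 1 is 1 day apart)."""
    d = np.abs(a - b) % period
    return np.minimum(d, period - d)

def hand_coded_filter(day, hour, max_days=30, max_hours=2):
    """Boolean mask of historical sequences from roughly the same season and time of day."""
    return (circular_diff(day_of_year, day, 365) <= max_days) & (
        circular_diff(hour_utc, hour, 24) <= max_hours
    )

# Stand-ins for learnt key/query networks: fixed random linear maps.
W_key = rng.normal(size=(D_IN, D_KEY)) / np.sqrt(D_IN)
W_query = rng.normal(size=(D_IN, D_KEY)) / np.sqrt(D_IN)

# Keys are pre-computed once, offline, for the whole archive.
keys = summaries @ W_key

def retrieve(current_summary, day, hour, k=5):
    """Indices of up to k archive sequences that pass the filter, best match first."""
    query = current_summary @ W_query
    scores = keys @ query / np.sqrt(D_KEY)  # scaled dot-product, as in attention
    scores = np.where(hand_coded_filter(day, hour), scores, -np.inf)
    top = np.argsort(-scores)[:k]
    return top[np.isfinite(scores[top])]  # drop anything the filter excluded

similar = retrieve(summaries[0], day_of_year[0], hour_utc[0])
print(similar)
```

Here the cheap filter prunes the archive first and the (hypothetically learnt) keys rank what's left; with a trained encoder, the ranking would reflect whatever notion of "similar" helps the forecaster most.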

But, even once we've got a set of, say, 5 "vaguely similar" sequences, I'm not entirely sure how best to let the ML model extract useful info from them (not least because these "similar" sequences won't be perfectly aligned in space or time; far from it, in fact). Maybe we could just use a time-series model which sees one timestep at a time, where the input at each timestep is the corresponding frame from every "similar" sequence, concatenated as channels (but that's unlikely to work because the sequences won't be aligned in time). Or maybe something like The Perceiver could handle 5 "similar" image sequences, each image at 128x128 (for a total of 5 × 128 × 128 = 81,920 input dimensions per timestep, per channel!). But The Perceiver can't predict a full image (let alone a full image sequence), so maybe the output from The Perceiver could condition the generator in a GAN?!? (This is getting complicated!)
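A toy sketch of the "concatenate as channels" option, assuming each similar sequence is a NumPy array shaped (timesteps, channels, height, width); that layout and the function name are hypothetical. It naively truncates everything to the shortest sequence, which is exactly the "assume they're aligned in time" shortcut that probably won't hold in practice. It also confirms the 81,920 figure for five single-channel 128x128 frames.

```python
import numpy as np

def stack_as_channels(similar_seqs):
    """Concatenate the t-th frame of every "similar" sequence along the channel axis.

    similar_seqs: list of arrays, each shaped (T, C, H, W).
    Returns an array shaped (min T, len(similar_seqs) * C, H, W).
    Naively assumes frame t of each sequence corresponds to frame t of the others.
    """
    T = min(seq.shape[0] for seq in similar_seqs)  # truncate to the shortest sequence
    return np.concatenate([seq[:T] for seq in similar_seqs], axis=1)

# Five hypothetical single-channel 128x128 sequences of different lengths.
rng = np.random.default_rng(0)
seqs = [rng.random((12 + i, 1, 128, 128)) for i in range(5)]
stacked = stack_as_channels(seqs)
print(stacked.shape)    # (12, 5, 128, 128)
print(stacked[0].size)  # 81920
```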

Anyway... sorry for this epic message! I'm just thinking out loud. There's absolutely no obligation to actually try any of this! It could well be a dumb idea. And, of course, the models you've implemented should already learn important stuff from historical data during training!