Summary:

Material Canvas (MC) will empower users and eliminate pain points associated with hand authoring material types, materials, and shaders. It will offer intuitive, easy-to-use, node-based visual scripting interfaces that are consistent with other node-based tools in O3DE. Through MC, users will be able to define modular, material- and shader-centric nodes, create graphs by dragging the nodes onto a 2D grid, and make connections between node input and output slots to control the flow of logic and data. Graphs will be automatically transformed into functional shader, material type, and material assets that otherwise require hand authoring. Workflows like this have been the standard for years in many content-creation tools and game engines. They are used for creating shaders, materials, particle effects, visual effects, animation sequences, and game logic, and have many other applications.

What is the relevance of this feature?

O3DE and the Atom rendering engine provide many flexible, data driven, but complex systems like scenes, shaders, materials, passes, and image processing that bridge between high level rendering features and low level render hardware interfaces. Most of the data for controlling these systems is produced by manually editing JSON text files. The exception is shader code, which is written in AZSL, a custom, HLSL-derived language that can be cross-compiled to different target platforms. Hand authoring any of this data can be daunting and prone to error. Users must learn the syntax, structures, properties, data types, supported values, and how all the pieces fit together. This requires familiarity with the corresponding systems, reviewing C++ code, dissecting or copying examples, and reading whatever user and technical documentation is available.

In addition to that, the data cannot be validated, previewed, or used in the engine until it has been saved, compiled by the Asset Processor (AP), and loaded by the systems that use it. The turnaround time, or "compile time", for this varies based on the AP queue, asset type, asset size, asset complexity, asset dependencies, asset builder performance, AZSL compiler, and other factors. The amount of time it takes to process an asset directly impacts the ability to view it in the engine and make iterations based on the results. This delay may not be as noticeable or impactful for some assets but is exacerbated for shaders and materials in relation to the number of other assets that are automatically reprocessed whenever these low-level dependencies change.

MC can resolve many, but not all, of these issues by hiding most of the data and complexity behind visual editing, fast feedback, automatic validation, and live previews, so that users no longer need to study and edit multiple JSON or AZSL files. This will reduce the learning curve, barrier to entry, and potential for human error.

Example Shader and Material Type Assets

https://github.com/o3de/o3de/tree/development/Gems/Atom/Feature/Common/Assets/Materials/Types

Feature design description:

O3DE already includes multiple node-based tools. Script Canvas is widely used for creating game logic and is being extended for other purposes. Landscape Canvas is used for managing connections between networks of entity and component references for systems like gradients, dynamic vegetation, and terrain. These tools are both built on top of the Graph Canvas (GC) gem that provides UI and systems for managing, rendering, and interacting with node graphs. Landscape Canvas also uses the Graph Model (GM) gem, which attempts to hide some of the complexity of GC’s numerous buses by adapting it to a more conventional set of object-oriented classes.

O3DE and Atom also include a dedicated Material Editor (ME) and other related features to create, customize, and manage material assets for use in the engine. Within ME, material source files, which are also represented in JSON, can be created, opened, and edited in a tabbed, multi-document/view workspace. ME contains a customizable viewport that displays a real-time preview of materials and any changes made to them. The viewport has options for selecting different models and lighting presets to show effects of the material on different surfaces in different lighting conditions. It informs users about external changes, gives them the options to apply those external changes to open materials, supports undo and redo, has its own asset browser filtered to the most relevant asset types, is fully automatable and extensible via Python and PyQt, and has several other features.

All of these tools are established, continuously maintained, hardened, extended, and have gone through many revisions and reviews with stakeholders.

MC will assimilate the systems, features, UI, UX, and workflows developed for these tools, operating in an environment similar to ME. The most obvious UI differences from ME are that the main documents and views center around the graph, the viewport is a dockable window, and the controls from the other node-based tools like the node palette, mini map, and bookmarks are integrated. Other dialogs or wizards may be developed as part of MC to help extend the library of node definitions and configure other data that informs the code generation process.

By taking advantage of these existing systems, MC will give users a familiar experience, environment, and workflows for authoring shaders and materials using node graphs. All shader and material related assets will be generated from the graph as changes are made to it.

A very basic example would involve dragging in an output node representing a standard PBR lighting model, dragging in a color variable node (internally it is a float4 with UI to visualize the color), setting it to red, and connecting it to the base color input of the output node.

A more detailed description of workflow can be found in Appendix A.

Data Driven Nodes

In most cases, MC nodes will be defined using JSON, with settings for include files and lines of AZSL code that the generator will use to assemble the final shader program. Slot names, not to be confused with display names, on the node must match variable names in the AZSL so the generator can perform substitutions. The node AZSL will generally include calls to user defined functions, intrinsic functions, operators, etc. However, because the generator is primarily performing text substitutions and insertions, node AZSL can potentially contain anything that can be inserted inline in the generated code that does not break it. If segments of the generated code can be applied in different locations, the same system can be used for vertex shader inputs, the material SRG, struct, class, and function definitions, declarations, and function calls.
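
The slot-name substitution described above could be sketched roughly as follows. This is only an illustration using the standard library. The helper name `SubstituteSlotNames`, the snippet, and the generated variable names are hypothetical and are not part of AtomTF. The sketch replaces whole identifiers only, so a slot named `inX` is not rewritten inside a longer identifier such as `inXScale`.

```cpp
#include <cassert>
#include <cctype>
#include <string>
#include <unordered_map>

// Hypothetical helper: replaces every whole identifier in `snippet` that
// matches a slot name with the unique variable name chosen by the generator.
std::string SubstituteSlotNames(
    const std::string& snippet,
    const std::unordered_map<std::string, std::string>& slotToVariable)
{
    auto isIdentifierChar = [](char c) {
        return std::isalnum(static_cast<unsigned char>(c)) != 0 || c == '_';
    };

    std::string result;
    std::size_t begin = 0;
    while (begin < snippet.size())
    {
        if (!isIdentifierChar(snippet[begin]))
        {
            // Punctuation and whitespace are copied through unchanged.
            result += snippet[begin++];
            continue;
        }

        // Consume a complete identifier (or numeric) token before the lookup.
        std::size_t end = begin;
        while (end < snippet.size() && isIdentifierChar(snippet[end]))
        {
            ++end;
        }
        assert(end > begin); // the scan always consumes at least one character

        const std::string token = snippet.substr(begin, end - begin);
        const auto found = slotToVariable.find(token);
        result += (found != slotToVariable.end()) ? found->second : token;
        begin = end;
    }
    return result;
}
```

Given a node snippet such as `float outValue = inX * inXScale;` and a map from `inX` and `outValue` to per-node variable names, only the two exact slot names are rewritten. A real generator would also need to decide how to treat member accesses and comments, which this sketch does not attempt.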

Nodes representing material properties or shader inputs might have other properties that can be edited in a node inspector, like enumeration values, ranges, group names, etc. These nodes will map directly to members in the material SRG and properties in the material type and material. It might be possible to automatically determine grouping and order from the organization in the graph.
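
To illustrate the mapping from variable nodes to material SRG members, here is a minimal sketch. `VariableNode` and `GenerateMaterialSrg` are hypothetical names, and the `SRG_PerMaterial` semantic and `m_` member prefix are assumed to follow the pattern seen in existing Atom material types. The actual generator would populate MaterialTypeSourceData as well, which is not shown.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical, simplified stand-in for a variable node in the graph.
struct VariableNode
{
    std::string m_azslType; // for example "float4"
    std::string m_name;     // for example "baseColor"
};

// Emits a material SRG declaration with one member per variable node.
std::string GenerateMaterialSrg(const std::vector<VariableNode>& variables)
{
    std::string code = "ShaderResourceGroup MaterialSrg : SRG_PerMaterial\n{\n";
    for (const VariableNode& variable : variables)
    {
        // Every member needs both a type and a name to be valid AZSL.
        assert(!variable.m_azslType.empty() && !variable.m_name.empty());
        code += "    " + variable.m_azslType + " m_" + variable.m_name + ";\n";
    }
    code += "}\n";
    return code;
}
```

Deriving member names directly from node names keeps the graph as the single source of truth, at the cost of requiring unique, identifier-safe node names.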

There will be at least one variable node type for every supported material value data type. This would allow for variations of one data type surfaced in different ways, like colors versus vectors. Alternative options may be considered to simplify variable management, like the variable manager from Script Canvas.

Output Nodes (Lighting Model)

The output node surfaces parameters affecting the lower-level lighting model shader implementation and contains the rest of the data needed for generation. It lists all the include files supporting the shading/lighting model, describes the organization of files, which passes are affected, input/output structures for each shader used in a pass, and other data needed to fill out ShaderSourceData and MaterialTypeSourceData structures.

Nodes With Multiple Outputs

GC and the DynamicNodeConfig support an arbitrary number of output slots. Variable, function, and other nodes need to support multiple output slots to access different members of complex types like structures and vectors. There must be a clean option for data driving this without extra code and ideally without node functions for every output. The generator should be able to append the member name to whatever is returned by the node function. AZSL also supports classes with member functions, so another option is for the code generator to output complete classes with accessor and mutator functions that input and output slots map to.
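
One way to picture the "append the member name" approach is the sketch below. It is not taken from the prototype. `MemberOutputSlot` and `GenerateMemberOutputs` are hypothetical, and the sketch assumes each output slot carries the member or swizzle it exposes.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical description of one output slot that exposes part of a node's result.
struct MemberOutputSlot
{
    std::string m_slotType;   // AZSL type of the slot, for example "float"
    std::string m_slotName;   // unique variable name generated for the slot
    std::string m_memberName; // member or swizzle to append; empty means the whole value
};

// Emits one AZSL declaration per output slot by appending the slot's member
// name to the variable that holds the result of the node function.
std::vector<std::string> GenerateMemberOutputs(
    const std::string& resultVariable, const std::vector<MemberOutputSlot>& slots)
{
    assert(!resultVariable.empty());
    std::vector<std::string> lines;
    for (const MemberOutputSlot& slot : slots)
    {
        std::string expression = resultVariable;
        if (!slot.m_memberName.empty())
        {
            expression += "." + slot.m_memberName;
        }
        lines.push_back(slot.m_slotType + " " + slot.m_slotName + " = " + expression + ";");
    }
    return lines;
}
```

With this shape, a color node needs only one node function, and every additional output is a data entry rather than new code.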

Condition and Control Nodes

Branching can be implemented using nodes that resemble logic gates. The nodes should be able to select and output one of multiple inputs based on the value of an input flag.
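
As a rough sketch of what a selection node might generate, the function below builds a chained conditional expression. It assumes an integer selector input and uses a hypothetical helper named `GenerateSelectExpression`. Neither the helper nor the selector convention is part of the current prototype.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Builds an expression that yields inputs[i] when the selector equals i.
// The last input acts as the fallback for any other selector value.
std::string GenerateSelectExpression(
    const std::string& selector, const std::vector<std::string>& inputs)
{
    assert(!inputs.empty());

    // Start from the fallback and wrap it with one condition per earlier input.
    std::string expression = inputs.back();
    for (std::size_t i = inputs.size() - 1; i-- > 0;)
    {
        expression = "(" + selector + " == " + std::to_string(i) + ") ? " + inputs[i] +
            " : (" + expression + ")";
    }
    return expression;
}
```

For a two-input node this reduces to a single conditional operator. Whether the shader compiler turns it into a real branch or a select is outside the scope of this sketch.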

Code Generation

The code generation process mostly involves performing inline insertions of include statements and shader code samples into the final AZSL file, performing search and replace string operations along the way. There are C++ data structures representing all of the other files that need to be produced. They will be populated with data from all of these supporting files.
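
The inline insertion step could look something like the following sketch, which swaps a marker in template text for a block of generated lines. The marker string `O3DE_GENERATED_INCLUDES` and the helper `ReplaceMarker` are made up for illustration. The real marker syntax is still one of the open layout questions.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Replaces every occurrence of `marker` in `text` with the given lines,
// joined by newlines. The line ending that follows the marker is preserved.
std::string ReplaceMarker(
    std::string text, const std::string& marker, const std::vector<std::string>& lines)
{
    assert(!marker.empty()); // an empty marker would match everywhere

    std::string block;
    for (std::size_t i = 0; i < lines.size(); ++i)
    {
        block += (i == 0 ? "" : "\n") + lines[i];
    }

    std::size_t position = 0;
    while ((position = text.find(marker, position)) != std::string::npos)
    {
        text.replace(position, marker.size(), block);
        position += block.size(); // skip the inserted text so it is never rescanned
    }
    return text;
}
```

The same function can serve for include statements, SRG members, and function bodies, as long as each kind of generated data has its own marker in the template.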

Multiple options are being considered for the layout of the data used to drive code generation.

Validation

GC will automatically validate that data types match between node connections. Additional validation can be done to assess the completeness and correctness of the graph. The tool could check for and prevent cyclic dependencies, report general errors, report shader compilation errors, and allow users to view generated code.
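
A check for cyclic dependencies can be done with a depth-first search that tracks which nodes are currently being visited. The sketch below uses plain integer node IDs and a hypothetical adjacency map instead of GC or GM types, so it only shows the shape of the check.

```cpp
#include <cassert>
#include <unordered_map>
#include <vector>

using NodeId = int;
// Maps each node to the nodes connected to its input slots.
using DependencyGraph = std::unordered_map<NodeId, std::vector<NodeId>>;

enum class VisitState { Unvisited, InProgress, Done };

bool VisitFindsCycle(
    const DependencyGraph& graph, NodeId node, std::unordered_map<NodeId, VisitState>& state)
{
    // Precondition: callers only visit nodes that have not been seen yet.
    assert(state[node] == VisitState::Unvisited);
    state[node] = VisitState::InProgress;

    const auto found = graph.find(node);
    if (found != graph.end())
    {
        for (NodeId dependency : found->second)
        {
            const VisitState dependencyState = state[dependency];
            if (dependencyState == VisitState::InProgress)
            {
                return true; // reached a node that is still on the current path
            }
            if (dependencyState == VisitState::Unvisited && VisitFindsCycle(graph, dependency, state))
            {
                return true;
            }
        }
    }

    state[node] = VisitState::Done;
    return false;
}

bool HasCycle(const DependencyGraph& graph)
{
    std::unordered_map<NodeId, VisitState> state;
    for (const auto& entry : graph)
    {
        if (state[entry.first] == VisitState::Unvisited && VisitFindsCycle(graph, entry.first, state))
        {
            return true;
        }
    }
    return false;
}
```

Running a check like this when a connection is attempted would let the tool reject the connection instead of reporting an error later during generation.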

Technical design description:

Requirements

- MC will be built on top of established frameworks like AZ Framework, AZ Tools Framework, Atom Tools Framework (AtomTF), GC, and GM.

- MC nodes representing variables, functions, inputs, outputs, and other supporting data sources must be data driven instead of hardcoded wherever possible, simple data structures, serialized in JSON, and thoroughly reflected to edit and behavior contexts to support editing, automation, and testing.

- MC must include basic reflected property editors for all supporting data sources except for AZSL.

- Graph traversal and data processing implementation for generating output data must be decoupled from the graph representation but directly accessible and executable on demand from within MC. This will allow the graph to be evaluated in different ways by different systems.

- MC will implement a data driven dynamic node management system that allows creating and customizing GC and GM nodes without additional C++ code.

- MC DynamicNodeConfig must be able to reference external data sources like AZSLI include files, AZSL code that may include markup, ShaderSourceData definitions, information about pass configuration, vertex and pixel shader input and output structures.

- MC will populate the node library and UI by enumerating and loading node configuration files stored on disk in the project.

- Any systems developed for MC that have a high likelihood of being needed elsewhere should automatically go to AtomTF.

Work Completed

https://github.com/o3de/o3de/pulls?page=1&q=is%3Apr+author%3Agadams3+is%3Aclosed+created%3A%3E2021-12-01

https://github.com/o3de/o3de/tree/development/Gems/Atom/Tools

The development time to create MC and other proposed tools will be greatly reduced because much of the groundwork has already been completed. There was an extensive series of incremental refactors to the Material Editor (https://github.com/o3de/o3de/tree/development/Gems/Atom/Tools/MaterialEditor). More than 70 files' worth of code and features were made generic and configurable and were migrated to AtomTF (https://github.com/o3de/o3de/tree/development/Gems/Atom/Tools/AtomToolsFramework).

Shader Management Console (SMC) (https://github.com/o3de/o3de/tree/development/Gems/Atom/Tools/ShaderManagementConsole) is a related tool that was originally created by duplicating the entire codebase from a much older version of ME. It is used for generating and managing shader variant lists containing many permutations of shader options that drive compilation of optimized versions of shaders. This refactor also involved cleaning up, optimizing, deleting a lot of boilerplate code, and bringing SMC up to standard and in synchronization with ME. Both of these projects now only contain a handful of files and will automatically benefit from improvements and fixes going forward.

The MC prototype project (https://github.com/o3de/o3de/tree/development/Gems/Atom/Tools/MaterialCanvas) was created after this work was completed. It has a small footprint of mostly application specific code. GC and GM are integrated and operational. This required a deep dive into Script Canvas and both gems to learn them and adapt them to work in a different environment. The prototype also implements initial, experimental support and test data for interactive, data driven nodes. It builds, runs, has a small library of test nodes created from node configuration files, and behaves exactly as a user would expect from something described as a cross pollination of Script Canvas and ME.

Much of the basic workflow is operational but the prototype is not complete.

High Level Development Plan

This is a high level breakdown of tasks to be completed. Some of the work is order independent and parallelizable, especially the work in later milestones to create a comprehensive node library for making useful graphs.

Milestone 1: New tools can be built on foundation of ME (Complete)

- Refactor ME and SMC, moving all extracted features and systems to AtomTF in order to support development of MC and other tools requiring similar features and workflows.

- Update all systems and buses to support multiple instances to unblock potential integration into the main editor.

- Update the viewport to support multiple instances within the same process.

- Implement support for multiple document types with multiple views within the same tool.

Milestone 2: MC prototype project running with GC, GM, data driven nodes (Complete)

- Create initial MC project and bare bones application built on top of the refactored ME framework.

- Make the necessary changes to support integrating GC and GM into MC.

- Implement document and view classes that adapt GC and GM into the document and view systems.

- Develop prototype system for data driven dynamic nodes that can work with GC and GM.

- Create test data to validate that dynamic node system and graph serialization successfully operate in the prototype.

Milestone 3: MC supports basic code generation (In Progress)

- Create this document.

- Design for material canvas template system using existing data structures and AZSL with markup.

- Design for general purpose mechanism to inject code generation data into DynamicNodeConfig.

- Implement support for include files, shader functions, variables, and supporting data in the DynamicNodeConfig.

- Implement support for serializing AZStd::any material value types in DynamicNodeConfig and the graph serializer.

- There are a couple of large blocks of code for loading and storing data types within an AZStd::any.

- This code was copied from the material component material assignment serializer but it does not support image assets.

- The code should be moved to a common location, or the JSON serializer could add native support for AZStd::any and AZStd::variant.

- Implement serialize, edit, and behavior context reflection for the required types.

- Primarily includes DynamicNodeConfig, ShaderSourceData, MaterialTypeSourceData.

- Create test data for testing and validating code generation.

- Scalar and vector variable nodes.

- A handful of function nodes for operating on scalar and vector variables.

- A basic output lighting model node with supporting shaders and configuration files.

- Test nodes to test and validate the generation process.

- Test graphs that use these nodes

- Implement code generation for variable, function, and lighting model output nodes.

- This will be implemented in C++ inside the MC project.

- It might be possible to implement this using a Python script if the necessary data is reflected. The main advantages of driving this with script are leaving the process open for extension and keeping it decoupled from the compiled code, so that the strategy for generating code can change or the same executable can be used for other applications.

Milestone 4: MC has testing and extended tooling

- Add missing support to the MC document class

- Capturing graph data for undo redo

- Exposing selected node data and other configuration so that it is surfaced in the document inspector

- Add functions to the document request bus to support automation and testing

- Add RPE/DPE based dialogs for creating and editing node configurations, ShaderSourceData, templates

- This will require edit and behavior context reflection of all of the types for which we plan to provide in-editor tools.

- The RPE will eventually be replaced with the DPE. When that happens, these tools will need to be revisited. There should be adapters in place for serialize and edit context reflected data types to work with the DPE.

- Work with QA to create a test plan and tests

- This will require changes to the Python test harness that was recently updated for ME.

- It will need to be further generalized to work with MC and other standalone tools like SMC and the soon to be proposed pass editor.

- Because MC will use AtomTF, GC, and GM, automation and testing will immediately be able to use the existing behavior context bound buses and data types, as proven with Landscape Canvas (LC).

- Some of the tests and cases that were written for ME can also be generalized to run against other tools built on the framework

Milestone 5: MC has extended node library

- Once the end to end workflow is validated and the node configuration format is hardened, it will be time to populate the node library.

- This is where the most support will be needed.

- The effort will be large but completely parallelizable because everything is data driven, automatically discovered and registered.

- It might be worthwhile to investigate writing a Python script to scrape the data from documentation or use the shader compiler to generate nodes for intrinsic functions.

- The node library can be extended with new nodes incrementally and indefinitely.

- This also means that the stock node library must be moderated but that would be done through GH and PRs.

DynamicNodeConfig

These are the very simple but critical data structures currently used for configuring dynamic nodes, the literal building blocks of MC. This is not complete but the data structures are serializable and produce interactive nodes. Strings are intentionally used and suitable for most fields except for the slot values. That will need to be switched to AZStd::any or another dynamic data type so the data can be assigned and serialized. It should not take more than a day to finalize these structures, fill out the contexts, and create a property editor that supports adding and removing slots, with UI to select from available types. Using AZStd::any will probably require another custom JSON serializer. Requiring a custom serializer will make it more difficult to maintain and extend with new or different data types.

https://github.com/o3de/o3de/tree/development/Gems/Atom/Tools/AtomToolsFramework/Code/Source/DynamicNode

namespace AtomToolsFramework

{

using DynamicNodeSettingsMap = AZStd::unordered_map<AZStd::string, AZStd::string>;

//! Contains all of the settings for an individual input or output slot on a DynamicNode

struct DynamicNodeSlotConfig final

{

AZ_CLASS_ALLOCATOR(DynamicNodeSlotConfig, AZ::SystemAllocator, 0);

AZ_RTTI(DynamicNodeSlotConfig, "{F2C95A99-41FD-4077-B9A7-B0BF8F76C2CE}");

static void Reflect(AZ::ReflectContext* context);

DynamicNodeSlotConfig(

const AZStd::string& name,

const AZStd::string& displayName,

const AZStd::string& description,

const AZStd::any& defaultValue,

const AZStd::vector<AZStd::string>& supportedDataTypes,

const DynamicNodeSettingsMap& settings);

DynamicNodeSlotConfig() = default;

~DynamicNodeSlotConfig() = default;

//! Unique name or ID of a slot

AZStd::string m_name = "Unnamed";

//! Name displayed next to a slot in the node UI

AZStd::string m_displayName = "Unnamed";

//! Longer description display for tooltips and other UI

AZStd::string m_description;

//! The default value associated with a slot

AZStd::any m_defaultValue;

//! Names of all supported data types that a slot can connect to

AZStd::vector<AZStd::string> m_supportedDataTypes;

//! Container of generic or application specific settings for a slot

DynamicNodeSettingsMap m_settings;

};

} // namespace AtomToolsFramework

namespace AtomToolsFramework

{

//! Structure used to data drive appearance and other settings for dynamic graph model nodes.

struct DynamicNodeConfig final

{

AZ_CLASS_ALLOCATOR(DynamicNodeConfig, AZ::SystemAllocator, 0);

AZ_RTTI(DynamicNodeConfig, "{D43A2D1A-B67F-4144-99AF-72EA606CA026}");

static void Reflect(AZ::ReflectContext* context);

DynamicNodeConfig(

const AZStd::string& category,

const AZStd::string& title,

const AZStd::string& subTitle,

const DynamicNodeSettingsMap& settings,

const AZStd::vector<DynamicNodeSlotConfig>& inputSlots,

const AZStd::vector<DynamicNodeSlotConfig>& outputSlots,

const AZStd::vector<DynamicNodeSlotConfig>& propertySlots);

DynamicNodeConfig() = default;

~DynamicNodeConfig() = default;

//! Save all the configuration settings to a JSON file at the specified path

//! @param path Absolute or aliased path where the configuration will be saved

//! @returns True if the operation succeeded, otherwise false

bool Save(const AZStd::string& path) const;

//! Load all of the configuration settings from JSON file at the specified path

//! @param path Absolute or aliased path from where the configuration will be loaded

//! @returns True if the operation succeeded, otherwise false

bool Load(const AZStd::string& path);

//! The category will be used by the DynamicNodeManager to sort and group node palette tree items

AZStd::string m_category;

//! Title will be displayed at the top of every DynamicNode in the graph view

AZStd::string m_title = "Unnamed";

//! Subtitle will be displayed below the main title of every DynamicNode

AZStd::string m_subTitle;

//! Settings is a container of key value string pairs that can be used for any custom or application specific data

DynamicNodeSettingsMap m_settings;

//! Input slots is a container of DynamicNodeSlotConfig for all inputs into a node

AZStd::vector<DynamicNodeSlotConfig> m_inputSlots;

//! Output slots is a container of DynamicNodeSlotConfig for all outputs from a node

AZStd::vector<DynamicNodeSlotConfig> m_outputSlots;

//! Property slots is a container of DynamicNodeSlotConfig for property widgets that appear directly on the node

AZStd::vector<DynamicNodeSlotConfig> m_propertySlots;

};

} // namespace AtomToolsFramework
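
To show why storing slot values in a type-erased container tends to need hand-written serialization, here is a small sketch that uses `std::any` as a stand-in for AZStd::any. The function name `ValueToJsonText` and the set of supported types are assumptions chosen for illustration. It does not use the O3DE JSON serialization system.

```cpp
#include <any>
#include <array>
#include <cassert>
#include <optional>
#include <sstream>
#include <string>

// Converts a type-erased slot value to JSON value text.
// Returns std::nullopt for empty values and for types that are not handled.
std::optional<std::string> ValueToJsonText(const std::any& value)
{
    std::ostringstream text;
    if (const bool* asBool = std::any_cast<bool>(&value))
    {
        text << (*asBool ? "true" : "false");
    }
    else if (const int* asInt = std::any_cast<int>(&value))
    {
        text << *asInt;
    }
    else if (const float* asFloat = std::any_cast<float>(&value))
    {
        text << *asFloat;
    }
    else if (const auto* asVector = std::any_cast<std::array<float, 4>>(&value))
    {
        text << "[";
        for (std::size_t i = 0; i < asVector->size(); ++i)
        {
            text << (i == 0 ? "" : ", ") << (*asVector)[i];
        }
        text << "]";
    }
    else
    {
        return std::nullopt;
    }
    return text.str();
}
```

Every new value type adds another branch here and a matching branch on the loading side, which is the maintenance cost noted above.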

DynamicNodeConfig Sample Data

{

"Type": "JsonSerialization",

"Version": 1,

"ClassName": "DynamicNodeConfig",

"ClassData": {

"category": "Math Operations",

"title": "Combine",

"inputSlots": [

{

"name": "inX",

"displayName": "X",

"description": "X",

"supportedDataTypes": [

"float"

],

"defaultValue": {

"$type": "float",

"Value": 0.0

}

},

{

"name": "inY",

"displayName": "Y",

"description": "Y",

"supportedDataTypes": [

"float"

],

"defaultValue": {

"$type": "float",

"Value": 0.0

}

},

{

"name": "inZ",

"displayName": "Z",

"description": "Z",

"supportedDataTypes": [

"float"

],

"defaultValue": {

"$type": "float",

"Value": 0.0

}

},

{

"name": "inW",

"displayName": "W",

"description": "W",

"supportedDataTypes": [

"float"

],

"defaultValue": {

"$type": "float",

"Value": 0.0

}

}

],

"outputSlots": [

{

"name": "outValue",

"displayName": "Value",

"description": "Value",

"supportedDataTypes": [

"float4"

]

}

]

}

}

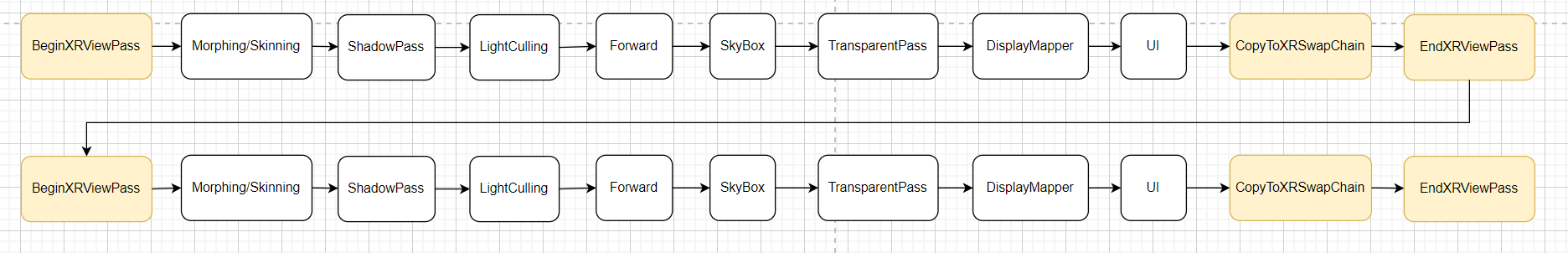
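
The supportedDataTypes lists in the sample suggest a simple connection rule: two slots can be connected if they share at least one data type name. The sketch below expresses that rule with a pared-down, hypothetical `SlotConfig` struct. In MC the actual check is performed by GC and GM, so this is only meant to make the data easier to read.

```cpp
#include <algorithm>
#include <cassert>
#include <string>
#include <vector>

// Simplified stand-in for DynamicNodeSlotConfig with only the fields used here.
struct SlotConfig
{
    std::string m_name;
    std::vector<std::string> m_supportedDataTypes;
};

// Returns true if the two slots share at least one supported data type name.
bool CanConnect(const SlotConfig& output, const SlotConfig& input)
{
    const std::vector<std::string>& inputTypes = input.m_supportedDataTypes;
    return std::any_of(
        output.m_supportedDataTypes.begin(), output.m_supportedDataTypes.end(),
        [&inputTypes](const std::string& type) {
            return std::find(inputTypes.begin(), inputTypes.end(), type) != inputTypes.end();
        });
}
```

Under this rule the `outValue` slot of the Combine node (float4) could not feed its own `inX` slot (float) without a conversion or member-access node in between.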

High Level Code Generation Logic

- The process will begin whenever the graph is opened, modified, or saved. This eliminates the need for an export button as part of the iteration and preview process.

- A request will be queued to generate the data, possibly throttled by some minimum interval to not flood the AP with requests.

- The generator collects all of the nodes from the graph and sorts them in execution and dependency order.

- Data from all of the variable nodes will be used to populate the material SRG and property groups in MaterialTypeSourceData structures.

- Ideally, all of the variable names in the SRG and material type can be automatically deduced from node names.

- Data will be loaded from the lighting model output node template files and updated with details specific to the graph being processed.

- Template files represent ShaderSourceData, MaterialTypeSourceData, AZSL, and other files that guide or need to be exported by the generation process.

- Template files can be deserialized into their corresponding classes.

- Template files have a filename prefix that will be replaced with the graph name when generated data is saved.

- Template files have an extension suffix to prevent them from being recognized and processed by the AP.

- Template files contain substitutable tokens or markers for inserting different types of generated data.

- AZSL code will be generated for each input slot on the lighting model output node. The generator will traverse the graph, collecting a list of all of the unique include files from each node, inserting the include files at a designated location in the AZSL file, replacing symbols for variable names in function node text and pasting it into the shader in the designated location.

- More advanced cases need additional consideration.

- Generating AZSL functions, structures, or classes for each node could possibly make the generation process easier and the code more readable.

- Then only class instances would need unique names.

- Having multiple inputs and outputs could also be handled with member functions.

- Once all of the structures have been filled out and buffers filled with shader code, everything will be saved in the same folder as the graph or a relative, intermediate asset folder.

- The AP will recognize and process the generated assets.

- The viewport will listen for ready and reload notifications for the generated material asset and apply it to the model. If the generated assets always resolve to the same asset IDs, then material hot-reloading will automatically handle this. As described above, the turnaround time for previewing assets that need to be built by the AP can vary but options exist to improve responsiveness if it proves to be an issue.

- ME uses the same classes that the material asset builder does to create preview assets in memory without using the AP. Updates to material properties are applied directly to the displayed material instance. This approach may not be practical for all asset types.
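
The traversal step in the list above, which orders nodes by dependency and gathers unique include files, might be sketched as follows. The `GraphNode` and `GenerationPlan` types and the function names are hypothetical simplifications, and the sketch assumes the graph has already been validated as acyclic.

```cpp
#include <cassert>
#include <string>
#include <unordered_map>
#include <unordered_set>
#include <vector>

// Hypothetical, simplified view of a graph node for generation purposes.
struct GraphNode
{
    std::vector<std::string> m_includes; // include files required by this node
    std::vector<int> m_inputs;           // IDs of nodes connected to this node's input slots
};

using NodeMap = std::unordered_map<int, GraphNode>;

struct GenerationPlan
{
    std::vector<int> m_nodeOrder;        // dependencies always precede their users
    std::vector<std::string> m_includes; // unique include files in first-use order
};

// Post-order depth-first visit: a node is appended only after all of its inputs.
void VisitDependenciesFirst(
    const NodeMap& nodes, int nodeId, std::unordered_set<int>& visited, std::vector<int>& order)
{
    if (!visited.insert(nodeId).second)
    {
        return; // already handled through another connection
    }
    const auto found = nodes.find(nodeId);
    assert(found != nodes.end()); // connections must reference existing nodes
    for (int inputId : found->second.m_inputs)
    {
        VisitDependenciesFirst(nodes, inputId, visited, order);
    }
    order.push_back(nodeId);
}

GenerationPlan BuildGenerationPlan(const NodeMap& nodes, int outputNodeId)
{
    GenerationPlan plan;
    std::unordered_set<int> visited;
    VisitDependenciesFirst(nodes, outputNodeId, visited, plan.m_nodeOrder);

    std::unordered_set<std::string> seenIncludes;
    for (int nodeId : plan.m_nodeOrder)
    {
        for (const std::string& include : nodes.at(nodeId).m_includes)
        {
            if (seenIncludes.insert(include).second)
            {
                plan.m_includes.push_back(include);
            }
        }
    }
    return plan;
}
```

Starting the traversal at the lighting model output node means that nodes which are present in the graph but not connected to the output never contribute code or include files.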

What are the advantages of this feature?

MC will excite, empower, and accelerate users that want to create content and develop new features for O3DE and their projects.

All of the tools built on the same framework will benefit from any enhancements and fixes made in the process of developing MC.

MC can be the foundation for future rendering centric, node-based tools built on the same framework. Hypothetical but very realistic and reasonable examples include editors for particles, passes, post effects, image processing, procedural generation, configuring asset pipelines, and generating other kinds of scripts. The developers of those tools could start from a template, customize their viewport, set up details for the document types and node configuration, and define logic for transforming the graphs into whatever the target data would be. In an ideal scenario, a lot of the hard coding and transformation will move to data or script, making one tool support many types of graphs.

What are the disadvantages of this feature?

There are no disadvantages to MC except for the time that it takes to develop and support it.

How will this be implemented or integrated into the O3DE environment?

MC will be integrated into O3DE initially as a standalone executable exactly like and in a parallel location to ME and SMC under https://github.com/o3de/o3de/tree/development/Gems/Atom/Tools . It will be developed using the same underlying framework, UI controls, UX, and workflows built for those tools. It will also incorporate GC and GM, which were used to build Script Canvas and Landscape Canvas.

Multiple options will be considered to address existing concerns over startup times for these executables. Startup times are primarily impacted by initializing unrelated gems that do unnecessary work in an isolated environment, connecting to the AP, and enumerating information from the asset catalog multiple times to populate controls that list selectable assets.

Some investigation and experimentation to improve startup times and integrate more seamlessly into the O3DE editor has already been done as part of exploration for another RFC proposal and prototype.

Options include:

- Review and refine the list of gems that should not auto load with the tools. This initially resulted in major improvements but dependencies may have changed. The tool does not control other modules with long load times. Those modules can be identified and optimized, which will also help launch times for the main editor.

- Restructure AtomTF based application project configurations, modules, and components so that they can build as both standalone executables and O3DE editor extensions. This was not feasible prior to a massive refactoring effort that was done earlier this year. ME was developed as a dedicated, standalone tool for users who wanted to focus on content creation and integration into workflows specific to materials without opening up the rest of the tools. At the time there were also other engineering and product related reasons for this request. Sandboxing the tool and its systems in their own process ensured that all systems could be developed and tested modularly and independently. However, because some engine systems and the asset processor are required, launch times were impacted. Changes from the aforementioned refactor can enable optional integration of multiple tools with multiple documents and views into the main editor with some effort.

- Launch the standalone executables as child processes of the O3DE editor when it launches, initially hiding their UI, just like the AP. This will make them available immediately upon request and ensure that they shut down with the editor when it closes. It might also be possible to pass a window ID through the command line so that compatible executables can mount inside of the editor UI.

- Consolidate the number of times project assets are enumerated and cache the results for the duration of execution

- Move away from using azasset and AnyAsset. They are a general purpose file extension and asset type for feeding JSON files through the AP and compiling them into binary streams without registering individual concrete asset types. These assets are not identifiable based on their asset type or extension. They must be loaded and require casting to identify the object. Systems that use this type often have hard coded asset references. ME used it as a convenience mechanism for lighting and model presets. ME also used a double file extension to identify them without opening them. That is still an unnecessary string compare while enumerating thousands of files. There are also usability concerns using the same file extension for different types.

Are there any alternatives to this feature?

While there might be alternatives, MC is in high demand. Internal and external customers and developers have been anticipating this workflow for years. Having familiar, empowering, accessible tools included with the engine is a necessity to improve the user experience and adoption.

Material X

Material X is an open source, open standard, cross platform, C++ SDK for exchanging and representing materials and shaders in a node graph described by a simple XML format. It’s integrated into multiple, commercial content creation tools and other projects but is not standard or fully supported across all of them. It does not currently provide any tooling and requires additional work to adapt it to the needs of Atom. There are also questions about its suitability for real time rendering requirements. There may still be opportunities for integration or converting between Atom and Material X data.

Simplification and Optimization

Regardless of MC, evaluate all systems, data formats, and APIs, and look for opportunities to simplify and optimize. One shining example of this was the refactor of the MaterialTypeSourceData structure to support “remixable material types”. Prior to that, the organization of the data structures and file format was disjointed and unintuitive for navigating between property sets and property definitions. ME originally needed to improvise by stitching all of the data together in the order that it was supposed to appear in the UI. There was also a massive amount of overlap and repetition between material types that used the same sets of properties or even multiple layers of the same repeated property data.

All of that was significantly improved because the organization of the data now translates directly to how it’s presented in the editor. Support was also added for including external references to eliminate thousands of lines of repetition in stock material types. This was a major win for hand authoring material types.

Reflection for Editing and Automation

Most serializable, Atom data structures have not been fully reflected with edit and behavior context bindings.

Edit context reflection exposes details of a class or other structure so that it can be inspected and edited in a reflected property editor. Developers can add display names for variables, detailed descriptions for classes and members, attributes for validating values, specifying options, ranges, and the type of widget used to edit each property. After setting that up, creating a property editor is as simple as instantiating a widget, setting a few parameters, passing in the object pointer and data type, and making it visible.

Behavior context reflection exposes classes, data structures, functions, buses, types, and values to Lua, Python, and Script Canvas.

Filling out all of the contexts will enable users that are familiar with the supported scripting languages to build tools around the data without touching C++ code or worrying about the format. This is the exact same process used for components. It is extremely simple if the structure is well organized and does not have any unsupported data types, and it is imperative for tooling, automation, and extensibility. MC will require some types, like ShaderSourceData, to be fully reflected.

MaterialTypeSourceData reflection and property editor

MaterialTypeSourceData reorganization made the layout much easier to digest for users and tools. It's a complex class but getting it completely reflected to the edit context with controls that auto populate options would enable using the standard reflected property editor to create material types. ME inspector properties and UI are generated by transforming the data in this structure into something that works with the reflected property editor.

Without the reflection, a non node based editor for MaterialTypeSourceData could be created based off of the material document class. Users would be able to assemble a material type by adding groups, properties, etc and configuring all of the data. The UI would be very similar to the entity inspector. Something similar was described here o3de/o3de#2598 but the user was asking to be able to add and remove groups, opting into and out of material type features, instead of expanding and collapsing them in a complex material type. That sounds intuitive and would free up screen real estate in ME, compared to the original enable button proposal.

Retire AnyAsset and azasset

Not specific to materials and shaders, we should retire AnyAsset and azasset then register and use explicit asset types. It is difficult to identify what the file represents at first glance. At runtime, there is also a performance cost associated with using AnyAsset because the asset type cannot be determined without loading it, attempting to cast, comparing the file extension, or matching another naming convention.

Expose APIs from individual asset builders

One thing to consider is the possibility that the AP shouldn't be used if instantaneous feedback is required and other options are available. If systems that provide custom asset types and builders expose APIs for working with the source data formats and building the target asset formats then custom tools can use them directly. For example, APIs have been exposed from the image processing gem that are used in multiple places for loading and manipulating images in source data formats. Many of the Atom asset types implement “builder” classes so that their product assets can be constructed in code. Other systems could follow this pattern and expose APIs and builder classes. Script bindings could also be added to all of them. This may not be a practical option in all cases depending on the complexity of the asset and builder.

Education and Evangelization

Create a series of user guides, technical documents, video tutorials, and sample content that continuously educate developers and users about how to use these systems, work with their data, and any improvements.

How will users learn this feature?

While user guides and technical documentation will help, learning MC should not require deep study or much effort depending on what users want to do. It will probably be more beneficial to provide samples and video tutorials.

Users that want to build shaders and material types from pre-existing nodes, templates, and examples should only need to open the application and start creating immediately, with minimal documentation.

Users that want to create custom nodes will require some familiarity with writing AZSL and concepts in the engine. MC will eventually have in-editor dialogs to configure all of the node inputs and outputs, required include files, and AZSL.

Users that want to create more extensive, lower-level shading code, templates for completely new lighting models, will require more in-depth knowledge about Atom and AZSL. Teaching these things is beyond the scope of MC but there should be supporting documentation for how to do these things in O3DE. MC can include dialogs for creating everything except the AZSL code definition for the lighting model or other general purpose shader code. Creating extensive, lower-level shader code is probably not impossible in the same environment but might be impractical and unmanageable with extremely complex graphs.

Users that want to automate or extend MC using Python or C++ might need examples and documentation about the available function, class, and bus bindings. Users may also need experience or general documentation about event buses, organization of the Python code, writing Python and C++ code, all of which are beyond the scope of MC.

Are there any open questions?

What is not supported by Material Canvas?

MC does not generate anything that cannot already be created by hand. MC is just providing tools and transforming one representation of the data to another based on user and developer sourced data sets that get stitched together and fill out data structures.

MC does not change the implementation or behavior of existing systems. Other initiatives can be developed in parallel that may improve performance, features, organization, and representation of materials and shaders. If data formats or the shading language change then the nodes and generator might need updating. The graphs should continue to work as long as node slots and connections don’t change.

MC does not improve asset processing times. Iteration and preview times can be improved by optimizing asset queue prioritization, material and shader related asset builders, asset dependencies, and the shader compiler. Application startup times can also be improved by optimizing the time it takes to launch and connect to the AP.

MC does not improve performance of shaders, materials, or rendering in general. In fact, assets generated by MC may initially perform worse. While some level of deduplication and optimization can be done as part of converting the graph to generated data, the data will not be much better organized or optimized than what’s defined in the graph and each node. Generated data will likely not be more performant than something optimized by someone familiar with the shading language, target hardware, compiler, and performance tradeoffs. Providing the ability to export the generated files would allow them to be copied and manually optimized if needed. A lot of that responsibility will fall onto developers adding nodes and the compiler. Ease of use will hopefully lead to wider adoption but also open the floodgates to complex use cases that highlight opportunities for optimizing the rest of the engine. User created performance degradations can be mitigated with performance analysis tools.

Where should generated data be saved?

Users may or may not want to pollute their project or source control branches with generated assets. This makes sense with transient product assets. However, other source types will need references to the generated files. Otherwise users will not be able to create new materials based on them. Therefore, it seems like a hard requirement that the generated files can be output to source folders. We have Python scripts and other tools that are capable of generating source material from other data and they are also checked into source control.

An option would be for the source material data to support referencing either material types or material graphs that generate material types. That would require material graphs to exist, be processed, and function independently of MC. This also does not cover references to the other generated data types. This would require major changes to many tools and systems like ME, material component, and material preview rendering that need access to MaterialTypeSourceData. For those reasons, this is not recommended or within scope.

What features that exist today does this not cover?

This does not yet include support for material functors. Material functors or Lua scripts mutate the material in different ways as values change. They are used within the editor to update property metadata for visibility, read-only state, value ranges, and other data. The options for functors are the same as the options for nodes. The template can come with functor script files that can be updated with string substitutions for whatever variables they need to affect. We could also provide a dialog that allows the user to set the rules for functors and output the Lua files from that. We could implement functor nodes, triggered by different conditions, that use the same generation process to also emit Lua code. The use cases and support would be much simpler than Script Canvas.

Initially, there will not be support for conditional or control flow nodes. Having support for function nodes or implementing nodes using shader code will allow users to implement their own behavior. However, if that functionality needs to be represented in the graph itself, options can be explored. It is preferred that we avoid use of the execution slot system provided by GC nodes because it requires manually directing the flow of logic with additional connections. Execution order can be determined by tracing dependencies between input and output slots on nodes in the graph.
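
As a minimal sketch of determining execution order by tracing dependencies, the connections between output and input slots can be treated as edges of a directed graph and sorted topologically. The example below uses the Python standard library `graphlib` module (Python 3.9 or later). The function name and the node names in the usage note are hypothetical, and the real graph model classes are not represented here.

```python
from graphlib import TopologicalSorter


def execution_order(connections):
    """Order nodes so every node runs after the nodes feeding its input slots.

    connections: iterable of (source_node, target_node) pairs, one per
    output-slot to input-slot connection in the graph.
    """
    sorter = TopologicalSorter()
    for source, target in connections:
        # target depends on source, so source must be emitted first.
        sorter.add(target, source)
    # static_order() raises graphlib.CycleError if the graph contains a loop.
    return list(sorter.static_order())
```

For example, `execution_order([("Color", "Multiply"), ("Multiply", "LightingModelOutput")])` places `Color` before `Multiply` and the output node last, without any execution slots or extra connections in the graph.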

What are other applications for this feature?

Appendix A - Workflow

- Users open MC to an empty workspace, surrounded by docked widgets for working with graphs, assets, and properties.

- Users see the node palette populated with all of the nodes defined in their project and active gems.

- Users can create custom node types by adding node configuration JSON and supporting files to gems or projects.

- Users can also create custom nodes using dialogs or other workflows to minimize editing text files.

- Users create or open graphs using the file menu or shortcuts.

- Users create new graphs by selecting from a library of existing graphs or templates, which they can also extend.

- Users see open documents represented by tabs at the top of the main window.

- Users see each open document tab contains a graph view in the workspace.

- Users add nodes to the graph view by dragging them from the node palette or using the context menu from within the graph view.

- Users see a node block appear with a title, description, input/output connection slots.

- Users see thumbnails or previews of colors, images, and other state on nodes that support it.

- Users see each node block is color coded and styled to distinguish between different types like variable, function, group, and output nodes.

- Users configure property values for inputs on each node and other property values in a node inspector.

- Users repeat this process to create additional nodes.

- Users click and drag to make connections between input and output slots.

- Users connect output slots from variable and function nodes to input slots on the output node.

- Users see the effects of their changes visualized and applied to a model in the viewport.

- Users find generated files saved in the same location as the graph or another designated location.

- Users save the graph and can reopen it in the same state.

- Workflow involving other windows and features provided from GC or ME can be found elsewhere.

Appendix B - Dynamic Node Configuration and Shader Generation Data Notes

Dynamic nodes and their configurations data-drive most aspects of graphs in Material Canvas (MC). Dynamic node configurations specify all of the data for the appearance of a node, the name, description, category, etc. They also provide information about the layout, data types, and default values for all of the slots on a node and what they can connect to in a graph.

Dynamic nodes must be able to provide other details about the objects or code that they represent. Those details will feed into the code generation process in MC.

This should be achievable by including a basic table inside the configuration. Each entry in the table will provide settings for include files, code snippets, or other information that will be assembled into the final output.

In case shader code, or some other complex string, gets included inline the data will need to be escaped. Having a tool that allows entering the text without escape characters would be beneficial. Reading and writing the escape characters could then be left to the JSON serializer. If the embedded code is long or complicated then it’s probably better to put it in an include file.
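
To illustrate leaving the escape characters to the JSON serializer, here is a small Python sketch using the standard `json` module. The shader snippet is made up for the example, and the `settings` and `invoke` keys are only assumed to match the layout shown in the examples in this appendix.

```python
import json

# Hypothetical AZSL-like snippet containing quotes and a line break.
azsl_snippet = 'float3 color = tex.Sample(samp, uv).rgb; // "base" color\nreturn color * 2.0;'

# The serializer adds the escape characters for quotes and newlines.
config_text = json.dumps({"settings": {"invoke": [azsl_snippet]}}, indent=4)

# Reading the configuration back restores the original, unescaped text.
restored = json.loads(config_text)["settings"]["invoke"][0]
```

A tool built this way lets the user type the code as is, and only the saved file contains sequences like `\"` and `\n`.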

All of this data would be used to fill out sections of the templates described here: Material Canvas Lighting Model Output Node and Templates.

Examples

"settings": {

"definition" : ["optionally define functions, structures, classes, preprocessor statements, specific to this node that get implanted in a common, global space in lieu of an include file"],

"dependency" : ["path to another file that’s required for generation, like template files"],

"include" : ["path to an include file to be inserted into AZSL code"],

"initialize" : ["optionally declare/initialize variables before they are used"],

"invoke" : ["code snippet that will be modified and injected into the generated code"]

}
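
One possible way to assemble these tables, sketched in Python under several assumptions: each node configuration is already loaded as a dictionary, nodes are visited in execution order, and repeated `include`, `dependency`, and `definition` entries should only be emitted once. The function name `collect_settings` is hypothetical and the generator may organize this differently.

```python
def collect_settings(nodes_in_order):
    """Merge the per-node "settings" tables into one table for the generator.

    Entries keep the order of the nodes they came from. Entries under the
    keys in unique_keys are de-duplicated because several nodes may share
    the same file or definition.
    """
    unique_keys = {"include", "dependency", "definition"}
    merged = {}
    for node in nodes_in_order:
        for key, entries in node.get("settings", {}).items():
            bucket = merged.setdefault(key, [])
            for entry in entries:
                if key in unique_keys and entry in bucket:
                    continue  # already collected from an earlier node
                bucket.append(entry)
    return merged
```

The `initialize` and `invoke` entries are kept per node, even when identical, because each node instance contributes its own statements to the generated code.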

Appendix C - Lighting Model Output Node and Templates Notes

Summary

Instead of a new class to represent the template, my recommendation is to provide folders containing the dynamic node configuration file along with partially filled out versions of the files to be generated. The filenames and content will contain markup or tokens that can be replaced by the generator. The template files will be used similarly to what the engine does with project, gem, component, and other templates.

The lighting model output node configuration will store a list of all of the included files and any other dependencies. Other than including the dependencies, and maybe a unique extension, this node can probably be treated like any other function node.

Requirements

The node folder contains stubbed out versions of all of the files that will be generated. These files would:

Have a special extension suffix so they don't get picked up by the AP

Be in the exact same format as the final data so they can be loaded, saved, and possibly edited using existing classes

Contain markup or some form of token that the generator will recognize and substitute with generated shader code, SRG definitions, include file lists
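
A minimal Python sketch of the token substitution follows. It assumes paired comment markers of the form `// <NAME` and `// NAME>`, as used in the template file examples later in this appendix, and it assumes the markers stay in the output as comments. The function name and the generated line in the usage note are hypothetical.

```python
def fill_marked_sections(template_lines, generated):
    """Insert generated lines between '// <NAME' and '// NAME>' marker comments.

    generated maps a marker key to a list of lines. The key is the text after
    '// <', for example 'GENERATED_MATERIAL_SRG'. Anything previously between
    a pair of markers is discarded.
    """
    output = []
    skipping = False
    for line in template_lines:
        stripped = line.strip()
        if stripped.startswith("// <"):
            key = stripped[len("// <"):].strip()
            indent = line[: len(line) - len(line.lstrip())]
            output.append(line)
            # Generated lines reuse the indentation of the opening marker.
            output.extend(indent + text for text in generated.get(key, []))
            skipping = True
        elif skipping and stripped.startswith("//") and stripped.endswith(">"):
            output.append(line)
            skipping = False
        elif not skipping:
            output.append(line)
    return output
```

Calling `fill_marked_sections(lines, {"GENERATED_MATERIAL_SRG": ["float3 m_baseColor;"]})` would place that one line between the matching markers and leave the rest of the file untouched. Because the markers survive, the same file could be regenerated later.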

Advantages

- No new classes are required

- No conversion between classes

- Less redundant data

- Clear outline of generated data

- Setting up the edit context for these classes should enable editing them with minimal UI

- New lighting model nodes can be created from code or a simple tool

- Existing material types can be adapted into lighting model nodes more easily than if new classes were required

Examples

Example Folder Contents

An example node folder resembling but not representing Base PBR that contains the node and template files.

Gems/Atom/Feature/Assets/Materials/GraphNodes/YourNodeName would contain:

- YourNodeName_LightingModel.materialcanvasnode

- MaterialGraphName.materialtype.template

- MaterialGraphName.material.template

- MaterialGraphName_ForwardPass.shader.template

- MaterialGraphName_ForwardPass.azsl.template

- MaterialGraphName_Common.azsli.template

- MaterialGraphName_ForwardPass.shadervariantlist.template

- MaterialGraphName_LowEndForward.azsl.template

- MaterialGraphName_LowEndForward.shader.template

- MaterialGraphName_LowEndForward.shadervariantlist.template

The generator will load these files using the existing classes or streams to replace all of the tokens with the generated data for include files, shader code, property lists.

MaterialGraphName will be replaced with the name of the graph document.

The template extension will be removed when these files are resaved in the output folder.
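
The two renaming rules above can be sketched in Python as follows. This is only an illustration. The function name `output_path`, the graph name, and the output folder in the usage note are hypothetical.

```python
from pathlib import PurePosixPath


def output_path(template_path, graph_name, output_folder):
    """Map a template file to the file the generator would save.

    'MaterialGraphName' in the file name becomes the graph document name and
    the trailing '.template' extension is dropped.
    """
    name = PurePosixPath(template_path).name.replace("MaterialGraphName", graph_name)
    if name.endswith(".template"):
        name = name[: -len(".template")]
    return str(PurePosixPath(output_folder) / name)
```

For a graph named `Brick`, `output_path("MaterialGraphName_ForwardPass.shader.template", "Brick", "Materials/Generated")` gives `Materials/Generated/Brick_ForwardPass.shader`. The same name replacement would also need to be applied to the file contents, since the templates reference each other by file name.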

Example File Contents

MaterialGraphName.materialtype.template

{

"description": "MaterialGraphName Material Type Template.",

"shaders": [

{

"file": "./MaterialGraphName_ForwardPass.shader",

"tag": "ForwardPass_EDS"

},

{

"file": "./MaterialGraphName_LowEndForward.shader",

"tag": "LowEndForward_EDS"

},

{

"file": "@gemroot:Atom_Feature_Common@/Assets/Materials/Types/Shaders/Shadow/Shadowmap.shader",

"tag": "Shadowmap"

},

{

"file": "@gemroot:Atom_Feature_Common@/Assets/Materials/Types/Shaders/Depth/DepthPass.shader",

"tag": "DepthPass"

},

{

"file": "@gemroot:Atom_Feature_Common@/Assets/Materials/Types/Shaders/MotionVector/MeshMotionVector.shader",

"tag": "MeshMotionVector"

}

],

"functors": [

],

"uvNameMap": {

"UV0": "Tiled",

"UV1": "Unwrapped"

}

}

MaterialGraphName_ForwardPass.shader.template

{

"Source" : "./MaterialGraphName_ForwardPass.azsl",

"DepthStencilState" :

{

"Depth" :

{

"Enable" : true,

"CompareFunc" : "GreaterEqual"

},

"Stencil" :

{

"Enable" : true,

"ReadMask" : "0x00",

"WriteMask" : "0xFF",

"FrontFace" :

{

"Func" : "Always",

"DepthFailOp" : "Keep",

"FailOp" : "Keep",

"PassOp" : "Replace"

},

"BackFace" :

{

"Func" : "Always",

"DepthFailOp" : "Keep",

"FailOp" : "Keep",

"PassOp" : "Replace"

}

}

},

"ProgramSettings":

{

"EntryPoints":

[

{

"name": "MaterialGraphName_ForwardPassVS",

"type": "Vertex"

},

{

"name": "MaterialGraphName_ForwardPassPS_EDS",

"type": "Fragment"

}

]

},

"DrawList" : "forward"

}

MaterialGraphName_Common.azsli.template

/*

* Copyright (c) Contributors to the Open 3D Engine Project.

* For complete copyright and license terms please see the LICENSE at the root of this distribution.

*

* SPDX-License-Identifier: Apache-2.0 OR MIT

*

*/

#pragma once

#include <Atom/Features/SrgSemantics.azsli>

#include <viewsrg.srgi>

#include <Atom/RPI/ShaderResourceGroups/DefaultDrawSrg.azsli>

#include <Atom/Features/PBR/LightingOptions.azsli>

#include <Atom/Features/PBR/AlphaUtils.azsli>

// <GENERATED_COMMON_INCLUDES

// GENERATED_COMMON_INCLUDES>

ShaderResourceGroup MaterialSrg : SRG_PerMaterial

{

// Auto-generate material SRG fields from MaterialGraphName

// <GENERATED_MATERIAL_SRG

// GENERATED_MATERIAL_SRG>

}

// <GENERATED_CLASSES

// GENERATED_CLASSES>

// <GENERATED_FUNCTIONS

// GENERATED_FUNCTIONS>

MaterialGraphName_ForwardPass.azsl.template

/*

* Copyright (c) Contributors to the Open 3D Engine Project.

* For complete copyright and license terms please see the LICENSE at the root of this distribution.

*

* SPDX-License-Identifier: Apache-2.0 OR MIT

*

*/

#include "Atom/Features/ShaderQualityOptions.azsli"

#include "MaterialGraphName_Common.azsli"

// SRGs

#include <Atom/Features/PBR/DefaultObjectSrg.azsli>

#include <Atom/Features/PBR/ForwardPassSrg.azsli>

// Pass Output

#include <Atom/Features/PBR/ForwardPassOutput.azsli>

// Utility

#include <Atom/Features/ColorManagement/TransformColor.azsli>

// Custom Surface & Lighting

#include <Atom/Features/PBR/Lighting/BaseLighting.azsli>

// Decals

#include <Atom/Features/PBR/Decals.azsli>

// ---------- Vertex Shader ----------

struct VSInput

{

// Base fields (required by the template azsli file)...

float3 m_position : POSITION;

float3 m_normal : NORMAL;

float4 m_tangent : TANGENT;

float3 m_bitangent : BITANGENT;

// Extended fields (only referenced in this azsl file)...

float2 m_uv0 : UV0;

float2 m_uv1 : UV1;

};

struct VSOutput

{

// Base fields (required by the template azsli file)...

// "centroid" is needed for SV_Depth to compile

precise linear centroid float4 m_position : SV_Position;

float3 m_normal: NORMAL;

float3 m_tangent : TANGENT;

float3 m_bitangent : BITANGENT;

float3 m_worldPosition : UV0;

float3 m_shadowCoords[ViewSrg::MaxCascadeCount] : UV3;

// Extended fields (only referenced in this azsl file)...

float2 m_uv[UvSetCount] : UV1;

};

#include <Atom/Features/Vertex/VertexHelper.azsli>

// One possibility for generating code for each input on the lighting model node would be to fill in stub functions for every input.

// Using this pattern would allow calling these functions from the main AZSL functions without the graph generated data.

// Vertex and pixel shader input and output structures might also need representation in the graph and to be generated.

// The inputs are going to be needed for anything interesting that varies with vertex and pixel attributes.

// VS/PS I/O might need to be represented in the graph and the structures generated from it like material SRG.

float3 GetBaseColorInput(VSOutput IN)

{

float3 result = float3(1.0, 1.0, 1.0);

// <GENERATED_VSINPUT_FUNCTION_BODY BaseColor

// GENERATED_VSINPUT_FUNCTION_BODY>

return result;

}

float GetMetallicInput(VSOutput IN)

{

float result = 1.0;

// <GENERATED_VSINPUT_FUNCTION_BODY Metallic

// GENERATED_VSINPUT_FUNCTION_BODY>

return result;

}

float GetSpecularF0Input(VSOutput IN)

{

float result = 1.0;

// <GENERATED_VSINPUT_FUNCTION_BODY SpecularF0

// GENERATED_VSINPUT_FUNCTION_BODY>

return result;

}

float3 GetNormalInput(VSOutput IN)

{

float3 result = float3(0.0, 1.0, 0.0);

// <GENERATED_VSINPUT_FUNCTION_BODY Normal

// GENERATED_VSINPUT_FUNCTION_BODY>

return result;

}

// Stub for the roughness input, which ForwardPassPS_Common calls below.

float GetRoughnessInput(VSOutput IN)

{

float result = 1.0;

// <GENERATED_VSINPUT_FUNCTION_BODY Roughness

// GENERATED_VSINPUT_FUNCTION_BODY>

return result;

}

VSOutput MaterialGraphName_ForwardPassVS(VSInput IN)

{

VSOutput OUT;

float3 worldPosition = mul(ObjectSrg::GetWorldMatrix(), float4(IN.m_position, 1.0)).xyz;

// By design, only UV0 is allowed to apply transforms.

OUT.m_uv[0] = mul(MaterialSrg::m_uvMatrix, float3(IN.m_uv0, 1.0)).xy;

OUT.m_uv[1] = IN.m_uv1;

// No parallax in BasePBR, so do shadow coordinate calculations in vertex shader

bool skipShadowCoords = false;

VertexHelper(IN, OUT, worldPosition, skipShadowCoords);

return OUT;

}

// ---------- Pixel Shader ----------

PbrLightingOutput ForwardPassPS_Common(VSOutput IN, bool isFrontFace)

{

// ------- Tangents & Bitangents -------

float3 tangents[UvSetCount] = { IN.m_tangent.xyz, IN.m_tangent.xyz };

float3 bitangents[UvSetCount] = { IN.m_bitangent.xyz, IN.m_bitangent.xyz };

if (o_normal_useTexture)

{

PrepareGeneratedTangent(IN.m_normal, IN.m_worldPosition, isFrontFace, IN.m_uv, UvSetCount, tangents, bitangents);

}

Surface surface;

surface.position = IN.m_worldPosition.xyz;

surface.normal = GetNormalInput(IN);

float3 baseColor = GetBaseColorInput(IN);

float metallic = GetMetallicInput(IN);

float specularF0Factor = GetSpecularF0Input(IN);

surface.SetAlbedoAndSpecularF0(baseColor, specularF0Factor, metallic);

surface.roughnessLinear = GetRoughnessInput(IN);

surface.CalculateRoughnessA();

// ------- Lighting Data -------

LightingData lightingData;

// Light iterator

lightingData.tileIterator.Init(IN.m_position, PassSrg::m_lightListRemapped, PassSrg::m_tileLightData);

lightingData.Init(surface.position, surface.normal, surface.roughnessLinear);

// Directional light shadow coordinates

lightingData.shadowCoords = IN.m_shadowCoords;

// Diffuse and Specular response (used in IBL calculations)

lightingData.specularResponse = FresnelSchlickWithRoughness(lightingData.NdotV, surface.specularF0, surface.roughnessLinear);

lightingData.diffuseResponse = float3(1.0, 1.0, 1.0) - lightingData.specularResponse;

// ------- Multiscatter -------

lightingData.CalculateMultiscatterCompensation(surface.specularF0, o_specularF0_enableMultiScatterCompensation);

// ------- Lighting Calculation -------

// Apply Decals

ApplyDecals(lightingData.tileIterator, surface);

// Apply Direct Lighting

ApplyDirectLighting(surface, lightingData);

// Apply Image Based Lighting (IBL)

ApplyIBL(surface, lightingData);

// Finalize Lighting

lightingData.FinalizeLighting();

float alpha = 1.0f;

PbrLightingOutput lightingOutput = GetPbrLightingOutput(surface, lightingData, alpha);

// Disable subsurface scattering

lightingOutput.m_diffuseColor.w = -1;

// Debug output for opaque objects

DebugModifyOutput(lightingOutput.m_diffuseColor, lightingOutput.m_specularColor, lightingOutput.m_albedo, lightingOutput.m_specularF0,

surface.normal, tangents[MaterialSrg::m_normalMapUvIndex], bitangents[MaterialSrg::m_normalMapUvIndex],

surface.baseColor, surface.albedo, surface.roughnessLinear, surface.metallic);

return lightingOutput;

}

ForwardPassOutput MaterialGraphName_ForwardPassPS_EDS(VSOutput IN, bool isFrontFace : SV_IsFrontFace)

{

ForwardPassOutput OUT;

PbrLightingOutput lightingOutput = ForwardPassPS_Common(IN, isFrontFace);

#ifdef UNIFIED_FORWARD_OUTPUT

OUT.m_color.rgb = lightingOutput.m_diffuseColor.rgb + lightingOutput.m_specularColor.rgb;

OUT.m_color.a = lightingOutput.m_diffuseColor.a;

#else

OUT.m_diffuseColor = lightingOutput.m_diffuseColor;

OUT.m_specularColor = lightingOutput.m_specularColor;

OUT.m_specularF0 = lightingOutput.m_specularF0;

OUT.m_albedo = lightingOutput.m_albedo;

OUT.m_normal = lightingOutput.m_normal;

#endif

return OUT;

}

Material Graph Template Alternative

This approach does not require any new classes to store the data or systems to convert it to the final form. Theoretically, all of the template assets should build as is if the template file extension were removed, no illegal characters are present in the filenames, and the markers in shader code are commented out.

We can also include ready to use graph templates that would be material canvas graph documents, preconfigured with the output node and possibly other nodes.