I am trying to train the pix2pixHD model for edge-to-image translation on my own dataset. I prepared my dataset as follows:

my_dataset
└── train
    ├── images
    │   ├── 0.jpg
    │   ├── 1.jpg
    │   ├── 2.jpg
    │
    └── seg_maps
        ├── 0.jpg
        ├── 1.jpg
        ├── 2.jpg

I also have a val set, which I omitted above.
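Assuming images and seg maps are matched by file name (as the `0.jpg` / `0.jpg` naming suggests), a quick sanity check before building the LMDB is that both folders contain the same file stems. The sketch below assumes the folder names from the layout above; `find_unpaired` is a hypothetical helper, not part of imaginaire. It also lists hidden entries (names starting with `.`) separately, since stray files of that kind are one possible, unconfirmed source of odd keys.

```python
from pathlib import Path


def find_unpaired(root):
    """Compare the images/ and seg_maps/ folders under `root` by file stem.

    Hypothetical helper (not part of imaginaire). Returns three sorted lists:
    stems that have an image but no seg map, stems that have a seg map but
    no image, and hidden entries (names starting with '.') in either folder.
    """
    root = Path(root)
    stems = {}
    hidden = []
    for sub in ("images", "seg_maps"):
        stems[sub] = set()
        for p in (root / sub).iterdir():
            if p.name.startswith("."):
                # Hidden files (e.g. .DS_Store) are reported, not paired.
                hidden.append(f"{sub}/{p.name}")
            elif p.is_file():
                stems[sub].add(p.stem)
    missing_seg_maps = sorted(stems["images"] - stems["seg_maps"])
    missing_images = sorted(stems["seg_maps"] - stems["images"])
    return missing_seg_maps, missing_images, sorted(hidden)
```

For example, `print(find_unpaired("my_dataset/train"))` should print three empty lists for a clean, fully paired split.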

When I run `train.py`, it prints the following and then crashes:

b'images/.'

Traceback (most recent call last):
  File "train.py", line 90, in <module>
    main()
  File "train.py", line 69, in main
    for it, data in enumerate(train_data_loader):
  File "/home/rapsodo/.conda/envs/transformer_env/lib/python3.7/site-packages/torch/utils/data/dataloader.py", line 363, in __next__
    data = self._next_data()
  File "/home/rapsodo/.conda/envs/transformer_env/lib/python3.7/site-packages/torch/utils/data/dataloader.py", line 403, in _next_data
    data = self._dataset_fetcher.fetch(index) # may raise StopIteration
  File "/home/rapsodo/.conda/envs/transformer_env/lib/python3.7/site-packages/torch/utils/data/_utils/fetch.py", line 44, in fetch
    data = [self.dataset[idx] for idx in possibly_batched_index]
  File "/home/rapsodo/.conda/envs/transformer_env/lib/python3.7/site-packages/torch/utils/data/_utils/fetch.py", line 44, in <listcomp>
    data = [self.dataset[idx] for idx in possibly_batched_index]
  File "/home/rapsodo/Desktop/587/imaginaire/imaginaire/datasets/paired_videos.py", line 302, in __getitem__
    return self._getitem(index, concat=True)
  File "/home/rapsodo/Desktop/587/imaginaire/imaginaire/datasets/paired_videos.py", line 240, in _getitem
    data = self.load_from_dataset(keys, lmdbs)
  File "/home/rapsodo/Desktop/587/imaginaire/imaginaire/utils/data.py", line 408, in load_from_lmdb
    key.encode(), data_type))
  File "/home/rapsodo/Desktop/587/imaginaire/imaginaire/datasets/lmdb.py", line 72, in getitem_by_path
    if img.ndim == 3 and img.shape[-1] == 3:
UnboundLocalError: local variable 'img' referenced before assignment

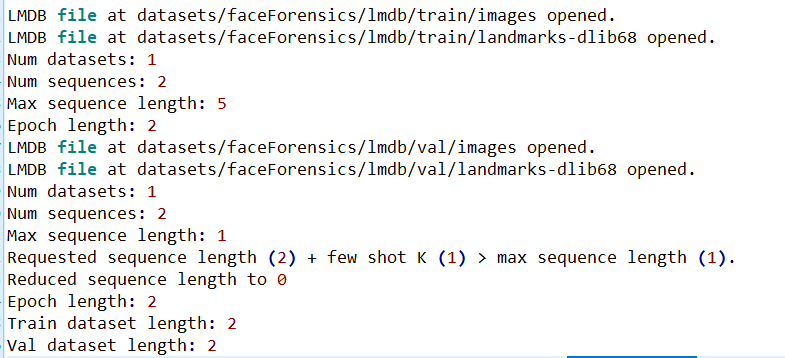
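The `UnboundLocalError` itself is generic Python behavior: a local variable that is assigned only inside a conditional branch is unbound if that branch is skipped. Given the printed key `b'images/.'` right before the traceback, one plausible but unconfirmed reading is that the lookup for that key returned nothing, so `img` was never assigned. The toy below uses a plain dict as a stand-in for the LMDB store and a string as a stand-in for a decoded image; `decode_buggy` and `decode_checked` are hypothetical names, not imaginaire code.

```python
def decode_buggy(store, key):
    """Toy reproduction: `img` is only bound when the lookup succeeds."""
    buf = store.get(key)  # dict stand-in for an LMDB lookup; None if missing
    if buf is not None:
        img = buf.decode("utf-8")  # stand-in for real image decoding
    return img  # raises UnboundLocalError when buf was None


def decode_checked(store, key):
    """Same lookup, but a missing key fails loudly with the key in the message."""
    buf = store.get(key)
    if buf is None:
        raise KeyError(f"key {key!r} not found in store")
    return buf.decode("utf-8")


store = {b"images/0": b"pixels"}
print(decode_checked(store, b"images/0"))
```

With the checked variant, a bad key such as `b'images/.'` surfaces as a `KeyError` naming the key instead of an error about `img`, which makes it easier to tell whether the LMDB was built with a different key layout than the dataset loader expects.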

While debugging the code, I realized that the LMDB dataset should be built with `paired=True`. Am I right?

Thank you.