A framework for interacting with tests within NeoVim.

This is early stage software.

See :h neotest for details on how neotest is designed and how to interact with it programmatically.

Neotest uses nvim-nio and plenary.nvim.

Most adapters will also require nvim-treesitter.

Neotest uses the CursorHold event. This depends on the updatetime setting, which is very high by default (4000 ms). Lowering it can lead to excessive writes to disk, because updatetime also controls how often the swap file is written.

It's recommended to use https://github.com/antoinemadec/FixCursorHold.nvim, which allows detaching updatetime from the frequency of the CursorHold event.

The repo claims it is no longer needed, but it is still recommended (See this issue).
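A minimal sketch of that setup in Lua, assuming FixCursorHold.nvim is installed. `g:cursorhold_updatetime` is the variable FixCursorHold.nvim reads; the value 100 (milliseconds) is only an example, not a value recommended by neotest.

```lua
-- Leave 'updatetime' (which also controls swap-file writes) at its default,
-- and let FixCursorHold.nvim fire CursorHold after 100 ms of inactivity instead.
vim.g.cursorhold_updatetime = 100
```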

Install with your favourite package manager alongside nvim-dap.

dein:

```vim
call dein#add("nvim-lua/plenary.nvim")
call dein#add("antoinemadec/FixCursorHold.nvim")
call dein#add("nvim-treesitter/nvim-treesitter")
call dein#add("nvim-neotest/nvim-nio")
call dein#add("nvim-neotest/neotest")
```

vim-plug:

```vim
Plug 'nvim-lua/plenary.nvim'
Plug 'antoinemadec/FixCursorHold.nvim'
Plug 'nvim-treesitter/nvim-treesitter'
Plug 'nvim-neotest/nvim-nio'
Plug 'nvim-neotest/neotest'
```

packer.nvim:

```lua
use {
  "nvim-neotest/neotest",
  requires = {
    "nvim-neotest/nvim-nio",
    "nvim-lua/plenary.nvim",
    "antoinemadec/FixCursorHold.nvim",
    "nvim-treesitter/nvim-treesitter"
  }
}
```

lazy.nvim:

```lua
{
  "nvim-neotest/neotest",
  dependencies = {
    "nvim-neotest/nvim-nio",
    "nvim-lua/plenary.nvim",
    "antoinemadec/FixCursorHold.nvim",
    "nvim-treesitter/nvim-treesitter"
  }
}
```

To get started you will also need to install an adapter for your test runner. See the adapter's documentation for their specific setup instructions.

| Test Runner | Adapter |
|---|---|
| pytest | neotest-python |
| python-unittest | neotest-python |
| plenary | neotest-plenary |
| go | neotest-go, neotest-golang |
| jest | neotest-jest |
| vitest | neotest-vitest |
| stenciljs | neotest-stenciljs |
| playwright | neotest-playwright |
| rspec | neotest-rspec |
| minitest | neotest-minitest |
| dart, flutter | neotest-dart |
| testthat | neotest-testthat |
| phpunit | neotest-phpunit |
| pest | neotest-pest |
| rust (treesitter) | neotest-rust |
| rust (LSP) | rustaceanvim |
| elixir | neotest-elixir |
| dotnet | neotest-dotnet |
| scala | neotest-scala |
| haskell | neotest-haskell |
| deno | neotest-deno |
| java | neotest-java |
| kotlin | neotest-kotlin |
| foundry | neotest-foundry |
| zig | neotest-zig |
| c++ (google test) | neotest-gtest |
| gradle | neotest-gradle |
| bash | neotest-bash |
| hardhat | neotest-hardhat |

For any runner without an adapter, you can use neotest-vim-test, which supports any runner that vim-test supports. The vim-test adapter does not support some of the more advanced features, such as error locations or per-test output. If you're using the vim-test adapter, then install vim-test too.

Provide your adapters and other config to the setup function.

```lua
require("neotest").setup({
  adapters = {
    require("neotest-python")({
      dap = { justMyCode = false },
    }),
    require("neotest-plenary"),
    require("neotest-vim-test")({
      ignore_file_types = { "python", "vim", "lua" },
    }),
  },
})
```

See :h neotest.Config for configuration options and :h neotest.setup() for the default values.

It is highly recommended to use lazydev.nvim with neotest to get type checking, documentation and autocompletion for all API functions.

The default icons use codicons. It's recommended to use this fork which fixes alignment issues for the terminal. If your terminal doesn't support font fallback and you need to have icons included in your font, you can patch it via Font Patcher. There is a simple step by step guide here.

The interface for using neotest is very simple.

Run the nearest test

```lua
require("neotest").run.run()
```

Run the current file

```lua
require("neotest").run.run(vim.fn.expand("%"))
```

Debug the nearest test (requires nvim-dap and adapter support)

```lua
require("neotest").run.run({strategy = "dap"})
```

See :h neotest.run.run() for parameters.
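These calls are usually bound to keys. A minimal sketch, assuming Neovim 0.7+ for `vim.keymap.set`; the `<leader>t…` mappings are arbitrary examples, not neotest defaults.

```lua
local neotest = require("neotest")

-- Wrapping each call in a function defers evaluation (e.g. of expand("%"))
-- until the key is pressed.
vim.keymap.set("n", "<leader>tn", function() neotest.run.run() end,
  { desc = "Run nearest test" })
vim.keymap.set("n", "<leader>tf", function() neotest.run.run(vim.fn.expand("%")) end,
  { desc = "Run current file" })
-- Needs nvim-dap and an adapter that can provide a DAP configuration
vim.keymap.set("n", "<leader>td", function() neotest.run.run({ strategy = "dap" }) end,
  { desc = "Debug nearest test" })
```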

Stop the nearest test (see :h neotest.run.stop()):

```lua
require("neotest").run.stop()
```

Attach to the nearest test (see :h neotest.run.attach()):

```lua
require("neotest").run.attach()
```

For extra features, neotest provides consumers which interact with the state of the tests and their results.

Some consumers will be passive while others can be interacted with.

:h neotest.watch

Watches files related to tests for changes and re-runs tests.


:h neotest.output

Displays the output of tests.

:h neotest.output_panel

Records all output of tests over time in a single window

:h neotest.summary

Displays test suite structure from project root.

Provides mappings for running, attaching, stopping and showing output.

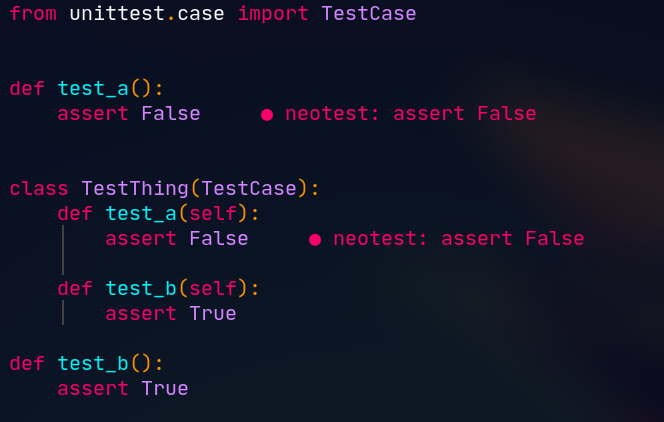

:h neotest.diagnostic

Uses vim.diagnostic to display error messages where they occur while running.

:h neotest.status

Displays the status of a test/namespace beside the beginning of the definition.

See the help doc for a list of all consumers and their documentation.
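A sketch of keymaps for the interactive consumers, assuming the `toggle`/`open` functions and the `enter` option described in their help pages (:h neotest.summary, :h neotest.output, :h neotest.output_panel, :h neotest.watch); the key choices are arbitrary examples.

```lua
local neotest = require("neotest")

-- Summary window with the test tree from the project root
vim.keymap.set("n", "<leader>ts", function() neotest.summary.toggle() end,
  { desc = "Toggle test summary" })
-- Output of the nearest test in a floating window, with focus moved into it
vim.keymap.set("n", "<leader>to", function() neotest.output.open({ enter = true }) end,
  { desc = "Show test output" })
-- Panel that accumulates output from all test runs
vim.keymap.set("n", "<leader>tp", function() neotest.output_panel.toggle() end,
  { desc = "Toggle output panel" })
-- Re-run the current file's tests when related files change
vim.keymap.set("n", "<leader>tw", function() neotest.watch.toggle(vim.fn.expand("%")) end,
  { desc = "Toggle watch" })
```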

Strategies are methods of running tests. They provide the functionality to attach to running processes, so attaching will mean different things for different strategies.

| Name | Description |
|---|---|
| integrated | Default strategy that will run a process in the background and allow opening a floating terminal to attach. |
| dap | Uses nvim-dap to debug tests (adapter must support providing an nvim-dap configuration). |

Custom strategies can be implemented by providing a function which takes a neotest.RunSpec and returns a table that fits the neotest.Process interface. Plenary's async library can be used to run asynchronously.
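To show the shape of such a function, here is a hypothetical "blocking" strategy in plain Lua. It is a sketch, not neotest's code: it reads only `spec.command` (a list of strings), assumes the Process fields `is_complete`, `result`, `output` (returning a file path), `attach` and `stop`, and omits the streaming field. It runs the command synchronously through a POSIX shell, which would freeze the editor; a real strategy would run the process asynchronously.

```lua
-- Quote one argument for a POSIX shell.
local function shell_quote(arg)
  return "'" .. (arg:gsub("'", "'\\''")) .. "'"
end

-- spec: table with `command`, a list of strings (as in neotest.RunSpec).
-- Returns a table shaped like neotest.Process (field set assumed).
local function blocking_strategy(spec)
  local parts = {}
  for i, arg in ipairs(spec.command) do
    parts[i] = shell_quote(arg)
  end
  -- Echo a marker with the exit status so it can be read back the same way
  -- on Lua 5.1, LuaJIT and 5.4, whose pipe close() results differ.
  local shell_cmd = table.concat(parts, " ") .. ' 2>&1; echo "__EXIT__$?"'

  local pipe = assert(io.popen(shell_cmd, "r"))
  local raw = pipe:read("*a")
  pipe:close()

  -- Split captured output from the trailing exit-status marker
  local body, code = raw:match("^(.*)__EXIT__(%d+)%s*$")
  body = body or raw
  local exit_code = tonumber(code) or 1

  -- output() is assumed to return a path, so write the output to a file
  local output_path = os.tmpname()
  local file = assert(io.open(output_path, "w"))
  file:write(body)
  file:close()

  return {
    is_complete = function() return true end,
    result = function() return exit_code end,
    output = function() return output_path end,
    -- The process has already exited: nothing to attach to or stop
    attach = function() end,
    stop = function() end,
  }
end
```

Assuming :h neotest.run.run() accepts a strategy function as well as a name, it could be tried with `require("neotest").run.run({ strategy = blocking_strategy })`.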

This section is for people wishing to develop their own neotest adapters. The documentation here and the underlying libraries are WIP and open to feedback/change. Please raise issues with any problems understanding or using this doc. The best place to figure out how to create an adapter is by looking at the existing ones.

Adapters must fulfill an interface to run (defined here).

Much of the functionality is built around using a custom tree object that defines the structure of the test suite.

There are helpers that adapters can use within their code (all defined under neotest.lib).

Adapters must solve three problems:

- Parse tests

- Construct test commands

- Collect results

Parsing tests has two stages: finding files, which is often a simple file name check (it's OK if a test file has no actual tests in it), and parsing test files.
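In the adapter interface the file check is the `is_test_file(file_path)` method. A minimal sketch, assuming a `_spec.lua` naming convention (the one plenary/busted specs use); a real adapter would match whatever its runner expects.

```lua
-- Pure string check on the path: no file I/O, so a matching file that
-- contains no tests is simply parsed later and yields no positions.
local function is_test_file(file_path)
  -- %. escapes the dot; $ anchors the match at the end of the path
  return file_path:match("_spec%.lua$") ~= nil
end
```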

For languages supported by nvim-treesitter, the easiest way to parse tests is to use the neotest treesitter wrapper, which runs a query over the file to construct a tree structure.

The query can define capture groups for tests and namespaces. Each type must have `<type>.name` and `<type>.definition` capture groups. They can be used multiple times in the query.

Example from neotest-plenary:

```lua
local lib = require("neotest.lib")

-- Adapter table that the methods below are attached to
local PlenaryNeotestAdapter = { name = "neotest-plenary" }

function PlenaryNeotestAdapter.discover_positions(path)
  local query = [[
  ;; describe blocks
  ((function_call
    name: (identifier) @func_name (#match? @func_name "^describe$")
    arguments: (arguments (_) @namespace.name (function_definition))
  )) @namespace.definition

  ;; it blocks
  ((function_call
    name: (identifier) @func_name
    arguments: (arguments (_) @test.name (function_definition))
  ) (#match? @func_name "^it$")) @test.definition

  ;; async it blocks (async.it)
  ((function_call
    name: (
      dot_index_expression
        field: (identifier) @func_name
    )
    arguments: (arguments (_) @test.name (function_definition))
  ) (#match? @func_name "^it$")) @test.definition
  ]]
  return lib.treesitter.parse_positions(path, query, { nested_namespaces = true })
end
```

For languages unsupported by treesitter, you can use regexes like neotest-vim-test or hook into the test runner.
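A hypothetical regex-based discovery sketch in plain Lua. It assumes an imaginary language whose tests look like `test "name" {` and end at the next line that is exactly `}`, takes the file contents as a string so it can be tested without file I/O, and returns a flat list of position-like tables (`type`, `path`, `name`, `id`, `range`). Ranges are assumed to be 0-indexed `{start_row, start_col, end_row, end_col}` and ids follow a `path::name` style; building an actual neotest.Tree from the list is left out.

```lua
local function discover_positions(path, source)
  local positions = {}
  local current -- test whose closing line has not been seen yet
  local row = 0 -- 0-indexed line counter
  for line in (source .. "\n"):gmatch("(.-)\n") do
    local name = line:match('^%s*test%s+"([^"]+)"')
    if name then
      current = {
        type = "test",
        path = path,
        name = name,
        id = path .. "::" .. name,
        range = { row, 0, row, 0 },
      }
      table.insert(positions, current)
    elseif current and line:match("^}%s*$") then
      -- Closing brace: record where the test definition ends
      current.range[3] = row
      current.range[4] = #line
      current = nil
    end
    row = row + 1
  end
  return positions
end
```

Regexes like these break easily on nested braces or multi-line definitions, which is why hooking into the runner is the other option mentioned above.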

Constructing test commands is the easiest part of writing an adapter. You need to handle the different types of positions that a user may run (directory, file, namespace and test).

If you are hooking into the runner, you may not be running the test runner command directly. neotest-python and neotest-plenary are both examples of this: each uses a script to run its runner, parse the results and store them for result collection later.
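In the adapter interface this is `build_spec(args)`. A sketch for a hypothetical runner `mytest` that is assumed to take a path plus an optional `--filter <name>` flag; `args.tree:data()` is assumed to return the position being run, with `type` one of `"dir"`, `"file"`, `"namespace"` or `"test"`.

```lua
local function build_spec(args)
  local position = args.tree:data()
  -- Directories and files: pass the path and let the runner find tests
  local command = { "mytest", position.path }

  if position.type == "namespace" or position.type == "test" then
    -- A single namespace or test: narrow the run by name
    table.insert(command, "--filter")
    table.insert(command, position.name)
  end

  return {
    command = command,
    -- Free-form data carried through to result collection
    context = { pos_id = position.id },
  }
end
```

Storing the position id in `context` lets result collection know which position the run belonged to without re-deriving it.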

Collecting results will be the most involved process in the adapter, with complexity depending on the test runner and desired features.

For the most basic implementation, an adapter can choose to only run tests individually and use the exit code as an indicator of the result (this is how neotest-vim-test works), but this impacts performance and also loses out on more advanced features.
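A sketch of that exit-code approach as the adapter's `results(spec, result, tree)` method. It assumes `build_spec` stored the position id in `spec.context.pos_id` (a hypothetical key) and that `result` carries `code` (exit code) and `output` (path to the output file).

```lua
-- One test per run, so the exit code alone decides pass/fail.
local function results(spec, result, _tree)
  local status = result.code == 0 and "passed" or "failed"
  return {
    [spec.context.pos_id] = {
      status = status,
      output = result.output, -- whole-run output doubles as per-test output
    },
  }
end
```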

If tests can be run together then the adapter must provide results for at least each individual test. Results for namespaces, files and directories will be inferred from their child tests.
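A simplified model of that inference in plain Lua, to show why per-test results are enough. The rule used here (any failure makes the parent fail, all-skipped makes it skipped, otherwise passed) is an assumption for illustration; neotest's own logic may differ in details.

```lua
-- Derive a parent's status from its child tests' statuses.
local function infer_status(child_statuses)
  if #child_statuses == 0 then
    return nil -- no children, nothing to infer
  end
  local all_skipped = true
  for _, status in ipairs(child_statuses) do
    if status == "failed" then
      return "failed" -- one failure is enough
    end
    if status ~= "skipped" then
      all_skipped = false
    end
  end
  return all_skipped and "skipped" or "passed"
end
```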

For collecting test-specific error messages, error locations, etc., you'll need to parse output or hook into the runner. See neotest-python and neotest-plenary for examples of how this can be done.
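A sketch of the output-parsing route in plain Lua, for a hypothetical runner that prints failures as `FAIL <test name> (line <n>): <message>`. It builds result tables with `status`, `short` and an `errors` list of `{ message, line }`; the runner's line numbers are assumed 1-indexed and neotest's error lines are assumed 0-indexed, hence the `- 1`.

```lua
local function parse_failures(output, path)
  local results = {}
  for line in output:gmatch("[^\n]+") do
    -- Lazy (.-) stops the test name at the first " (line "
    local name, lnum, message = line:match("^FAIL (.-) %(line (%d+)%): (.*)$")
    if name then
      results[path .. "::" .. name] = {
        status = "failed",
        short = message,
        errors = { { message = message, line = tonumber(lnum) - 1 } },
      }
    end
  end
  return results
end
```

Passing tests are not listed here; an adapter would still need to report them, for example from the runner's `PASS` lines.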