| Flow | Gateway | DataProcessing | Metrics | SimulatedData | Website |
|---|---|---|---|---|---|

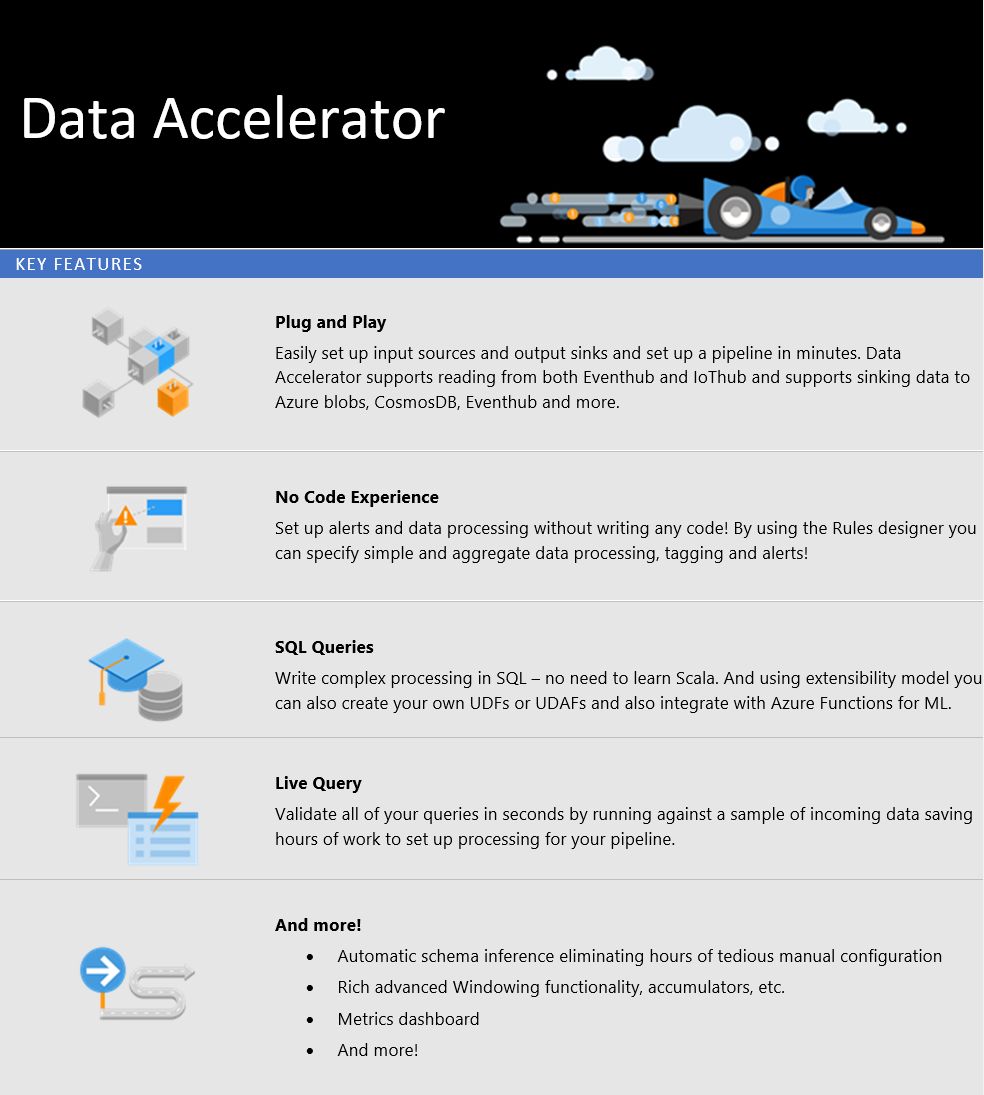

Data Accelerator for Apache Spark democratizes streaming big data using Spark by offering several key features, such as a no-code experience for setting up a data pipeline and a fast dev-test loop for creating complex logic. Our team has used the project within Microsoft for two years to process streamed data across many internal deployments, handling data volumes at Microsoft scale. It offers an easy-to-use platform for learning about and evaluating your streaming needs and requirements. We are thrilled to share this project with the wider community as open source!

Azure Friday: We are now featured on Azure Fridays! See the video here.

Data Accelerator offers three levels of experience:

- The first requires no code at all, using rules to create alerts on data content.

- The second lets you quickly write a Spark SQL query with additions like LiveQuery, time windowing, an in-memory accumulator and more.

- The third enables integrating custom code written in Scala or via Azure Functions.
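To make the first two levels more concrete, here is a minimal, framework-free sketch of the two underlying ideas: a rule that raises an alert on data content, and a time-windowed aggregation. It is not Data Accelerator's API and does not use Spark; the `Event` fields, the `Rule` type, and the function names are all hypothetical and chosen only for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, Dict, Iterable, List, Tuple


@dataclass(frozen=True)
class Event:
    # Hypothetical event shape, for illustration only.
    device_id: str
    timestamp: int       # seconds since an arbitrary epoch
    temperature: float


@dataclass(frozen=True)
class Rule:
    # A named condition over a single event (hypothetical type).
    name: str
    predicate: Callable[[Event], bool]


def evaluate_rules(events: Iterable[Event], rules: List[Rule]) -> List[Tuple[str, Event]]:
    """Return a (rule name, event) pair for every event that matches a rule."""
    return [
        (rule.name, event)
        for event in events
        for rule in rules
        if rule.predicate(event)
    ]


def tumbling_window_avg(
    events: Iterable[Event], window_seconds: int
) -> Dict[Tuple[str, int], float]:
    """Average temperature per device over fixed, non-overlapping windows."""
    buckets: Dict[Tuple[str, int], List[float]] = defaultdict(list)
    for event in events:
        # Align each event to the start of the window it falls into.
        window_start = event.timestamp - (event.timestamp % window_seconds)
        buckets[(event.device_id, window_start)].append(event.temperature)
    return {key: sum(values) / len(values) for key, values in buckets.items()}


events = [
    Event("d1", 0, 20.0),
    Event("d1", 30, 40.0),
    Event("d1", 65, 90.0),
    Event("d2", 10, 25.0),
]
rules = [Rule("too_hot", lambda e: e.temperature > 80.0)]

alerts = evaluate_rules(events, rules)
averages = tumbling_window_avg(events, window_seconds=60)
```

In this sketch, `alerts` holds the events that tripped a rule and `averages` maps each `(device_id, window_start)` pair to a mean value. In Data Accelerator itself, the rule would be configured without code and the windowed aggregation would be expressed as a Spark SQL query.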

You can get started locally on Windows, macOS and Linux by following these instructions.

To deploy to Azure, you can use the ARM template; see the instructions to deploy to Azure.

The data-accelerator repository contains everything needed to set up an end-to-end data pipeline. There are many ways you can participate in the project:

- Submit bugs and requests

- Review code changes

- Review documentation and make updates ranging from typos to new content.

To unleash the full power of Data Accelerator, deploy to Azure and check out the cloud mode tutorials.

We have also enabled a "hello world" experience that you can try out locally by running a Docker container. When running locally there are no dependencies on Azure; however, the functionality is very limited and is only there to give you a cursory overview of Data Accelerator.

To run Data Accelerator locally, deploy locally and then check out the local mode tutorials.

Data Accelerator for Spark runs on the following:

- Azure HDInsight with Spark 2.4 (2.3 also supported)

- Azure Databricks with Spark 2.4

- Service Fabric (v6.4.637.9590) with

  - .NET Core 2.2

  - ASP.NET

- App Service with Node 10.6

See the wiki pages for further information on how to build, diagnose and maintain your data pipelines built using Data Accelerator for Spark.

If you are interested in fixing issues and contributing to the code base, we would love to partner with you. Try things out, join in the design conversations and make pull requests.

- Request new features on GitHub

- Open a new issue on GitHub

- Ask a question on Stack Overflow

- Contact us: [email protected]

- Check out the contributing page to see the best places to log issues and start discussions.

Please also see our Code of Conduct.

Security issues and bugs should be reported privately, via email, to the Microsoft Security Response Center (MSRC) at [email protected]. You should receive a response within 24 hours. If for some reason you do not, please follow up via email to ensure we received your original message. Further information, including the MSRC PGP key, can be found in the Security TechCenter.

This repository is licensed under the MIT License.