LINSTOR®, developed by LINBIT®, is open source software that manages replicated volumes across a group of machines. LINSTOR natively integrates with Kubernetes and other platforms and makes building, running, and controlling block storage simple. LINSTOR is designed to manage block storage devices for large Linux server clusters. It's typically used to provide persistent and highly available Linux block storage for cloud native and hypervisor environments.

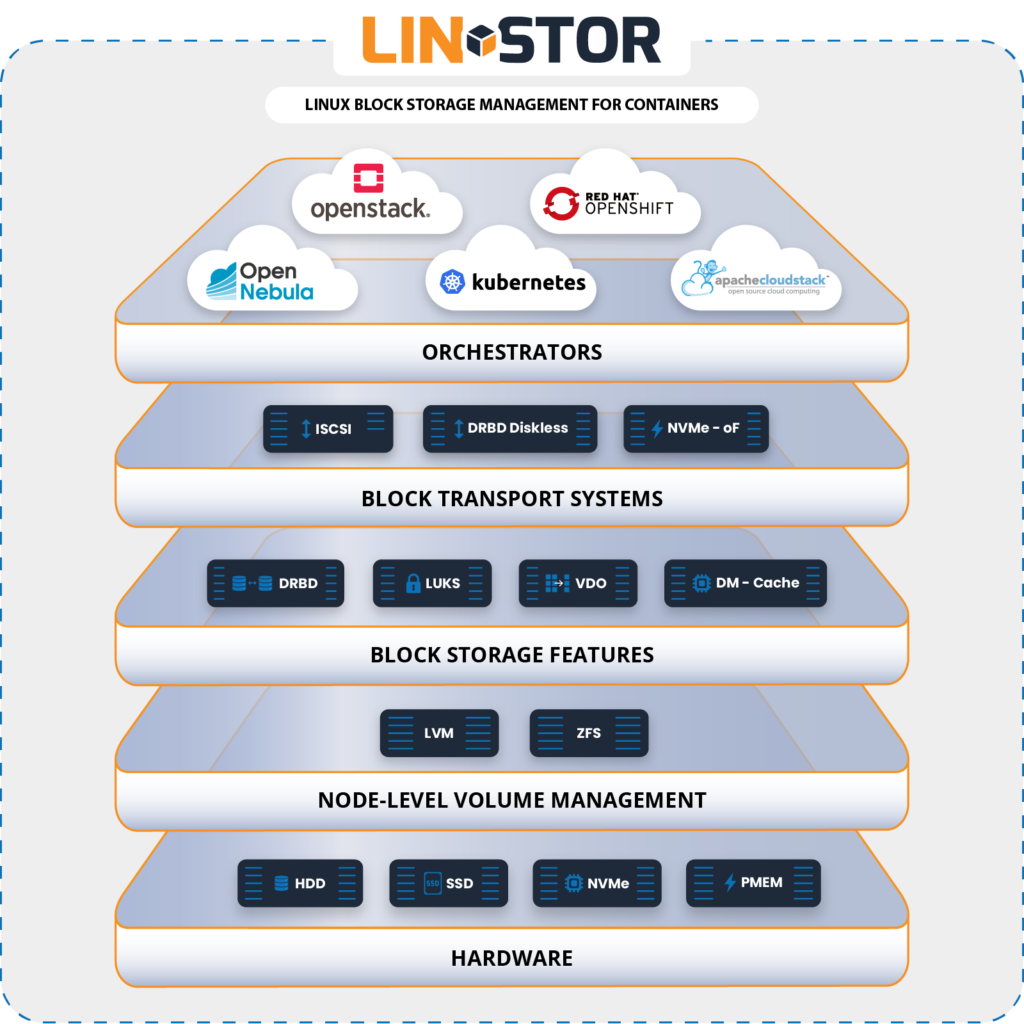

Historically, LINSTOR started as a DRBD® resource file generator that conveniently also created LVM or ZFS volumes. Over time, LINSTOR steadily grew. LINBIT developers added features and drivers in both directions: south-bound features such as snapshots, LUKS, dm-cache, dm-writecache, or NVMe storage layers; and north-bound drivers for integrating directly with other platforms such as CloudStack, Kubernetes, OpenNebula, OpenShift, OpenStack, Proxmox VE, and VMware.

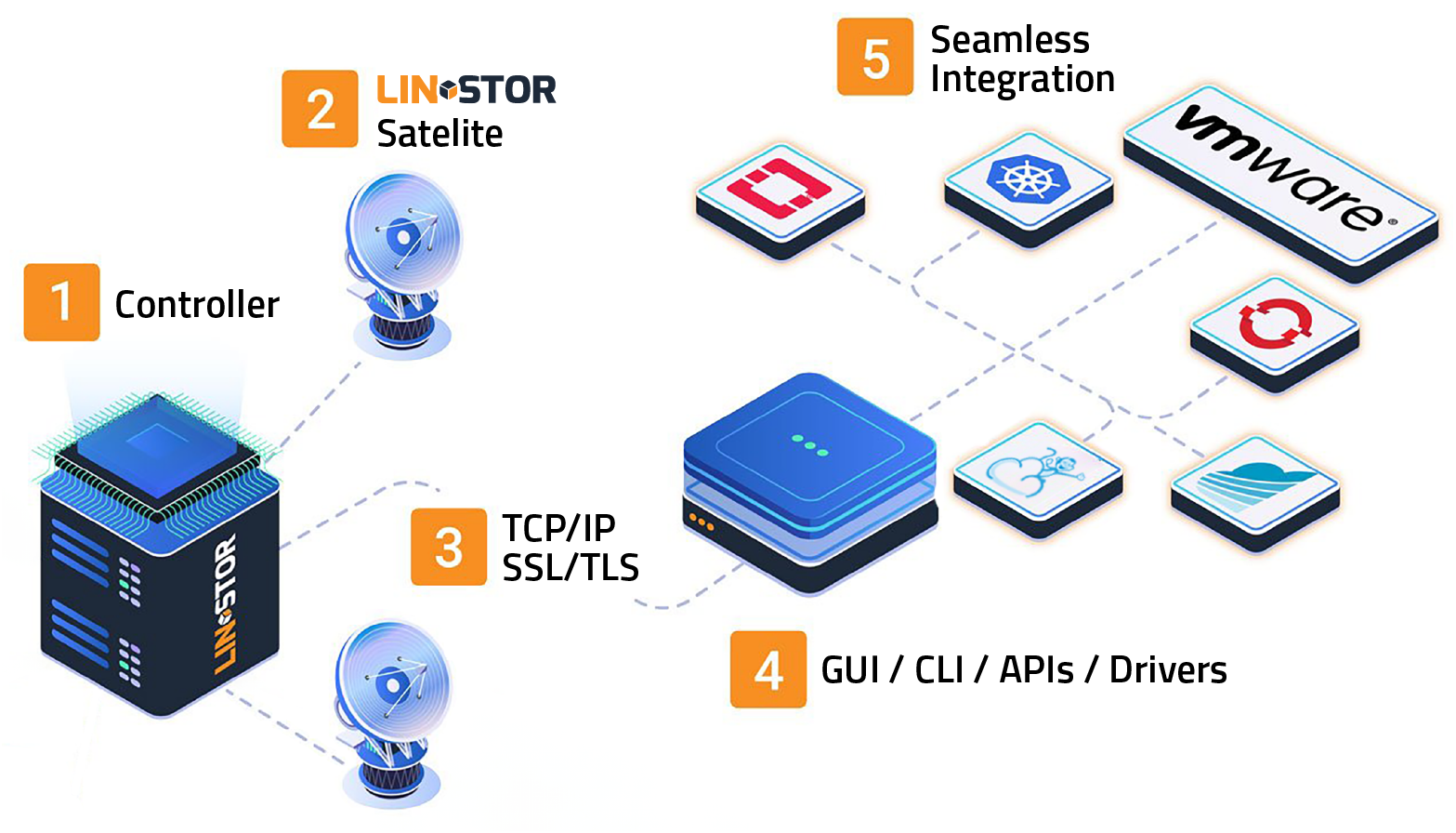

A LINSTOR system consists of multiple server and client components. A LINSTOR controller service manages the configuration of the LINSTOR cluster and all of its managed storage resources. The LINSTOR satellite service manages creation, modification, and deletion of storage resources on each node that provides or uses LINSTOR-managed storage resources.

The storage system can be managed by directly using a command line client utility to interact with the active LINSTOR controller. Alternatively, users can integrate the LINSTOR system into the storage architecture of other software systems, such as Kubernetes, CloudStack, OpenNebula, or Proxmox VE. All communication between LINSTOR components uses LINSTOR's own network protocol, based on TCP/IP network connections.

- Open Source
- Main Features
  - Provides replicated block storage and persistent container storage
  - Separate data and control planes for maximum data availability
  - Online live migration of back-end storage
  - Compatible with high I/O workloads such as databases
  - Supports tiered storage (by using multiple storage pools)
  - Choose your own Linux file system; LINSTOR is agnostic to layers above it
  - Rich set of plugins
- Storage Related Features
  - Network replication through DRBD integration
  - LVM snapshot support
  - LVM thin provisioning support
  - RDMA
  - Management of persistent memory (PMEM)
  - ZFS support, including thin provisioning
  - NVMe over fabrics
- Network Related Features
  - Replicate via multiple network cards (for redundancy or load balancing)
  - Automatic management of TCP/IP port range, minor number range, and others to provide consistent data across a cluster
  - Scale-up and scale-out
  - REST API for integrating or customizing to your needs
  - Supports LDAP authentication
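The automatic management of TCP/IP port and minor number ranges mentioned in the feature list can be pictured as a "lowest free number" allocator. The following is a minimal Python sketch of that idea, not LINSTOR's actual implementation. The `NumberPool` class and the ranges used here are hypothetical and chosen only for illustration.

```python
class NumberPool:
    """Hands out the lowest free integer from an inclusive range (hypothetical helper)."""

    def __init__(self, low, high):
        if low > high:
            raise ValueError("low must not exceed high")
        self.low = low
        self.high = high
        self.in_use = set()

    def allocate(self):
        # Scan the range in order and return the first number not yet taken.
        for candidate in range(self.low, self.high + 1):
            if candidate not in self.in_use:
                self.in_use.add(candidate)
                return candidate
        raise RuntimeError("range exhausted")

    def release(self, number):
        # Releasing an unknown number is a no-op.
        self.in_use.discard(number)


# Illustrative ranges only; these are assumptions, not LINSTOR's configured defaults.
tcp_ports = NumberPool(7000, 7999)
minor_numbers = NumberPool(1000, 1999)

# Each new resource gets one port and one minor number that no other resource holds.
first = (tcp_ports.allocate(), minor_numbers.allocate())
second = (tcp_ports.allocate(), minor_numbers.allocate())

# After a resource is deleted, its port becomes available again.
tcp_ports.release(first[0])
third_port = tcp_ports.allocate()

print(first, second, third_port)
```

Keeping this bookkeeping in one central place is what lets every node agree on which port and minor number belong to which resource.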

To use the entirety of LINSTOR's feature set (such as quorum, DRBD® replication, and others), you need at least three nodes. The third node, used for quorum purposes, can be diskless; even something as basic as a low-powered single-board computer, such as a Raspberry Pi, could suffice. At least one node in the cluster should have the LINSTOR controller service and LINSTOR client software installed, while all nodes should have the LINSTOR satellite service installed. You can make the LINSTOR controller service highly available by installing the service on multiple nodes. For more details, refer to the LINSTOR User's Guide.
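To see why quorum calls for at least three nodes, consider a simplified strict-majority rule. The sketch below is an illustration in Python under that assumption only. It is not DRBD's quorum implementation, which has additional rules, and the `has_quorum` function is a hypothetical name.

```python
def has_quorum(reachable_nodes, total_nodes):
    """Return True when a partition holds a strict majority of the cluster.

    reachable_nodes counts the local node plus every peer it can still reach.
    """
    return 2 * reachable_nodes > total_nodes


# Two-node cluster, network split 1/1: neither side holds a majority.
two_node_split = (has_quorum(1, 2), has_quorum(1, 2))

# Three-node cluster, split 2/1: exactly one side keeps quorum and may continue.
three_node_split = (has_quorum(2, 3), has_quorum(1, 3))

print(two_node_split, three_node_split)
```

Under this rule, the third node only needs to take part in the vote, which is consistent with it being diskless.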

LINSTOR can also perform disk operations without using DRBD. However, if you need data replication with DRBD, LINSTOR requires DRBD version 9 installed on LINSTOR satellite nodes. To install DRBD, refer to the DRBD User's Guide.

For a more detailed installation guide, refer to instructions in the LINSTOR User's Guide, linked below.

LINSTOR is currently extended with the following plugins. Instructions for how to use them are linked below.

LINSTOR is open source software. For individual or development use, you can use the LINSTOR forum topic within the LINBIT community forums to get support from the greater community of LINBIT software users. If you are going to use LINSTOR in enterprise or mission critical production environments, professional support contracts and official software binaries are available through LINBIT. Contact us by using the link below for professional support.

Releases generated by Git tags on GitHub are snapshots of the Git repository at the given time. These snapshots might lack things such as generated man pages, the configure script, and other generated files. If you want to build from a tar archive file, use the ones LINBIT provides.

As an alternative, you can build LINSTOR software from source code. Refer to the "Building" section below for details.

Gradle is used for building LINSTOR.

After a fresh git clone --recursive, some protocol buffer (protobuf) Java files need to be generated, which requires a suitable protocol buffer compiler.

Before building you need to run:

$ ./gradlew getProtoc

After installing the correct protocol buffer compiler in the ./tools directory, you can build LINSTOR by entering the following command:

$ ./gradlew assemble

Refer to the development documentation for details.
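If you prefer to script the two build steps, one possible approach is a small Python wrapper that runs them in order and stops at the first failure. This is only a sketch: the `run_build` function, its `dry_run` parameter, and the assumption that it is pointed at the root of a LINSTOR checkout are illustrative and not part of the LINSTOR project.

```python
import subprocess

# The two Gradle wrapper invocations described above, in the required order.
BUILD_STEPS = [
    ["./gradlew", "getProtoc"],
    ["./gradlew", "assemble"],
]


def run_build(repo_dir, dry_run=False):
    """Run each build step inside repo_dir and return the commands as strings.

    With dry_run=True nothing is executed; the commands are only listed.
    """
    executed = []
    for step in BUILD_STEPS:
        if not dry_run:
            # check=True raises CalledProcessError if a step exits non-zero,
            # so "assemble" never runs after a failed "getProtoc".
            subprocess.run(step, cwd=repo_dir, check=True)
        executed.append(" ".join(step))
    return executed


# List the steps without running them.
planned = run_build(".", dry_run=True)
print(planned)
```

Calling `run_build("/path/to/checkout")` without `dry_run` would execute both steps, assuming the path is the root of a recursive clone.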

LINSTOR is a SODA ECO Project

Free Software, Hell Yeah!