NB: these images are deprecated in favor of AWS' official images, which you can find at:

https://github.com/aws/aws-lambda-base-images

You can also browse them on the ECR Public Gallery, eg:

https://gallery.ecr.aws/lambda/python

This project is now archived and will not receive any further updates.

A sandboxed local environment that replicates the live AWS Lambda environment almost identically – including installed software and libraries, file structure and permissions, environment variables, context objects and behaviors – even the user and running process are the same.

You can use it to run your functions in the same strict Lambda environment, knowing that they'll exhibit the same behavior when deployed live. You can also use it to compile native dependencies, knowing that you're linking against the same library versions that exist on AWS Lambda, and then deploy using the AWS CLI.

- Usage

- Run Examples

- Build Examples

- Using a Dockerfile to build

- Docker tags

- Verifying images

- Environment variables

- Build environment

- Questions

You can run your Lambdas from local directories using the -v arg with docker run. You can run them in two modes: as a single execution, or as an API server that listens for invoke events.

The default is single execution mode, which outputs all logging to stderr and the result of the handler to stdout.

You mount your (unzipped) lambda code at /var/task and any (unzipped) layer code at /opt, and most runtimes take two arguments – the first for the handler and the second for the event, ie:

docker run --rm \
  -v <code_dir>:/var/task:ro,delegated \
  [-v <layer_dir>:/opt:ro,delegated] \
  lambci/lambda:<runtime> \
  [<handler>] [<event>]

(the --rm flag will remove the docker container once it has run, which is usually what you want, and the ro,delegated options ensure the directories are mounted read-only and have the highest performance)
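If you drive single-execution runs from a Node.js script or test, the command above maps directly onto an argument array for `child_process.spawnSync`. This is a sketch, not part of docker-lambda: `buildRunArgs` and `runOnce` are hypothetical helper names, and `runOnce` assumes the handler's result on stdout is JSON (or empty) and that a failed invocation makes the container exit non-zero.

```javascript
const { spawnSync } = require('child_process');
const path = require('path');

// Hypothetical helper mirroring:
//   docker run --rm -v <code_dir>:/var/task:ro,delegated [-v <layer_dir>:/opt:ro,delegated]
//     lambci/lambda:<runtime> [<handler>] [<event>]
function buildRunArgs({ codeDir, layerDir, runtime, handler, event }) {
  // docker run -v needs an absolute host path, so resolve relative directories
  const args = ['run', '--rm', '-v', `${path.resolve(codeDir)}:/var/task:ro,delegated`];
  if (layerDir) {
    args.push('-v', `${path.resolve(layerDir)}:/opt:ro,delegated`);
  }
  args.push(`lambci/lambda:${runtime}`);
  if (handler) {
    args.push(handler);
    // The event is the second positional argument, so it is only added after a handler
    if (event !== undefined) args.push(JSON.stringify(event));
  }
  return args;
}

// Hypothetical helper: single execution, result on stdout, logs on stderr.
// Assumes the result is JSON (or empty) and that failures exit non-zero.
function runOnce(options) {
  const proc = spawnSync('docker', buildRunArgs(options), { encoding: 'utf8' });
  if (proc.error) throw proc.error; // e.g. docker is not installed
  if (proc.status !== 0) {
    throw new Error(`docker exited with status ${proc.status}:\n${proc.stderr}`);
  }
  const out = proc.stdout.trim();
  return { result: out ? JSON.parse(out) : undefined, logs: proc.stderr };
}
```

The `path.resolve` call matters because a bare name such as `fn` on the left of `-v` is interpreted by Docker as a named volume, not a host directory.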

You can pass environment variables (eg -e AWS_ACCESS_KEY_ID=abcd) to talk to live AWS services, or modify aspects of the runtime. See below for a list.
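When credentials are already exported in your shell, you can forward them by name rather than by value: with a bare `-e NAME`, Docker copies NAME's value from the environment of the `docker` client process. A minimal sketch of building such flags from Node.js (`envArgs` is a hypothetical helper, not part of any library):

```javascript
// Hypothetical helper: build docker run -e flags.
// A bare `-e NAME` tells Docker to copy NAME's value from the environment of the
// `docker` client process, so the value itself never appears on the command line.
function envArgs(passThrough = [], explicit = {}) {
  const args = [];
  for (const name of passThrough) {
    args.push('-e', name); // value taken from the host environment
  }
  for (const [name, value] of Object.entries(explicit)) {
    args.push('-e', `${name}=${value}`); // value set explicitly
  }
  return args;
}
```

For example, `envArgs(['AWS_ACCESS_KEY_ID', 'AWS_SECRET_ACCESS_KEY'], {AWS_LAMBDA_FUNCTION_MEMORY_SIZE: 512})` forwards your credentials and sets the memory size variable; like all docker run options, these flags must come before the image name.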

If you pass the environment variable DOCKER_LAMBDA_STAY_OPEN=1 to the container, then instead of executing the event and shutting down, it will start an API server (on port 9001 by default), which you can then call with HTTP following the Lambda Invoke API.

This allows you to make fast subsequent calls to your handler without paying the "cold start" penalty each time.

docker run --rm [-d] \
  -e DOCKER_LAMBDA_STAY_OPEN=1 \
  -p 9001:9001 \
  -v <code_dir>:/var/task:ro,delegated \
  [-v <layer_dir>:/opt:ro,delegated] \
  lambci/lambda:<runtime> \
  [<handler>]

(the -d flag will start the container in detached mode, in the background)

You should then see:

Lambda API listening on port 9001...

Then, in another terminal shell/window you can invoke your function using the AWS CLI (or any HTTP client, like curl):

aws lambda invoke --endpoint-url http://localhost:9001 --no-sign-request \
  --function-name myfunction --payload '{}' output.json

(if you're using AWS CLI v2, you'll need to add --cli-binary-format raw-in-base64-out to the above command)

Or just:

curl -d '{}' http://localhost:9001/2015-03-31/functions/myfunction/invocations

It also supports the documented Lambda API headers X-Amz-Invocation-Type, X-Amz-Log-Type and X-Amz-Client-Context.
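Any HTTP client can play the role of curl here. The sketch below uses only Node's built-in `http` module; `buildInvokeRequest`, `invoke` and `decodeLogResult` are hypothetical names. The header encodings follow the public Lambda Invoke API (`X-Amz-Client-Context` carries base64-encoded JSON, and with `X-Amz-Log-Type: Tail` the live service returns a base64 log tail in `X-Amz-Log-Result`); that the local server mirrors every detail of this is an assumption worth checking for your runtime.

```javascript
const http = require('http');

// Hypothetical helper: build a POST to /2015-03-31/functions/<name>/invocations
function buildInvokeRequest(functionName, payload, opts = {}) {
  const {
    host = 'localhost',
    port = 9001,
    invocationType, // 'RequestResponse' | 'Event' | 'DryRun'
    logType,        // 'None' | 'Tail'
    clientContext,  // plain object, sent as base64-encoded JSON
  } = opts;
  const body = JSON.stringify(payload === undefined ? {} : payload);
  const headers = {
    'Content-Type': 'application/json',
    'Content-Length': Buffer.byteLength(body),
  };
  if (invocationType) headers['X-Amz-Invocation-Type'] = invocationType;
  if (logType) headers['X-Amz-Log-Type'] = logType;
  if (clientContext) {
    headers['X-Amz-Client-Context'] = Buffer.from(JSON.stringify(clientContext)).toString('base64');
  }
  return {
    options: {
      host,
      port,
      method: 'POST',
      path: `/2015-03-31/functions/${encodeURIComponent(functionName)}/invocations`,
      headers,
    },
    body,
  };
}

// Hypothetical helper: send the request, resolve with status, headers and raw body
function invoke(functionName, payload, opts) {
  const { options, body } = buildInvokeRequest(functionName, payload, opts);
  return new Promise((resolve, reject) => {
    const req = http.request(options, (res) => {
      const chunks = [];
      res.on('data', (chunk) => chunks.push(chunk));
      res.on('end', () => {
        resolve({
          statusCode: res.statusCode,
          headers: res.headers,
          body: Buffer.concat(chunks).toString('utf8'),
        });
      });
    });
    req.on('error', reject);
    req.end(body); // write the JSON payload and finish the request
  });
}

// Decode the base64 log tail, if present (Node lower-cases incoming header names)
function decodeLogResult(headers) {
  const encoded = headers['x-amz-log-result'];
  return encoded ? Buffer.from(encoded, 'base64').toString('utf8') : '';
}
```

For example, `invoke('myfunction', {some: 'event'}, {logType: 'Tail'}).then((res) => console.log(res.body, decodeLogResult(res.headers)))` prints the handler's response and any returned log tail.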

If you want to change the exposed port, eg run on port 3000 on the host, use -p 3000:9001 (then query http://localhost:3000).

You can change the internal Lambda API port from 9001 by passing -e DOCKER_LAMBDA_API_PORT=<port>. You can also change the custom runtime port from 9001 by passing -e DOCKER_LAMBDA_RUNTIME_PORT=<port>.
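If you move the internal API port, the container side of `-p` has to move with it, or the published port points at nothing. A small sketch that derives both from one value (`stayOpenArgs` is a hypothetical helper):

```javascript
// Hypothetical helper: flags for stay-open mode on a chosen host port.
// The container side of -p must equal the internal API port, so both come from apiPort.
function stayOpenArgs({ hostPort = 9001, apiPort = 9001 } = {}) {
  const args = ['-e', 'DOCKER_LAMBDA_STAY_OPEN=1', '-p', `${hostPort}:${apiPort}`];
  if (apiPort !== 9001) {
    // Only needed when moving away from the default internal port
    args.push('-e', `DOCKER_LAMBDA_API_PORT=${apiPort}`);
  }
  return args;
}
```

Clients then target the host port, for example http://localhost:3000 for `stayOpenArgs({hostPort: 3000})`.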

docker-lambda can watch for changes to your handler (and layer) code and restart the internal bootstrap process so you can always invoke the latest version of your code without needing to shut down the container.

To enable this, pass -e DOCKER_LAMBDA_WATCH=1 to docker run:

docker run --rm \
  -e DOCKER_LAMBDA_WATCH=1 -e DOCKER_LAMBDA_STAY_OPEN=1 -p 9001:9001 \
  -v "$PWD":/var/task:ro,delegated \
  lambci/lambda:java11 handler

Then when you make changes to any file in the mounted directory, you'll see:

Handler/layer file changed, restarting bootstrap...

And the next invoke will reload your handler with the latest version of your code.

NOTE: This doesn't work in exactly the same way with some of the older runtimes due to the way they're loaded. Specifically: nodejs8.10 and earlier, python3.6 and earlier, dotnetcore2.1 and earlier, java8 and go1.x. These runtimes will instead exit with error code 2 when they are in watch mode and files in the handler or layer are changed.

That way you can use the --restart on-failure capabilities of docker run to have the container automatically restart instead.

So, for nodejs8.10, nodejs6.10, nodejs4.3, python3.6, python2.7, dotnetcore2.1, dotnetcore2.0, java8 and go1.x, you'll need to run watch mode like this instead:

docker run --restart on-failure \
  -e DOCKER_LAMBDA_WATCH=1 -e DOCKER_LAMBDA_STAY_OPEN=1 -p 9001:9001 \
  -v "$PWD":/var/task:ro,delegated \
  lambci/lambda:java8 handler

When you make changes to any file in the mounted directory, you'll see:

Handler/layer file changed, restarting bootstrap...

And then the docker container will restart. See the Docker documentation for more details. Your terminal may get detached, but the container should still be running and the API should have restarted. You can do docker ps to find the container ID and then docker attach <container_id> to reattach if you wish.
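If you'd rather not rely on Docker's restart policy, the same exit-code contract can drive a small supervisor loop. This is a sketch under assumptions: `superviseWatchMode` and `dockerWatchRunner` are hypothetical helpers, and unlike `--restart on-failure` (which restarts on any non-zero exit) it restarts only on exit code 2, so genuine crashes end the loop.

```javascript
const { spawnSync } = require('child_process');

// Hypothetical supervisor: re-run while the container exits with code 2
// (the "handler/layer files changed" signal from the older runtimes).
// `run` is injected so the policy can be exercised without Docker.
function superviseWatchMode(run, { maxRestarts = Infinity } = {}) {
  let restarts = 0;
  for (;;) {
    const { status } = run(); // status is null if the process was killed by a signal
    if (status !== 2 || restarts >= maxRestarts) {
      return { status, restarts };
    }
    restarts += 1;
  }
}

// Hypothetical default runner: a foreground watch + stay-open container, stdio inherited
function dockerWatchRunner(runtime, handler, codeDir = process.cwd()) {
  return () => spawnSync('docker', [
    'run', '--rm',
    '-e', 'DOCKER_LAMBDA_WATCH=1', '-e', 'DOCKER_LAMBDA_STAY_OPEN=1',
    '-p', '9001:9001',
    '-v', `${codeDir}:/var/task:ro,delegated`,
    `lambci/lambda:${runtime}`, handler,
  ], { stdio: 'inherit' });
}
```

Usage would look like `superviseWatchMode(dockerWatchRunner('java8', 'handler'))`. Because each restart starts a fresh container, this variant can keep `--rm`, and your terminal stays attached to the current run.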

If none of the above strategies work for you, you can use a file-watching utility like nodemon:

# npm install -g nodemon
nodemon -w ./ -e '' -s SIGINT -x docker -- run --rm \
  -e DOCKER_LAMBDA_STAY_OPEN=1 -p 9001:9001 \
  -v "$PWD":/var/task:ro,delegated \
  lambci/lambda:go1.x handler

The build images have a number of extra system packages installed, intended for building and packaging your Lambda functions. You can run your build commands (eg, gradle on the java image), and then package up your function using zip or the AWS SAM CLI, all from within the image.

docker run [--rm] -v <code_dir>:/var/task [-v <layer_dir>:/opt] lambci/lambda:build-<runtime> <build-cmd>

You can also use yumda to install precompiled native dependencies using yum install.

# Test a `handler` function from an `index.js` file in the current directory on Node.js v12.x

docker run --rm -v "$PWD":/var/task:ro,delegated lambci/lambda:nodejs12.x index.handler

# Using a different file and handler, with a custom event

docker run --rm -v "$PWD":/var/task:ro,delegated lambci/lambda:nodejs12.x app.myHandler '{"some": "event"}'

# Test a `lambda_handler` function in `lambda_function.py` with an empty event on Python 3.8

docker run --rm -v "$PWD":/var/task:ro,delegated lambci/lambda:python3.8 lambda_function.lambda_handler

# Similarly with Ruby 2.7

docker run --rm -v "$PWD":/var/task:ro,delegated lambci/lambda:ruby2.7 lambda_function.lambda_handler

# Test on Go 1.x with a compiled handler named my_handler and a custom event

docker run --rm -v "$PWD":/var/task:ro,delegated lambci/lambda:go1.x my_handler '{"some": "event"}'

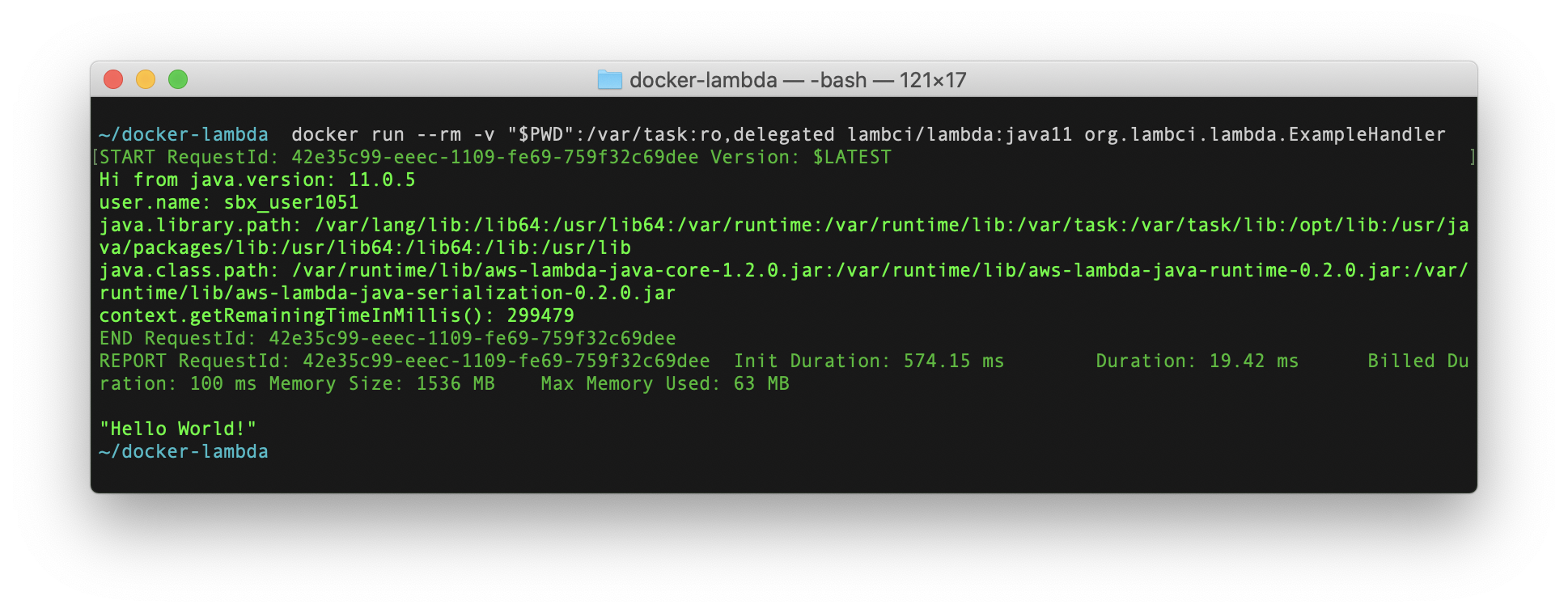

# Test a function from the current directory on Java 11

# The directory must be laid out in the same way the Lambda zip file is,

# with top-level package source directories and a `lib` directory for third-party jars

# https://docs.aws.amazon.com/lambda/latest/dg/java-package.html

docker run --rm -v "$PWD":/var/task:ro,delegated lambci/lambda:java11 org.myorg.MyHandler

# Test on .NET Core 3.1 given a test.dll assembly in the current directory,

# a class named Function with a FunctionHandler method, and a custom event

docker run --rm -v "$PWD":/var/task:ro,delegated lambci/lambda:dotnetcore3.1 test::test.Function::FunctionHandler '{"some": "event"}'

# Test with a provided runtime (assumes you have a `bootstrap` executable in the current directory)

docker run --rm -v "$PWD":/var/task:ro,delegated lambci/lambda:provided handler '{"some": "event"}'

# Test with layers (assumes your function code is in `./fn` and your layers in `./layer`)

docker run --rm -v "$PWD"/fn:/var/task:ro,delegated -v "$PWD"/layer:/opt:ro,delegated lambci/lambda:nodejs12.x

# Run custom commands

docker run --rm --entrypoint node lambci/lambda:nodejs12.x -v

# For large events you can pipe them into stdin if you set DOCKER_LAMBDA_USE_STDIN

echo '{"some": "event"}' | docker run --rm -v "$PWD":/var/task:ro,delegated -i -e DOCKER_LAMBDA_USE_STDIN=1 lambci/lambda:nodejs12.x

You can see more examples of how to build docker images and run different runtimes in the examples directory.
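From Node.js, the stdin variant of running a function maps onto `spawnSync`'s `input` option, which writes a string to the child's stdin and then closes it. A sketch with hypothetical helper names; it assumes a handler argument can still be passed alongside DOCKER_LAMBDA_USE_STDIN, and it raises `maxBuffer` because spawnSync's default of 1 MiB would cut off large results.

```javascript
const { spawnSync } = require('child_process');

// Hypothetical helper: -i keeps the container's stdin open and
// DOCKER_LAMBDA_USE_STDIN=1 makes the image read the event from it.
// codeDir is expected to be an absolute path.
function buildStdinInvocation({ codeDir, runtime, handler, event }) {
  const args = [
    'run', '--rm', '-i',
    '-e', 'DOCKER_LAMBDA_USE_STDIN=1',
    '-v', `${codeDir}:/var/task:ro,delegated`,
    `lambci/lambda:${runtime}`,
  ];
  // Assumption: the handler can still be given as the first positional argument
  if (handler) args.push(handler);
  return { args, input: JSON.stringify(event) };
}

// Hypothetical helper: spawnSync writes `input` to stdin, then closes it
function runWithStdin(options) {
  const { args, input } = buildStdinInvocation(options);
  return spawnSync('docker', args, {
    input,
    encoding: 'utf8',
    maxBuffer: 64 * 1024 * 1024, // the 1 MiB default is small for large results
  });
}
```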

To use the build images, for compilation, deployment, etc:

# To compile native deps in node_modules

docker run --rm -v "$PWD":/var/task lambci/lambda:build-nodejs12.x npm rebuild --build-from-source

# To install defined poetry dependencies

docker run --rm -v "$PWD":/var/task lambci/lambda:build-python3.8 poetry install

# To resolve dependencies on go1.x (working directory is /go/src/handler)

docker run --rm -v "$PWD":/go/src/handler lambci/lambda:build-go1.x go mod download

# For .NET Core, this will publish the compiled code to `./pub`,

# which you can then use to run with `-v "$PWD"/pub:/var/task`

docker run --rm -v "$PWD":/var/task lambci/lambda:build-dotnetcore3.1 dotnet publish -c Release -o pub

# Run custom commands on a build container

docker run --rm lambci/lambda:build-python3.8 aws --version

# To run an interactive session on a build container

docker run -it lambci/lambda:build-python3.8 bash

Create your own Docker image to build and deploy:

FROM lambci/lambda:build-nodejs12.x

ENV AWS_DEFAULT_REGION=us-east-1

COPY . .

RUN npm install

RUN zip -9yr lambda.zip .

CMD aws lambda update-function-code --function-name mylambda --zip-file fileb://lambda.zip

And then:

docker build -t mylambda .

docker run --rm -e AWS_ACCESS_KEY_ID -e AWS_SECRET_ACCESS_KEY mylambda

Using the Node.js module (npm install docker-lambda) – for example in tests:

const dockerLambda = require('docker-lambda')

// Spawns synchronously, uses current dir – will throw if it fails
let lambdaCallbackResult = dockerLambda({event: {some: 'event'}, dockerImage: 'lambci/lambda:nodejs12.x'})

// Manually specify directory and custom args
lambdaCallbackResult = dockerLambda({taskDir: __dirname, dockerArgs: ['-m', '1.5G'], dockerImage: 'lambci/lambda:nodejs12.x'})

Options to pass to dockerLambda():

- dockerImage
- handler
- event
- taskDir
- cleanUp
- addEnvVars
- dockerArgs
- spawnOptions
- returnSpawnResult

These follow the Lambda runtime names:

- nodejs4.3, nodejs6.10, nodejs8.10, nodejs10.x, nodejs12.x
- python2.7, python3.6, python3.7, python3.8
- ruby2.5, ruby2.7
- java8, java8.al2, java11
- go1.x
- dotnetcore2.0, dotnetcore2.1, dotnetcore3.1
- provided, provided.al2
- build-nodejs4.3, build-nodejs6.10, build-nodejs8.10, build-nodejs10.x, build-nodejs12.x
- build-python2.7, build-python3.6, build-python3.7, build-python3.8
- build-ruby2.5, build-ruby2.7
- build-java8, build-java8.al2, build-java11
- build-go1.x
- build-dotnetcore2.0, build-dotnetcore2.1, build-dotnetcore3.1
- build-provided, build-provided.al2
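Since the tags mirror runtime names, a mistyped runtime usually only surfaces as a failed image pull. A sketch that validates the name first; `imageFor` and `RUNTIMES` are hypothetical, and the list is copied from the tags above, so it will not track any later changes.

```javascript
// Runtime names from the tag list; each one also has a `build-` variant
const RUNTIMES = [
  'nodejs4.3', 'nodejs6.10', 'nodejs8.10', 'nodejs10.x', 'nodejs12.x',
  'python2.7', 'python3.6', 'python3.7', 'python3.8',
  'ruby2.5', 'ruby2.7',
  'java8', 'java8.al2', 'java11',
  'go1.x',
  'dotnetcore2.0', 'dotnetcore2.1', 'dotnetcore3.1',
  'provided', 'provided.al2',
];

// Hypothetical helper: map a runtime name to its image reference, failing early on typos
function imageFor(runtime, { build = false } = {}) {
  if (!RUNTIMES.includes(runtime)) {
    throw new Error(`unknown runtime "${runtime}"; expected one of: ${RUNTIMES.join(', ')}`);
  }
  return `lambci/lambda:${build ? 'build-' : ''}${runtime}`;
}
```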

These images are signed using Docker Content Trust, with the following keys:

- Repository Key: e966126aacd4be5fb92e0160212dd007fc16a9b4366ef86d28fc7eb49f4d0809
- Root Key: 031d78bcdca4171be103da6ffb55e8ddfa9bd113e0ec481ade78d897d9e65c0e

You can verify/inspect an image using docker trust inspect:

$ docker trust inspect --pretty lambci/lambda:provided

Signatures for lambci/lambda:provided

SIGNED TAG DIGEST SIGNERS

provided 838c42079b5fcfd6640d486f13c1ceeb52ac661e19f9f1d240b63478e53d73f8 (Repo Admin)

Administrative keys for lambci/lambda:provided

Repository Key: e966126aacd4be5fb92e0160212dd007fc16a9b4366ef86d28fc7eb49f4d0809

Root Key: 031d78bcdca4171be103da6ffb55e8ddfa9bd113e0ec481ade78d897d9e65c0e

(The DIGEST for a given tag may not match the example above, but the Repository and Root keys should match)
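To check the keys in a script rather than by eye, drop `--pretty`, and `docker trust inspect` prints JSON instead. The sketch below assumes the JSON layout shown in Docker's documentation, where `AdministrativeKeys` holds entries named `Root` and `Repository`, each with a `Keys` array of `{ID}` objects; `checkTrustKeys` and `verifyImage` are hypothetical helpers.

```javascript
const { execFileSync } = require('child_process');

// Keys published above for the lambci/lambda images
const EXPECTED_KEYS = {
  Repository: 'e966126aacd4be5fb92e0160212dd007fc16a9b4366ef86d28fc7eb49f4d0809',
  Root: '031d78bcdca4171be103da6ffb55e8ddfa9bd113e0ec481ade78d897d9e65c0e',
};

// Returns a list of problems; an empty list means every expected key was found.
// Assumes AdministrativeKeys: [{ Name: 'Root' | 'Repository', Keys: [{ ID }] }]
function checkTrustKeys(inspectOutput, expected = EXPECTED_KEYS) {
  if (!Array.isArray(inspectOutput) || inspectOutput.length === 0) {
    return ['no trust data returned'];
  }
  const problems = [];
  for (const entry of inspectOutput) {
    const adminKeys = entry.AdministrativeKeys || [];
    for (const [role, id] of Object.entries(expected)) {
      const group = adminKeys.find((k) => k.Name === role);
      const ids = group ? group.Keys.map((k) => k.ID) : [];
      if (!ids.includes(id)) problems.push(`${entry.Name}: ${role} key mismatch`);
    }
  }
  return problems;
}

// Run the CLI without --pretty (JSON output) and check the result
function verifyImage(image) {
  const json = execFileSync('docker', ['trust', 'inspect', image], { encoding: 'utf8' });
  return checkTrustKeys(JSON.parse(json));
}
```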

Environment variables:

- AWS_LAMBDA_FUNCTION_HANDLER or _HANDLER
- AWS_LAMBDA_EVENT_BODY
- AWS_LAMBDA_FUNCTION_NAME
- AWS_LAMBDA_FUNCTION_VERSION
- AWS_LAMBDA_FUNCTION_INVOKED_ARN
- AWS_LAMBDA_FUNCTION_MEMORY_SIZE
- AWS_LAMBDA_FUNCTION_TIMEOUT
- _X_AMZN_TRACE_ID
- AWS_REGION or AWS_DEFAULT_REGION
- AWS_ACCOUNT_ID
- AWS_ACCESS_KEY_ID
- AWS_SECRET_ACCESS_KEY
- AWS_SESSION_TOKEN
- DOCKER_LAMBDA_USE_STDIN
- DOCKER_LAMBDA_STAY_OPEN
- DOCKER_LAMBDA_API_PORT
- DOCKER_LAMBDA_RUNTIME_PORT
- DOCKER_LAMBDA_DEBUG
- DOCKER_LAMBDA_NO_MODIFY_LOGS

Yum packages installed on build images:

- development (group, includes gcc-c++, autoconf, automake, git, vim, etc)
- aws-cli
- aws-sam-cli
- docker (Docker in Docker!)
- clang
- cmake

The build images for older Amazon Linux 1 based runtimes also include:

- python27-devel
- python36-devel
- ImageMagick-devel
- cairo-devel
- libssh2-devel
- libxslt-devel
- libmpc-devel
- readline-devel
- db4-devel
- libffi-devel
- expat-devel
- libicu-devel
- lua-devel
- gdbm-devel
- sqlite-devel
- pcre-devel
- libcurl-devel
- yum-plugin-ovl

- When should I use this?

When you want fast local reproducibility. When you don't want to spin up an Amazon Linux EC2 instance (indeed, network aside, this is closer to the real Lambda environment, because a default Amazon Linux instance differs from Lambda in a number of files, permissions and libraries). When you don't want to invoke a live Lambda just to test your Lambda package – you can do it locally from your dev machine or run tests on your CI system (assuming it has Docker support!)

- Wut, how?

By tarring the full filesystem in Lambda, uploading that to S3, and then piping into Docker to create a new image from scratch – then creating mock modules that will be required/included in place of the actual native modules that communicate with the real Lambda coordinating services. Only the native modules are mocked out – the actual parent JS/PY/Java runner files are left alone, so their behaviors don't need to be replicated (like the overriding of console.log, and custom defined properties like callbackWaitsForEmptyEventLoop)

- What's missing from the images?

Hard to tell – anything that's not readable – so at least /root/* – but probably a little more than that – hopefully nothing important; after all, it's not readable by Lambda, so how could it be!

- Is it really necessary to replicate exactly to this degree?

Not for many scenarios – some compiled Linux binaries work out of the box and an Amazon Linux Docker image can compile some binaries that work on Lambda too, for example – but for testing it's great to be able to reliably verify permissions issues, library linking issues, etc.

- What's this got to do with LambCI?

Technically nothing – it's just been incredibly useful during the building and testing of LambCI.