Describe the bug

- Python == 3.10

- GCC == 10.2.0

- MPFR == 4.1.0

- CUDA == 11.7

- PyTorch == 1.13.1+cu117

- Transformers >= 4.25.1

- All of the above are installed, but apex still fails to compile.

Environment information

packages/torch/include/torch/csrc/api/include/torch/serialize/archive.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data/samplers/serialize.h:4,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data/samplers.h:8,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data/datasets/chunk.h:7,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data/datasets.h:4,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data.h:4,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/all.h:8,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/extension.h:4,

from /data2/InternLM/apex/csrc/fused_dense.cpp:1:

/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/jit/ir/attributes.h:35:27: note: ‘torch::jit::toString’

35 | static inline const char* toString(AttributeKind kind) {

| ^~~~~~~~

/data2/InternLM/apex/csrc/fused_dense.cpp: In function ‘std::vector<at::Tensor> linear_gelu_linear_backward(at::Tensor, at::Tensor, at::Tensor, at::Tensor, at::Tensor, at::Tensor)’:

/data2/InternLM/apex/csrc/fused_dense.cpp:149:73: warning: ‘at::DeprecatedTypeProperties& at::Tensor::type() const’ is deprecated: Tensor.type() is deprecated. Instead use Tensor.options(), which in many cases (e.g. in a constructor) is a drop-in replacement. If you were using data from type(), that is now available from Tensor itself, so instead of tensor.type().scalar_type(), use tensor.scalar_type() instead and instead of tensor.type().backend() use tensor.device(). [-Wdeprecated-declarations]

149 | auto d_weight1 = at::empty({hidden_features, in_features}, input.type());

| ^

In file included from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/ATen/core/Tensor.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/ATen/DeviceGuard.h:4,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/ATen/ATen.h:11,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/types.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data/dataloader_options.h:4,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data/dataloader/base.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data/dataloader/stateful.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data/dataloader.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/all.h:8,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/extension.h:4,

from /data2/InternLM/apex/csrc/fused_dense.cpp:1:

/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/ATen/core/TensorBody.h:213:30: note: declared here

213 | DeprecatedTypeProperties & type() const {

| ^~~~

/data2/InternLM/apex/csrc/fused_dense.cpp:150:74: warning: ‘at::DeprecatedTypeProperties& at::Tensor::type() const’ is deprecated: Tensor.type() is deprecated. Instead use Tensor.options(), which in many cases (e.g. in a constructor) is a drop-in replacement. If you were using data from type(), that is now available from Tensor itself, so instead of tensor.type().scalar_type(), use tensor.scalar_type() instead and instead of tensor.type().backend() use tensor.device(). [-Wdeprecated-declarations]

150 | auto d_weight2 = at::empty({out_features, hidden_features}, input.type());

| ^

In file included from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/ATen/core/Tensor.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/ATen/DeviceGuard.h:4,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/ATen/ATen.h:11,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/types.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data/dataloader_options.h:4,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data/dataloader/base.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data/dataloader/stateful.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data/dataloader.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/all.h:8,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/extension.h:4,

from /data2/InternLM/apex/csrc/fused_dense.cpp:1:

/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/ATen/core/TensorBody.h:213:30: note: declared here

213 | DeprecatedTypeProperties & type() const {

| ^~~~

/data2/InternLM/apex/csrc/fused_dense.cpp:151:58: warning: ‘at::DeprecatedTypeProperties& at::Tensor::type() const’ is deprecated: Tensor.type() is deprecated. Instead use Tensor.options(), which in many cases (e.g. in a constructor) is a drop-in replacement. If you were using data from type(), that is now available from Tensor itself, so instead of tensor.type().scalar_type(), use tensor.scalar_type() instead and instead of tensor.type().backend() use tensor.device(). [-Wdeprecated-declarations]

151 | auto d_bias1 = at::empty({hidden_features}, input.type());

| ^

In file included from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/ATen/core/Tensor.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/ATen/DeviceGuard.h:4,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/ATen/ATen.h:11,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/types.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data/dataloader_options.h:4,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data/dataloader/base.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data/dataloader/stateful.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data/dataloader.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/all.h:8,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/extension.h:4,

from /data2/InternLM/apex/csrc/fused_dense.cpp:1:

/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/ATen/core/TensorBody.h:213:30: note: declared here

213 | DeprecatedTypeProperties & type() const {

| ^~~~

/data2/InternLM/apex/csrc/fused_dense.cpp:152:55: warning: ‘at::DeprecatedTypeProperties& at::Tensor::type() const’ is deprecated: Tensor.type() is deprecated. Instead use Tensor.options(), which in many cases (e.g. in a constructor) is a drop-in replacement. If you were using data from type(), that is now available from Tensor itself, so instead of tensor.type().scalar_type(), use tensor.scalar_type() instead and instead of tensor.type().backend() use tensor.device(). [-Wdeprecated-declarations]

152 | auto d_bias2 = at::empty({out_features}, input.type());

| ^

In file included from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/ATen/core/Tensor.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/ATen/DeviceGuard.h:4,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/ATen/ATen.h:11,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/types.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data/dataloader_options.h:4,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data/dataloader/base.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data/dataloader/stateful.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data/dataloader.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/all.h:8,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/extension.h:4,

from /data2/InternLM/apex/csrc/fused_dense.cpp:1:

/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/ATen/core/TensorBody.h:213:30: note: declared here

213 | DeprecatedTypeProperties & type() const {

| ^~~~

/data2/InternLM/apex/csrc/fused_dense.cpp:153:66: warning: ‘at::DeprecatedTypeProperties& at::Tensor::type() const’ is deprecated: Tensor.type() is deprecated. Instead use Tensor.options(), which in many cases (e.g. in a constructor) is a drop-in replacement. If you were using data from type(), that is now available from Tensor itself, so instead of tensor.type().scalar_type(), use tensor.scalar_type() instead and instead of tensor.type().backend() use tensor.device(). [-Wdeprecated-declarations]

153 | auto d_input = at::empty({batch_size, in_features}, input.type());

| ^

In file included from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/ATen/core/Tensor.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/ATen/DeviceGuard.h:4,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/ATen/ATen.h:11,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/types.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data/dataloader_options.h:4,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data/dataloader/base.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data/dataloader/stateful.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data/dataloader.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/all.h:8,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/extension.h:4,

from /data2/InternLM/apex/csrc/fused_dense.cpp:1:

/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/ATen/core/TensorBody.h:213:30: note: declared here

213 | DeprecatedTypeProperties & type() const {

| ^~~~

/data2/InternLM/apex/csrc/fused_dense.cpp:154:72: warning: ‘at::DeprecatedTypeProperties& at::Tensor::type() const’ is deprecated: Tensor.type() is deprecated. Instead use Tensor.options(), which in many cases (e.g. in a constructor) is a drop-in replacement. If you were using data from type(), that is now available from Tensor itself, so instead of tensor.type().scalar_type(), use tensor.scalar_type() instead and instead of tensor.type().backend() use tensor.device(). [-Wdeprecated-declarations]

154 | auto d_output1 = at::empty({batch_size, hidden_features}, input.type());

| ^

In file included from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/ATen/core/Tensor.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/ATen/DeviceGuard.h:4,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/ATen/ATen.h:11,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/types.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data/dataloader_options.h:4,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data/dataloader/base.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data/dataloader/stateful.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data/dataloader.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/all.h:8,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/extension.h:4,

from /data2/InternLM/apex/csrc/fused_dense.cpp:1:

/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/ATen/core/TensorBody.h:213:30: note: declared here

213 | DeprecatedTypeProperties & type() const {

| ^~~~

/data2/InternLM/apex/csrc/fused_dense.cpp:157:55: warning: ‘at::DeprecatedTypeProperties& at::Tensor::type() const’ is deprecated: Tensor.type() is deprecated. Instead use Tensor.options(), which in many cases (e.g. in a constructor) is a drop-in replacement. If you were using data from type(), that is now available from Tensor itself, so instead of tensor.type().scalar_type(), use tensor.scalar_type() instead and instead of tensor.type().backend() use tensor.device(). [-Wdeprecated-declarations]

157 | auto lt_workspace = at::empty({1 << 22}, input.type());

| ^

In file included from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/ATen/core/Tensor.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/ATen/DeviceGuard.h:4,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/ATen/ATen.h:11,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/types.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data/dataloader_options.h:4,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data/dataloader/base.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data/dataloader/stateful.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data/dataloader.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/all.h:8,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/extension.h:4,

from /data2/InternLM/apex/csrc/fused_dense.cpp:1:

/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/ATen/core/TensorBody.h:213:30: note: declared here

213 | DeprecatedTypeProperties & type() const {

| ^~~~

In file included from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/ATen/ATen.h:13,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/types.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data/dataloader_options.h:4,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data/dataloader/base.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data/dataloader/stateful.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data/dataloader.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/all.h:8,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/extension.h:4,

from /data2/InternLM/apex/csrc/fused_dense.cpp:1:

/data2/InternLM/apex/csrc/fused_dense.cpp: In lambda function:

/data2/InternLM/apex/csrc/fused_dense.cpp:159:94: warning: ‘at::DeprecatedTypeProperties& at::Tensor::type() const’ is deprecated: Tensor.type() is deprecated. Instead use Tensor.options(), which in many cases (e.g. in a constructor) is a drop-in replacement. If you were using data from type(), that is now available from Tensor itself, so instead of tensor.type().scalar_type(), use tensor.scalar_type() instead and instead of tensor.type().backend() use tensor.device(). [-Wdeprecated-declarations]

159 | AT_DISPATCH_FLOATING_TYPES_AND2(at::ScalarType::Half, at::ScalarType::BFloat16, input.type(), "linear_bias_backward", [&] {

| ^

/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/ATen/Dispatch.h:312:28: note: in definition of macro ‘AT_DISPATCH_FLOATING_TYPES_AND2’

312 | const auto& the_type = TYPE;

| ^~~~

In file included from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/ATen/core/Tensor.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/ATen/DeviceGuard.h:4,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/ATen/ATen.h:11,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/types.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data/dataloader_options.h:4,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data/dataloader/base.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data/dataloader/stateful.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data/dataloader.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/all.h:8,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/extension.h:4,

from /data2/InternLM/apex/csrc/fused_dense.cpp:1:

/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/ATen/core/TensorBody.h:213:30: note: declared here

213 | DeprecatedTypeProperties & type() const {

| ^~~~

In file included from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/ATen/ATen.h:13,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/types.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data/dataloader_options.h:4,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data/dataloader/base.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data/dataloader/stateful.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data/dataloader.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/all.h:8,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/extension.h:4,

from /data2/InternLM/apex/csrc/fused_dense.cpp:1:

/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/ATen/Dispatch.h:314:56: warning: ‘c10::ScalarType detail::scalar_type(const at::DeprecatedTypeProperties&)’ is deprecated: passing at::DeprecatedTypeProperties to an AT_DISPATCH macro is deprecated, pass an at::ScalarType instead [-Wdeprecated-declarations]

314 | at::ScalarType st = ::detail::scalar_type(the_type);

| ^

/data2/InternLM/apex/csrc/fused_dense.cpp:159:3: note: in expansion of macro ‘AT_DISPATCH_FLOATING_TYPES_AND2’

159 | AT_DISPATCH_FLOATING_TYPES_AND2(at::ScalarType::Half, at::ScalarType::BFloat16, input.type(), "linear_bias_backward", [&] {

| ^~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/ATen/Dispatch.h:178:23: note: declared here

178 | inline at::ScalarType scalar_type(const at::DeprecatedTypeProperties& t) {

| ^~~~~~~~~~~

/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/ATen/Dispatch.h:314:56: warning: ‘c10::ScalarType detail::scalar_type(const at::DeprecatedTypeProperties&)’ is deprecated: passing at::DeprecatedTypeProperties to an AT_DISPATCH macro is deprecated, pass an at::ScalarType instead [-Wdeprecated-declarations]

314 | at::ScalarType st = ::detail::scalar_type(the_type);

| ^

/data2/InternLM/apex/csrc/fused_dense.cpp:159:3: note: in expansion of macro ‘AT_DISPATCH_FLOATING_TYPES_AND2’

159 | AT_DISPATCH_FLOATING_TYPES_AND2(at::ScalarType::Half, at::ScalarType::BFloat16, input.type(), "linear_bias_backward", [&] {

| ^~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/ATen/Dispatch.h:178:23: note: declared here

178 | inline at::ScalarType scalar_type(const at::DeprecatedTypeProperties& t) {

| ^~~~~~~~~~~

/data2/InternLM/apex/csrc/fused_dense.cpp: In lambda function:

/data2/InternLM/apex/csrc/fused_dense.cpp:163:10: warning: unused variable ‘result’ [-Wunused-variable]

163 | auto result = linear_gelu_linear_backward_cuda<scalar_t>(

| ^~~~~~

/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/ATen/Dispatch.h:68:12: note: in definition of macro ‘AT_PRIVATE_CASE_TYPE_USING_HINT’

68 | return __VA_ARGS__();

| ^~~~~~~~~~~

/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/ATen/Dispatch.h:317:7: note: in expansion of macro ‘AT_PRIVATE_CASE_TYPE’

317 | AT_PRIVATE_CASE_TYPE(NAME, at::ScalarType::Double, double, __VA_ARGS__)

| ^~~~~~~~~~~~~~~~~~~~

/data2/InternLM/apex/csrc/fused_dense.cpp:159:3: note: in expansion of macro ‘AT_DISPATCH_FLOATING_TYPES_AND2’

159 | AT_DISPATCH_FLOATING_TYPES_AND2(at::ScalarType::Half, at::ScalarType::BFloat16, input.type(), "linear_bias_backward", [&] {

| ^~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

/data2/InternLM/apex/csrc/fused_dense.cpp: In lambda function:

/data2/InternLM/apex/csrc/fused_dense.cpp:163:10: warning: unused variable ‘result’ [-Wunused-variable]

163 | auto result = linear_gelu_linear_backward_cuda<scalar_t>(

| ^~~~~~

/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/ATen/Dispatch.h:68:12: note: in definition of macro ‘AT_PRIVATE_CASE_TYPE_USING_HINT’

68 | return __VA_ARGS__();

| ^~~~~~~~~~~

/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/ATen/Dispatch.h:318:7: note: in expansion of macro ‘AT_PRIVATE_CASE_TYPE’

318 | AT_PRIVATE_CASE_TYPE(NAME, at::ScalarType::Float, float, __VA_ARGS__)

| ^~~~~~~~~~~~~~~~~~~~

/data2/InternLM/apex/csrc/fused_dense.cpp:159:3: note: in expansion of macro ‘AT_DISPATCH_FLOATING_TYPES_AND2’

159 | AT_DISPATCH_FLOATING_TYPES_AND2(at::ScalarType::Half, at::ScalarType::BFloat16, input.type(), "linear_bias_backward", [&] {

| ^~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

/data2/InternLM/apex/csrc/fused_dense.cpp: In lambda function:

/data2/InternLM/apex/csrc/fused_dense.cpp:163:10: warning: unused variable ‘result’ [-Wunused-variable]

163 | auto result = linear_gelu_linear_backward_cuda<scalar_t>(

| ^~~~~~

/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/ATen/Dispatch.h:68:12: note: in definition of macro ‘AT_PRIVATE_CASE_TYPE_USING_HINT’

68 | return __VA_ARGS__();

| ^~~~~~~~~~~

/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/ATen/Dispatch.h:319:7: note: in expansion of macro ‘AT_PRIVATE_CASE_TYPE’

319 | AT_PRIVATE_CASE_TYPE(

| ^~~~~~~~~~~~~~~~~~~~

/data2/InternLM/apex/csrc/fused_dense.cpp:159:3: note: in expansion of macro ‘AT_DISPATCH_FLOATING_TYPES_AND2’

159 | AT_DISPATCH_FLOATING_TYPES_AND2(at::ScalarType::Half, at::ScalarType::BFloat16, input.type(), "linear_bias_backward", [&] {

| ^~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

/data2/InternLM/apex/csrc/fused_dense.cpp: In lambda function:

/data2/InternLM/apex/csrc/fused_dense.cpp:163:10: warning: unused variable ‘result’ [-Wunused-variable]

163 | auto result = linear_gelu_linear_backward_cuda<scalar_t>(

| ^~~~~~

/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/ATen/Dispatch.h:68:12: note: in definition of macro ‘AT_PRIVATE_CASE_TYPE_USING_HINT’

68 | return __VA_ARGS__();

| ^~~~~~~~~~~

/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/ATen/Dispatch.h:324:7: note: in expansion of macro ‘AT_PRIVATE_CASE_TYPE’

324 | AT_PRIVATE_CASE_TYPE(

| ^~~~~~~~~~~~~~~~~~~~

/data2/InternLM/apex/csrc/fused_dense.cpp:159:3: note: in expansion of macro ‘AT_DISPATCH_FLOATING_TYPES_AND2’

159 | AT_DISPATCH_FLOATING_TYPES_AND2(at::ScalarType::Half, at::ScalarType::BFloat16, input.type(), "linear_bias_backward", [&] {

| ^~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

In file included from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/c10/core/Device.h:5,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/c10/core/Allocator.h:6,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/ATen/ATen.h:7,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/types.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data/dataloader_options.h:4,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data/dataloader/base.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data/dataloader/stateful.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data/dataloader.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/all.h:8,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/extension.h:4,

from /data2/InternLM/apex/csrc/fused_dense.cpp:1:

/data2/InternLM/apex/csrc/fused_dense.cpp: In lambda function:

/data2/InternLM/apex/csrc/fused_dense.cpp:159:94: warning: ‘at::DeprecatedTypeProperties& at::Tensor::type() const’ is deprecated: Tensor.type() is deprecated. Instead use Tensor.options(), which in many cases (e.g. in a constructor) is a drop-in replacement. If you were using data from type(), that is now available from Tensor itself, so instead of tensor.type().scalar_type(), use tensor.scalar_type() instead and instead of tensor.type().backend() use tensor.device(). [-Wdeprecated-declarations]

159 | AT_DISPATCH_FLOATING_TYPES_AND2(at::ScalarType::Half, at::ScalarType::BFloat16, input.type(), "linear_bias_backward", [&] {

| ^

/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/c10/util/Exception.h:260:39: note: in definition of macro ‘C10_EXPAND_MSVC_WORKAROUND’

260 | #define C10_EXPAND_MSVC_WORKAROUND(x) x

| ^

/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/c10/util/Exception.h:465:9: note: in expansion of macro ‘TORCH_CHECK_MSG’

465 | TORCH_CHECK_MSG(cond, "", ##__VA_ARGS__));

| ^~~~~~~~~~~~~~~

/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/c10/util/Exception.h:637:32: note: in expansion of macro ‘TORCH_CHECK’

637 | C10_EXPAND_MSVC_WORKAROUND(TORCH_CHECK(false, ::c10::str(__VA_ARGS__)));

| ^~~~~~~~~~~

/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/ATen/Dispatch.h:330:9: note: in expansion of macro ‘AT_ERROR’

330 | AT_ERROR(#NAME, " not implemented for '", toString(TYPE), "'");

| ^~~~~~~~

/data2/InternLM/apex/csrc/fused_dense.cpp:159:3: note: in expansion of macro ‘AT_DISPATCH_FLOATING_TYPES_AND2’

159 | AT_DISPATCH_FLOATING_TYPES_AND2(at::ScalarType::Half, at::ScalarType::BFloat16, input.type(), "linear_bias_backward", [&] {

| ^~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

In file included from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/ATen/core/Tensor.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/ATen/DeviceGuard.h:4,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/ATen/ATen.h:11,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/types.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data/dataloader_options.h:4,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data/dataloader/base.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data/dataloader/stateful.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data/dataloader.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/all.h:8,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/extension.h:4,

from /data2/InternLM/apex/csrc/fused_dense.cpp:1:

/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/ATen/core/TensorBody.h:213:30: note: declared here

213 | DeprecatedTypeProperties & type() const {

| ^~~~

In file included from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/c10/core/Device.h:5,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/c10/core/Allocator.h:6,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/ATen/ATen.h:7,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/types.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data/dataloader_options.h:4,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data/dataloader/base.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data/dataloader/stateful.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data/dataloader.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/all.h:8,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/extension.h:4,

from /data2/InternLM/apex/csrc/fused_dense.cpp:1:

/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/ATen/Dispatch.h:330:51: error: ‘toString’ was not declared in this scope

330 | AT_ERROR(#NAME, " not implemented for '", toString(TYPE), "'");

| ^~~~~~~~

/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/c10/util/Exception.h:260:39: note: in definition of macro ‘C10_EXPAND_MSVC_WORKAROUND’

260 | #define C10_EXPAND_MSVC_WORKAROUND(x) x

| ^

/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/c10/util/Exception.h:465:9: note: in expansion of macro ‘TORCH_CHECK_MSG’

465 | TORCH_CHECK_MSG(cond, "", ##__VA_ARGS__));

| ^~~~~~~~~~~~~~~

/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/c10/util/Exception.h:637:32: note: in expansion of macro ‘TORCH_CHECK’

637 | C10_EXPAND_MSVC_WORKAROUND(TORCH_CHECK(false, ::c10::str(__VA_ARGS__)));

| ^~~~~~~~~~~

/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/ATen/Dispatch.h:330:9: note: in expansion of macro ‘AT_ERROR’

330 | AT_ERROR(#NAME, " not implemented for '", toString(TYPE), "'");

| ^~~~~~~~

/data2/InternLM/apex/csrc/fused_dense.cpp:159:3: note: in expansion of macro ‘AT_DISPATCH_FLOATING_TYPES_AND2’

159 | AT_DISPATCH_FLOATING_TYPES_AND2(at::ScalarType::Half, at::ScalarType::BFloat16, input.type(), "linear_bias_backward", [&] {

| ^~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/ATen/Dispatch.h:330:51: note: suggested alternatives:

330 | AT_ERROR(#NAME, " not implemented for '", toString(TYPE), "'");

| ^~~~~~~~

/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/c10/util/Exception.h:260:39: note: in definition of macro ‘C10_EXPAND_MSVC_WORKAROUND’

260 | #define C10_EXPAND_MSVC_WORKAROUND(x) x

| ^

/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/c10/util/Exception.h:465:9: note: in expansion of macro ‘TORCH_CHECK_MSG’

465 | TORCH_CHECK_MSG(cond, "", ##__VA_ARGS__));

| ^~~~~~~~~~~~~~~

/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/c10/util/Exception.h:637:32: note: in expansion of macro ‘TORCH_CHECK’

637 | C10_EXPAND_MSVC_WORKAROUND(TORCH_CHECK(false, ::c10::str(__VA_ARGS__)));

| ^~~~~~~~~~~

/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/ATen/Dispatch.h:330:9: note: in expansion of macro ‘AT_ERROR’

330 | AT_ERROR(#NAME, " not implemented for '", toString(TYPE), "'");

| ^~~~~~~~

/data2/InternLM/apex/csrc/fused_dense.cpp:159:3: note: in expansion of macro ‘AT_DISPATCH_FLOATING_TYPES_AND2’

159 | AT_DISPATCH_FLOATING_TYPES_AND2(at::ScalarType::Half, at::ScalarType::BFloat16, input.type(), "linear_bias_backward", [&] {

| ^~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

In file included from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/ATen/core/op_registration/infer_schema.h:8,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/library.h:61,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/types.h:12,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data/dataloader_options.h:4,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data/dataloader/base.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data/dataloader/stateful.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data/dataloader.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/all.h:8,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/extension.h:4,

from /data2/InternLM/apex/csrc/fused_dense.cpp:1:

/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/ATen/core/function_schema.h:522:20: note: ‘c10::toString’

522 | inline std::string toString(const FunctionSchema& schema) {

| ^~~~~~~~

In file included from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/jit/ir/ir.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/jit/api/function_impl.h:4,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/jit/api/method.h:7,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/jit/api/object.h:6,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/jit/api/module.h:4,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/serialize/input-archive.h:7,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/serialize/archive.h:3,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data/samplers/serialize.h:4,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data/samplers.h:8,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data/datasets/chunk.h:7,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data/datasets.h:4,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/data.h:4,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include/torch/all.h:8,

from /data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/extension.h:4,

from /data2/InternLM/apex/csrc/fused_dense.cpp:1:

/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/jit/ir/attributes.h:35:27: note: ‘torch::jit::toString’

35 | static inline const char* toString(AttributeKind kind) {

| ^~~~~~~~

[2/2] /usr/local/cuda-11.3/bin/nvcc -I/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include -I/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/torch/csrc/api/include -I/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/TH -I/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/THC -I/usr/local/cuda-11.3/include -I/data1/lym/anaconda3/envs/lab2/include/python3.10 -c -c /data2/InternLM/apex/csrc/fused_dense_cuda.cu -o /data2/InternLM/apex/build/temp.linux-x86_64-cpython-310/csrc/fused_dense_cuda.o -D__CUDA_NO_HALF_OPERATORS -D__CUDA_NO_HALF_CONVERSIONS__ -D__CUDA_NO_BFLOAT16_CONVERSIONS__ -D__CUDA_NO_HALF2_OPERATORS__ --expt-relaxed-constexpr --compiler-options ''"'"'-fPIC'"'"'' -O3 -DVERSION_GE_1_1 -DVERSION_GE_1_3 -DVERSION_GE_1_5 -DTORCH_API_INCLUDE_EXTENSION_H '-DPYBIND11_COMPILER_TYPE="_gcc"' '-DPYBIND11_STDLIB="_libstdcpp"' '-DPYBIND11_BUILD_ABI="_cxxabi1011"' -DTORCH_EXTENSION_NAME=fused_dense_cuda -D_GLIBCXX_USE_CXX11_ABI=0 -gencode=arch=compute_86,code=compute_86 -gencode=arch=compute_86,code=sm_86 -std=c++14

/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/c10/core/SymInt.h(84): warning: integer conversion resulted in a change of sign

/data2/InternLM/apex/csrc/fused_dense_cuda.cu(1631): warning: variable "beta_zero" was declared but never referenced

/data2/InternLM/apex/csrc/fused_dense_cuda.cu(1755): warning: variable "alpha" was declared but never referenced

/data2/InternLM/apex/csrc/fused_dense_cuda.cu(1756): warning: variable "beta_zero" was declared but never referenced

/data2/InternLM/apex/csrc/fused_dense_cuda.cu(1757): warning: variable "status" was declared but never referenced

/data2/InternLM/apex/csrc/fused_dense_cuda.cu(1812): warning: variable "alpha" was declared but never referenced

/data2/InternLM/apex/csrc/fused_dense_cuda.cu(1813): warning: variable "beta_zero" was declared but never referenced

/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/c10/core/SymInt.h(84): warning: integer conversion resulted in a change of sign

ninja: build stopped: subcommand failed.

Traceback (most recent call last):

File "/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/utils/cpp_extension.py", line 1808, in _run_ninja_build

subprocess.run(

File "/data1/lym/anaconda3/envs/lab2/lib/python3.10/subprocess.py", line 524, in run

raise CalledProcessError(retcode, process.args,

subprocess.CalledProcessError: Command '['ninja', '-v']' returned non-zero exit status 1.

The above exception was the direct cause of the following exception:

Traceback (most recent call last):

File "", line 2, in

File "", line 34, in

File "/data2/InternLM/apex/setup.py", line 795, in

setup(

File "/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/setuptools/__init__.py", line 107, in setup

return distutils.core.setup(**attrs)

File "/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/setuptools/_distutils/core.py", line 185, in setup

return run_commands(dist)

File "/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/setuptools/_distutils/core.py", line 201, in run_commands

dist.run_commands()

File "/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/setuptools/_distutils/dist.py", line 969, in run_commands

self.run_command(cmd)

File "/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/setuptools/dist.py", line 1234, in run_command

super().run_command(command)

File "/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/setuptools/_distutils/dist.py", line 988, in run_command

cmd_obj.run()

File "/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/setuptools/command/install.py", line 74, in run

return orig.install.run(self)

File "/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/setuptools/_distutils/command/install.py", line 697, in run

self.run_command('build')

File "/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/setuptools/_distutils/cmd.py", line 318, in run_command

self.distribution.run_command(command)

File "/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/setuptools/dist.py", line 1234, in run_command

super().run_command(command)

File "/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/setuptools/_distutils/dist.py", line 988, in run_command

cmd_obj.run()

File "/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/setuptools/_distutils/command/build.py", line 131, in run

self.run_command(cmd_name)

File "/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/setuptools/_distutils/cmd.py", line 318, in run_command

self.distribution.run_command(command)

File "/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/setuptools/dist.py", line 1234, in run_command

super().run_command(command)

File "/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/setuptools/_distutils/dist.py", line 988, in run_command

cmd_obj.run()

File "/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/setuptools/command/build_ext.py", line 84, in run

_build_ext.run(self)

File "/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/setuptools/_distutils/command/build_ext.py", line 345, in run

self.build_extensions()

File "/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/utils/cpp_extension.py", line 765, in build_extensions

build_ext.build_extensions(self)

File "/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/setuptools/_distutils/command/build_ext.py", line 467, in build_extensions

self._build_extensions_serial()

File "/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/setuptools/_distutils/command/build_ext.py", line 493, in _build_extensions_serial

self.build_extension(ext)

File "/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/setuptools/command/build_ext.py", line 246, in build_extension

_build_ext.build_extension(self, ext)

File "/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/setuptools/_distutils/command/build_ext.py", line 548, in build_extension

objects = self.compiler.compile(

File "/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/utils/cpp_extension.py", line 586, in unix_wrap_ninja_compile

_write_ninja_file_and_compile_objects(

File "/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/utils/cpp_extension.py", line 1487, in _write_ninja_file_and_compile_objects

_run_ninja_build(

File "/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/utils/cpp_extension.py", line 1824, in _run_ninja_build

raise RuntimeError(message) from e

RuntimeError: Error compiling objects for extension

error: subprocess-exited-with-error

× Running setup.py install for apex did not run successfully.

│ exit code: 1

╰─> See above for output.

note: This error originates from a subprocess, and is likely not a problem with pip.

full command: /data1/lym/anaconda3/envs/lab2/bin/python -u -c '

exec(compile('"'"''"'"''"'"'

This is -- a caller that pip uses to run setup.py

- It imports setuptools before invoking setup.py, to enable projects that directly

import from distutils.core to work with newer packaging standards.

- It provides a clear error message when setuptools is not installed.

- It sets sys.argv[0] to the underlying setup.py, when invoking setup.py so

setuptools doesn'"'"'t think the script is -c. This avoids the following warning:

manifest_maker: standard file '"'"'-c'"'"' not found".

- It generates a shim setup.py, for handling setup.cfg-only projects.

import os, sys, tokenize

try:

import setuptools

except ImportError as error:

print(

"ERROR: Can not execute setup.py since setuptools is not available in "

"the build environment.",

file=sys.stderr,

)

sys.exit(1)

__file__ = %r

sys.argv[0] = __file__

if os.path.exists(__file__):

filename = __file__

with tokenize.open(__file__) as f:

setup_py_code = f.read()

else:

filename = ""

setup_py_code = "from setuptools import setup; setup()"

exec(compile(setup_py_code, filename, "exec"))

'"'"''"'"''"'"' % ('"'"'/data2/InternLM/apex/setup.py'"'"',), "", "exec"))' --cpp_ext --cuda_ext install --record /tmp/pip-record-_mp6xe2r/install-record.txt --single-version-externally-managed --compile --install-headers /data1/lym/anaconda3/envs/lab2/include/python3.10/apex

cwd: /data2/InternLM/apex/

Running setup.py install for apex ... error

error: legacy-install-failure

× Encountered error while trying to install package.

╰─> apex

note: This is an issue with the package mentioned above, not pip.

hint: See above for output from the failure.
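
The log above is long, and most of it is warnings, include chains, and the pip traceback. A minimal sketch for pulling out only the GCC-style `error:` diagnostics is shown here; the helper name `first_errors` and the two-line sample are illustrative and not part of apex or pip. In this log the only match is the `toString` error at `Dispatch.h:330`, reached through the `AT_DISPATCH_FLOATING_TYPES_AND2` call at `fused_dense.cpp:159`, where `input.type()` is passed. One plausible reading, which is an assumption and not confirmed by the log, is that the macro in this PyTorch version expects `input.scalar_type()`, as the deprecation warning itself suggests.

```python
import re

# Matches GCC-style diagnostics of the form "<file>:<line>:<col>: error: <message>".
GCC_ERROR = re.compile(
    r"^(?P<file>\S+?):(?P<line>\d+):(?P<col>\d+): error: (?P<msg>.*)$"
)


def first_errors(log_text, limit=5):
    """Return up to `limit` (file, line, message) tuples for GCC-style errors."""
    found = []
    for raw in log_text.splitlines():
        match = GCC_ERROR.match(raw.strip())
        if match:
            found.append(
                (match.group("file"), int(match.group("line")), match.group("msg"))
            )
            if len(found) >= limit:
                break
    return found


# Three lines copied from the log in this issue: one warning, one error, one ninja summary.
sample_log = """\
/data2/InternLM/apex/csrc/fused_dense_cuda.cu(1631): warning: variable "beta_zero" was declared but never referenced
/data1/lym/anaconda3/envs/lab2/lib/python3.10/site-packages/torch/include/ATen/Dispatch.h:330:51: error: ‘toString’ was not declared in this scope
ninja: build stopped: subcommand failed.
"""

for path, line, msg in first_errors(sample_log):
    print(f"{path}:{line}: {msg}")
```

Saving the full pip output to a file and passing its contents to `first_errors` should narrow the search to the lines that actually stopped the build.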

Other information

No response
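
One detail in the log that may be worth ruling out: the `[2/2]` step invokes `/usr/local/cuda-11.3/bin/nvcc`, while the environment list reports CUDA 11.7 and PyTorch `1.13.1+cu117`. The sketch below compares the two versions using only strings taken from this issue; the function names are hypothetical helpers, not part of PyTorch or apex. Whether this mismatch is related to the `toString` error is not established here, since that error comes from the host compile of `fused_dense.cpp`.

```python
import re


def toolkit_version_from_nvcc_path(command):
    """Extract 'major.minor' from a path such as /usr/local/cuda-11.3/bin/nvcc."""
    match = re.search(r"/cuda-(\d+)\.(\d+)/bin/nvcc", command)
    return f"{match.group(1)}.{match.group(2)}" if match else None


def cuda_version_from_torch_tag(torch_version):
    """Turn a wheel tag such as '1.13.1+cu117' into '11.7'."""
    match = re.search(r"\+cu(\d+)(\d)$", torch_version)
    return f"{match.group(1)}.{match.group(2)}" if match else None


def versions_match(nvcc_command, torch_version):
    """True only when both versions can be parsed and are identical."""
    toolkit = toolkit_version_from_nvcc_path(nvcc_command)
    wheel = cuda_version_from_torch_tag(torch_version)
    return toolkit is not None and toolkit == wheel


# Values copied from the build log and the environment list in this issue.
nvcc_command = "/usr/local/cuda-11.3/bin/nvcc"
torch_version = "1.13.1+cu117"

print("nvcc toolkit:", toolkit_version_from_nvcc_path(nvcc_command))
print("torch wheel :", cuda_version_from_torch_tag(torch_version))
print("match       :", versions_match(nvcc_command, torch_version))
```

On a live machine the same comparison can be made with `torch.version.cuda` and the output of `nvcc --version`. If they differ, pointing `CUDA_HOME` at the matching toolkit before rebuilding is a common first step.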

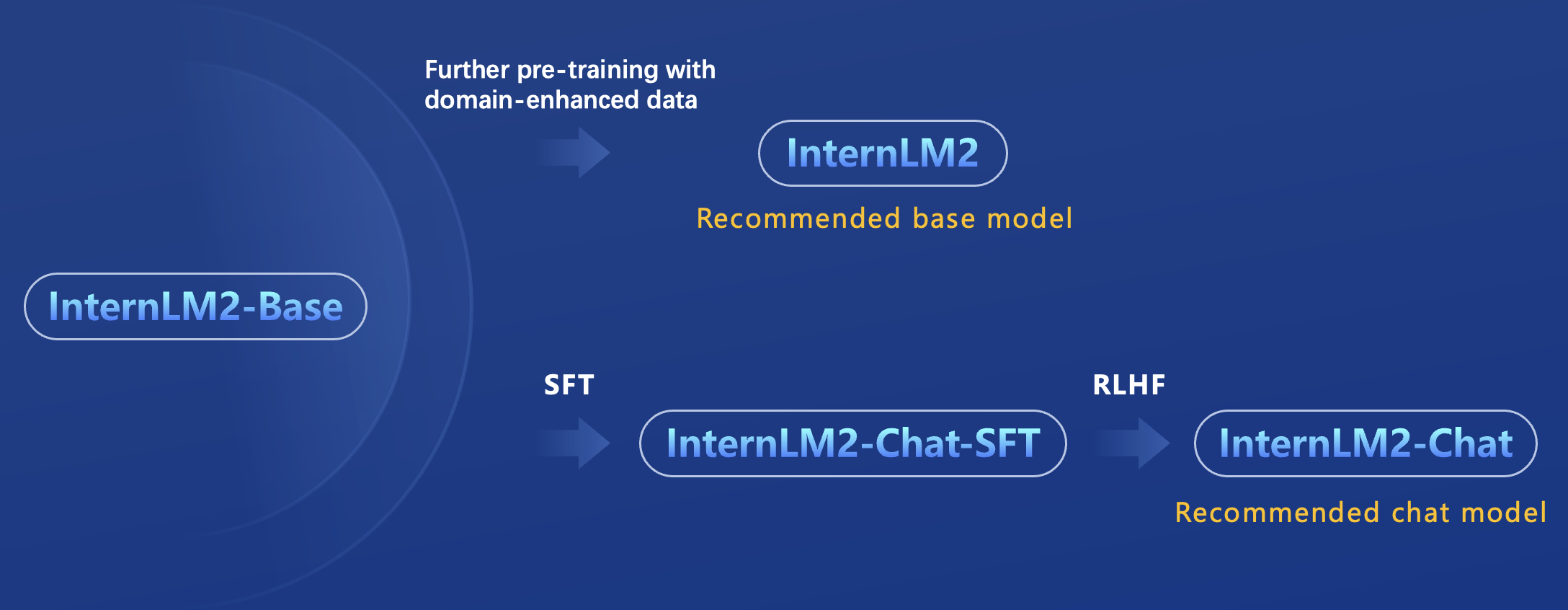

internlm2-1.8b

internlm2-1.8b

internlm2-chat-1.8b-sft

internlm2-chat-1.8b-sft

internlm2-chat-1.8b

internlm2-chat-1.8b

internlm2-base-7b

internlm2-base-7b

internlm2-7b

internlm2-7b

internlm2-chat-7b-sft

internlm2-chat-7b-sft

internlm2-chat-7b

internlm2-chat-7b

internlm2-base-20b

internlm2-base-20b

internlm2-20b

internlm2-20b

internlm2-chat-20b-sft

internlm2-chat-20b-sft

internlm2-chat-20b

internlm2-chat-20b