TimeSHAP is a model-agnostic, recurrent explainer that builds upon KernelSHAP and extends it to the sequential domain. TimeSHAP computes event/timestamp- feature-, and cell-level attributions. As sequences can be arbitrarily long, TimeSHAP also implements a pruning algorithm based on Shapley Values, that finds a subset of consecutive, recent events that contribute the most to the decision.

This repository is the code implementation of the TimeSHAP algorithm

present in the paper TimeSHAP: Explaining Recurrent Models through Sequence Perturbations

published at KDD 2021.

Links to the paper here, and to the video presentation here.

pip install timeshap

Clone the repository into a local directory using:

git clone https://github.com/feedzai/timeshap.git

Move into the cloned repo and install the package:

cd timeshap

pip install .

Start a Python session in your terminal using

python

And import TimeSHAP

import timeshap

- Model being explained;

- Instance(s) to explain;

- Background instance.

- Local pruning output; (explaining a single instance)

- Local event explanations; (explaining a single instance)

- Local feature explanations; (explaining a single instance)

- Global pruning statistics; (explaining multiple instances)

- Global event explanations; (explaining multiple instances)

- Global feature explanations; (explaining multiple instances)

In order for TimeSHAP to explain a model, an entry point must be provided.

This Callable entry point must receive a 3-D numpy array, (#sequences; #sequence length; #features)

and return a 2-D numpy array (#sequences; 1) with the corresponding score of each sequence.

In addition, to make TimeSHAP more optimized, it is possible to return the hidden state of the model together with the score (if applicable). Although this is optional, we highly recommended it, as it has a very high impact. If you choose to return the hidden state, this hidden state should either be: (see notebook for specific examples)

- a 3-D numpy array,

(#rnn layers, #sequences, #hidden_dimension)(classExplainedRNNon notebook); - a tuple of numpy arrays that follows the previously described characteristic

(usually used when using stacked RNNs with different hidden dimensions) (class

ExplainedGRU2Layeron notebook); - a tuple of tuples of numpy arrays (usually used when using LSTM's) (class

ExplainedLSTMon notebook);; TimeSHAP is able to explain any black-box model as long as it complies with the previously described interface, including both PyTorch and TensorFlow models, both examplified in our tutorials (PyTorch, TensorFlow).

Example provided in our tutorials:

- TensorFLow

model = tf.keras.models.Model(inputs=inputs, outputs=ff2)

f = lambda x: model.predict(x)

- Pytorch - (Example where model receives and returns hidden states)

model_wrapped = TorchModelWrapper(model)

f_hs = lambda x, y=None: model_wrapped.predict_last_hs(x, y)

In order to facilitate the interface between models and TimeSHAP,

TimeSHAP implements ModelWrappers. These wrappers, used on the PyTorch

tutorial notebook, allow for greater flexibility

of explained models as they allow:

- Batching logic: useful when using very large inputs or NSamples, which cannot fit on GPU memory, and therefore batching mechanisms are required;

- Input format/type: useful when your model does not work with numpy arrays. This is the case of our provided PyToch example;

- Hidden state logic: useful when the hidden states of your models do not match the hidden state format required by TimeSHAP

TimeSHAP offers several methods to use depending on the desired explanations. Local methods provide detailed view of a model decision corresponding to a specific sequence being explained. Global methods aggregate local explanations of a given dataset to present a global view of the model.

local_pruning() performs the pruning

algorithm on a given sequence with a given user defined tolerance and returns

the pruning index along the information for plotting.

plot_temp_coalition_pruning() plots the pruning

algorithm information calculated by local_pruning().

local_event() calculates event level explanations

of a given sequence with the user-given parameteres and returns the respective

event-level explanations.

plot_event_heatmap() plots the event-level explanations

calculated by local_event().

local_feat() calculates feature level explanations

of a given sequence with the user-given parameteres and returns the respective

feature-level explanations.

plot_feat_barplot() plots the feature-level explanations

calculated by local_feat().

local_cell_level() calculates cell level explanations

of a given sequence with the respective event- and feature-level explanations

and user-given parameteres, returing the respective cell-level explanations.

plot_cell_level() plots the feature-level explanations

calculated by local_cell_level().

local_report() calculates TimeSHAP

local explanations for a given sequence and plots them.

prune_all() performs the pruning

algorithm on multiple given sequences.

pruning_statistics() calculates the pruning

statistics for several user-given pruning tolerances using the pruning

data calculated by prune_all(), returning a pandas.DataFrame with the statistics.

event_explain_all() calculates TimeSHAP

event level explanations for multiple instances given user defined parameters.

plot_global_event() plots the global event-level explanations

calculated by event_explain_all().

feat_explain_all() calculates TimeSHAP

feature level explanations for multiple instances given user defined parameters.

plot_global_feat() plots the global feature-level

explanations calculated by feat_explain_all().

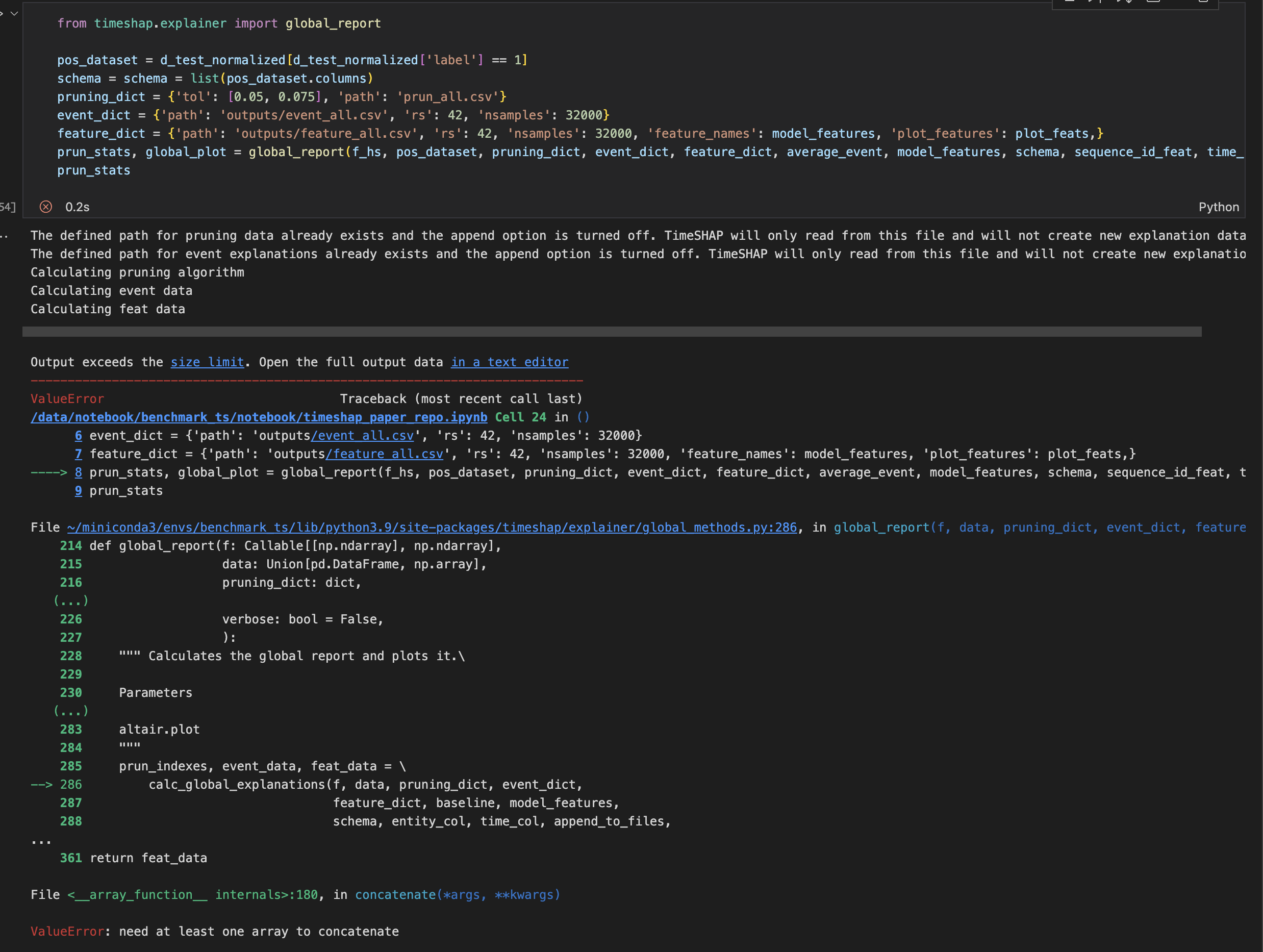

global_report() calculates TimeSHAP

explanations for multiple instances, aggregating the explanations on two plots

and returning them.

In order to demonstrate TimeSHAP interfaces and methods, you can consult AReM.ipynb. In this tutorial we get an open-source dataset, process it, train Pytorch recurrent model with it and use TimeSHAP to explain it, showcasing all previously described methods.

Additionally, we also train a TensorFlow model on the same dataset AReM_TF.ipynb.

notebooks- tutorial notebooks demonstrating the package;src/timeshap- the package source code;src/timeshap/explainer- TimeSHAP methods to produce the explanationssrc/timeshap/explainer/kernel- TimeSHAPKernelsrc/timeshap/plot- TimeSHAP methods to produce explanation plotssrc/timeshap/utils- util methods for TimeSHAP executionsrc/timeshap/wrappers- Wrapper classes for models in order to ease TimeSHAP explanations

@inproceedings{bento2021timeshap,

author = {Bento, Jo\~{a}o and Saleiro, Pedro and Cruz, Andr\'{e} F. and Figueiredo, M\'{a}rio A.T. and Bizarro, Pedro},

title = {TimeSHAP: Explaining Recurrent Models through Sequence Perturbations},

year = {2021},

isbn = {9781450383325},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

url = {https://doi.org/10.1145/3447548.3467166},

doi = {10.1145/3447548.3467166},

booktitle = {Proceedings of the 27th ACM SIGKDD Conference on Knowledge Discovery & Data Mining},

pages = {2565–2573},

numpages = {9},

keywords = {SHAP, Shapley values, TimeSHAP, XAI, RNN, explainability},

location = {Virtual Event, Singapore},

series = {KDD '21}

}