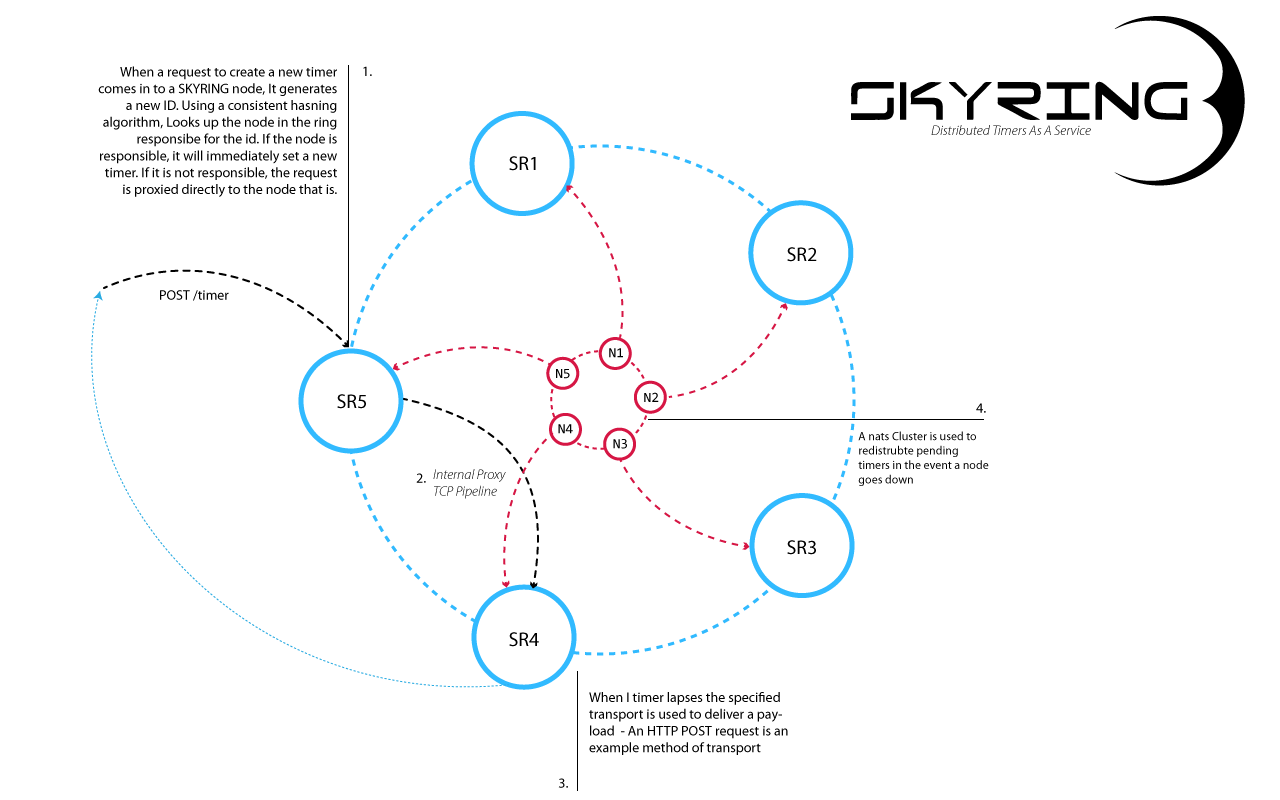

A distributed, reliable timer service that provides setTimeout functionality across a cluster.

Skyring servers are clustered into a hashring, using consistent hashing to partition timers to specific nodes in the ring. Skyring exposes a simple HTTP API that allows you to create and cancel timers. Timer execution comes in the form of an HTTP webhook (more transports to come).

- Pluggable transports (timer execution)

- Pluggable Storage (crash recovery + balancing)

- Auto Rebalancing

- Crash Recovery

A request can be issued to any active node in the cluster. If that node is not responsible for the timer in question, it will forward the request directly to the node that is, keeping network latency to a minimum. This makes Skyring well suited to high-performance, stateless, distributed environments.
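The routing decision described above can be sketched with a small consistent hash ring. This is an illustration only, not Skyring's actual implementation: the `HashRing` class, the `route` helper, the MD5-based hash, and the node addresses are all assumptions made for the example.

```javascript
const crypto = require('node:crypto');

// Map a string to an unsigned 32-bit position on the ring.
// MD5 is an assumption chosen for brevity; it is not used for security here.
function hashKey(key) {
  return crypto.createHash('md5').update(key).digest().readUInt32BE(0);
}

// Hypothetical ring: each node is placed at `replicas` virtual points.
class HashRing {
  constructor(nodes = [], replicas = 50) {
    this.replicas = replicas;
    this.points = []; // kept sorted by hash: [{ hash, node }]
    for (const node of nodes) this.add(node);
  }

  add(node) {
    for (let i = 0; i < this.replicas; i++) {
      this.points.push({ hash: hashKey(`${node}#${i}`), node });
    }
    this.points.sort((a, b) => a.hash - b.hash);
  }

  remove(node) {
    this.points = this.points.filter((p) => p.node !== node);
  }

  // Owner of `key`: the first point at or after the key's hash, wrapping around.
  lookup(key) {
    if (this.points.length === 0) return null;
    const h = hashKey(key);
    let lo = 0;
    let hi = this.points.length;
    while (lo < hi) {
      const mid = (lo + hi) >>> 1;
      if (this.points[mid].hash < h) lo = mid + 1;
      else hi = mid;
    }
    return this.points[lo % this.points.length].node;
  }
}

// Decide whether the receiving node handles a timer itself or forwards it.
function route(ring, self, timerId) {
  const owner = ring.lookup(timerId);
  return owner === self
    ? { action: 'handle', node: self }
    : { action: 'forward', node: owner };
}

// Example with made-up node addresses.
const exampleRing = new HashRing(['10.0.0.1:3455', '10.0.0.2:3455', '10.0.0.3:3455']);
console.log(route(exampleRing, '10.0.0.1:3455', 'timer-abc'));
```

Because every node computes the same ring, any node can work out a timer's owner locally and forward in a single hop. When a node is removed, only the keys it owned move to other nodes.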

The minimum recommended cluster size is 3 nodes, 2 of which are seed, or bootstrapping, nodes. A cluster of this size can average between 2K and 5K requests per second.

Request

Because Skyring manages timers with setTimeout, the maximum timeout value is 2^31 - 1, or 2147483647 milliseconds, which is approximately 24.8 days. Requesting a timeout greater than this value results in a 400 Bad Request response. Additionally, the timeout must be greater than 0.
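A client can check these bounds before sending a request. The sketch below is a hypothetical helper, not part of Skyring. `validateTimeout` and its return shape are invented for illustration, and rejecting non-numeric values with a 400 is an assumption.

```javascript
// Largest delay setTimeout accepts: 2^31 - 1 milliseconds.
const MAX_TIMEOUT_MS = 2 ** 31 - 1; // 2147483647

// Hypothetical client-side check mirroring the limits described above.
function validateTimeout(timeout) {
  if (typeof timeout !== 'number' || !Number.isFinite(timeout)) {
    // Assumption: a non-numeric timeout is rejected like any other bad value.
    return { ok: false, status: 400, reason: 'timeout must be a number' };
  }
  if (timeout <= 0) {
    return { ok: false, status: 400, reason: 'timeout must be greater than 0' };
  }
  if (timeout > MAX_TIMEOUT_MS) {
    return { ok: false, status: 400, reason: `timeout must not exceed ${MAX_TIMEOUT_MS}` };
  }
  return { ok: true };
}

// The upper bound expressed in days (roughly 24.8).
const maxDays = MAX_TIMEOUT_MS / (24 * 60 * 60 * 1000);

console.log(validateTimeout(6000).ok);
console.log(validateTimeout(MAX_TIMEOUT_MS + 1).status);
console.log(maxDays.toFixed(1));
```

Delays longer than the limit would need to be handled by the caller, for example by scheduling a shorter timer and re-creating it when it fires.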

    curl -i -XPOST http://localhost:8080/timer -d '{
      "timeout": 6000,
      "data" : "{\"foo\":\"bar\"}",
      "callback": {
        "transport": "http",
        "method": "post",
        "uri": "http://api.someservice.com/hook/timeout"
      }
    }'

Skyring is a monorepo managed by pnpm. Clone the repo and bootstrap the project:

    $ git clone https://github.com/esatterwhite/skyring.git project-skyring
    $ cd project-skyring
    $ pnpm install -r
    $ docker-compose -f compose/nats.yml up -d
    $ pnpm test

- Skyring: Primary server

- TCP Transport: TCP Timer transport

- ZeroMQ Transport: ZMQ Timer transport

- Ringpop: Gossip Clustering

- Scylladown: ScyllaDB-backed timer storage

MIT Licensed, Copyright (c) 2020 Eric Satterwhite