Dear folks, we are offering challenging opportunities located in Beijing for both professionals and students who are keen on AutoML/NAS. Come be a part of DataCanvas! Please send your CV to [email protected]. (Application deadline: TBD.)

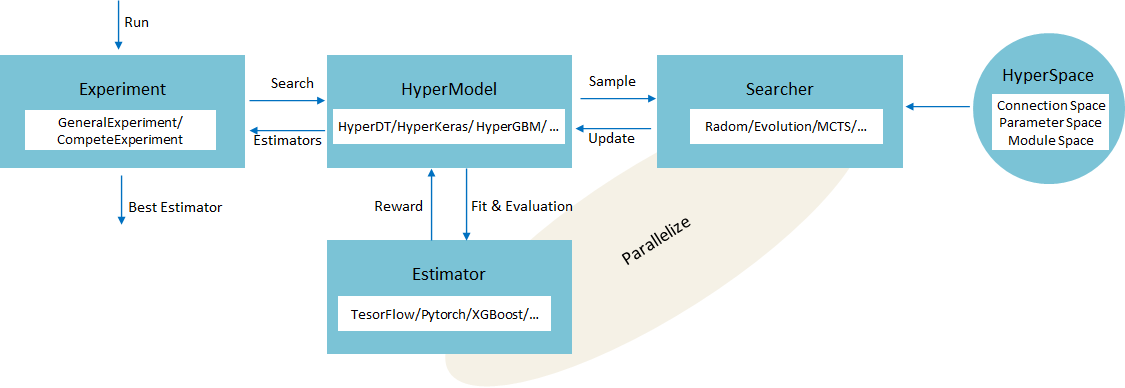

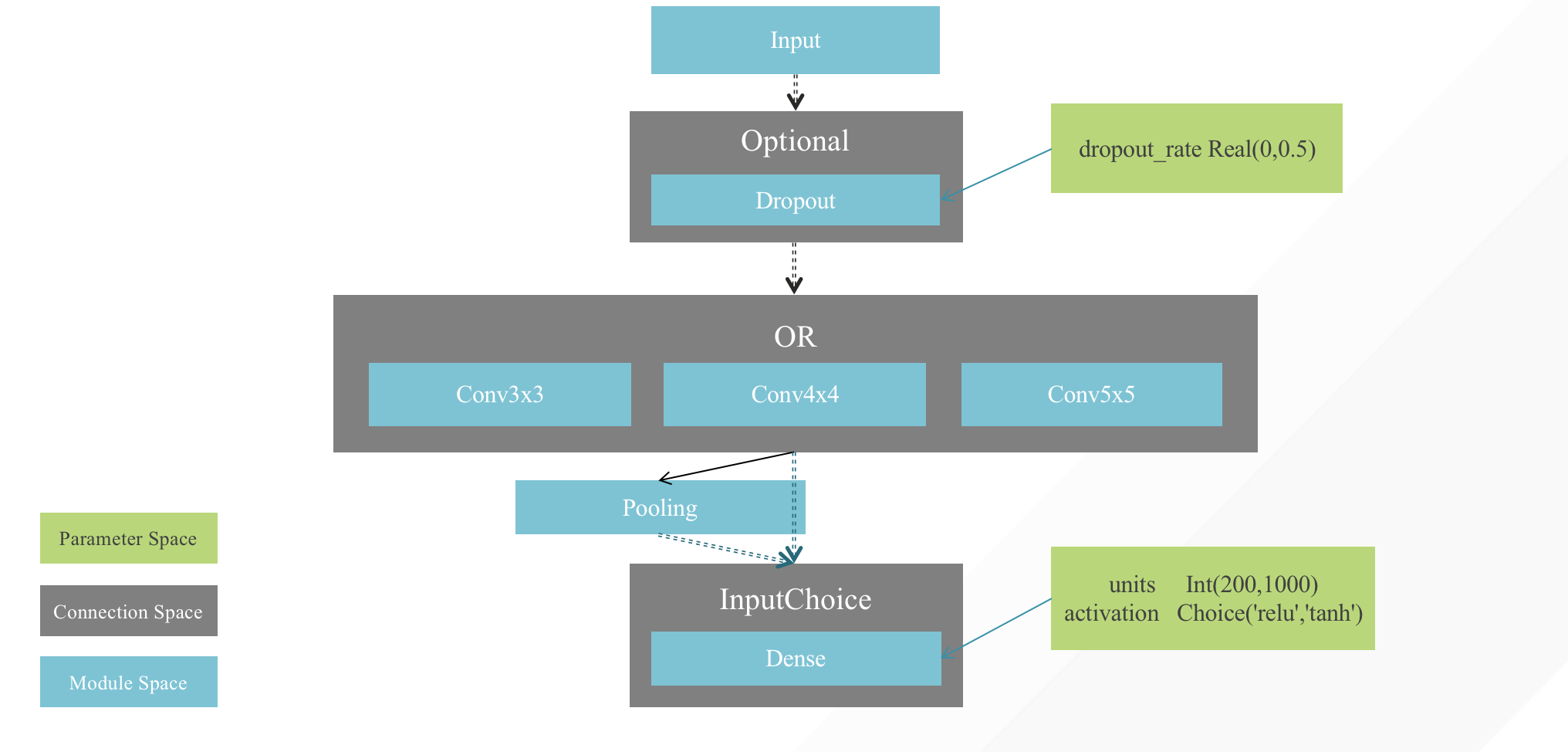

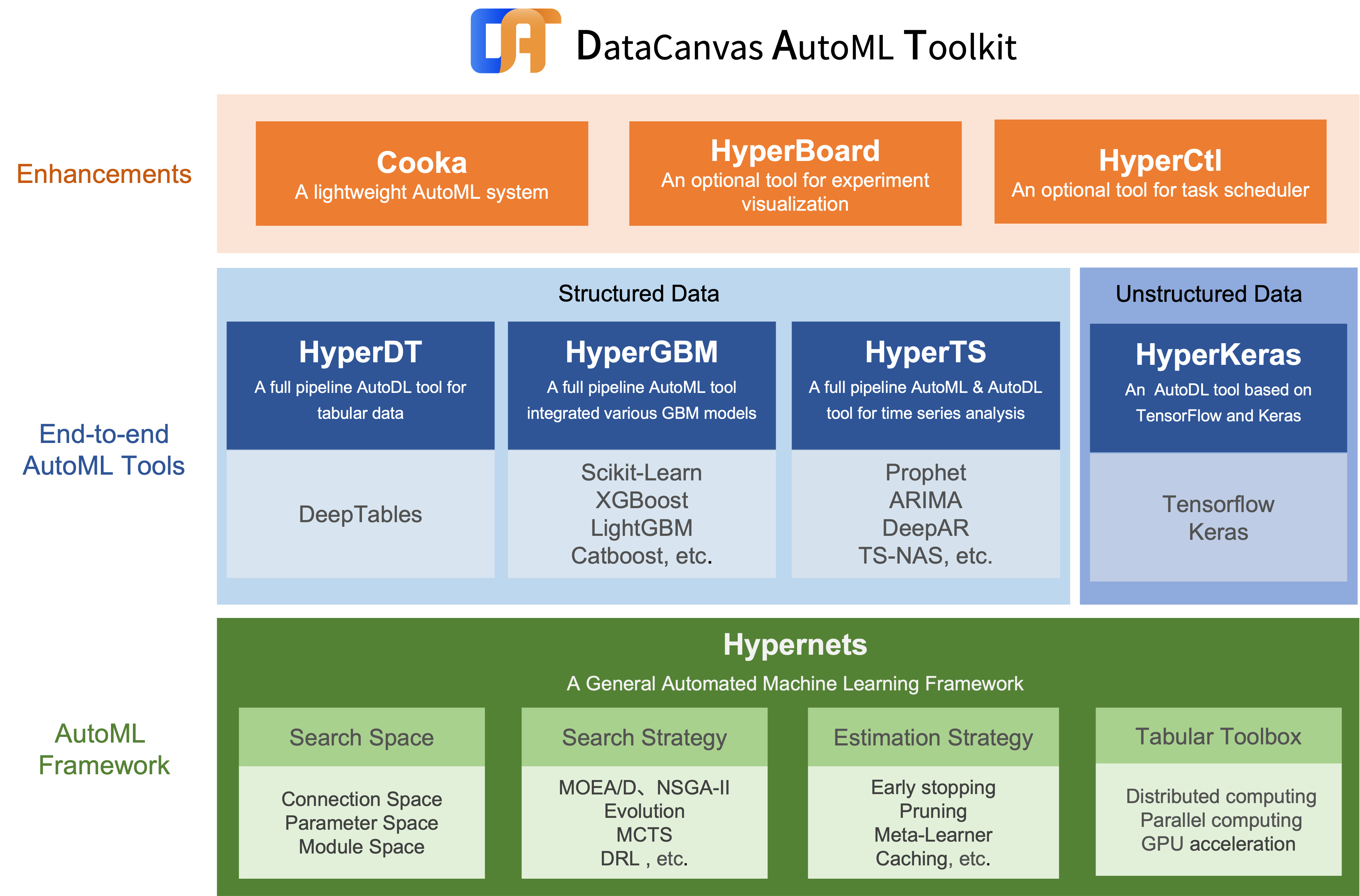

Hypernets is a general AutoML framework on which automatic optimization tools can be built for various machine learning frameworks and libraries, including deep learning frameworks such as TensorFlow, Keras, and PyTorch, and machine learning libraries such as scikit-learn, LightGBM, and XGBoost. It also adopts various state-of-the-art optimization algorithms, including but not limited to evolutionary algorithms and Monte Carlo tree search for single-objective optimization, and multi-objective optimization algorithms such as MOEA/D, NSGA-II, and R-NSGA-II. We introduced an abstract search space representation that takes into account the requirements of both hyperparameter optimization and neural architecture search (NAS), making Hypernets a general framework that can adapt to various automated machine learning needs. The tabular toolbox, a computational abstraction layer, has been implemented for several tabular data types, including pandas, Dask, and cuDF.
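To make the idea of an abstract search space concrete, here is a minimal, standard-library-only sketch of a search space sampled by random search. It is not the Hypernets API: the names `CategoricalDim`, `RealDim`, `IntDim`, `sample_space`, and `random_search` are hypothetical and exist only for illustration, and random search stands in for the more advanced searchers mentioned above.

```python
import random
from dataclasses import dataclass
from typing import Any, Callable, Dict, Optional, Sequence, Tuple


# NOTE: all classes and functions below are hypothetical illustrations,
# not part of the Hypernets API.
@dataclass(frozen=True)
class CategoricalDim:
    """A categorical dimension: pick one of the listed options."""
    options: Sequence[Any]

    def sample(self, rng: random.Random) -> Any:
        return rng.choice(list(self.options))


@dataclass(frozen=True)
class RealDim:
    """A continuous dimension sampled uniformly from [low, high]."""
    low: float
    high: float

    def sample(self, rng: random.Random) -> float:
        return rng.uniform(self.low, self.high)


@dataclass(frozen=True)
class IntDim:
    """An integer dimension sampled uniformly from low..high inclusive."""
    low: int
    high: int

    def sample(self, rng: random.Random) -> int:
        return rng.randint(self.low, self.high)


def sample_space(space: Dict[str, Any], rng: random.Random) -> Dict[str, Any]:
    """Draw one concrete configuration from the search space."""
    return {name: dim.sample(rng) for name, dim in space.items()}


def random_search(
    space: Dict[str, Any],
    reward_fn: Callable[[Dict[str, Any]], float],
    max_trials: int,
    seed: int = 0,
) -> Tuple[Optional[Dict[str, Any]], float]:
    """Evaluate max_trials random configurations and keep the best one."""
    rng = random.Random(seed)
    best_config, best_reward = None, float("-inf")
    for _ in range(max_trials):
        config = sample_space(space, rng)
        reward = reward_fn(config)
        if reward > best_reward:
            best_config, best_reward = config, reward
    return best_config, best_reward


# Toy usage: the reward is highest when depth is 3; a real reward
# function would train and score a model with the sampled configuration.
space = {
    "booster": CategoricalDim(["gbtree", "dart"]),
    "learning_rate": RealDim(0.001, 0.1),
    "depth": IntDim(1, 5),
}
best_config, best_reward = random_search(
    space, lambda c: -abs(c["depth"] - 3), max_trials=50, seed=1
)
print(best_config, best_reward)
```

The same dictionary-of-dimensions shape can describe either hyperparameters or architectural choices, which is the sense in which one representation can serve both hyperparameter optimization and NAS.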

- New feature: Multi-objective optimization support

- New feature: Performance and model complexity measurement metrics

- New feature: Distributed computing and GPU acceleration based on the computational abstraction layer
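Multi-objective optimization typically returns a set of trade-off solutions rather than a single best one, for example when balancing prediction error against model complexity. The sketch below shows only the Pareto dominance relation that non-dominated sorting in algorithms such as NSGA-II builds on; it is not an implementation of NSGA-II and not Hypernets code. The function names and the trial values are made up for illustration, and both objectives are assumed to be minimized.

```python
from typing import List, Sequence, Tuple


# NOTE: hypothetical helper names; both objectives are minimized.
def dominates(a: Sequence[float], b: Sequence[float]) -> bool:
    """True if a is no worse than b on every objective and strictly
    better on at least one."""
    no_worse = all(x <= y for x, y in zip(a, b))
    strictly_better = any(x < y for x, y in zip(a, b))
    return no_worse and strictly_better


def pareto_front(points: List[Tuple[float, ...]]) -> List[Tuple[float, ...]]:
    """Return the points that no other point dominates, in input order."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


# Illustrative (validation error, model size) pairs, one per trial.
trials = [(0.10, 120), (0.08, 300), (0.12, 80), (0.11, 150), (0.08, 350)]
print(pareto_front(trials))  # -> [(0.1, 120), (0.08, 300), (0.12, 80)]
```

Here `(0.11, 150)` is dropped because `(0.10, 120)` is better on both objectives, and `(0.08, 350)` is dropped because `(0.08, 300)` matches its error with a smaller model.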

Install Hypernets with conda from the channel conda-forge:

    conda install -c conda-forge hypernets

Install Hypernets with pip, using one of the following options:

- Typical installation:

      pip install hypernets

- To run Hypernets in JupyterLab or Jupyter Notebook, install with:

      pip install hypernets[notebook]

- To run Hypernets on a distributed Dask cluster, install with:

      pip install hypernets[dask]

- To support datasets containing simplified Chinese in feature generation, either:

  - Install the `jieba` package before running Hypernets, or
  - Install Hypernets with:

        pip install hypernets[zhcn]

- Install all of the above with one command:

      pip install hypernets[all]

To verify your installation:

    python -m hypernets.examples.smoke_testing

Hypernets and related projects:

- Hypernets: A general automated machine learning (AutoML) framework.

- HyperGBM: A full-pipeline AutoML tool that integrates various GBM models.

- HyperDT/DeepTables: An AutoDL tool for tabular data.

- HyperTS: A full-pipeline AutoML and AutoDL tool for time series datasets.

- HyperKeras: An AutoDL tool for neural architecture search and hyperparameter optimization on TensorFlow and Keras.

- HyperBoard: A visualization tool for Hypernets.

- Cooka: A lightweight interactive AutoML system.

If you use Hypernets in your research, please cite us as follows:

Jian Yang, Xuefeng Li, Haifeng Wu. Hypernets: A General Automated Machine Learning Framework. https://github.com/DataCanvasIO/Hypernets. 2020. Version 0.2.x.

BibTeX:

    @misc{hypernets,
      author={Jian Yang and Xuefeng Li and Haifeng Wu},
      title={{Hypernets}: {A General Automated Machine Learning Framework}},
      howpublished={https://github.com/DataCanvasIO/Hypernets},
      note={Version 0.2.x},
      year={2020}
    }

Hypernets is an open source project created by DataCanvas.