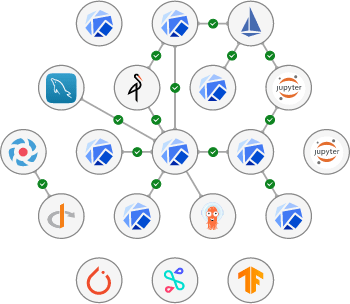

This repository hosts the Kubernetes Python Operator for Dex Auth (see Charmhub).

Upstream documentation can be found at https://github.com/dexidp/dex

The Dex Auth Operator can be deployed using the Juju command line as follows:

    juju deploy dex-auth

Canonical Charmed Kubeflow is a state-of-the-art, fully supported MLOps platform that helps data scientists collaborate on AI innovation on any cloud, from concept to production. It is offered by Canonical, the publishers of Ubuntu.

Charmed Kubeflow is free to use: the solution can be deployed in any environment without constraints, paywalls or restricted features. Data labs and MLOps teams only need to train their data scientists and engineers once to work consistently and efficiently on any cloud or on-premises.

Charmed Kubeflow offers a centralised, browser-based MLOps platform that runs on any conformant Kubernetes. It enhances productivity, improves governance and reduces the risks associated with shadow IT.

Learn more about deploying and using Charmed Kubeflow at https://charmed-kubeflow.io.

- Centralised, browser-based data science workspaces: familiar experience

- Multi user: one environment for your whole data science team

- NVIDIA GPU support: accelerate deep learning model training

- Apache Spark integration: empower big data driven model training

- Ideation to production: automate model training & deployment

- AutoML: hyperparameter tuning, architecture search

- Composable: edge deployment configurations available

- LDAP Authentication

- Jupyter Notebooks

- Work with Python and R

- Support for TensorFlow, PyTorch, MXNet, XGBoost

- TFServing, Seldon-Core

- Katib (AutoML)

- Apache Spark

- Argo Workflows

- Kubeflow Pipelines

- Maintenance: Charmed Kubeflow offers up to two years of maintenance on select releases

- Optional 24/7 support available, contact us here for more information

- Optional dedicated fully managed service available, contact us here for more information or learn more about Canonical’s Managed Apps service.

- Portability: Charmed Kubeflow can be deployed on any conformant Kubernetes, on any cloud or on-premises

Please see the official docs site for complete documentation of the Charmed Kubeflow distribution.

If you find a bug in our operator or want to request a specific feature, please file a bug here: https://github.com/canonical/dex-auth-operator/issues

Charmed Kubeflow is free software, distributed under the Apache Software License, version 2.0.

Canonical welcomes contributions to Charmed Kubeflow. Please check out our contributor agreement if you're interested in contributing to the distribution.

Security issues in Charmed Kubeflow can be reported through Launchpad. Please do not report security issues through GitHub issues.