bitnami / containers

Bitnami container images

Home Page: https://bitnami.com

License: Other

docker.io/bitnami/phpbb:3.3.8

Hi,

I'm running a phpBB instance (bitnami/phpbb:3.3.8) with a MariaDB database (bitnami/mariadb:10.7.4).

I recently updated my Docker Compose file to upgrade from 3.3.7 to 3.3.8.

Now, when I try to access the phpBB instance, the compose logs give me this error:

phpbb | [Tue Jun 28 09:24:59.475985 2022] [php:error] [pid 220] [client 127.0.0.1:59308] PHP Fatal error: Uncaught Twig\\Error\\LoaderError: Unable to find template "cron.html" (looked into: /bitnami/phpbb/styles/prosilver/template, /bitnami/phpbb/styles/prosilver/theme, /bitnami/phpbb/styles/all/template). in /opt/bitnami/phpbb/vendor/twig/twig/src/Loader/FilesystemLoader.php:250\nStack trace:\n#0 /opt/bitnami/phpbb/phpbb/template/twig/loader.php(135): Twig\\Loader\\FilesystemLoader->findTemplate()\n#1 /opt/bitnami/phpbb/vendor/twig/twig/src/Loader/FilesystemLoader.php(150): phpbb\\template\\twig\\loader->findTemplate()\n#2 /opt/bitnami/phpbb/vendor/twig/twig/src/Environment.php(299): Twig\\Loader\\FilesystemLoader->getCacheKey()\n#3 /opt/bitnami/phpbb/vendor/twig/twig/src/Environment.php(381): Twig\\Environment->getTemplateClass()\n#4 /opt/bitnami/phpbb/phpbb/template/twig/environment.php(277): Twig\\Environment->loadTemplate()\n#5 /opt/bitnami/phpbb/vendor/twig/twig/src/Environment.php(359): phpbb\\template\\twig\\environment->loadTemplate()\n#6 /opt/bitnami/phpbb/vendor/twig/twig/src/Environment.php(318): Twig\\Environment->load()\n#7 /opt/bitnami/phpbb/phpbb/template/twig/environment.php(224): Twig\\Environment->render()\n#8 /opt/bitnami/phpbb/phpbb/template/twig/environment.php(186): phpbb\\template\\twig\\environment->display_with_assets()\n#9 /opt/bitnami/phpbb/phpbb/template/twig/twig.php(335): phpbb\\template\\twig\\environment->render()\n#10 /opt/bitnami/phpbb/phpbb/cron/task/wrapper.php(129): phpbb\\template\\twig\\twig->assign_display()\n#11 /opt/bitnami/phpbb/phpbb/controller/helper.php(366): phpbb\\cron\\task\\wrapper->get_html_tag()\n#12 /opt/bitnami/phpbb/phpbb/controller/helper.php(315): phpbb\\controller\\helper->set_cron_task()\n#13 /opt/bitnami/phpbb/includes/functions.php(4276): phpbb\\controller\\helper->display_footer()\n#14 /opt/bitnami/phpbb/index.php(253): page_footer()\n#15 {main}\n thrown in 
/opt/bitnami/phpbb/vendor/twig/twig/src/Loader/FilesystemLoader.php on line 250

phpbb2 | 127.0.0.1 - - [28/Jun/2022:09:24:59 +0200] "GET / HTTP/1.1" 500 -

I can't find any cron.html file.

Regards.

Upgrade from 3.3.7 to 3.3.8 correctly.

A blank page with a 404 error.

Reverting to version 3.3.7 now shows version 3.3.8 in the ACP and gives me back access to the board.

I'm wondering if this is a problem with the prosilver theme?

bitnami-docker-spark/3.3/debian-11/Dockerfile

I am looking to upgrade to the Spark image with the latest version of Spark, i.e. 3.3.0. I found the Dockerfile, which has the latest versions of Spark, Java, gosu, etc., but the Python version is downgraded to 3.8.13. Is it possible to provide a new Dockerfile with the latest version of Python?

Requesting a Dockerfile with the latest version of Python, i.e. 3.9.*

Python with older version 3.8

No response

I am relatively new to Airflow, Docker, and Bitnami but I am having trouble getting pyodbc to be installed on the bitnami airflow containers. I want to be able to use Airflow on Azure for work projects so that's how I found out about the bitnami-docker-airflow project.

I have followed the directions from this page: https://github.com/bitnami/bitnami-docker-airflow/blob/master/README.md

I started with the curl -sSL https://raw.githubusercontent.com/bitnami/bitnami-docker-airflow/master/docker-compose.yml > docker-compose.yml and got the docker-compose.yaml file in my documents folder. I then went in to make some changes to mount a local folder with DAG files I wanted to use and mounted another folder that had a requirements.txt file in it.

The lines below in the docker-compose.yml are the ones I added for mounting. ./dags and ./packages are the folders that the DAG .py and requirements.txt files are in. My docker-compose file will be attached.

The requirements.txt has the text pyodbc===4.0.30 as the only content in the file.

I run docker-compose up to get the containers up and running.

The output shows me that the pyodbc install is failing, but I can't figure out exactly what the source of the error is or what could fix it. I will attach the copied output that shows the error; I'm having a hard time interpreting it. I have tried docker-compose without mounting the requirements file, and Airflow starts up and I can see my DAGs at localhost:8080 as I would expect. I want to be able to use pyodbc in my DAGs, though.

Please let me know if I can provide more context

bitnami/spark:3

Create custom spark container image with additional JAR files

Attached docker file has hadoop-azure-3.3.1.jar, azure-storage-8.6.6.jar, and dependencies

Dockerfile.spark.txt

Run produced custom docker image using command similar to below

docker run --rm -it <custom_image_from_above_step>

pyspark --packages org.apache.hadoop:hadoop-azure:3.3.1,com.microsoft.azure:azure-storage:8.6.6

The packages must be loaded.

:hadoop-azure:3.3.1,com.microsoft.azure:azure-storage:8.6.6

Python 3.8.13 (default, Apr 11 2022, 12:27:15)

[GCC 8.3.0] on linux

Type "help", "copyright", "credits" or "license" for more information.

:: loading settings :: url = jar:file:/opt/bitnami/spark/jars/ivy-2.5.0.jar!/org/apache/ivy/core/settings/ivysettings.xml

Ivy Default Cache set to: /opt/bitnami/spark/.ivy2/cache

The jars for the packages stored in: /opt/bitnami/spark/.ivy2/jars

org.apache.hadoop#hadoop-azure added as a dependency

com.microsoft.azure#azure-storage added as a dependency

:: resolving dependencies :: org.apache.spark#spark-submit-parent-0aaec6b9-5d40-4793-b19d-35af6d4d7168;1.0

confs: [default]

Exception in thread "main" java.io.FileNotFoundException: /opt/bitnami/spark/.ivy2/cache/resolved-org.apache.spark-spark-submit-parent-0aaec6b9-5d40-4793-b19d-35af6d4d7168-1.0.xml (No such file or directory)

at java.io.FileOutputStream.open0(Native Method)

at java.io.FileOutputStream.open(FileOutputStream.java:270)

at java.io.FileOutputStream.<init>(FileOutputStream.java:213)

at java.io.FileOutputStream.<init>(FileOutputStream.java:162)

at org.apache.ivy.plugins.parser.xml.XmlModuleDescriptorWriter.write(XmlModuleDescriptorWriter.java:71)

at org.apache.ivy.plugins.parser.xml.XmlModuleDescriptorWriter.write(XmlModuleDescriptorWriter.java:63)

at org.apache.ivy.core.module.descriptor.DefaultModuleDescriptor.toIvyFile(DefaultModuleDescriptor.java:553)

at org.apache.ivy.core.cache.DefaultResolutionCacheManager.saveResolvedModuleDescriptor(DefaultResolutionCacheManager.java:183)

at org.apache.ivy.core.resolve.ResolveEngine.resolve(ResolveEngine.java:259)

at org.apache.ivy.Ivy.resolve(Ivy.java:522)

at org.apache.spark.deploy.SparkSubmitUtils$.resolveMavenCoordinates(SparkSubmit.scala:1445)

at org.apache.spark.util.DependencyUtils$.resolveMavenDependencies(DependencyUtils.scala:185)

at org.apache.spark.deploy.SparkSubmit.prepareSubmitEnvironment(SparkSubmit.scala:308)

at org.apache.spark.deploy.SparkSubmit.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:898)

at org.apache.spark.deploy.SparkSubmit.doRunMain$1(SparkSubmit.scala:180)

at org.apache.spark.deploy.SparkSubmit.submit(SparkSubmit.scala:203)

at org.apache.spark.deploy.SparkSubmit.doSubmit(SparkSubmit.scala:90)

at org.apache.spark.deploy.SparkSubmit$$anon$2.doSubmit(SparkSubmit.scala:1043)

at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:1052)

at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)

Traceback (most recent call last):

File "/opt/bitnami/spark/python/pyspark/shell.py", line 35, in <module>

SparkContext._ensure_initialized() # type: ignore

File "/opt/bitnami/spark/python/pyspark/context.py", line 339, in _ensure_initialized

SparkContext._gateway = gateway or launch_gateway(conf)

File "/opt/bitnami/spark/python/pyspark/java_gateway.py", line 108, in launch_gateway

raise RuntimeError("Java gateway process exited before sending its port number")

RuntimeError: Java gateway process exited before sending its port number

FROM bitnami/spark:3

USER root

# Download hadoop-azure, azure-storage, and dependencies (See above)

RUN curl https://repo1.maven.org/maven2/org/apache/hadoop/hadoop-azure/3.3.1/hadoop-azure-3.3.1.jar --output /opt/bitnami/spark/jars/hadoop-azure-3.3.1.jar

RUN curl https://repo1.maven.org/maven2/com/microsoft/azure/azure-storage/8.6.6/azure-storage-8.6.6.jar --output /opt/bitnami/spark/jars/azure-storage-8.6.6.jar

RUN curl https://repo1.maven.org/maven2/org/apache/httpcomponents/httpclient/4.5.13/httpclient-4.5.13.jar --output /opt/bitnami/spark/jars/httpclient-4.5.13.jar

RUN curl https://repo1.maven.org/maven2/org/apache/hadoop/thirdparty/hadoop-shaded-guava/1.1.1/hadoop-shaded-guava-1.1.1.jar --output /opt/bitnami/spark/jars/hadoop-shaded-guava-1.1.1.jar

RUN curl https://repo1.maven.org/maven2/org/eclipse/jetty/jetty-util-ajax/9.4.40.v20210413/jetty-util-ajax-9.4.40.v20210413.jar --output /opt/bitnami/spark/jars/jetty-util-ajax-9.4.40.v20210413.jar

RUN curl https://repo1.maven.org/maven2/org/codehaus/jackson/jackson-mapper-asl/1.9.13/jackson-mapper-asl-1.9.13.jar --output /opt/bitnami/spark/jars/jackson-mapper-asl-1.9.13.jar

RUN curl https://repo1.maven.org/maven2/org/codehaus/jackson/jackson-core-asl/1.9.13/jackson-core-asl-1.9.13.jar --output /opt/bitnami/spark/jars/jackson-core-asl-1.9.13.jar

RUN curl https://repo1.maven.org/maven2/org/wildfly/openssl/wildfly-openssl/1.0.7.Final/wildfly-openssl-1.0.7.Final.jar --output /opt/bitnami/spark/jars/wildfly-openssl-1.0.7.Final.jar

RUN curl https://repo1.maven.org/maven2/org/apache/httpcomponents/httpcore/4.4.13/httpcore-4.4.13.jar --output /opt/bitnami/spark/jars/httpcore-4.4.13.jar

RUN curl https://repo1.maven.org/maven2/commons-logging/commons-logging/1.1.3/commons-logging-1.1.3.jar --output /opt/bitnami/spark/jars/commons-logging-1.1.3.jar

RUN curl https://repo1.maven.org/maven2/commons-codec/commons-codec/1.11/commons-codec-1.11.jar --output /opt/bitnami/spark/jars/commons-codec-1.11.jar

RUN curl https://repo1.maven.org/maven2/org/eclipse/jetty/jetty-util/9.4.40.v20210413/jetty-util-9.4.40.v20210413.jar --output /opt/bitnami/spark/jars/jetty-util-9.4.40.v20210413.jar

RUN curl https://repo1.maven.org/maven2/com/fasterxml/jackson/core/jackson-core/2.9.4/jackson-core-2.9.4.jar --output /opt/bitnami/spark/jars/jackson-core-2.9.4.jar

RUN curl https://repo1.maven.org/maven2/org/slf4j/slf4j-api/1.7.12/slf4j-api-1.7.12.jar --output /opt/bitnami/spark/jars/slf4j-api-1.7.12.jar

RUN curl https://repo1.maven.org/maven2/org/apache/commons/commons-lang3/3.4/commons-lang3-3.4.jar --output /opt/bitnami/spark/jars/commons-lang3-3.4.jar

RUN curl https://repo1.maven.org/maven2/com/microsoft/azure/azure-keyvault-core/1.2.4/azure-keyvault-core-1.2.4.jar --output /opt/bitnami/spark/jars/azure-keyvault-core-1.2.4.jar

RUN curl https://repo1.maven.org/maven2/com/google/guava/guava/24.1.1-jre/guava-24.1.1-jre.jar --output /opt/bitnami/spark/jars/guava-24.1.1-jre.jar

RUN curl https://repo1.maven.org/maven2/com/google/code/findbugs/jsr305/1.3.9/jsr305-1.3.9.jar --output /opt/bitnami/spark/jars/jsr305-1.3.9.jar

RUN curl https://repo1.maven.org/maven2/org/checkerframework/checker-compat-qual/2.0.0/checker-compat-qual-2.0.0.jar --output /opt/bitnami/spark/jars/checker-compat-qual-2.0.0.jar

RUN curl https://repo1.maven.org/maven2/com/google/errorprone/error_prone_annotations/2.1.3/error_prone_annotations-2.1.3.jar --output /opt/bitnami/spark/jars/error_prone_annotations-2.1.3.jar

RUN curl https://repo1.maven.org/maven2/com/google/j2objc/j2objc-annotations/1.1/j2objc-annotations-1.1.jar --output /opt/bitnami/spark/jars/j2objc-annotations-1.1.jar

RUN curl https://repo1.maven.org/maven2/org/codehaus/mojo/animal-sniffer-annotations/1.14/animal-sniffer-annotations-1.14.jar --output /opt/bitnami/spark/jars/animal-sniffer-annotations-1.14.jar

ENV BITNAMI_APP_NAME=spark BITNAMI_IMAGE_VERSION=3.0.0-debian-10-r48 JAVA_HOME=/opt/bitnami/java LD_LIBRARY_PATH=/opt/bitnami/python/lib/:/opt/bitnami/spark/venv/lib/python3.6/site-packages/numpy.libs/: LIBNSS_WRAPPER_PATH=/opt/bitnami/common/lib/libnss_wrapper.so NSS_WRAPPER_GROUP=/opt/bitnami/spark/tmp/nss_group NSS_WRAPPER_PASSWD=/opt/bitnami/spark/tmp/nss_passwd SPARK_HOME=/opt/bitnami/spark

WORKDIR /opt/bitnami/spark

USER 1001

ENTRYPOINT ["/opt/bitnami/scripts/spark/entrypoint.sh"]

CMD ["/opt/bitnami/scripts/spark/run.sh"]

bitnami/wordpress-nginx:6

wp-content/plugins and wp-content/themes folders

docker compose up

cp: cannot create regular file '/bitnami/wordpress/wp-content/index.php': Permission denied

cp: cannot create directory '/bitnami/wordpress/wp-content/languages': Permission denied

cp: cannot create directory '/bitnami/wordpress/wp-content/upgrade': Permission denied

cp: cannot create directory '/bitnami/wordpress/wp-content/uploads': Permission denied

Expected behavior is for the installation to work and complete successfully

cp: cannot create regular file '/bitnami/wordpress/wp-content/index.php': Permission denied

cp: cannot create directory '/bitnami/wordpress/wp-content/languages': Permission denied

cp: cannot create directory '/bitnami/wordpress/wp-content/upgrade': Permission denied

cp: cannot create directory '/bitnami/wordpress/wp-content/uploads': Permission denied

The mapped volumes are owned by root:

drwxr-xr-x 4 root root 4096 Jun 27 11:02 wp-content
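The listing above is consistent with the cp errors: with mode drwxr-xr-x and owner root:root, only root has the write bit. Below is a minimal sketch of that permission check. It assumes, for illustration only, that the container process runs as UID 1001 with GID 0; the function name can_write is hypothetical and the check ignores ACLs and capabilities.

```python
import stat


def can_write(mode: int, owner_uid: int, owner_gid: int, uid: int, gids: set) -> bool:
    """Approximate the classic Unix mode-bit check for write access."""
    if uid == 0:
        return True  # root bypasses the mode bits
    if uid == owner_uid:
        return bool(mode & stat.S_IWUSR)  # owner class applies
    if owner_gid in gids:
        return bool(mode & stat.S_IWGRP)  # group class applies
    return bool(mode & stat.S_IWOTH)      # everyone else


# drwxr-xr-x root root, as in the listing above
WP_CONTENT_MODE = stat.S_IFDIR | 0o755

# Assumed identity of the container process (UID 1001, GID 0): an illustration only.
print(can_write(WP_CONTENT_MODE, owner_uid=0, owner_gid=0, uid=1001, gids={0}))  # prints: False
```

Under these assumptions, the directory would become writable either by changing its owner to the container user or by adding the group write bit.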

There is something wrong with this image. I'm still investigating the performance issue.

First, let's run a simple benchmark with the ruby:2.7 image:

root@localhost:~# docker run -it --rm ruby:2.7 ruby -ve 't = Time.now; i=0;while i<100_000_000;i+=1;end; puts "#{ Time.now - t } sec"'

ruby 2.7.5p203 (2021-11-24 revision f69aeb8314) [x86_64-linux]

2.271846678 sec

Then compare it with the bitnami/ruby:2.7-debian-10 image:

root@localhost:~# docker run -it --rm bitnami/ruby:2.7-debian-10 ruby -ve 't = Time.now; i=0;while i<100_000_000;i+=1;end; puts "#{ Time.now - t } sec"'

ruby 2.7.2p137 (2020-10-01 revision 5445e04352) [x86_64-linux]

15.051291622 sec

I think this could be related to the seccomp profile or a misconfiguration of the base Debian image. Please see these for more information:

For security reasons, the image should be able to run with the Docker/Kubernetes read-only root filesystem option, with only the directories that need to be written to mounted as volumes.

At the moment this is not possible, which leaves the Keycloak container open to attack vectors.

Steps to reproduce the issue:

docker

append --read-only --volume <volume-name>:/mount/path to the start command

docker-compose

add read_only: true into the compose file

kubernetes

Add to statefulset container specs

securityContext:
  readOnlyRootFilesystem: true

The following command from README.md:

docker run --detach --rm --name test-openldap --network my-network --env LDAP_ADMIN_USERNAME=admin --env LDAP_ADMIN_PASSWORD=adminpassword --env LDAP_USERS=customuser --env LDAP_PASSWORDS=custompassword bitnami/openldap:latest

triggers this error:

# docker logs -f test-openldap

13:48:24.30 INFO ==> ** Starting LDAP setup **

13:48:24.36 INFO ==> Validating settings in LDAP_* env vars

13:48:24.37 INFO ==> Initializing OpenLDAP...

13:48:24.40 INFO ==> Creating LDAP online configuration

13:48:24.42 INFO ==> Starting OpenLDAP server in background

13:48:43.59 INFO ==> Configure LDAP credentials for admin user

13:48:45.05 INFO ==> Adding LDAP extra schemas

13:48:45.74 INFO ==> Creating LDAP default tree

13:48:46.70 INFO ==> ** LDAP setup finished! **

13:48:46.74 INFO ==> ** Starting slapd **

5ee2363e @(#) $OpenLDAP: slapd 2.4.50 (May 4 2020 16:17:50) $

@5fb3c780904c:/bitnami/blacksmith-sandox/openldap-2.4.50/servers/slapd

5ee23655 hdb_db_open: database "dc=example,dc=org": database already in use.

5ee23655 backend_startup_one (type=hdb, suffix="dc=example,dc=org"): bi_db_open failed! (-1)

5ee23655 slapd stopped.

Description

External elasticsearch plugins install successfully, but are misnamed when copied to plugin.mandatory causing each node to fail to start with an exception.

Steps to reproduce the issue:

docker run --name test -e ELASTICSEARCH_PLUGINS=http://es-learn-to-rank.labs.o19s.com/ltr-plugin-v1.5.1-es7.9.2.zip -d bitnami/elasticsearch:7.9.2-debian-10-r0

Describe the results you received:

Fails to start with exception:

...

uncaught exception in thread [main]

java.lang.IllegalStateException: missing mandatory plugins [ltr-plugin-v1.5.1-es7.9.2], found plugins [ltr, repository-s3]

at org.elasticsearch.plugins.PluginsService.<init>(PluginsService.java:186)

Describe the results you expected:

Bitnami populates the plugin.mandatory value from ELASTICSEARCH_PLUGINS which prevents a search node from starting if all plugins are not successfully installed. This works well with default (included) plugins which Elasticsearch will install by "plugin name". However when using an external plugin resource (zipfile over http or local filesystem), even though the plugin is installed correctly, the plugin name used to populate plugin.mandatory is not correctly derived from the plugin resource.

In the example to reproduce (above), the plugin (named ltr) is correctly installed, however, the value copied to plugin.mandatory is ltr-plugin-v1.5.1-es7.9.2 which fails the startup check, since it is not also ltr.

Additional information you deem important (e.g. issue happens only occasionally):

There is an attempt to derive the "plugin name" from the downloaded filename here: https://github.com/bitnami/bitnami-docker-elasticsearch/blob/master/7/debian-10/rootfs/opt/bitnami/scripts/libelasticsearch.sh#L500

However there is no such plugin file-naming convention required by Elastic. Instead, there is a plugin-descriptor.properties inside every external elasticsearch plugin archive containing a required property name which should be used instead.

Version

docker version:

Client: Docker Engine - Community

Version: 19.03.5

API version: 1.40

Go version: go1.12.12

Git commit: 633a0ea

Built: Wed Nov 13 07:22:34 2019

OS/Arch: darwin/amd64

Experimental: false

Server: Docker Engine - Community

Engine:

Version: 19.03.5

API version: 1.40 (minimum version 1.12)

Go version: go1.12.12

Git commit: 633a0ea

Built: Wed Nov 13 07:29:19 2019

OS/Arch: linux/amd64

Experimental: true

containerd:

Version: v1.2.10

GitCommit: b34a5c8af56e510852c35414db4c1f4fa6172339

runc:

Version: 1.0.0-rc8+dev

GitCommit: 3e425f80a8c931f88e6d94a8c831b9d5aa481657

docker-init:

Version: 0.18.0

GitCommit: fec3683

docker info:

Client:

Debug Mode: false

Server:

Containers: 19

Running: 0

Paused: 0

Stopped: 19

Images: 32

Server Version: 19.03.5

Storage Driver: overlay2

Backing Filesystem: extfs

Supports d_type: true

Native Overlay Diff: true

Logging Driver: json-file

Cgroup Driver: cgroupfs

Plugins:

Volume: local

Network: bridge host ipvlan macvlan null overlay

Log: awslogs fluentd gcplogs gelf journald json-file local logentries splunk syslog

Swarm: inactive

Runtimes: runc

Default Runtime: runc

Init Binary: docker-init

containerd version: b34a5c8af56e510852c35414db4c1f4fa6172339

runc version: 3e425f80a8c931f88e6d94a8c831b9d5aa481657

init version: fec3683

Security Options:

seccomp

Profile: default

Kernel Version: 4.19.76-linuxkit

Operating System: Docker Desktop

OSType: linux

Architecture: x86_64

CPUs: 4

Total Memory: 2.924GiB

Name: docker-desktop

ID: CWGO:3SKD:BICU:JSQL:7RFU:MVGE:UPLF:EQVJ:VJSP:GCBO:NTHO:FQQY

Docker Root Dir: /var/lib/docker

Debug Mode: true

File Descriptors: 35

Goroutines: 52

System Time: 2020-10-23T17:13:45.597921481Z

EventsListeners: 3

HTTP Proxy: gateway.docker.internal:3128

HTTPS Proxy: gateway.docker.internal:3129

Registry: https://index.docker.io/v1/

Labels:

Experimental: true

Insecure Registries:

127.0.0.0/8

Live Restore Enabled: false

Product License: Community Engine

docker-compose version (if applicable):(paste your output here)

Additional environment details (AWS, VirtualBox, Docker for MAC, physical, etc.):

bitnami/mongodb:latest

Thanks to Studio 3T, I realized why my connection to MongoDB was returning an error.

|_/ Connection error (MongoSocketWriteException): Exception sending message

|____/ SSL error: No subject alternative names present

|_______/ Certificate error: No subject alternative names present

Update the information. It's misleading for MongoDB 4.1+ users.

No response

bitnami/wordpress:6

docker-compose.yaml

WORDPRESS_ENABLE_HTACCESS_PERSISTENCE=yes

WORDPRESS_HTACCESS_OVERRIDE_NONE=no or yes

./wordpress_data:/bitnami/wordpress

docker-compose up -d

./wordpress_data/.htaccess or ./wordpress_data/wordpress-htaccess.conf

docker-compose down && docker-compose up -d

.htaccess should remain the same as edited when restarting the container.

.htaccess gets overwritten to a blank file.

I've also tried mounting a local .htaccess file: ./public:.htaccess:/opt/bitnami/wordpress/.htaccess, but the container starts and hangs because it tries and fails to remove the /opt/bitnami/wordpress/.htaccess with the error that it is locked or busy, or for some reason cp: 'wp-config.php' and '/bitnami/wordpress/wp-config.php' are the same file

The docs don't seem to be clear as to what to mount in order for the .htaccess to be persisted, but the mounting of /bitnami/wordpress doesn't appear to work.

bitnami/kafka:3.2

docker-compose.yaml

version: '3'
services:
  zookeeper:
    container_name: zookeeper
    image: docker.io/bitnami/zookeeper:3.8
    ports:
      - "2181:2181"
    environment:
      - ZOO_ENABLE_AUTH=yes
      - ZOO_SERVER_USERS=user
      - ZOO_SERVER_PASSWORDS=password
      - ZOO_CLIENT_USER=user
      - ZOO_CLIENT_PASSWORD=password
  kafka:
    container_name: kafka
    image: docker.io/bitnami/kafka:3.2
    ports:
      - "9093:9093"
    environment:
      - KAFKA_CFG_ZOOKEEPER_CONNECT=zookeeper:2181
      - KAFKA_CFG_AUTO_CREATE_TOPICS_ENABLE=false
      - KAFKA_ZOOKEEPER_PROTOCOL=SASL
      - KAFKA_ZOOKEEPER_USER=user
      - KAFKA_ZOOKEEPER_PASSWORD=password
      - KAFKA_CLIENT_USERS=user
      - KAFKA_CLIENT_PASSWORDS=password
      - KAFKA_INTER_BROKER_USER=user
      - KAFKA_INTER_BROKER_PASSWORD=password
      - KAFKA_CFG_LISTENERS=INTERNAL://:9092,EXTERNAL://:9093
      - KAFKA_CFG_LISTENER_SECURITY_PROTOCOL_MAP=INTERNAL:SASL_PLAINTEXT,EXTERNAL:SASL_PLAINTEXT
      - KAFKA_CFG_ADVERTISED_LISTENERS=INTERNAL://kafka:9092,EXTERNAL://localhost:9093
      - KAFKA_CFG_INTER_BROKER_LISTENER_NAME=INTERNAL
      - KAFKA_CFG_SASL_ENABLED_MECHANISMS=SCRAM-SHA-256
      - KAFKA_CFG_SASL_MECHANISM_INTER_BROKER_PROTOCOL=SCRAM-SHA-256
      - KAFKA_CFG_SECURITY_INTER_BROKER_PROTOCOL=SASL_PLAINTEXT
    volumes:
      - bitnami-config:/opt/bitnami/kafka/config
    depends_on:
      - zookeeper
  kafka_init:
    container_name: kafka_init
    image: docker.io/bitnami/kafka:3.2
    command:
      - /opt/bitnami/kafka/bin/kafka-topics.sh
      - --create
      - --bootstrap-server
      - kafka:9092
      - --topic
      - my-topic
      - --partitions
      - "1"
      - --replication-factor
      - "1"
    depends_on:
      - kafka
    environment:
      - KAFKA_OPTS=-Djava.security.auth.login.config=/opt/bitnami/kafka/config/kafka_jaas.conf
    volumes:
      - bitnami-config:/opt/bitnami/kafka/config:ro
volumes:
  bitnami-config:

topic gets created

kafka_init container fails to create topic

kafka container log shows lots of these:

[2022-07-21 10:26:27,096] INFO [SocketServer listenerType=ZK_BROKER, nodeId=1002] Failed authentication with /172.25.0.4 (channelId=172.25.0.3:9092-172.25.0.4:46096-58) (Unexpected Kafka request of type METADATA during SASL handshake.) (org.apache.kafka.common.network.Selector)
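One possible reading of the log line above, offered as an assumption rather than a confirmed diagnosis: the broker received a metadata request before any SASL handshake, which is what happens when a client connects to a SASL listener without SASL enabled in its own configuration. The sketch below renders client properties using the standard Kafka keys security.protocol, sasl.mechanism, and sasl.jaas.config. The helper name render_client_properties is hypothetical, and the credentials mirror the placeholder values from the compose file.

```python
def render_client_properties(username: str, password: str,
                             mechanism: str = "SCRAM-SHA-256",
                             protocol: str = "SASL_PLAINTEXT") -> str:
    """Render Kafka client settings that enable SASL/SCRAM on the client side."""
    jaas = (
        "org.apache.kafka.common.security.scram.ScramLoginModule required "
        f'username="{username}" password="{password}";'
    )
    lines = [
        f"security.protocol={protocol}",
        f"sasl.mechanism={mechanism}",
        f"sasl.jaas.config={jaas}",
    ]
    return "\n".join(lines) + "\n"


# Placeholder credentials taken from the compose file in this report.
print(render_client_properties("user", "password"), end="")
```

The rendered text could be saved to a file and passed to kafka-topics.sh through its --command-config option; whether that resolves this particular setup is untested here.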

$ cat /opt/bitnami/kafka/config/kafka_jaas.conf
KafkaServer {
  org.apache.kafka.common.security.scram.ScramLoginModule required
  username="user"
  password="password";
};
Client {
  org.apache.kafka.common.security.plain.PlainLoginModule required
  username="user"
  password="password";
};

Hi,

First thanks for this high quality container!

I have an issue very similar to the one described here. In short, I'd like to set a ppolicy that forces passwords to be hashed even if the user sends them in clear text and the client does not respect the LDAP Password Modify extended operation.

In the linked post, they solve it by adding the olcPPolicyHashCleartext attribute and setting it to true.

I've naively tried to make a similar modification as described in the last comment but failed (not an ldap expert), probably the DN is different, I get this error:

$ ldapadd -Q -Y EXTERNAL -H ldapi:/// -f _file.ldif

modifying entry "cn=module,cn=config"

ldap_modify: No such object (32)

matched DN: cn=config

Having a flag to turn on this feature would be very nice. Do you have any suggestions on how to achieve this?

Thanks!

bitnami/spark:*

Easy to view logs and code optimization, etc.

Reference documentation: https://spark.apache.org/docs/latest/monitoring.html#viewing-after-the-fact

You can use this command: spark-class org.apache.spark.deploy.history.HistoryServer

I can also customize the HistoryServer myself using apache/spark

bitnami/keycloak:18.0.2-debian-11-r3

keycloak should start up and pass healthchecks

No suitable driver found for jdbc:postgresql://keycloak-postgresql:5432/bitnami_keycloak?currentSchema=public

We rolled back to r0 for the time being, but it looks like the driver got removed in the newer versions?

When starting the bitnami/solr container for the second time with docker-compose, it fails with:

Port 8983 is already being used by another process (pid: 88)

Steps to reproduce the issue:

docker-compose up

docker-compose.yml file used:

version: '3.5'
services:
  bitnami-solr:
    image: bitnami/solr:8

docker-compose up

Describe the results you received:

On the very first startup, the solr instance starts up correctly and the container keeps running.

On second startup, the solr process is unable to use the same port again, probably because another instance of solr is running in the background and using that port.

The solr instance then exits with exit-code 1, and the container does the same. To be able to start a working solr container, I have to delete the existing container (i.e. with docker-compose down) and then start again.

Describe the results you expected:

I expect the container-image to be set up to allow for restarting an existing stopped container.

This problem seems to be caused by the ENTRYPOINT or CMD not properly handling the case where solr is already running in the background.

It may also be a mistake that solr is installed as a background service that automatically starts, since the ENTRYPOINT/CMD normally is supposed to be responsible for starting the "main" process of the container.

Additional information you deem important (e.g. issue happens only occasionally):

I can reproduce the error consistently with this extremely minimal docker-compose file.

Interestingly, sometimes the second startup will succeed and it will then fail on the third startup instead. I think this has to do with how quickly the container is stopped after startup.

Version

docker version:

Client:

Cloud integration: v1.0.20

Version: 20.10.10

API version: 1.41

Go version: go1.16.9

Git commit: b485636

Built: Mon Oct 25 07:47:53 2021

OS/Arch: windows/amd64

Context: default

Experimental: true

Server: Docker Engine - Community

Engine:

Version: 20.10.10

API version: 1.41 (minimum version 1.12)

Go version: go1.16.9

Git commit: e2f740d

Built: Mon Oct 25 07:41:30 2021

OS/Arch: linux/amd64

Experimental: false

containerd:

Version: 1.4.11

GitCommit: 5b46e404f6b9f661a205e28d59c982d3634148f8

runc:

Version: 1.0.2

GitCommit: v1.0.2-0-g52b36a2

docker-init:

Version: 0.19.0

GitCommit: de40ad0

docker info:

Client:

Context: default

Debug Mode: false

Plugins:

buildx: Build with BuildKit (Docker Inc., v0.6.3)

compose: Docker Compose (Docker Inc., v2.1.1)

scan: Docker Scan (Docker Inc., 0.9.0)

Server:

Containers: 8

Running: 5

Paused: 0

Stopped: 3

Images: 17

Server Version: 20.10.10

Storage Driver: overlay2

Backing Filesystem: extfs

Supports d_type: true

Native Overlay Diff: true

userxattr: false

Logging Driver: json-file

Cgroup Driver: cgroupfs

Cgroup Version: 1

Plugins:

Volume: local

Network: bridge host ipvlan macvlan null overlay

Log: awslogs fluentd gcplogs gelf journald json-file local logentries splunk syslog

Swarm: inactive

Runtimes: runc io.containerd.runc.v2 io.containerd.runtime.v1.linux

Default Runtime: runc

Init Binary: docker-init

containerd version: 5b46e404f6b9f661a205e28d59c982d3634148f8

runc version: v1.0.2-0-g52b36a2

init version: de40ad0

Security Options:

seccomp

Profile: default

Kernel Version: 5.10.60.1-microsoft-standard-WSL2

Operating System: Docker Desktop

OSType: linux

Architecture: x86_64

CPUs: 16

Total Memory: 24.99GiB

Name: docker-desktop

ID: GIQ4:NRXM:3EIE:WBFD:CUTU:CMIW:T7GJ:VCKM:O3I7:IBBK:SJXR:4BM4

Docker Root Dir: /var/lib/docker

Debug Mode: false

Registry: https://index.docker.io/v1/

Labels:

Experimental: false

Insecure Registries:

127.0.0.0/8

Live Restore Enabled: false

WARNING: No blkio throttle.read_bps_device support

WARNING: No blkio throttle.write_bps_device support

WARNING: No blkio throttle.read_iops_device support

WARNING: No blkio throttle.write_iops_device support

docker-compose version (if applicable):

Docker Compose version v2.1.1

Additional environment details (AWS, VirtualBox, Docker for MAC, physical, etc.):

bitnami/opencart 4.0.0.0

Looking to see when you might support 4.x

Moving to the latest version

building my own docker container.

bitnami/mongodb:4.4.14

We start with a simple docker-compose.yml to start a replica set with a single primary node, and a volume to persist db data:

version: '3.9'
services:
  mongo:
    image: bitnami/mongodb:4.4.14
    container_name: mongo
    ports:
      - 27030:27017
    volumes:
      - mongodata:/bitnami/mongodb
    environment:
      - MONGODB_ROOT_USER=root
      - MONGODB_ROOT_PASSWORD=root
      - MONGODB_REPLICA_SET_MODE=primary
      - MONGODB_REPLICA_SET_KEY=ThisIsAUniqueKeyThatShouldBeChanged123
volumes:
  mongodata:
    driver: local

Running docker-compose up the first time results in the replica set correctly starting with a single primary node:

...

"msg":"Transition to primary complete; database writes are now permitted"

Now stop the containers (by pressing ctrl+c in cli or docker-compose stop if running in detached mode) and run docker-compose down

Try to restart the container:

docker-compose up

Results in the following output:

..."msg":"This node is not a member of the config"

..."msg":"Replica set state transition","attr":{"newState":"REMOVED","oldState":"STARTUP"}

So it looks like the node did not start back up in primary mode. Please note that when using docker-compose stop and then docker-compose start it does seem to be able to restart correctly.

I should be able to restart a primary node from volume data.

Unable to restart primary node.

No response

Why should we delete all users and the databases that users created when the cluster is recovered from a non-primary node? I think this is dangerous.

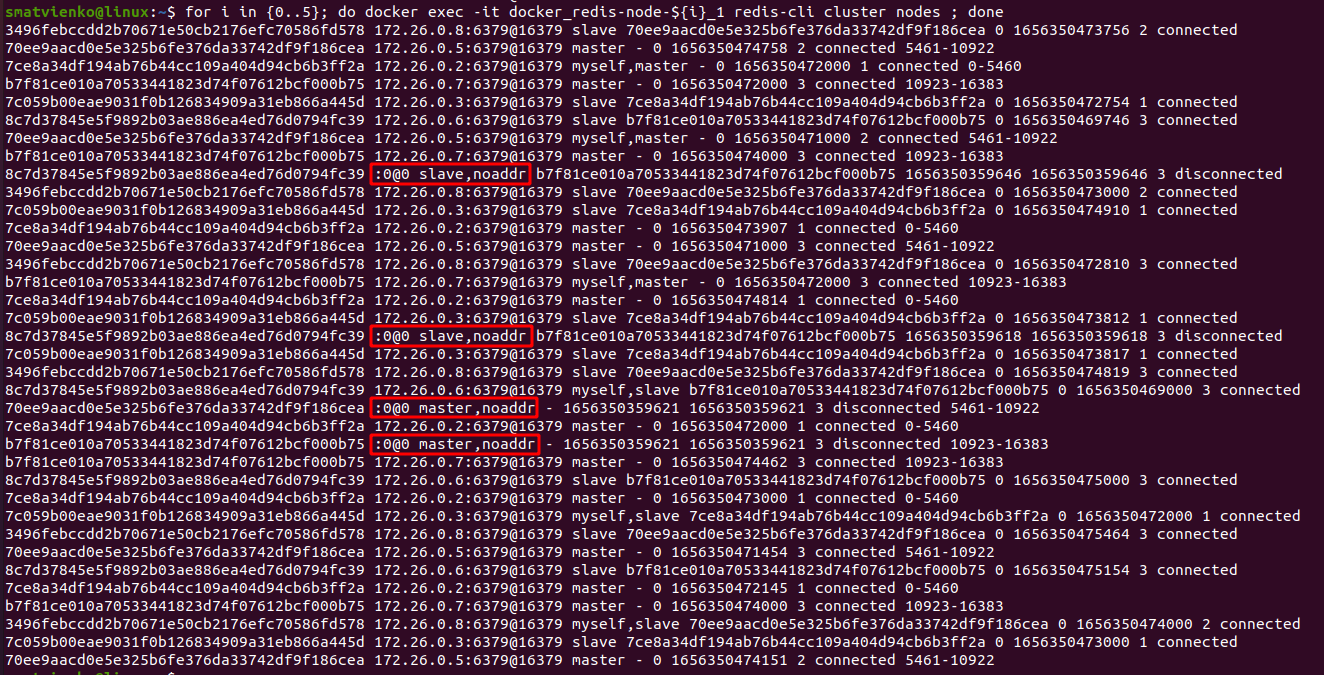

bitnami/redis-cluster:7.0

$ curl -sSL https://raw.githubusercontent.com/bitnami/bitnami-docker-redis-cluster/master/docker-compose.yml > docker-compose.yml

$ docker-compose up -d

Here is the command line to diagnose the cluster nodes

for i in {0..5}; do docker exec -it docker_redis-node-${i}_1 redis-cli cluster nodes ; done

Nominal cluster state on each node redis-cli cluster nodes:

Sometimes the cluster is not created properly when the nodes start in parallel.

(:0@0 master,noaddr , :0@0 slave,noaddr)
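The noaddr state shown above can be detected mechanically from the output of redis-cli cluster nodes. The sketch below is one way to do that, assuming the usual whitespace-separated layout in which the node ID, address, and comma-separated flags are the first three fields. The function name nodes_without_address and the sample IDs and addresses are made up for illustration.

```python
def nodes_without_address(cluster_nodes_output: str) -> list:
    """Return IDs of nodes whose flags include `noaddr` in CLUSTER NODES output."""
    bad = []
    for line in cluster_nodes_output.splitlines():
        fields = line.split()
        if len(fields) < 3:
            continue  # skip blank or truncated lines
        node_id, flags = fields[0], fields[2]
        if "noaddr" in flags.split(","):
            bad.append(node_id)
    return bad


# Made-up sample: one healthy node and two nodes in the broken state.
SAMPLE = """\
aaaa 172.18.0.2:6379@16379 myself,master - 0 0 1 connected 0-5460
bbbb :0@0 master,noaddr - 0 0 2 disconnected 5461-10922
cccc :0@0 slave,noaddr bbbb 0 0 0 disconnected
"""

print(nodes_without_address(SAMPLE))  # prints: ['bbbb', 'cccc']
```

A check like this could run after startup, for example as part of a health check, so that a badly formed cluster is caught before a client picks an unhealthy seed node.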

This leads to exceptions in the Jedis driver on ThingsBoard, and the cause is not obvious to a user.

Caused by: org.springframework.dao.DataAccessResourceFailureException: Cannot obtain connection to node 7ce8a34df194ab76b44cc109a404d94cb6b3ff2as as it is not associated with a hostname!

at org.springframework.data.redis.connection.jedis.JedisClusterConnection$JedisClusterNodeResourceProvider.getConnectionForSpecificNode(JedisClusterConnection.java:968)

at org.springframework.data.redis.connection.jedis.JedisClusterConnection$JedisClusterNodeResourceProvider.getResourceForSpecificNode(JedisClusterConnection.java:943)

at org.springframework.data.redis.connection.jedis.JedisClusterConnection$JedisClusterNodeResourceProvider.getResourceForSpecificNode(JedisClusterConnection.java:900)

at org.springframework.data.redis.connection.ClusterCommandExecutor.executeMultiKeyCommandOnSingleNode(ClusterCommandExecutor.java:312)

at org.springframework.data.redis.connection.ClusterCommandExecutor.lambda$executeMultiKeyCommand$2(ClusterCommandExecutor.java:297)

The problem fires randomly, probably when an unhealthy Redis node has been chosen as the seed node to discover cluster topology.
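A quick way to spot the broken state described above is to look for the noaddr flag in the output of redis-cli cluster nodes. The following is a minimal sketch, not part of the image: the function name cluster_nodes_have_addresses is hypothetical, and it only inspects text passed on stdin.

```shell
#!/usr/bin/env bash
# Sketch: flag cluster views that contain nodes without an address.
# Reads the text printed by "redis-cli cluster nodes" on stdin.
# Returns 0 when no line carries the "noaddr" flag, 1 otherwise.
# The function name is hypothetical and chosen for illustration.
cluster_nodes_have_addresses() {
    # grep -q exits 0 on a match, so the result is inverted.
    ! grep -q 'noaddr'
}

# Possible use with the container names from the diagnostic loop above
# (left commented out because it needs a running cluster):
# for i in {0..5}; do
#     docker exec "docker_redis-node-${i}_1" redis-cli cluster nodes \
#         | cluster_nodes_have_addresses || echo "node ${i} reports noaddr peers"
# done
```

A check like this could run before the application starts, so that a half-formed cluster is reported directly instead of surfacing later as a driver exception.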

before PR (:0@0 master,noaddr, :0@0 slave,noaddr):

Restarting the cluster does not help.

The issue reproduces quite reliably.

The workaround I found is to start the Redis nodes one by one using depends_on.

Here is my PR to ThingsBoard, where the Bitnami Redis cluster in docker-compose is used for black-box tests.

You can find the required depends_on lines in the changed files of that PR.

bitnami/airflow:latest

When I put my DAG file into /opt/bitnami/airflow/dags, I don't see it in the web UI. I searched the web but nothing worked for me.

No response

I don't see any DAG files.

No response

bitnami/apache:2.4

The container restarts normally.

The container fails to restart, with the logs below:

apache 02:00:53.42 INFO ==> ** Starting Apache **

httpd: Syntax error on line 162 of /opt/bitnami/apache/conf/httpd.conf: Cannot load /usr/lib/apache2/modules/mod_dav_svn.so into server: /usr/lib/x86_64-linux-gnu/libsvn_subr-1.so.1: undefined symbol: apr_crypto_block_cleanup

I had tried:

Nothing helped.

The official documentation states the following:

Due to the value of the setting SecAuditLogType=Concurrent the ModSecurity log is stored in multiple files inside the directory /var/log/audit. The default Serial value in SecAuditLogType can impact performance.

https://kubernetes.github.io/ingress-nginx/user-guide/third-party-addons/modsecurity/

It does not seem to be the case in the Bitnami image:

$ docker run --rm -ti --entrypoint= \

quay.io/bitnami/nginx-ingress-controller:1.0.4-debian-10-r8 \

grep -E '^(SecAuditLogType|SecAuditLogStorageDir)' /etc/nginx/modsecurity/modsecurity.conf

SecAuditLogType Serial

In the official image:

$ docker run --rm -ti --entrypoint= \

k8s.gcr.io/ingress-nginx/controller:v1.0.4 \

grep -E '^(SecAuditLogType|SecAuditLogStorageDir)' /etc/nginx/modsecurity/modsecurity.conf

SecAuditLogType Concurrent

SecAuditLogStorageDir /var/log/audit/

The change appears to be made by this script upstream:

https://github.com/kubernetes/ingress-nginx/blob/af7d9581f47113f4e2cfd7fac92ba02ae9cd49f0/images/nginx/rootfs/build.sh#L550-L554

It would be nice to have this discrepancy corrected in both the 0.x and 1.x Bitnami images.

bitnami/keycloak:18.0.2

Simplify docker-compose (and probably orchestrators) setup when I need to start a keycloak instance with a realm imported on startup.

I need to import a realm in json format when my KC service starts up. Essentially I wanted to run ./kc.sh -cf ... start-dev --import-realms. The --import-realms flag tells KC to check the $KC_HOME/data/import directory for json files and import those into its database as realms.

I wanted to solve this problem by

volumes section of docker-compose: mounting my realm.json into /opt/bitnami/keycloak/data/import/realm.json

The result: the data directory doesn't exist in the Docker image by default. Hence docker-compose created it for me in the container, but this way its owner was the root user. As a result, when I tried to log in to KC, KC couldn't create the $KC_HOME/data/tmp directory (permission error).

Create an empty /opt/bitnami/keycloak/data directory when building the Dockerfile so that it will be owned by the default user.

For now, I worked around the problem by:

adding these volumes to the compose file:

volumes:

- './import-realm.sh:/docker-entrypoint-initdb.d/0-import-realm.sh'

- './realm.json:/var/realm.json'

where import-realm.sh is

#!/bin/bash

cd /opt/bitnami/keycloak

mkdir -p data/import

cp /var/realm.json data/import

But this took quite some time to figure out, and it feels like a clumsy workaround.

Currently, container images are published in different container registries, with Google Container Registry (GCR) and DockerHub being the most popular ones.

In order to unify the source of truth, we decided to not publish new versions in GCR, keeping DockerHub as the only official registry at this time.

Helm charts use DockerHub by default (image:registry: docker.io), so default deployments are not affected.

Please note that Bitnami has been a verified publisher since last year, which means that container images under the Bitnami organization are exempt from rate limiting.

For now, and until further notice, container images already present in the gcr.io/bitnami-containers/ repositories won't be deleted, just no new ones will appear. If at some point we decide to remove this registry and its images, we will update this issue.

bitnami/openldap:2.6

Set your OpenLDAP to listen on the official port 636

A message about a privileged port is emitted

It has to work without emitting that message. There is no reason for this behaviour. The container scripts should be smarter and check whether the privileged port parameter has been overridden.

Error in container log about privileged port use

bitnami/mongodb:latest

Hi, I am deploying the bitnami/mongodb:latest container as an Azure Container Instance (ACI) in a group, using an ARM template inside a vNET. The bitnami/mongodb container runs as a non-root user, but mounting an Azure file share volume requires the Linux container to run as root. Looking at the documentation:

(link: https://docs.bitnami.com/tutorials/work-with-non-root-containers)

it states:

If you wish to run a Bitnami non-root container image as a root container image, you can do so by adding the line user: root right after the image: directive in the container's docker-compose.yml

How can I achieve the same using an ARM template, since it doesn't appear to have any property for it?

I've tried various options but nothing has worked for me so far. One possible solution was to explore the init container option:

(link: https://docs.microsoft.com/en-us/azure/container-instances/container-instances-init-container)

So I tried using an init container to see if I could update the permissions/ownership on the mounted volume, e.g. "chown 1001:1001 -R /bitnami". However, I don't have the option to declare a "depends_on", so as far as I can tell an image that just changes permissions wouldn't necessarily run before the MongoDB container itself. According to the Microsoft documentation, the MongoDB container won't be started until the init container has finished: "Init containers run to completion before the application container or containers start." Even so, in my testing this approach doesn't make any difference.

I also tried a new mount path, i.e. /data/mongoaz instead of the default path /data/db, based on some suggestions online related to the mongo container (not the Bitnami image), but it didn't work either, as this goes back to the permission issue.

Here is the error that I'm getting which I believe is expected to see in this environment unless we can fix the permissions problem.

{"t":{"$date":"2022-07-25T17:12:03.203+00:00"},"s":"I", "c":"CONTROL", "id":21951, "ctx":"initandlisten","msg":"Options set by command line","attr":{"options":{"storage":{"dbPath":"/data/mongoaz"}}}}

{"t":{"$date":"2022-07-25T17:12:03.286+00:00"},"s":"I", "c":"STORAGE", "id":22315, "ctx":"initandlisten","msg":"Opening WiredTiger","attr":{"config":"create,cache_size=483M,session_max=33000,eviction=(threads_min=4,threads_max=4),config_base=false,statistics=(fast),log=(enabled=true,archive=true,path=journal,compressor=snappy),builtin_extension_config=(zstd=(compression_level=6)),file_manager=(close_idle_time=600,close_scan_interval=10,close_handle_minimum=250),statistics_log=(wait=0),verbose=[recovery_progress,checkpoint_progress,compact_progress],"}}

{"t":{"$date":"2022-07-25T17:12:04.253+00:00"},"s":"E", "c":"STORAGE", "id":22435, "ctx":"initandlisten","msg":"WiredTiger error","attr":{"error":1,"message":"[1658769124:253222][4195:0x7fc83c453c80], connection: __posix_open_file, 808: /data/mongoaz/WiredTiger.wt: handle-open: open: Operation not permitted"}}

{"t":{"$date":"2022-07-25T17:12:04.330+00:00"},"s":"E", "c":"STORAGE", "id":22435, "ctx":"initandlisten","msg":"WiredTiger error","attr":{"error":17,"message":"[1658769124:330802][4195:0x7fc83c453c80], connection: __posix_open_file, 808: /data/mongoaz/WiredTiger.wt: handle-open: open: File exists"}}

{"t":{"$date":"2022-07-25T17:12:04.354+00:00"},"s":"I", "c":"STORAGE", "id":22430, "ctx":"initandlisten","msg":"WiredTiger message","attr":{"message":"unexpected file WiredTiger.wt found, renamed to WiredTiger.wt.1"}}

{"t":{"$date":"2022-07-25T17:12:04.366+00:00"},"s":"E", "c":"STORAGE", "id":22435, "ctx":"initandlisten","msg":"WiredTiger error","attr":{"error":1,"message":"[1658769124:366555][4195:0x7fc83c453c80], connection: __posix_open_file, 808: /data/mongoaz/WiredTiger.wt: handle-open: open: Operation not permitted"}}

{"t":{"$date":"2022-07-25T17:12:04.438+00:00"},"s":"E", "c":"STORAGE", "id":22435, "ctx":"initandlisten","msg":"WiredTiger error","attr":{"error":17,"message":"[1658769124:438502][4195:0x7fc83c453c80], connection: __posix_open_file, 808: /data/mongoaz/WiredTiger.wt: handle-open: open: File exists"}}

{"t":{"$date":"2022-07-25T17:12:04.460+00:00"},"s":"I", "c":"STORAGE", "id":22430, "ctx":"initandlisten","msg":"WiredTiger message","attr":{"message":"unexpected file WiredTiger.wt found, renamed to WiredTiger.wt.2"}}

{"t":{"$date":"2022-07-25T17:12:04.473+00:00"},"s":"E", "c":"STORAGE", "id":22435, "ctx":"initandlisten","msg":"WiredTiger error","attr":{"error":1,"message":"[1658769124:473025][4195:0x7fc83c453c80], connection: __posix_open_file, 808: /data/mongoaz/WiredTiger.wt: handle-open: open: Operation not permitted"}}

{"t":{"$date":"2022-07-25T17:12:04.480+00:00"},"s":"W", "c":"STORAGE", "id":22347, "ctx":"initandlisten","msg":"Failed to start up WiredTiger under any compatibility version. This may be due to an unsupported upgrade or downgrade."}

{"t":{"$date":"2022-07-25T17:12:04.480+00:00"},"s":"F", "c":"STORAGE", "id":28595, "ctx":"initandlisten","msg":"Terminating.","attr":{"reason":"1: Operation not permitted"}}

{"t":{"$date":"2022-07-25T17:12:04.480+00:00"},"s":"F", "c":"-", "id":23091, "ctx":"initandlisten","msg":"Fatal assertion","attr":{"msgid":28595,"file":"src/mongo/db/storage/wiredtiger/wiredtiger_kv_engine.cpp","line":687}}

{"t":{"$date":"2022-07-25T17:12:04.481+00:00"},"s":"F", "c":"-", "id":23092, "ctx":"initandlisten","msg":"\n\n***aborting after fassert() failure\n\n"}

I am trying to have both an Azure file share mounted volume and mongo authentication enabled in the same ARM template file. Can this be done?

Azure File Share Mounted with bitnami/mongodb container that is running as non-root.

Container is crashing as per the error details above.

I can share my ARM template if needed.

Description

I'm trying to specify a custom pg_hba.conf file for my postgresql-repmgr cluster consisting of two nodes in a streaming replication setup.

Unfortunately I cannot use the bind mount approach from the README as I'm using docker swarm.

Therefore I've tried two other approaches -- both without success.

/bitnami/repmgr/conf/pg_hba.conf using the docker swarm config mechanism.

/bitnami/repmgr/conf as a volume with the nfs driver.

Approach 1

The excerpt from my compose file:

[...]

services:

pg-0:

image: bitnami/postgresql-repmgr:12.3.0

[...]

configs:

- source: pg_hba.conf

target: /bitnami/repmgr/conf/pg_hba.conf

uid: "1001"

gid: "0"

mode: 0774

[...]

configs:

pg_hba.conf:

file: pg_hba.conf

[...]

The resulting error message (from my log server):

"2020-05-25T10:58:02.049Z","t460s-dockerswarm-2","repmgr 10:58:02.04 INFO ==> Preparing PostgreSQL configuration..."

"2020-05-25T10:58:02.155Z","t460s-dockerswarm-2","postgresql 10:58:02.15 INFO ==> Stopping PostgreSQL..."

"2020-05-25T10:58:02.152Z","t460s-dockerswarm-2","cp: cannot create regular file '/bitnami/postgresql/conf/pg_hba.conf': Permission denied"

"2020-05-25T10:58:02.034Z","t460s-dockerswarm-2","repmgr 10:58:02.03 INFO ==> There are no nodes with primary role. Assuming the primary role..."

"2020-05-25T10:58:02.056Z","t460s-dockerswarm-2","postgresql 10:58:02.05 INFO ==> postgresql.conf file not detected. Generating it..."

Notes:

When I add user: root to my services to circumvent issues due to the non-root container, I encounter the same password authentication issue as in approach 2.

Approach 2

The excerpt from my compose file:

[...]

services:

pg-0:

image: bitnami/postgresql-repmgr:12.3.0

[...]

volumes:

- pg-primary-vol:/bitnami/postgresql

- pg-config-vol:/bitnami/repmgr/conf/

[...]

volumes:

pg-primary-vol:

pg-config-vol:

driver: local

driver_opts:

type: "nfs"

o: "nfsvers=4,addr=192.168.137.110,rw"

device: ":/mnt/storage1/postgresql/conf"

[...]

The resulting error message (from docker service logs):

postgres_pg-0.1.akaolj1sa768@t460s-dockerswarm-2 | [2020-05-25 11:33:45] [NOTICE] repmgrd (repmgrd 5.1.0) starting up

postgres_pg-0.1.akaolj1sa768@t460s-dockerswarm-2 | [2020-05-25 11:33:45] [INFO] connecting to database "user=repmgr password=repmgr host=pg-0 dbname=repmgr port=5432 connect_timeout=5"

postgres_pg-0.1.akaolj1sa768@t460s-dockerswarm-2 | [2020-05-25 11:33:45] [DEBUG] connecting to: "user=repmgr password=repmgr connect_timeout=5 dbname=repmgr host=pg-0 port=5432 fallback_application_name=repmgr"

postgres_pg-0.1.akaolj1sa768@t460s-dockerswarm-2 | [2020-05-25 11:33:45] [ERROR] connection to database failed

postgres_pg-0.1.akaolj1sa768@t460s-dockerswarm-2 | [2020-05-25 11:33:45] [DETAIL]

postgres_pg-0.1.akaolj1sa768@t460s-dockerswarm-2 | FATAL: password authentication failed for user "repmgr"

postgres_pg-0.1.akaolj1sa768@t460s-dockerswarm-2 |

postgres_pg-0.1.akaolj1sa768@t460s-dockerswarm-2 | [2020-05-25 11:33:45] [DETAIL] attempted to connect using:

postgres_pg-0.1.akaolj1sa768@t460s-dockerswarm-2 | user=repmgr password=repmgr connect_timeout=5 dbname=repmgr host=pg-0 port=5432 fallback_application_name=repmgr

Notes:

Approach 3

I've taken the example docker-compose.yml file and added this bind mount to both postgres services:

volumes:

- ./conf:/bitnami/repmgr/conf/

Of course I've also set the correct permissions on the host folder and its content.

As long as the folder is empty everything works fine. As soon as I add the pg_hba.conf the start of the primary container fails with error 2 on the first run:

[...]

pg-0_1 | postgresql 20:17:35.41 INFO ==> Initializing PostgreSQL database...

pg-0_1 | postgresql 20:17:35.41 INFO ==> Custom configuration /opt/bitnami/postgresql/conf/postgresql.conf detected

pg-0_1 | postgresql 20:17:35.42 INFO ==> Custom configuration /opt/bitnami/postgresql/conf/pg_hba.conf detected

pg-0_1 | postgresql 20:17:36.89 INFO ==> Starting PostgreSQL in background...

pg-0_1 | postgresql 20:17:37.03 INFO ==> Changing password of postgres

pg-0_1 | postgresql 20:17:37.05 INFO ==> Stopping PostgreSQL...

postgres_pg-0_1 exited with code 2

On the second and each subsequent run (without deleting my volumes) I get this already known error:

[...]

pg-0_1 | postgresql 20:17:55.19 INFO ==> Deploying PostgreSQL with persisted data...

pg-1_1 | repmgr 20:17:55.20 INFO ==> Preparing repmgr configuration...

pg-1_1 | repmgr 20:17:55.21 INFO ==> Initializing Repmgr...

pg-1_1 | repmgr 20:17:55.22 INFO ==> Waiting for primary node...

pg-0_1 | postgresql 20:17:55.22 INFO ==> Stopping PostgreSQL...

pg-0_1 | postgresql-repmgr 20:17:55.23 INFO ==> ** PostgreSQL with Replication Manager setup finished! **

pg-0_1 |

pg-0_1 | postgresql 20:17:55.30 INFO ==> Starting PostgreSQL in background...

pg-0_1 | postgresql-repmgr 20:17:55.43 INFO ==> ** Starting repmgrd **

pg-0_1 | [2020-05-25 20:17:55] [NOTICE] repmgrd (repmgrd 5.1.0) starting up

pg-0_1 | [2020-05-25 20:17:55] [INFO] connecting to database "user=repmgr password=repmgr host=pg-0 dbname=repmgr port=5432 connect_timeout=5"

pg-0_1 | [2020-05-25 20:17:55] [DEBUG] connecting to: "user=repmgr password=repmgr connect_timeout=5 dbname=repmgr host=pg-0 port=5432 fallback_application_name=repmgr"

pg-0_1 | [2020-05-25 20:17:55] [ERROR] connection to database failed

pg-0_1 | [2020-05-25 20:17:55] [DETAIL]

pg-0_1 | FATAL: password authentication failed for user "repmgr"

pg-0_1 |

pg-0_1 | [2020-05-25 20:17:55] [DETAIL] attempted to connect using:

pg-0_1 | user=repmgr password=repmgr connect_timeout=5 dbname=repmgr host=pg-0 port=5432 fallback_application_name=repmgr

postgres_pg-0_1 exited with code 6

pg-1_1 | postgresql 20:19:56.06 INFO ==> Stopping PostgreSQL...

postgres_pg-1_1 exited with code 1

General steps to reproduce the issues in approach 1 and 2

When modifying and testing my docker-compose.yml file I use these steps to have a clean setup each time:

docker stack rm postgres

docker volume prune on all involved docker nodes

docker stack deploy --compose-file docker-compose.yml postgres

The full docker-compose.yml file used in approach 1 and 2

(approach 2 is currently active; the approach 1 elements are commented out):

---

version: "3.8"

services:

pg-0:

image: bitnami/postgresql-repmgr:12.3.0

environment:

- POSTGRESQL_PASSWORD_FILE=/run/secrets/postgres_password

- REPMGR_PARTNER_NODES=pg-0,pg-1

- REPMGR_NODE_NAME=pg-0

- REPMGR_NODE_NETWORK_NAME=pg-0

- REPMGR_PRIMARY_HOST=pg-0

- REPMGR_PASSWORD_FILE=/run/secrets/repmgr_password

- REPMGR_LOG_LEVEL=DEBUG

volumes:

- pg-primary-vol:/bitnami/postgresql

- pg-config-vol:/bitnami/repmgr/conf/

- type: tmpfs

target: /dev/shm

tmpfs:

size: 256000000

ports:

- "5432:5432"

networks:

- application-net

deploy:

placement:

constraints:

- node.labels.type == primary

- node.role == worker

#endpoint_mode: dnsrr

configs:

- source: additional-postgresql.conf

target: /bitnami/postgresql/conf/conf.d/additional-postgresql.conf

#- source: pg_hba.conf

# target: /bitnami/repmgr/conf/pg_hba.conf

# uid: "1001"

# gid: "0"

# mode: 0774

secrets:

- postgres_password

- repmgr_password

#logging:

# driver: gelf

# options:

# gelf-address: 'tcp://192.168.137.101:12201'

pg-1:

image: bitnami/postgresql-repmgr:12.3.0

environment:

- POSTGRESQL_PASSWORD_FILE=/run/secrets/postgres_password

- REPMGR_PARTNER_NODES=pg-0,pg-1

- REPMGR_NODE_NAME=pg-1

- REPMGR_NODE_NETWORK_NAME=pg-1

- REPMGR_PRIMARY_HOST=pg-0

- REPMGR_PASSWORD_FILE=/run/secrets/repmgr_password

volumes:

- pg-replica-vol:/bitnami/postgresql

- pg-config-vol:/bitnami/repmgr/conf/

- type: tmpfs

target: /dev/shm

tmpfs:

size: 256000000

ports:

- "5433:5432"

networks:

- application-net

deploy:

placement:

constraints:

- node.labels.type != primary

- node.role == worker

#endpoint_mode: dnsrr

configs:

- source: additional-postgresql.conf

target: /bitnami/postgresql/conf/conf.d/additional-postgresql.conf

#- source: pg_hba.conf

# target: /bitnami/repmgr/conf/pg_hba.conf

# uid: "1001"

# gid: "0"

# mode: 0774

secrets:

- postgres_password

- repmgr_password

#logging:

# driver: gelf

# options:

# gelf-address: 'tcp://192.168.137.101:12201'

networks:

application-net:

driver: overlay

driver_opts:

encrypted: "true"

volumes:

pg-primary-vol:

pg-replica-vol:

pg-config-vol:

driver: local

driver_opts:

type: "nfs"

o: "nfsvers=4,addr=192.168.137.110,rw"

device: ":/mnt/storage1/postgresql/conf"

configs:

additional-postgresql.conf:

file: additional-postgresql.conf

name: additional-postgresql.conf-${ADDITIONAL_POSTGRES_CONF}

pg_hba.conf:

file: pg_hba.conf

name: pg_hba.conf-${PG_HBA_CONF}

secrets:

postgres_password:

external: true

repmgr_password:

external: true

Output of docker version

Client: Docker Engine - Community

Version: 19.03.8

API version: 1.40

Go version: go1.12.17

Git commit: afacb8b7f0

Built: Wed Mar 11 01:25:56 2020

OS/Arch: linux/amd64

Experimental: false

Server: Docker Engine - Community

Engine:

Version: 19.03.8

API version: 1.40 (minimum version 1.12)

Go version: go1.12.17

Git commit: afacb8b7f0

Built: Wed Mar 11 01:24:28 2020

OS/Arch: linux/amd64

Experimental: false

containerd:

Version: 1.2.13

GitCommit: 7ad184331fa3e55e52b890ea95e65ba581ae3429

runc:

Version: 1.0.0-rc10

GitCommit: dc9208a3303feef5b3839f4323d9beb36df0a9dd

docker-init:

Version: 0.18.0

GitCommit: fec3683

Output of docker info

Client:

Debug Mode: false

Server:

Containers: 3

Running: 1

Paused: 0

Stopped: 2

Images: 8

Server Version: 19.03.8

Storage Driver: overlay2

Backing Filesystem: <unknown>

Supports d_type: true

Native Overlay Diff: true

Logging Driver: json-file

Cgroup Driver: cgroupfs

Plugins:

Volume: local

Network: bridge host ipvlan macvlan null overlay

Log: awslogs fluentd gcplogs gelf journald json-file local logentries splunk syslog

Swarm: active

NodeID: t1hnu0591w01txen8chuue8y0

Is Manager: true

ClusterID: lv4nsc1znjt3nvuam6sr4jgt7

Managers: 1

Nodes: 3

Default Address Pool: 10.0.0.0/8

SubnetSize: 24

Data Path Port: 4789

Orchestration:

Task History Retention Limit: 5

Raft:

Snapshot Interval: 10000

Number of Old Snapshots to Retain: 0

Heartbeat Tick: 1

Election Tick: 10

Dispatcher:

Heartbeat Period: 5 seconds

CA Configuration:

Expiry Duration: 3 months

Force Rotate: 0

Autolock Managers: false

Root Rotation In Progress: false

Node Address: 192.168.137.101

Manager Addresses:

192.168.137.101:2377

Runtimes: runc

Default Runtime: runc

Init Binary: docker-init

containerd version: 7ad184331fa3e55e52b890ea95e65ba581ae3429

runc version: dc9208a3303feef5b3839f4323d9beb36df0a9dd

init version: fec3683

Security Options:

apparmor

seccomp

Profile: default

Kernel Version: 4.19.0-9-amd64

Operating System: Debian GNU/Linux 10 (buster)

OSType: linux

Architecture: x86_64

CPUs: 1

Total Memory: 1.886GiB

Name: t460s-dockerswarm-1

ID: HZD6:A6CE:KFV6:7YME:DOSW:QUPB:NVVH:CZBC:3KWJ:BMHO:EY6S:JMHF

Docker Root Dir: /var/lib/docker

Debug Mode: false

Registry: https://index.docker.io/v1/

Labels:

Experimental: false

Insecure Registries:

127.0.0.0/8

Live Restore Enabled: false

WARNING: No swap limit support

Description

A cluster member bootstraps when none of the hosts from clusterAddress=gcomm://... is listed in /etc/hosts, even though we want clusterBootstrap=no.

This can be seen in the output:

2020-07-28 9:25:48 0 [Note] WSREP: Service thread queue flushed.

2020-07-28 9:25:48 0 [Note] WSREP: ####### Assign initial position for certification: 4c4debf7-d0b4-11ea-81f5-9778c5f86c84:12, protocol version: -1

2020-07-28 9:25:48 0 [Note] WSREP: Start replication

2020-07-28 9:25:48 0 [Note] WSREP: Connecting with bootstrap option: 1

2020-07-28 9:25:48 0 [Note] WSREP: Setting GCS initial position to 4c4debf7-d0b4-11ea-81f5-9778c5f86c84:12

2020-07-28 9:25:48 0 [Note] WSREP: protonet asio version 0

2020-07-28 9:25:48 0 [Note] WSREP: Using CRC-32C for message checksums.

2020-07-28 9:25:48 0 [Note] WSREP: backend: asio

2020-07-28 9:25:48 0 [Note] WSREP: gcomm thread scheduling priority set to other:0

2020-07-28 9:25:48 0 [Warning] WSREP: access file(/bitnami/mariadb/data//gvwstate.dat) failed(No such file or directory)

--> bootstrap option: 1 instead of 0

Steps to reproduce the issue:

have an empty /etc/hosts like:

root@host2:/opt/secon/docker-containers/mariadb# cat /etc/hosts

127.0.0.1 localhost

127.0.1.1 host2

docker-compose.yml:

root@host2:/opt/secon/docker-containers/mariadb# cat docker-compose.yml

version: "3.7"

services:

mariadb:

image: bitnami/mariadb-galera

container_name: mariadb

env_file:

- ./secret-mariadb.env

- /data/docker/mariadb/mariadb.env

network_mode: "host"

ports:

- 3306:3306

- 4567:4567

restart: unless-stopped

volumes:

- /data/docker/mariadb/volumes/bitnami/mariadb:/bitnami/mariadb

(note: network_mode host does not make any difference)

env file1: secret-mariadb.env

root@host2:/opt/secon/docker-containers/mariadb# cat secret-mariadb.env

MARIADB_ROOT_PASSWORD=abcd

MARIADB_USER=user

MARIADB_PASSWORD=userpassword

MARIADB_GALERA_MARIABACKUP_USER=backupser

MARIADB_GALERA_MARIABACKUP_PASSWORD=backuppass

env file2: /data/docker/mariadb/mariadb.env

root@host2:/opt/secon/docker-containers/mariadb# cat /data/docker/mariadb/mariadb.env

MARIADB_GALERA_CLUSTER_ADDRESS=gcomm://198.18.1.1:4567,198.19.121.176:4567,0.0.0.0:4567

MARIADB_GALERA_CLUSTER_NAME=secondb

MARIADB_GALERA_NODE_ADDRESS=198.18.184.74

Describe the results you received:

2020-07-28 9:25:48 0 [Note] WSREP: ####### Assign initial position for certification: 4c4debf7-d0b4-11ea-81f5-9778c5f86c84:12, protocol version: -1

2020-07-28 9:25:48 0 [Note] WSREP: Start replication

2020-07-28 9:25:48 0 [Note] WSREP: Connecting with bootstrap option: 1

2020-07-28 9:25:48 0 [Note] WSREP: Setting GCS initial position to 4c4debf7-d0b4-11ea-81f5-9778c5f86c84:12

2020-07-28 9:25:48 0 [Note] WSREP: protonet asio version 0

2020-07-28 9:25:48 0 [Note] WSREP: Using CRC-32C for message checksums.

2020-07-28 9:25:48 0 [Note] WSREP: backend: asio

2020-07-28 9:25:48 0 [Note] WSREP: gcomm thread scheduling priority set to other:0

Describe the results you expected:

2020-07-28 9:31:01 0 [Note] WSREP: Start replication

2020-07-28 9:31:01 0 [Note] WSREP: Connecting with bootstrap option: 0

2020-07-28 9:31:01 0 [Note] WSREP: Setting GCS initial position to 00000000-0000-0000-0000-000000000000:-1

2020-07-28 9:31:01 0 [Note] WSREP: protonet asio version 0

2020-07-28 9:31:01 0 [Note] WSREP: Using CRC-32C for message checksums.

2020-07-28 9:31:01 0 [Note] WSREP: backend: asio

2020-07-28 9:31:01 0 [Note] WSREP: gcomm thread scheduling priority set to other:0

2020-07-28 9:31:01 0 [Warning] WSREP: access file(/bitnami/mariadb/data//gvwstate.dat) failed(No such file or directory)

Additional information you deem important (e.g. issue happens only occasionally):

added/extended host to /etc/hosts:

root@host2:/opt/secon/docker-containers/mariadb# cat /etc/hosts

127.0.0.1 localhost

127.0.1.1 host2

198.19.121.176 host1

Version

docker version:

root@host2:/opt/secon/docker-containers/mariadb# docker version

Client: Docker Engine - Community

Version: 19.03.12

API version: 1.40

Go version: go1.13.10

Git commit: 48a66213fe

Built: Mon Jun 22 15:45:44 2020

OS/Arch: linux/amd64

Experimental: false

Server: Docker Engine - Community

Engine:

Version: 19.03.12

API version: 1.40 (minimum version 1.12)

Go version: go1.13.10

Git commit: 48a66213fe

Built: Mon Jun 22 15:44:15 2020

OS/Arch: linux/amd64

Experimental: false

containerd:

Version: 1.2.13

GitCommit: 7ad184331fa3e55e52b890ea95e65ba581ae3429

runc:

Version: 1.0.0-rc10

GitCommit: dc9208a3303feef5b3839f4323d9beb36df0a9dd

docker-init:

Version: 0.18.0

GitCommit: fec3683

docker info:

root@host2:/opt/secon/docker-containers/mariadb# docker info

Client:

Debug Mode: false

Server:

Containers: 2

Running: 1

Paused: 0

Stopped: 1

Images: 39

Server Version: 19.03.12

Storage Driver: overlay2

Backing Filesystem: extfs

Supports d_type: true

Native Overlay Diff: true

Logging Driver: json-file

Cgroup Driver: cgroupfs

Plugins:

Volume: local

Network: bridge host ipvlan macvlan null overlay

Log: awslogs fluentd gcplogs gelf journald json-file local logentries splunk syslog

Swarm: inactive

Runtimes: runc

Default Runtime: runc

Init Binary: docker-init

containerd version: 7ad184331fa3e55e52b890ea95e65ba581ae3429

runc version: dc9208a3303feef5b3839f4323d9beb36df0a9dd

init version: fec3683

Security Options:

apparmor

seccomp

Profile: default

Kernel Version: 5.4.0-42-generic

Operating System: Ubuntu 20.04.1 LTS

OSType: linux

Architecture: x86_64

CPUs: 8

Total Memory: 58.88GiB

Name: host2

ID: HCLY:7DTL:UWCH:KIWS:FJCR:ZUVR:Z74C:HK4S:I5PL:67PL:XRWE:YNYC

Docker Root Dir: /var/lib/docker

Debug Mode: false

Registry: https://index.docker.io/v1/

Labels:

Experimental: false

Insecure Registries:

127.0.0.0/8

Live Restore Enabled: false

WARNING: No swap limit support

WARNING: IPv4 forwarding is disabled

docker-compose version (if applicable):

root@host2:/opt/secon/docker-containers/mariadb# docker-compose version

docker-compose version 1.25.0, build unknown

docker-py version: 4.1.0

CPython version: 3.8.2

OpenSSL version: OpenSSL 1.1.1f 31 Mar 2020

Additional environment details (AWS, VirtualBox, Docker for MAC, physical, etc.):

** suspected bug **

I suspect a bug in the file: /opt/bitnami/scripts/libmariadbgalera.sh

get_galera_cluster_bootstrap_value() {

local clusterBootstrap

local local_ip

local host_ip

# This block evaluate if the cluster needs to be boostraped or not.

# When the node is marked to bootstrap:

# - We want to have bootstrap enabled when executing up to "run.sh" (included), for the first time.

# To do this, we check if the node has already been initialized before with "get_previous_boot".

# - For the second "setup.sh" and "run.sh" calls, it will automatically detect the cluster was already bootstrapped, so it disables it.

# That way, the node will join the existing Galera cluster instead of bootstrapping a new one.

# We disable the bootstrap right after processing environment variables in "run.sh" with "set_previous_boot".

# - Users can force a bootstrap to happen again on a node, by setting the environment variable "MARIADB_GALERA_FORCE_SAFETOBOOTSTRAP".

# When the node is not marked to bootstrap, the node will join an existing cluster.

clusterBootstrap="$DB_GALERA_CLUSTER_BOOTSTRAP"

if is_boolean_yes "$clusterBootstrap"; then

if is_boolean_yes "$(get_previous_boot)"; then

clusterBootstrap="no"

fi

else

local clusterAddress

clusterAddress="$DB_GALERA_CLUSTER_ADDRESS" # clusterAddress=gcomm://198.18.1.1:4567,198.19.121.176:4567,0.0.0.0:4567

if [[ -z "$clusterAddress" ]]; then

clusterBootstrap="yes"

elif [[ -n "$clusterAddress" ]]; then

clusterBootstrap="yes" # culprit will be set here

local_ip=$(hostname -i)

read -r -a hosts <<< "$(tr ',' ' ' <<< "${clusterAddress#*://}")"

if [[ "${#hosts[@]}" -eq "1" ]]; then

read -r -a cluster_ips <<< "$(getent hosts "${hosts[0]}" | awk '{print $1}' | tr '\n' ' ')"

if [[ "${#cluster_ips[@]}" -gt "1" ]] || ( [[ "${#cluster_ips[@]}" -eq "1" ]] && [[ "${cluster_ips[0]}" != "$local_ip" ]] ) ; then

clusterBootstrap="no"

else

clusterBootstrap="yes"

fi

else

for host in "${hosts[@]}"; do

host_ip=$(getent hosts "${host%:*}" | awk '{print $1}')

if [[ -n "$host_ip" ]] && [[ "$host_ip" != "$local_ip" ]]; then

clusterBootstrap="no"

fi

done

fi

fi

fi

echo "$clusterBootstrap"

}

I assume the issue is at the line marked with 'culprit'.
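To make the suspected behaviour easier to see, here is a simplified model of the multi-host branch of the function quoted above. It is a sketch under assumptions: the name bootstrap_decision is hypothetical, and the getent lookups are replaced by values passed as arguments, with an empty string standing for a host that could not be resolved.

```shell
#!/usr/bin/env bash
# Simplified model of the multi-host branch quoted above (not the real script).
# $1 is the local IP; every further argument is the lookup result for one host
# of the cluster address, with "" meaning the lookup returned nothing.
bootstrap_decision() {
    local local_ip="$1"
    shift
    local decision="yes"   # same starting value as the line marked 'culprit'
    local host_ip
    for host_ip in "$@"; do
        # Only a resolvable host with a different IP switches bootstrap off.
        if [[ -n "$host_ip" && "$host_ip" != "$local_ip" ]]; then
            decision="no"
        fi
    done
    echo "$decision"
}
```

With three unresolved hosts, bootstrap_decision 198.18.184.74 "" "" "" leaves the initial "yes" in place, which matches the "bootstrap option: 1" line in the logs. As soon as one peer resolves to a foreign address, the result is "no".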

Description

Our vulnerability scanning tool detects 2 versions of Go within the mariadb docker image 10.4.21-debian-10-r32.

This causes the same CVE to be raised twice, once for each version.

Steps to reproduce the issue:

Launching the scanning tool on bitnami mariadb docker image

Describe the results you received:

Extract from the tool result:

"applications": [

{

"name": "go",

"version": "1.16.7",

"path": "/opt/bitnami/common/bin/gosu"

},

{

"name": "go",

"version": "1.16.6",

"path": "/opt/bitnami/common/bin/ini-file"

}

Describe the results you expected:

Expecting only 1 version of go installed on mariadb bitnami docker image

bitnami/bitnami-docker-redis

I am getting

mkdir: cannot create directory '/opt/bitnami/redis/tmp' : Permission Denied

However, I have a restriction: I can't grant root permissions.

Can we somehow override this location?

No response

Permission denied

No response

Description

During persistence initialization, if the copy takes too long and the container is killed by the startupProbe, the wordpress_initialize function does not detect the partially initialized directory on the next start.

Steps to reproduce the issue:

Describe the results you received:

Errors in WordPress due to missing plugins.

Describe the results you expected:

wordpress_initialize should be atomic: for example, create a .wordpress_initialize file in the volume at the end of the function, and check for that file to detect a fully initialized persistent volume.
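The marker-file idea could look roughly like the sketch below. It is an illustration under assumptions, not the image's actual wordpress_initialize: the function name `initialize_volume`, its two arguments, and the plain `cp` copy step are hypothetical, and only the `.wordpress_initialize` file name comes from the suggestion above.

```shell
# Hypothetical sketch: copy default files into a volume and record completion
# with a marker file, so that an interrupted run is repeated on the next start.
initialize_volume() {
    src_dir="$1"
    volume_dir="$2"
    marker="$volume_dir/.wordpress_initialize"

    # The marker only exists if an earlier run reached the end of the function.
    if [ -f "$marker" ]; then
        echo "already initialized"
        return 0
    fi

    # Copy (or re-copy) the files; leftovers from a partial copy are overwritten.
    mkdir -p "$volume_dir"
    cp -R "$src_dir/." "$volume_dir/" || return 1

    # Written last, only after the copy succeeded.
    touch "$marker"
    echo "initialized"
}
```

If the container is killed during the copy, the marker is never written, so the next start repeats the copy instead of treating the directory as complete.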

Workaround:

Increase the startupProbe failureThreshold.

bitnami/redis:6.2

Redis 6 and later ship with multithreaded I/O, which is beneficial in many production environments. I know this can be configured with a custom redis.conf, but exposing it through environment variables would make it easier.

Add "io-threads" and "io-threads-do-reads" environment variables to redis-env.sh.

Something like docker run -it -e IO_THREADS=4 -e IO_THREADS_DO_READS=yes xxx
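A rough sketch of how such variables might be translated into configuration is shown below. The variable names IO_THREADS and IO_THREADS_DO_READS follow the request above and are not existing image settings, and `append_io_threads_conf` is a hypothetical helper; only the `io-threads` and `io-threads-do-reads` directives are real Redis options.

```shell
# Hypothetical sketch: append I/O threading directives to a redis.conf file
# when the (assumed) environment variables are set.
append_io_threads_conf() {
    conf_file="$1"
    # Number of I/O threads, e.g. IO_THREADS=4
    if [ -n "${IO_THREADS:-}" ]; then
        printf 'io-threads %s\n' "$IO_THREADS" >> "$conf_file"
    fi
    # Whether reads are also threaded, e.g. IO_THREADS_DO_READS=yes
    if [ -n "${IO_THREADS_DO_READS:-}" ]; then
        printf 'io-threads-do-reads %s\n' "$IO_THREADS_DO_READS" >> "$conf_file"
    fi
}
```

When neither variable is set, the file is left untouched, so the defaults of the Redis version in use still apply.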

No response

bitnami/fluentd:latest

typhoeus is an optional dependency of https://github.com/uken/fluent-plugin-elasticsearch#http_backend

It helps fix the problem described in uken/fluent-plugin-elasticsearch#453 (comment)

Also, note that the optional gem oj is already included in the package, so why not install the typhoeus gem as well? (It's pretty small.)

Install the typhoeus gem.

Description

The master and slave databases are deployed on different servers, and both synchronize data normally. When the primary node shuts down, the standby node takes over normally. But when the former master node starts again, PostgreSQL cannot start normally.

Steps to reproduce the issue:

Describe the results you received:

Log:

pam-pgsql-0_1 | postgresql-repmgr 08:43:50.93 INFO ==> ** Starting PostgreSQL with Replication Manager setup **

pam-pgsql-0_1 | postgresql-repmgr 08:43:50.96 INFO ==> Validating settings in REPMGR_* env vars...

pam-pgsql-0_1 | postgresql-repmgr 08:43:50.97 INFO ==> Validating settings in POSTGRESQL_* env vars..

pam-pgsql-0_1 | postgresql-repmgr 08:43:50.98 INFO ==> Querying all partner nodes for common upstream node...

pam-pgsql-0_1 | postgresql-repmgr 08:43:51.05 INFO ==> Auto-detected primary node: '10.47.154.107:5432'

pam-pgsql-0_1 | postgresql-repmgr 08:43:51.06 INFO ==> Preparing PostgreSQL configuration...

pam-pgsql-0_1 | postgresql-repmgr 08:43:51.07 INFO ==> postgresql.conf file not detected. Generating it...

pam-pgsql-0_1 | postgresql-repmgr 08:43:51.13 INFO ==> Preparing repmgr configuration...

pam-pgsql-0_1 | postgresql-repmgr 08:43:51.13 INFO ==> Initializing Repmgr...

pam-pgsql-0_1 | postgresql-repmgr 08:43:51.14 INFO ==> Waiting for primary node...

pam-pgsql-0_1 | postgresql-repmgr 08:43:51.17 INFO ==> Cloning data from primary node...

pam-pgsql-0_1 | postgresql-repmgr 08:43:51.88 INFO ==> Initializing PostgreSQL database...

pam-pgsql-0_1 | postgresql-repmgr 08:43:51.89 INFO ==> Cleaning stale /bitnami/postgresql/data/standby.signal file

pam-pgsql-0_1 | postgresql-repmgr 08:43:51.90 INFO ==> Custom configuration /opt/bitnami/postgresql/conf/postgresql.conf detected

pam-pgsql-0_1 | postgresql-repmgr 08:43:51.90 INFO ==> Custom configuration /opt/bitnami/postgresql/conf/pg_hba.conf detected

pam-pgsql-0_1 | postgresql-repmgr 08:43:51.92 INFO ==> Deploying PostgreSQL with persisted data...

pam-pgsql-0_1 | postgresql-repmgr 08:43:51.94 INFO ==> Configuring replication parameters

pam-pgsql-0_1 | postgresql-repmgr 08:43:51.96 INFO ==> Configuring fsync

pam-pgsql-0_1 | postgresql-repmgr 08:43:51.99 INFO ==> Setting up streaming replication slave...

pam-pgsql-0_1 | postgresql-repmgr 08:43:52.02 INFO ==> Starting PostgreSQL in background...

pam-pgsql-0_1 | postgresql-repmgr 08:43:52.16 INFO ==> Unregistering standby node...

pam-pgsql-0_1 | postgresql-repmgr 08:43:52.28 INFO ==> Registering Standby node...

pam-pgsql-0_1 | postgresql-repmgr 08:43:52.33 INFO ==> Stopping PostgreSQL...

pam-pgsql-0_1 | postgresql-repmgr 08:43:53.35 INFO ==> ** PostgreSQL with Replication Manager setup finished! **

pam-pgsql-0_1 |

pam-pgsql-0_1 | postgresql-repmgr 08:43:53.38 INFO ==> Starting PostgreSQL in background...

pam-pgsql-0_1 | postgresql-repmgr 08:43:53.52 INFO ==> ** Starting repmgrd **

pam-pgsql-0_1 | [2020-10-17 08:43:53] [NOTICE] repmgrd (repmgrd 5.1.0) starting up

pam-pgsql-0_1 | [2020-10-17 08:43:53] [ERROR] PID file "/opt/bitnami/repmgr/tmp/repmgr.pid" exists and seems to contain a valid PID

pam-pgsql-0_1 | [2020-10-17 08:43:53] [HINT] if repmgrd is no longer alive, remove the file and restart repmgrd

pam-pgsql-0_1 | postgresql-repmgr 08:43:55.70

pam-pgsql-0_1 | postgresql-repmgr 08:43:55.70 Welcome to the Bitnami postgresql-repmgr container

pam-pgsql-0_1 | postgresql-repmgr 08:43:55.70 Subscribe to project updates by watching https://github.com/bitnami/bitnami-docker-postgresql-repmgr

pam-pgsql-0_1 | postgresql-repmgr 08:43:55.70 Submit issues and feature requests at https://github.com/bitnami/bitnami-docker-postgresql-repmgr/issues

pam-pgsql-0_1 | postgresql-repmgr 08:43:55.71

pam-pgsql-0_1 | postgresql-repmgr 08:43:55.72 INFO ==> ** Starting PostgreSQL with Replication Manager setup **

pam-pgsql-0_1 | postgresql-repmgr 08:43:55.75 INFO ==> Validating settings in REPMGR_* env vars...

pam-pgsql-0_1 | postgresql-repmgr 08:43:55.76 INFO ==> Validating settings in POSTGRESQL_* env vars..

pam-pgsql-0_1 | postgresql-repmgr 08:43:55.76 INFO ==> Querying all partner nodes for common upstream node...

pam-pgsql-0_1 | postgresql-repmgr 08:43:55.83 INFO ==> Auto-detected primary node: '10.47.154.107:5432'

pam-pgsql-0_1 | postgresql-repmgr 08:43:55.84 INFO ==> Preparing PostgreSQL configuration...

pam-pgsql-0_1 | postgresql-repmgr 08:43:55.84 INFO ==> postgresql.conf file not detected. Generating it...

pam-pgsql-0_1 | postgresql-repmgr 08:43:55.91 INFO ==> Preparing repmgr configuration...

pam-pgsql-0_1 | postgresql-repmgr 08:43:55.92 INFO ==> Initializing Repmgr...

pam-pgsql-0_1 | postgresql-repmgr 08:43:55.92 INFO ==> Waiting for primary node...

pam-pgsql-0_1 | postgresql-repmgr 08:43:55.96 INFO ==> Cloning data from primary node...

pam-pgsql-0_1 | postgresql-repmgr 08:43:56.68 INFO ==> Initializing PostgreSQL database...

pam-pgsql-0_1 | postgresql-repmgr 08:43:56.68 INFO ==> Cleaning stale /bitnami/postgresql/data/standby.signal file

pam-pgsql-0_1 | postgresql-repmgr 08:43:56.69 INFO ==> Custom configuration /opt/bitnami/postgresql/conf/postgresql.conf detected

pam-pgsql-0_1 | postgresql-repmgr 08:43:56.69 INFO ==> Custom configuration /opt/bitnami/postgresql/conf/pg_hba.conf detected

pam-pgsql-0_1 | postgresql-repmgr 08:43:56.71 INFO ==> Deploying PostgreSQL with persisted data...

pam-pgsql-0_1 | postgresql-repmgr 08:43:56.73 INFO ==> Configuring replication parameters

pam-pgsql-0_1 | postgresql-repmgr 08:43:56.75 INFO ==> Configuring fsync

pam-pgsql-0_1 | postgresql-repmgr 08:43:56.77 INFO ==> Setting up streaming replication slave...

pam-pgsql-0_1 | postgresql-repmgr 08:43:56.80 INFO ==> Starting PostgreSQL in background...

pam-pgsql-0_1 | postgresql-repmgr 08:43:56.92 INFO ==> Unregistering standby node...

pam-pgsql-0_1 | postgresql-repmgr 08:43:56.98 INFO ==> Registering Standby node...

pam-pgsql-0_1 | postgresql-repmgr 08:43:57.03 INFO ==> Stopping PostgreSQL...

pam-pgsql-0_1 | postgresql-repmgr 08:43:58.05 INFO ==> ** PostgreSQL with Replication Manager setup finished! **

pam-pgsql-0_1 |

pam-pgsql-0_1 | postgresql-repmgr 08:43:58.07 INFO ==> Starting PostgreSQL in background...

pam-pgsql-0_1 | postgresql-repmgr 08:43:58.20 INFO ==> ** Starting repmgrd **

pam-pgsql-0_1 | [2020-10-17 08:43:58] [NOTICE] repmgrd (repmgrd 5.1.0) starting up

pam-pgsql-0_1 | [2020-10-17 08:43:58] [ERROR] PID file "/opt/bitnami/repmgr/tmp/repmgr.pid" exists and seems to contain a valid PID

pam-pgsql-0_1 | [2020-10-17 08:43:58] [HINT] if repmgrd is no longer alive, remove the file and restart repmgrd

pam-pgsql-0_1 | postgresql-repmgr 08:44:01.82

pam-pgsql-0_1 | postgresql-repmgr 08:44:01.82 Welcome to the Bitnami postgresql-repmgr container

Describe the results you expected:

When the former master node starts again, PostgreSQL starts and rejoins as a standby node.

Additional information you deem important (e.g. issue happens only occasionally):

When I remove /opt/bitnami/repmgr/tmp/repmgr.pid, everything returns to normal.
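The manual removal could be scripted along the lines of the sketch below. It is a minimal illustration, not part of the image: `remove_stale_pid_file` is a hypothetical helper that deletes a PID file only when the PID it names is not running.

```shell
# Hypothetical sketch: remove a PID file when the process it names is gone.
remove_stale_pid_file() {
    pid_file="$1"
    # Nothing to do if the file does not exist.
    [ -f "$pid_file" ] || return 0
    pid="$(cat "$pid_file")"
    # kill -0 sends no signal; it only tests whether the PID can be signalled.
    if [ -n "$pid" ] && kill -0 "$pid" 2>/dev/null; then
        echo "process $pid is running; keeping $pid_file"
        return 1
    fi
    rm -f "$pid_file"
    echo "removed stale $pid_file"
}

# Example (path from the log above):
#   remove_stale_pid_file /opt/bitnami/repmgr/tmp/repmgr.pid
```

One caveat: after a container restart, PID numbers may be reused by unrelated processes, so a liveness check like this can still keep a stale file. In that case, removing the file unconditionally before repmgrd starts may be the more reliable option.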

bitnami/openldap:2.6.3

Hi,

I have set up OpenLDAP with this docker-compose file:

version: "3.9"

volumes:

openldap_data:

services:

openldap:

image: bitnami/openldap:2

ports:

- 1389:1389

- 1636:1636

environment:

- LDAP_ROOT=dc=example,dc=com

- LDAP_ADMIN_USERNAME=admin

- LDAP_ADMIN_PASSWORD=adminpassword

volumes:

- openldap_data:/bitnami/openldap

Then I execute this:

dn: ou=groups,dc=example,dc=com

objectclass: organizationalUnit

ou: groups

dn: ou=users,dc= example,dc=com

objectclass: organizationalUnit

ou: users

dn: cn=user01,ou=users,dc= example,dc=com

cn: user01,

objectclass: inetOrgPerson

objectclass: top

sn: bar01

uid: user01

dn: cn=group01,ou=groups,dc= example,dc=com

cn: group01

member: cn= user01,ou=users,dc= example,dc=com