This repository contains Reinforcement Learning algorithms used for research at Medipixel. The source code is updated frequently. We warmly welcome external contributors! :)

| BC agent on LunarLanderContinuous-v2 | RainbowIQN agent on PongNoFrameskip-v4 | SAC agent on Reacher-v2 |
|---|---|---|

Thanks goes to these wonderful people (emoji key):

Jinwoo Park (Curt) 💻 | Kyunghwan Kim 💻 | darthegg 💻 |

This project follows the all-contributors specification.

- Advantage Actor-Critic (A2C)

- Deep Deterministic Policy Gradient (DDPG)

- Proximal Policy Optimization Algorithms (PPO)

- Twin Delayed Deep Deterministic Policy Gradient Algorithm (TD3)

- Soft Actor Critic Algorithm (SAC)

- Behaviour Cloning (BC with DDPG, SAC)

- Prioritized Experience Replay (PER with DDPG)

- From Demonstrations (DDPGfD, SACfD, DQfD)

- Rainbow DQN

- Rainbow IQN (without DuelingNet) - DuelingNet degrades performance

We have tested each algorithm on some of the following environments.

Performance was measured at commit 4248057. Please note that these results won't be updated frequently.

We reproduced the performance of DDPG, TD3, and SAC on Reacher-v2 (MuJoCo). They reach scores of around -3.5 to -4.5. See W&B Log for more details.

RainbowIQN learns the game incredibly fast! It achieves the perfect score (21) within 100 episodes! The idea of RainbowIQN is roughly suggested in W. Dabney et al. See W&B Log for more details.

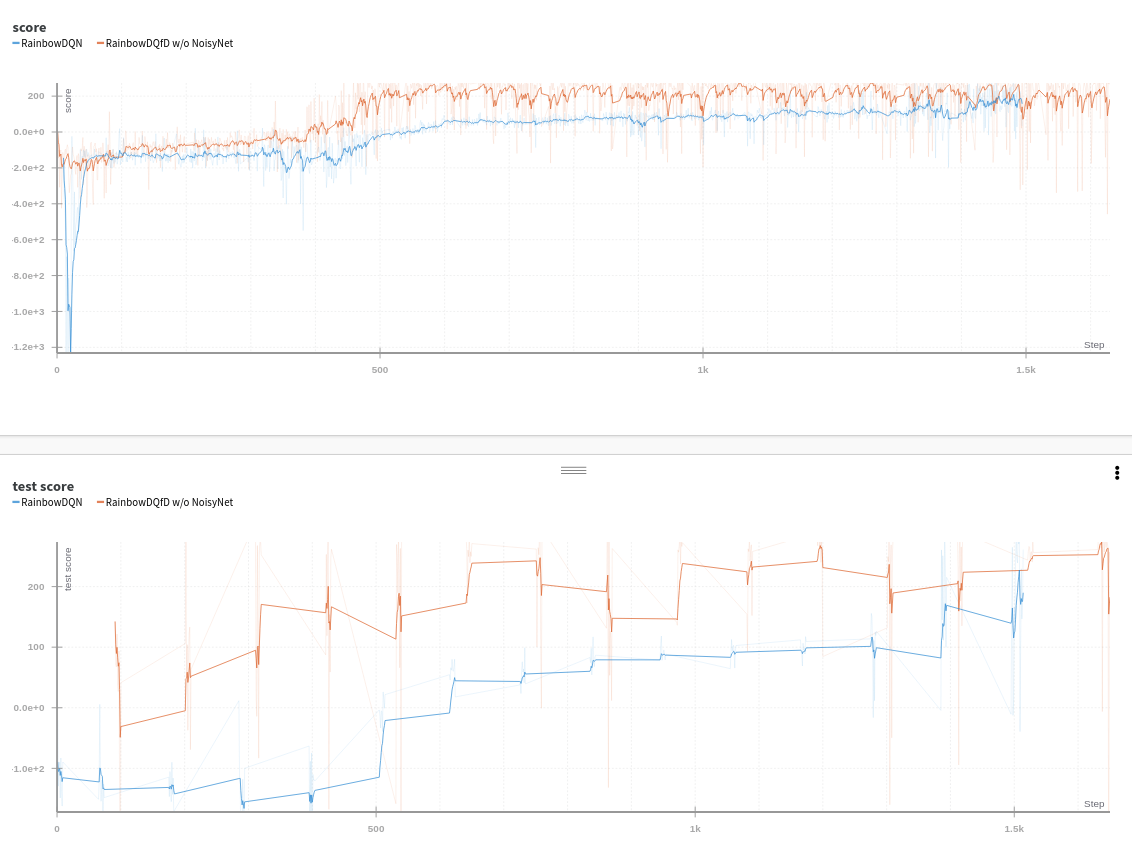

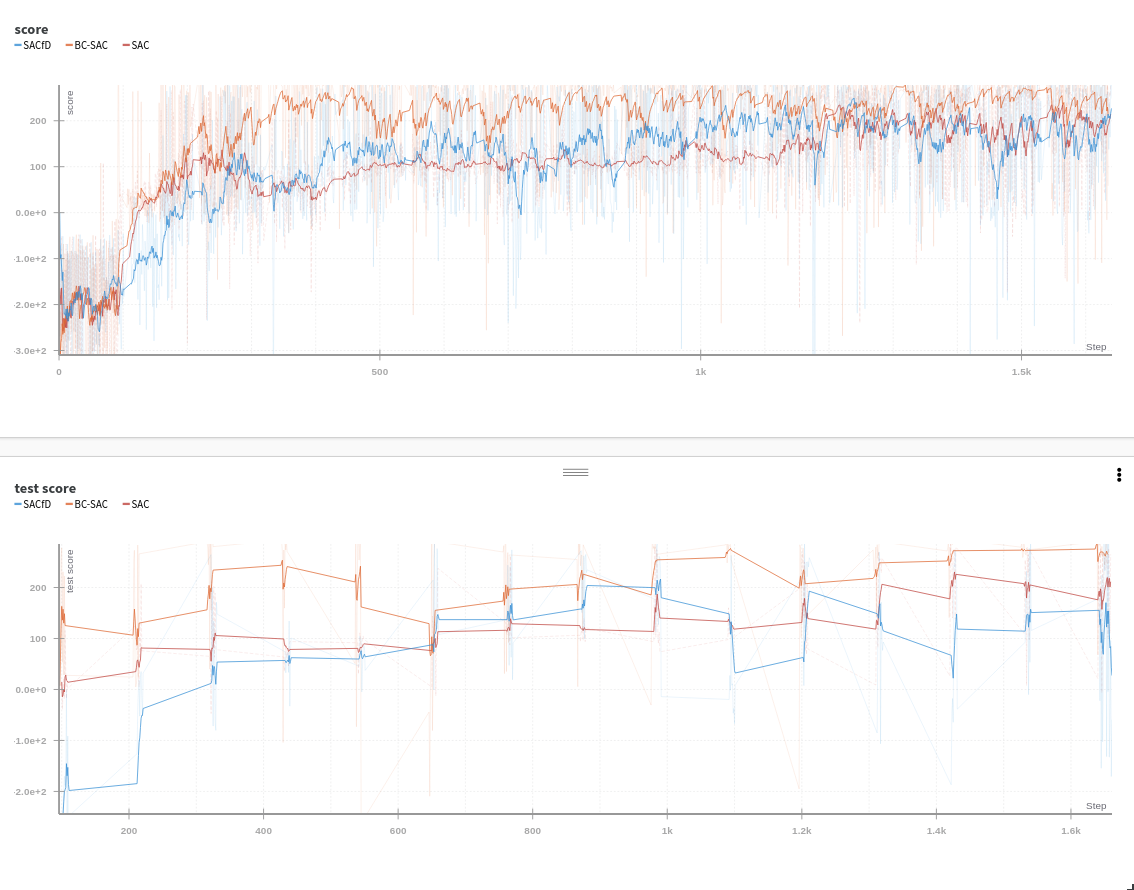

We used these environments only for a quick verification of each algorithm, so some of the experiments may not show the best performance.

To run MuJoCo environments (e.g. Reacher-v2), you need to acquire a MuJoCo license.

First, clone the repository.

git clone https://github.com/medipixel/rl_algorithms.git

cd rl_algorithms

Second, install the packages required to run the code:

make dep

You also need to run an additional command that configures the formatting and linting settings. Formatting and linting then run automatically whenever you commit code.

make dev

After running make dev, you can validate the code with the following commands.

make format # for formatting

make test # for linting

You can train or test an algorithm on env_name if examples/env_name/algorithm.py exists. (examples/env_name/algorithm.py contains the hyper-parameters and network details.)

python run_env_name.py --algo algorithm

e.g. running soft actor-critic on LunarLanderContinuous-v2.

python run_lunarlander_continuous_v2.py --algo sac <other-options>

e.g. running a custom agent, if you have written your own example: examples/env_name/ddpg-custom.py.

python run_env_name.py --algo ddpg-custom

You will see the agent run with the hyper-parameter and model settings you configured.
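
The file-existence rule above can be checked before launching a run. The following is a minimal sketch and is not part of the repository: `example_path` and `is_runnable` are hypothetical helper names, and only the examples/env_name/algorithm.py layout is taken from the description above.

```python
from pathlib import Path


def example_path(env_name: str, algo: str, root: Path = Path(".")) -> Path:
    """Build the path examples/<env_name>/<algo>.py under root (hypothetical helper)."""
    return root / "examples" / env_name / f"{algo}.py"


def is_runnable(env_name: str, algo: str, root: Path = Path(".")) -> bool:
    """Return True if an example file exists for this environment/algorithm pair."""
    return example_path(env_name, algo, root).is_file()
```

Calling `is_runnable("env_name", "ddpg-custom")` from the repository root would tell you whether a custom example file is in place, assuming the layout described above.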

In addition, various command-line arguments are available for running the algorithms. To see all the options of a run file, use:

python <run-file> -h

- `--test`: Start test mode (no training).

- `--off-render`: Turn off rendering.

- `--log`: Turn on logging using W&B.

- `--seed <int>`: Set random seed.

- `--save-period <int>`: Set saving period of model and optimizer parameters.

- `--max-episode-steps <int>`: Set maximum episode step number of the environment. If the number is less than or equal to 0, it uses the default maximum step number of the environment.

- `--episode-num <int>`: Set the number of episodes for training.

- `--render-after <int>`: Start rendering after the number of episodes.

- `--load-from <save-file-path>`: Load the saved models and optimizers at the beginning.
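
The options listed above map naturally onto Python's standard `argparse` module. The sketch below is an illustration only and is not the repository's actual parser: every default value and the `resolve_max_steps` helper are assumptions, and only the flag names and their described behavior come from the list above.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Declare the flags described above; all defaults are assumed, not the project's."""
    parser = argparse.ArgumentParser(description="Run an RL agent (illustrative sketch)")
    parser.add_argument("--algo", type=str, default="sac", help="algorithm name")
    parser.add_argument("--test", action="store_true", help="test mode (no training)")
    parser.add_argument("--off-render", action="store_true", help="turn off rendering")
    parser.add_argument("--log", action="store_true", help="turn on logging using W&B")
    parser.add_argument("--seed", type=int, default=0, help="random seed")
    parser.add_argument("--save-period", type=int, default=100, help="saving period")
    parser.add_argument("--max-episode-steps", type=int, default=0, help="max steps per episode")
    parser.add_argument("--episode-num", type=int, default=1000, help="training episodes")
    parser.add_argument("--render-after", type=int, default=0, help="episodes before rendering")
    parser.add_argument("--load-from", type=str, default=None, help="saved model path")
    return parser


def resolve_max_steps(requested: int, env_default: int) -> int:
    """A value <= 0 falls back to the environment's own default step limit."""
    return requested if requested > 0 else env_default


# Parse an explicit argument list so the sketch does not depend on sys.argv.
args = build_parser().parse_args(["--algo", "sac", "--max-episode-steps", "0", "--seed", "7"])
# env_default=1000 is a made-up value standing in for the environment's default.
print(resolve_max_steps(args.max_episode_steps, env_default=1000))
```

Because `--max-episode-steps` is 0 here, the helper falls back to the stand-in environment default, mirroring the rule described for that option.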

We use W&B to log network parameters and other data. For more details, read the W&B tutorial.

Class diagram at #135.

This won't be frequently updated.

- T. P. Lillicrap et al., "Continuous control with deep reinforcement learning." arXiv preprint arXiv:1509.02971, 2015.

- J. Schulman et al., "Proximal Policy Optimization Algorithms." arXiv preprint arXiv:1707.06347, 2017.

- S. Fujimoto et al., "Addressing function approximation error in actor-critic methods." arXiv preprint arXiv:1802.09477, 2018.

- T. Haarnoja et al., "Soft Actor-Critic: Off-Policy Maximum Entropy Deep Reinforcement Learning with a Stochastic Actor." arXiv preprint arXiv:1801.01290, 2018.

- T. Haarnoja et al., "Soft Actor-Critic Algorithms and Applications." arXiv preprint arXiv:1812.05905, 2018.

- T. Schaul et al., "Prioritized Experience Replay." arXiv preprint arXiv:1511.05952, 2015.

- M. Andrychowicz et al., "Hindsight Experience Replay." arXiv preprint arXiv:1707.01495, 2017.

- A. Nair et al., "Overcoming Exploration in Reinforcement Learning with Demonstrations." arXiv preprint arXiv:1709.10089, 2017.

- M. Vecerik et al., "Leveraging Demonstrations for Deep Reinforcement Learning on Robotics Problems with Sparse Rewards." arXiv preprint arXiv:1707.08817, 2017.

- V. Mnih et al., "Human-level control through deep reinforcement learning." Nature, 518 (7540):529–533, 2015.

- H. van Hasselt et al., "Deep Reinforcement Learning with Double Q-learning." arXiv preprint arXiv:1509.06461, 2015.

- Z. Wang et al., "Dueling Network Architectures for Deep Reinforcement Learning." arXiv preprint arXiv:1511.06581, 2015.

- T. Hester et al., "Deep Q-learning from Demonstrations." arXiv preprint arXiv:1704.03732, 2017.

- M. G. Bellemare et al., "A Distributional Perspective on Reinforcement Learning." arXiv preprint arXiv:1707.06887, 2017.

- M. Fortunato et al., "Noisy Networks for Exploration." arXiv preprint arXiv:1706.10295, 2017.

- M. Hessel et al., "Rainbow: Combining Improvements in Deep Reinforcement Learning." arXiv preprint arXiv:1710.02298, 2017.

- W. Dabney et al., "Implicit Quantile Networks for Distributional Reinforcement Learning." arXiv preprint arXiv:1806.06923, 2018.