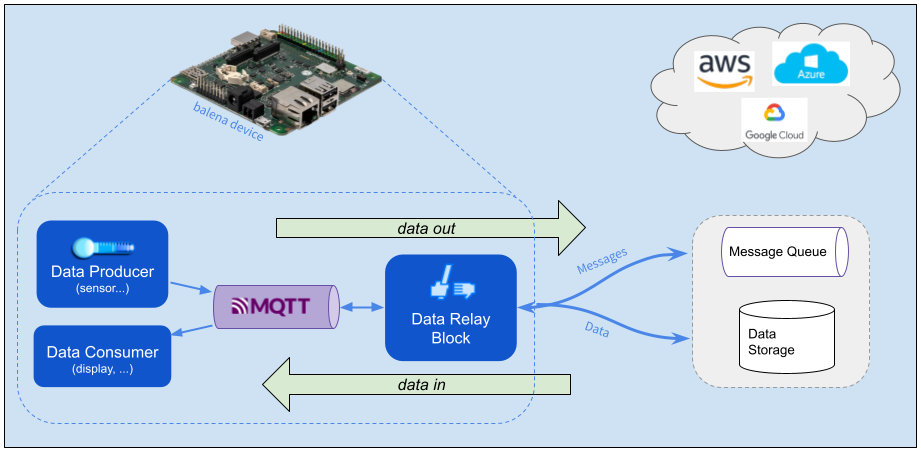

Easily send and receive application data with major cloud providers or other hosts via messaging or specialized services.

Highlights

- Messaging: Send and receive messages to and from other hosts

- InfluxDB: Send data to the popular time series database

- Major cloud providers: AWS, Azure or Google Cloud Pub/Sub

Documentation

Head over to our docs to get started and learn how the block works.

Motivation

Cloud providers offer a variety of services for consuming and providing application data, but each one works differently. The Data Relay block provides a common, simple way to exchange application data with the cloud or with other hosts.

This project is in active development, so if you have any feature requests or issues, please submit them here on GitHub. PRs are welcome, too.

License

The Data Relay block is free software and may be redistributed under the terms specified in the license.