
Multi Model Server (MMS) is a flexible and easy-to-use tool for serving deep learning models trained using any ML/DL framework.

Use the MMS Server CLI, or the pre-configured Docker images, to start a service that sets up HTTP endpoints to handle model inference requests.

A quick overview and examples for both serving and packaging are provided below. Detailed documentation and examples are provided in the docs folder.

Join our Slack channel to get in touch with the development team, ask questions, find out what's cooking, and more!


Before proceeding further with this document, make sure you have the following prerequisites.

- Ubuntu, CentOS, or macOS. Windows support is experimental. The following instructions focus on Linux and macOS only.
- Python - Multi Model Server requires Python to run the workers.
- pip - pip is a Python package management system.
- Java 8 - Multi Model Server requires Java 8 to start. You have the following options for installing Java 8:

  For Ubuntu:

  ```bash
  sudo apt-get install openjdk-8-jre-headless
  ```

  For CentOS:

  ```bash
  sudo yum install java-1.8.0-openjdk
  ```

  For macOS:

  ```bash
  brew tap homebrew/cask-versions
  brew update
  brew cask install adoptopenjdk8
  ```
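Since MMS requires Java 8 to start, you may want to confirm which Java version is on your `PATH` before going further. The following is a minimal Python 3.7+ sketch, not an MMS tool; the helper names are hypothetical. It runs `java -version` (which prints its banner to stderr) and extracts the major version, treating legacy strings such as `1.8.0_292` as Java 8.

```python
import re
import subprocess


def java_major_version(version_output):
    """Extract the major Java version from `java -version` output.

    Legacy releases report "1.8.0_xxx" (major version 8); newer ones report
    "11.0.x", "17", and so on. Returns None if no version string is found.
    """
    match = re.search(r'version "(\d+)(?:\.(\d+))?', version_output)
    if match is None:
        return None
    first = int(match.group(1))
    if first == 1 and match.group(2) is not None:
        return int(match.group(2))  # "1.8" -> 8
    return first


def installed_java_major():
    """Run `java -version` and return its major version, or None if java is missing."""
    try:
        result = subprocess.run(["java", "-version"], capture_output=True, text=True)
    except FileNotFoundError:
        return None
    # `java -version` writes to stderr, not stdout.
    return java_major_version(result.stderr)
```

A result of `8` from `installed_java_major()` means the Java on your `PATH` matches the requirement above.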

Step 1: Set Up a Virtual Environment

We recommend installing and running Multi Model Server in a virtual environment. It's good practice to install and run all Python dependencies in a virtual environment: this isolates them from the system Python and makes dependency management easier.

One option is Virtualenv, a tool for creating isolated Python environments. You can install it and activate a virtualenv for Python 2.7 as follows:

```bash
pip install virtualenv
```

Then create a virtual environment:

```bash
# Assuming the Python 2.7 interpreter is at /usr/local/bin/python2.7
virtualenv -p /usr/local/bin/python2.7 /tmp/pyenv2

# Enter this virtual environment as follows
source /tmp/pyenv2/bin/activate
```

Refer to the Virtualenv documentation for further information.
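To confirm that the activated environment is the one actually in use, you can ask the interpreter itself. This is a minimal sketch with a hypothetical helper `in_virtualenv()`, written to run under both Python 2.7 and 3. It relies on legacy virtualenv releases setting `sys.real_prefix`, while `venv` and newer virtualenv releases make `sys.prefix` differ from `sys.base_prefix`.

```python
import sys


def in_virtualenv():
    """Return True if the running interpreter belongs to a virtual environment."""
    # Legacy virtualenv sets sys.real_prefix to the original installation prefix.
    if hasattr(sys, "real_prefix"):
        return True
    # venv (and newer virtualenv) keep the original prefix in sys.base_prefix;
    # Python 2.7 without virtualenv has no base_prefix, so fall back to sys.prefix.
    base_prefix = getattr(sys, "base_prefix", sys.prefix)
    return sys.prefix != base_prefix


if __name__ == "__main__":
    print("virtualenv active: %s" % in_virtualenv())
    print("interpreter: %s" % sys.executable)
```

Run it with the `python` from your activated environment; the interpreter path should point inside `/tmp/pyenv2`.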

Step 2: Install MXNet

MMS won't install the MXNet engine by default. If it isn't already installed in your virtual environment, you must install one of the MXNet pip packages.

For CPU inference, `mxnet-mkl` is recommended. Install it as follows:

```bash
# Recommended for running Multi Model Server on CPU hosts
pip install mxnet-mkl
```

For GPU inference, `mxnet-cu92mkl` is recommended. Install it as follows:

```bash
# Recommended for running Multi Model Server on GPU hosts
pip install mxnet-cu92mkl
```

Step 3: Install or Upgrade MMS

Install MMS as follows:

```bash
# Install the latest released version of multi-model-server
pip install multi-model-server
```

To upgrade from a previous version of multi-model-server, please refer to the migration reference document.

Notes:

- A minimal version of model-archiver will be installed with MMS as a dependency. See model-archiver for more options and details.
- See the advanced installation page for more options and troubleshooting.
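After installation, a quick way to check that the engine and MMS landed in the active environment is to try importing them. This is a minimal sketch; the helper `importable()` is hypothetical, and the import names `mxnet`, `mms`, and `model_archiver` are assumptions about how the `mxnet-*`, `multi-model-server`, and model-archiver packages expose themselves.

```python
import importlib


def importable(module_names):
    """Map each module name to True if it can be imported, else False."""
    status = {}
    for name in module_names:
        try:
            importlib.import_module(name)
            status[name] = True
        except ImportError:
            status[name] = False
    return status


if __name__ == "__main__":
    # Assumed import names for the engine, the server, and the archiver.
    for name, ok in sorted(importable(["mxnet", "mms", "model_archiver"]).items()):
        print("%-15s %s" % (name, "OK" if ok else "MISSING"))
```

A `MISSING` entry usually means the package was installed into a different interpreter than the one you are running.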

Once installed, you can get MMS up and running very quickly. Try out `--help` to see all the available CLI options:

```bash
multi-model-server --help
```

For this quick start, we'll skip over most of the features, but be sure to take a look at the full server docs when you're ready.

Here is an easy example for serving an object classification model:

```bash
multi-model-server --start --models squeezenet=https://s3.amazonaws.com/model-server/model_archive_1.0/squeezenet_v1.1.mar
```

With the command above executed, MMS is running on your host and listening for inference requests.

Note that if you specify models when starting MMS, it automatically scales the backend workers to the number of available vCPUs (on a CPU instance) or the number of available GPUs (on a GPU instance). On powerful hosts with many compute resources (vCPUs or GPUs), this startup and autoscaling process can take considerable time. To minimize MMS startup time, you can skip registering and scaling models at startup and do it later with the corresponding Management API calls, which also gives you finer-grained control over how many resources are allocated to each model.
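As a sketch of deferring registration, you could start MMS with `multi-model-server --start` and no `--models`, then register and scale the model through the Management API. The Python 3 code below only builds the requests. It assumes the management API listens on its default `http://127.0.0.1:8081`, that `POST /models` accepts the `url`, `model_name`, `initial_workers`, and `synchronous` query parameters, and that `PUT /models/{model_name}` accepts `min_worker`; check the Management API documentation for the parameters your MMS version supports. The helper names are hypothetical.

```python
from urllib.parse import urlencode
from urllib.request import Request

# Assumption: the management API is bound to its default local address.
MANAGEMENT_URL = "http://127.0.0.1:8081"


def register_request(model_url, model_name, initial_workers=1, synchronous=True):
    """Build a POST /models request that registers a model archive (.mar)."""
    query = urlencode({
        "url": model_url,
        "model_name": model_name,
        "initial_workers": initial_workers,
        # Ask the server to reply only after the workers are up.
        "synchronous": str(synchronous).lower(),
    })
    return Request(MANAGEMENT_URL + "/models?" + query, method="POST")


def scale_request(model_name, min_worker):
    """Build a PUT /models/{model_name} request that sets the minimum worker count."""
    query = urlencode({"min_worker": min_worker})
    return Request("%s/models/%s?%s" % (MANAGEMENT_URL, model_name, query), method="PUT")
```

With the server running, passing these requests to `urllib.request.urlopen` would register SqueezeNet with one worker and then scale it up only when and as far as you decide.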

To test it out, open a new terminal window next to the one running MMS. Use curl to download one of these cute pictures of a kitten; curl's `-O` flag saves it under its remote file name, kitten.jpg. Then use curl to POST the kitten's image to the MMS predict endpoint.

The example below provides a shortcut for these steps:

```bash
curl -O https://s3.amazonaws.com/model-server/inputs/kitten.jpg
curl -X POST http://127.0.0.1:8080/predictions/squeezenet -T kitten.jpg
```

The predict endpoint returns a prediction response in JSON. It will look something like the following result:

```json
[
  {
    "probability": 0.8582232594490051,
    "class": "n02124075 Egyptian cat"
  },
  {
    "probability": 0.09159987419843674,
    "class": "n02123045 tabby, tabby cat"
  },
  {
    "probability": 0.0374876894056797,
    "class": "n02123159 tiger cat"
  },
  {
    "probability": 0.006165083032101393,
    "class": "n02128385 leopard, Panthera pardus"
  },
  {
    "probability": 0.0031716004014015198,
    "class": "n02127052 lynx, catamount"
  }
]
```

You will see this result in the response to your curl call to the predict endpoint, and in the server logs in the terminal window running MMS. It's also logged locally with metrics.
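If you would rather call the endpoint from code than from curl, here is a minimal Python 3 sketch of the same request plus a helper that picks the top class from a response shaped like the one above. The helper names are hypothetical. The explicit `Content-Type: application/octet-stream` header is a precaution: without it, urllib labels the body as form data, which the server might try to parse as a form instead of treating it as raw image bytes.

```python
import json
from urllib.request import Request, urlopen

# Assumption: MMS serves the "squeezenet" model on the default inference address.
PREDICT_URL = "http://127.0.0.1:8080/predictions/squeezenet"


def build_predict_request(image_bytes, url=PREDICT_URL):
    """Build a POST carrying the raw image as the body, like `curl -X POST ... -T kitten.jpg`."""
    return Request(url, data=image_bytes, method="POST",
                   headers={"Content-Type": "application/octet-stream"})


def top_prediction(response_body):
    """Return (class, probability) for the highest-probability entry in the JSON list."""
    predictions = json.loads(response_body)
    best = max(predictions, key=lambda p: p["probability"])
    return best["class"], best["probability"]


# Usage (needs a running server and kitten.jpg in the current directory):
#   with open("kitten.jpg", "rb") as f:
#       body = urlopen(build_predict_request(f.read())).read()
#   print(top_prediction(body))
```

With the sample response above, `top_prediction` would return the Egyptian cat entry and its probability.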

Other models can be downloaded from the model zoo, so try out some of those as well.

Now you've seen how easy it can be to serve a deep learning model with MMS! Would you like to know more?

To stop the currently running model server instance, run the following command:

```bash
multi-model-server --stop
```

You should see output indicating that multi-model-server has stopped.
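To confirm from a script that the server has actually released its ports after `--stop`, you can probe them with a plain TCP connection. This Python 3 sketch assumes the default inference port 8080 (used by the curl example above) and management port 8081; `port_in_use()` is a hypothetical helper, not an MMS tool.

```python
import socket


def port_in_use(host="127.0.0.1", port=8080, timeout=1.0):
    """Return True if something accepts TCP connections on host:port."""
    s = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    s.settimeout(timeout)
    try:
        # connect_ex returns 0 on success instead of raising an exception.
        return s.connect_ex((host, port)) == 0
    finally:
        s.close()
```

Once the server is fully stopped, `any(port_in_use(port=p) for p in (8080, 8081))` should be `False`.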

MMS enables you to package all of your model artifacts into a single model archive. This makes it easy to share and deploy your models. To package a model, check out the model archiver documentation.

- MMS doesn't provide authentication. You need to put your own authentication proxy in front of MMS.
- MMS doesn't provide throttling, so it is vulnerable to DDoS attacks. We recommend running MMS behind a firewall.
- By default, MMS only allows localhost access; see Network configuration for details.
- SSL is not enabled by default; see Enable SSL for details.
- MMS uses a config.properties file to configure its behavior; see the Manage MMS page for details on how to configure MMS.
- For better security, we recommend running MMS inside a Docker container. This project includes Dockerfiles to build containers recommended for production deployments. These containers demonstrate how to customize your own production MMS deployment. The basic usage can be found in the Docker readme.
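Because MMS only allows localhost access unless config.properties says otherwise, one pre-deployment check is to flag configured addresses that expose the APIs beyond the loopback interface. This is a minimal Python 3 sketch with hypothetical helpers. It assumes the `inference_address` and `management_address` keys described on the configuration pages, and its parser handles only plain `key=value` lines, not the full Java properties syntax (`:` separators, line continuations, escapes).

```python
from urllib.parse import urlparse

LOOPBACK_HOSTS = {"127.0.0.1", "localhost", "::1"}


def parse_properties(text):
    """Parse simple key=value lines, skipping blank lines and # or ! comments."""
    props = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line[0] in "#!":
            continue
        key, sep, value = line.partition("=")
        if sep:
            props[key.strip()] = value.strip()
    return props


def exposed_addresses(props, keys=("inference_address", "management_address")):
    """Return the configured addresses whose host is not a loopback address."""
    exposed = {}
    for key in keys:
        address = props.get(key)
        if address is None:
            continue  # unset: assume the localhost-only default applies
        host = urlparse(address).hostname
        if host not in LOOPBACK_HOSTS:
            exposed[key] = address
    return exposed
```

Any address this reports (for example one bound to `0.0.0.0`) is reachable from other hosts and should sit behind the authentication proxy and firewall recommended above.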

Browse over to the Docs readme for the full index of documentation. This includes more examples, how to customize the API service, API endpoint details, and more.

Here are some example demos of deep learning applications, powered by MMS:

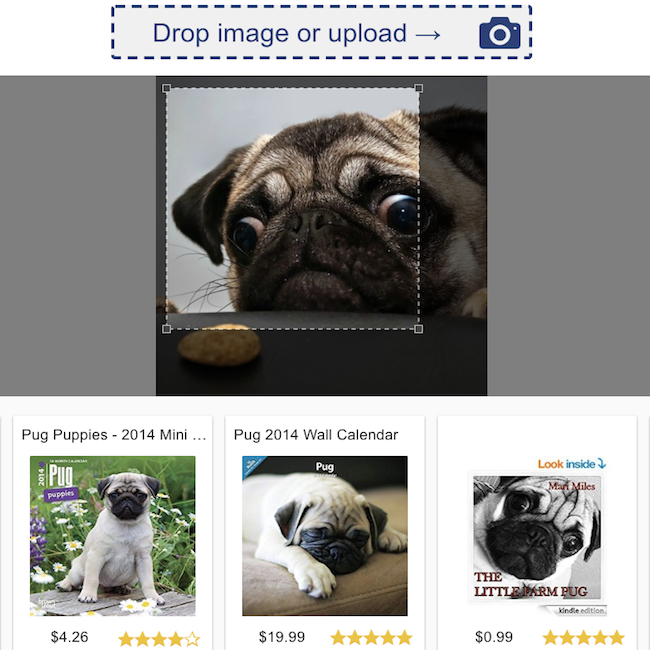

- Product Review Classification
- Visual Search
- Facial Emotion Recognition
- Neural Style Transfer

We welcome all contributions!

To file a bug or request a feature, please file a GitHub issue. Pull requests are welcome.