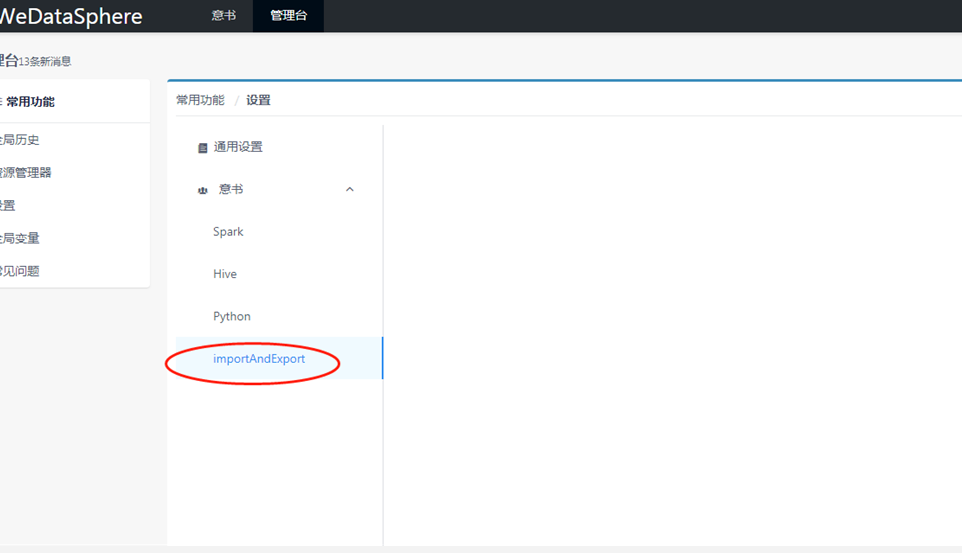

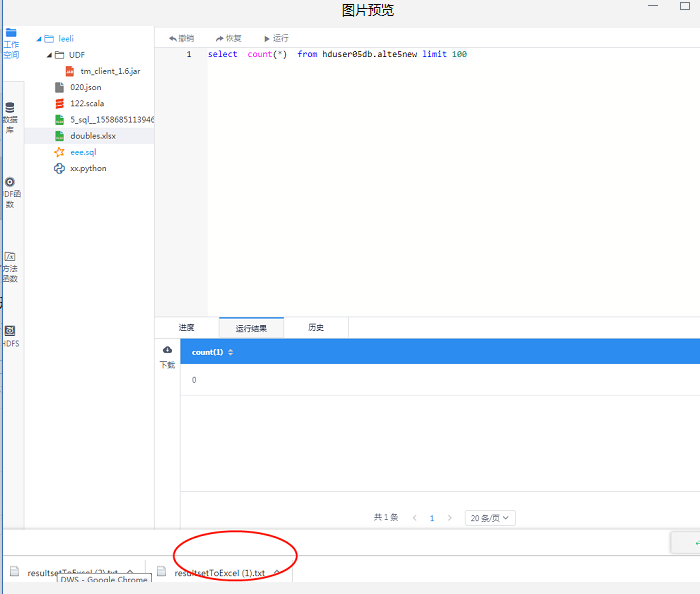

Run the following Scala script in the Spark engine:

```scala
val source = """{"path":"/tmp/linkis/leeli/Result_asda.sql_07221642.csv","pathType":"share","encoding":"utf-8","fieldDelimiter":",","hasHeader":true,"sheet":"","quote":"","escapeQuotes":false}"""

val destination = """{"database":"leeli_ind","tableName":"Result_asda","importData":false,"isPartition":false,"partition":"","partitionValue":"","isOverwrite":false,"columns":[{"name":"Pregnancies","comment":"","type":"string","dateFormat":""},{"name":"Glucose","comment":"","type":"string","dateFormat":""},{"name":"BloodPressure","comment":"","type":"string","dateFormat":""},{"name":"SkinThickness","comment":"","type":"string","dateFormat":""},{"name":"Insulin","comment":"","type":"string","dateFormat":""},{"name":"BMI","comment":"","type":"string","dateFormat":""},{"name":"DiabetesPedigreeFunction","comment":"","type":"string","dateFormat":""},{"name":"Age","comment":"","type":"string","dateFormat":""},{"name":"Outcome","comment":"","type":"string","dateFormat":""}]}"""

com.webank.wedatasphere.linkis.engine.imexport.LoadData.loadDataToTable(spark, source, destination)
```
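Because `hasHeader` is `true` in `source`, one quick check worth doing when debugging this kind of import is to confirm that the CSV header line matches the column names declared in `destination`. The sketch below is a hypothetical helper, not part of Linkis: `HeaderCheck` and `diff` are illustrative names, the column list is copied by hand from the `destination` JSON above, and the header line in `main` is an assumed example. Replace it with the actual first line of the file.

```scala
// Hypothetical helper (not a Linkis API): compares a CSV header line
// with the column names declared in the `destination` JSON.
object HeaderCheck {
  // Copied by hand from the `columns` array in `destination` (assumption: kept in sync manually).
  val declaredColumns: Seq[String] = Seq(
    "Pregnancies", "Glucose", "BloodPressure", "SkinThickness",
    "Insulin", "BMI", "DiabetesPedigreeFunction", "Age", "Outcome")

  /** Returns (declared columns missing from the header, header fields that are not declared). */
  def diff(headerLine: String, delimiter: String = ","): (Seq[String], Seq[String]) = {
    // Quote the delimiter so characters such as "|" are not treated as regex syntax.
    val header: Seq[String] =
      headerLine.split(java.util.regex.Pattern.quote(delimiter), -1).map(_.trim).toSeq
    val missing = declaredColumns.filterNot(c => header.contains(c))
    val extra = header.filterNot(h => declaredColumns.contains(h))
    (missing, extra)
  }

  def main(args: Array[String]): Unit = {
    // Assumed example header; paste the real first line of the CSV here.
    val header = "Pregnancies,Glucose,BloodPressure,SkinThickness,Insulin,BMI,DiabetesPedigreeFunction,Age,Outcome"
    val (missing, extra) = diff(header)
    println(s"missing=$missing extra=$extra")
  }
}
```

If both lists come back empty, the header and the declared columns agree, and the cause of the failure is more likely elsewhere (for example in the environment or in the log shown below).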

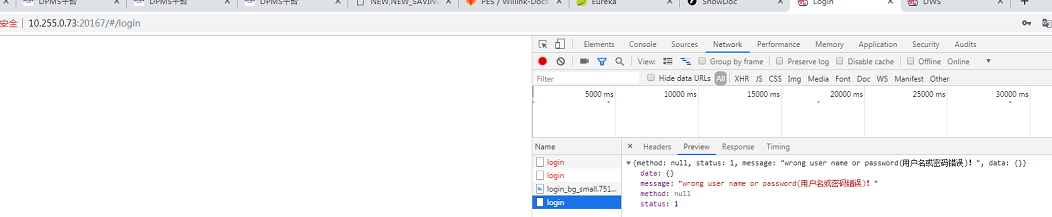

Error log:

```
2019-07-25 18:21:29.304 ERROR [sparkEngineEngine-Thread-4] org.apache.hadoop.hdfs.KeyProviderCache 87 createKeyProviderURI - Could not find uri with key [dfs.encryption.key.provider.uri] to create a keyProvider !!
```