English| 简体中文

Alink是基于Flink的通用算法平台,由阿里巴巴计算平台PAI团队研发,欢迎大家加入Alink开源用户钉钉群进行交流。

- Alink组件列表:http://alinklab.cn/manual/index.html

- Alink教程:http://alinklab.cn/tutorial/index.html

- Alink插件下载器:https://www.yuque.com/pinshu/alink_guide/plugin_downloader

- Alink教程(Java版):http://alinklab.cn/tutorial/book_java.html

- Alink教程(Python版):http://alinklab.cn/tutorial/book_python.html

- 源代码地址:https://github.com/alibaba/Alink/tree/master/tutorial

- Java版的数据和资料链接:http://alinklab.cn/tutorial/book_java_00_reference.html

- Python版的数据和资料链接:http://alinklab.cn/tutorial/book_python_00_reference.html

- Alink教程(Java版)代码的运行攻略 http://alinklab.cn/tutorial/book_java_00_code_help.html

- Alink教程(Python版)代码的运行攻略 http://alinklab.cn/tutorial/book_python_00_code_help.html

- PyAlink 根据 Alink 所支持的 Flink 版本提供不同的 Python 包:

其中,

pyalink包对应为 Alink 所支持的最新 Flink 版本,当前为 1.13,而pyalink-flink-***为旧版本的 Flink 版本,当前提供pyalink-flink-1.12,pyalink-flink-1.11,pyalink-flink-1.10和pyalink-flink-1.9。 - Python 包的版本号与 Alink 的版本号一致,例如

1.6.2。

####安装步骤:

- 确保使用环境中有Python3,版本限于 3.6,3.7 和 3.8。

- 确保使用环境中安装有 Java 8。

- 使用 pip 命令进行安装:

pip install pyalink、pip install pyalink-flink-1.12、pip install pyalink-flink-1.11、pip install pyalink-flink-1.10或者pip install pyalink-flink-1.9。

pyalink和pyalink-flink-***不能同时安装,也不能与旧版本同时安装。 如果之前安装过pyalink或者pyalink-flink-***,请使用pip uninstall pyalink或者pip uninstall pyalink-flink-***卸载之前的版本。- 出现

pip安装缓慢或不成功的情况,可以参考这篇文章修改pip源,或者直接使用下面的链接下载 whl 包,然后使用pip安装: - 如果有多个版本的 Python,可能需要使用特定版本的

pip,比如pip3;如果使用 Anaconda,则需要在 Anaconda 命令行中进行安装。

可以通过 Jupyter Notebook 来开始使用 PyAlink,能获得更好的使用体验。

使用步骤:

- 在命令行中启动Jupyter:

jupyter notebook,并新建 Python 3 的 Notebook 。 - 导入 pyalink 包:

from pyalink.alink import *。 - 使用方法创建本地运行环境:

useLocalEnv(parallism, flinkHome=None, config=None)。 其中,参数parallism表示执行所使用的并行度;flinkHome为 flink 的完整路径,一般情况不需要设置;config为Flink所接受的配置参数。运行后出现如下所示的输出,表示初始化运行环境成功:

JVM listening on ***

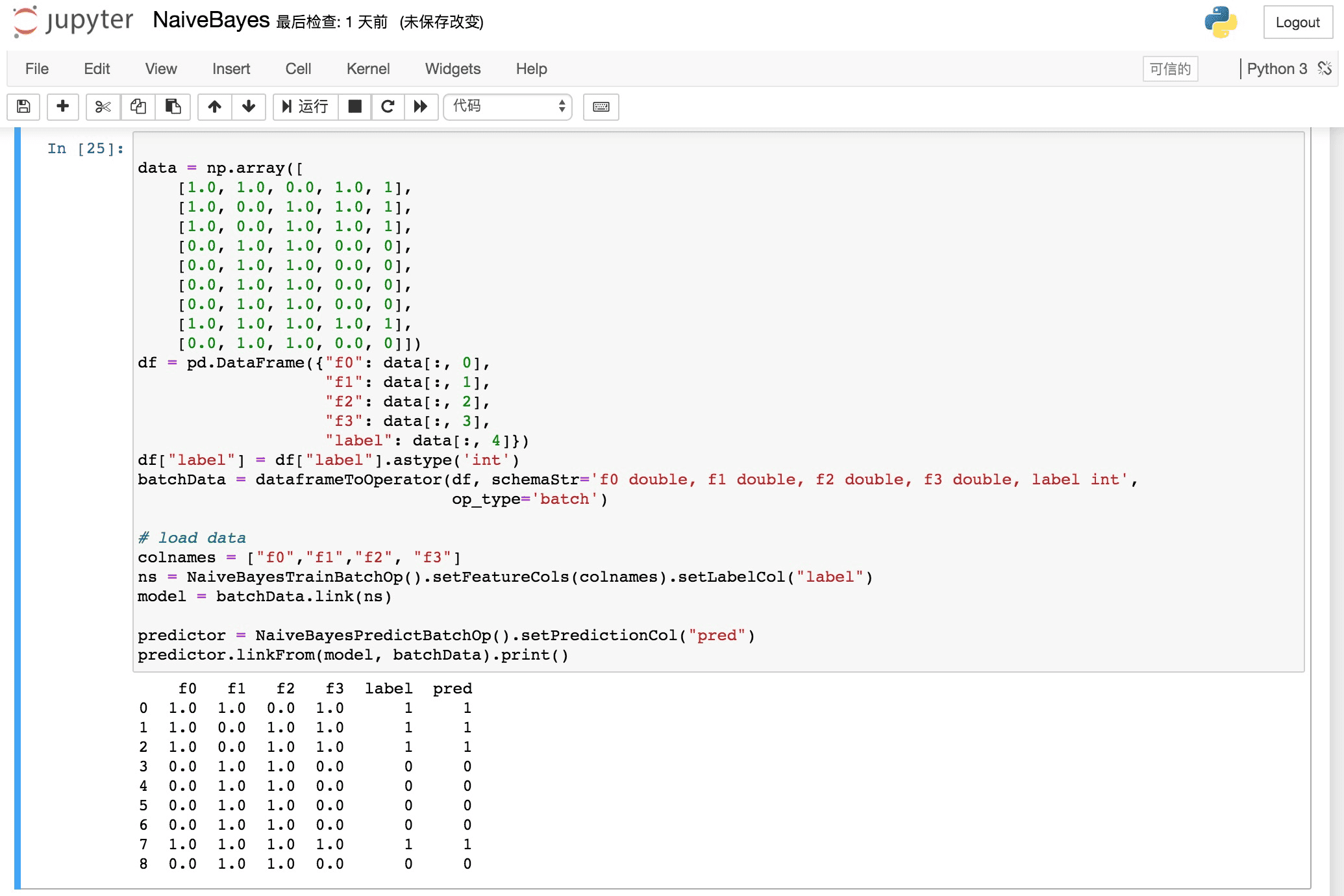

- 开始编写 PyAlink 代码,例如:

source = CsvSourceBatchOp()\

.setSchemaStr("sepal_length double, sepal_width double, petal_length double, petal_width double, category string")\

.setFilePath("https://alink-release.oss-cn-beijing.aliyuncs.com/data-files/iris.csv")

res = source.select(["sepal_length", "sepal_width"])

df = res.collectToDataframe()

print(df)在 PyAlink 中,算法组件提供的接口基本与 Java API 一致,即通过默认构造方法创建一个算法组件,然后通过 setXXX 设置参数,通过 link/linkTo/linkFrom 与其他组件相连。

这里利用 Jupyter Notebook 的自动补全机制可以提供书写便利。

对于批式作业,可以通过批式组件的 print/collectToDataframe/collectToDataframes 等方法或者 BatchOperator.execute() 来触发执行;对于流式作业,则通过 StreamOperator.execute() 来启动作业。

String URL = "https://alink-release.oss-cn-beijing.aliyuncs.com/data-files/iris.csv";

String SCHEMA_STR = "sepal_length double, sepal_width double, petal_length double, petal_width double, category string";

BatchOperator data = new CsvSourceBatchOp()

.setFilePath(URL)

.setSchemaStr(SCHEMA_STR);

VectorAssembler va = new VectorAssembler()

.setSelectedCols(new String[]{"sepal_length", "sepal_width", "petal_length", "petal_width"})

.setOutputCol("features");

KMeans kMeans = new KMeans().setVectorCol("features").setK(3)

.setPredictionCol("prediction_result")

.setPredictionDetailCol("prediction_detail")

.setReservedCols("category")

.setMaxIter(100);

Pipeline pipeline = new Pipeline().add(va).add(kMeans);

pipeline.fit(data).transform(data).print();<dependency>

<groupId>com.alibaba.alink</groupId>

<artifactId>alink_core_flink-1.13_2.11</artifactId>

<version>1.6.2</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-streaming-scala_2.11</artifactId>

<version>1.13.0</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-table-planner_2.11</artifactId>

<version>1.13.0</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-clients_2.11</artifactId>

<version>1.13.0</version>

</dependency><dependency>

<groupId>com.alibaba.alink</groupId>

<artifactId>alink_core_flink-1.12_2.11</artifactId>

<version>1.6.2</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-streaming-scala_2.11</artifactId>

<version>1.12.1</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-table-planner_2.11</artifactId>

<version>1.12.1</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-clients_2.11</artifactId>

<version>1.12.1</version>

</dependency><dependency>

<groupId>com.alibaba.alink</groupId>

<artifactId>alink_core_flink-1.11_2.11</artifactId>

<version>1.6.2</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-streaming-scala_2.11</artifactId>

<version>1.11.0</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-table-planner_2.11</artifactId>

<version>1.11.0</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-clients_2.11</artifactId>

<version>1.11.0</version>

</dependency><dependency>

<groupId>com.alibaba.alink</groupId>

<artifactId>alink_core_flink-1.10_2.11</artifactId>

<version>1.6.2</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-streaming-scala_2.11</artifactId>

<version>1.10.0</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-table-planner_2.11</artifactId>

<version>1.10.0</version>

</dependency><dependency>

<groupId>com.alibaba.alink</groupId>

<artifactId>alink_core_flink-1.9_2.11</artifactId>

<version>1.6.2</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-streaming-scala_2.11</artifactId>

<version>1.9.0</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-table-planner_2.11</artifactId>

<version>1.9.0</version>

</dependency>- 准备Flink集群

wget https://archive.apache.org/dist/flink/flink-1.13.0/flink-1.13.0-bin-scala_2.11.tgz

tar -xf flink-1.13.0-bin-scala_2.11.tgz && cd flink-1.13.0

./bin/start-cluster.sh- 准备Alink算法包

git clone https://github.com/alibaba/Alink.git

# add <scope>provided</scope> in pom.xml of alink_examples.

cd Alink && mvn -Dmaven.test.skip=true clean package shade:shade- 运行Java示例

./bin/flink run -p 1 -c com.alibaba.alink.ALSExample [path_to_Alink]/examples/target/alink_examples-1.5-SNAPSHOT.jar

# ./bin/flink run -p 1 -c com.alibaba.alink.GBDTExample [path_to_Alink]/examples/target/alink_examples-1.5-SNAPSHOT.jar

# ./bin/flink run -p 1 -c com.alibaba.alink.KMeansExample [path_to_Alink]/examples/target/alink_examples-1.5-SNAPSHOT.jar