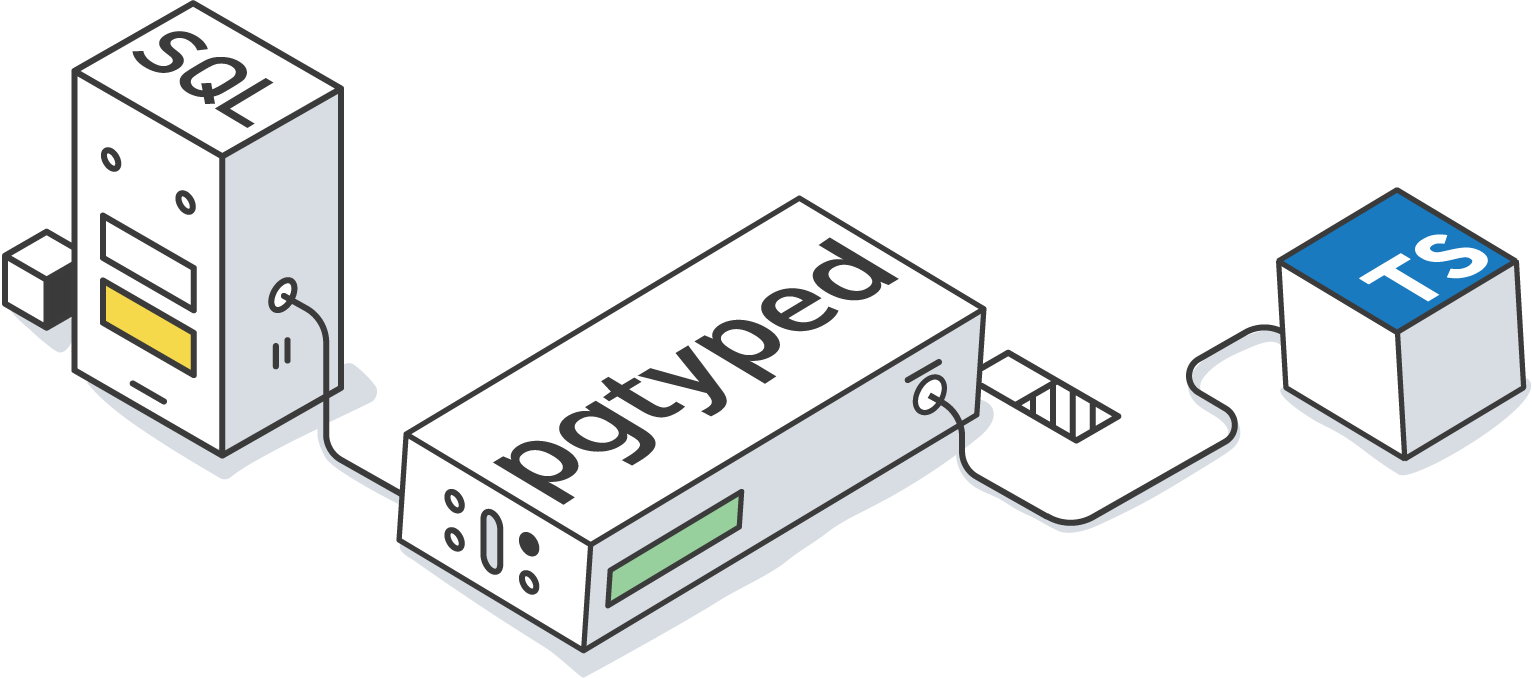

PgTyped makes it possible to use raw SQL in TypeScript with guaranteed type-safety.

There is no need to map or translate your DB schema to TypeScript: PgTyped automatically generates types and interfaces for your SQL queries, using your running Postgres database as the source of type information.

- Automatically generates TS types for parameters/results of SQL queries of any complexity.

- Supports extracting and typing queries from both SQL and TS files.

- Generates query types as you write them, using watch mode.

- Useful parameter interpolation helpers for arrays and objects.

- No need to define your DB schema in TypeScript; your running DB is the live source of type data.

- Prevents SQL injection by never substituting parameter values into the query text itself. Instead, queries and parameters are sent separately to the DB driver, so parameter substitution is done safely by the PostgreSQL server.

- Native ESM support. Runtime dependencies are also provided as CommonJS.
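The parameter-handling points above (values sent separately from the SQL text, helpers for expanding arrays) can be illustrated with a small sketch. This is a hypothetical example, not PgTyped's implementation: `toPositional` is a name invented here, and it only handles `:name` placeholders, array expansion, and `::type` casts. It does not skip string literals or SQL comments.

```typescript
// Hypothetical sketch, NOT PgTyped's implementation. It keeps SQL text and
// parameter values apart so the server, not string concatenation, performs
// the substitution.

type ParamValue = string | number | boolean | null;
type Params = Record<string, ParamValue | ParamValue[]>;

interface PositionalQuery {
  text: string; // SQL with $1, $2, ... placeholders
  values: ParamValue[]; // values sent to the driver alongside the text
}

// Rewrites `:name` placeholders to PostgreSQL's positional `$n` form.
// Array values expand to `($n, $n+1, ...)`, e.g. for `id IN :ids`.
// `::type` casts are left untouched; a `:word` inside a string literal
// would still be rewritten, since literals are not parsed here.
function toPositional(sql: string, params: Params): PositionalQuery {
  const values: ParamValue[] = [];
  const placeholders = new Map<string, string>(); // repeated names reuse slots

  const text = sql.replace(/::|:([A-Za-z_]\w*)/g, (match: string, name?: string) => {
    if (name === undefined) return match; // matched a `::` cast, keep as-is
    const existing = placeholders.get(name);
    if (existing !== undefined) return existing;

    const value = params[name];
    if (value === undefined) throw new Error(`Missing value for parameter "${name}"`);

    let placeholder: string;
    if (Array.isArray(value)) {
      if (value.length === 0) throw new Error(`Parameter "${name}" is an empty array`);
      const slots = value.map((item) => {
        values.push(item);
        return `$${values.length}`;
      });
      placeholder = `(${slots.join(', ')})`;
    } else {
      values.push(value);
      placeholder = `$${values.length}`;
    }
    placeholders.set(name, placeholder);
    return placeholder;
  });

  return { text, values };
}
```

The resulting `{ text, values }` pair has the shape node-postgres accepts in `client.query({ text, values })`, so parameter values never become part of the SQL string.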

Visit our documentation page at https://pgtyped.dev/

To get started:

- `npm install -D @pgtyped/cli typescript` (`typescript` is a required peer dependency for PgTyped)
- `npm install @pgtyped/runtime` (`@pgtyped/runtime` is the only required runtime dependency of PgTyped)
- Create a PgTyped `config.json` file.
- Run `npx pgtyped -w -c config.json` to start PgTyped in watch mode.

More information on getting started can be found on the Getting Started page. You can also refer to the example app for a preconfigured example.

Let's save some queries in `books.sql`:

```sql
/* @name FindBookById */
SELECT * FROM books WHERE id = :bookId;
```

PgTyped parses the SQL file, extracting all queries and generating strictly typed TS queries in `books.queries.ts`:

```ts
/** Types generated for queries found in "books.sql" */

//...

/** 'FindBookById' parameters type */
export interface IFindBookByIdParams {
  bookId: number | null;
}

/** 'FindBookById' return type */
export interface IFindBookByIdResult {
  id: number;
  rank: number | null;
  name: string | null;
  author_id: number | null;
}

/**
 * Query generated from SQL:
 * SELECT * FROM books WHERE id = :bookId
 */
export const findBookById = new PreparedQuery<
  IFindBookByIdParams,
  IFindBookByIdResult
>(...);
```

Query `findBookById` is now statically typed, with types inferred from the PostgreSQL schema.
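The extraction step described above (finding each named query in a `.sql` file) can be pictured with a deliberately simplified, hypothetical sketch. `extractNamedQueries` is a name invented here and it is not PgTyped's parser: it only recognizes `/* @name ... */` comments that contain nothing but the name, takes the statement up to the next `;`, and ignores `@param` annotations, semicolons inside string literals, and other SQL edge cases.

```typescript
// Hypothetical, simplified sketch; NOT PgTyped's parser.
interface NamedQuery {
  name: string; // value of the @name annotation
  sql: string; // statement text without the trailing semicolon
}

function extractNamedQueries(source: string): NamedQuery[] {
  // `/* @name Foo */`, optional whitespace, then everything up to the next `;`.
  const pattern = /\/\*\s*@name\s+(\w+)\s*\*\/\s*([^;]*);/g;
  const queries: NamedQuery[] = [];
  let match: RegExpExecArray | null;
  while ((match = pattern.exec(source)) !== null) {
    queries.push({ name: match[1] ?? '', sql: (match[2] ?? '').trim() });
  }
  return queries;
}
```

Applied to the `books.sql` example, this returns a single entry named `FindBookById` whose SQL is the same text quoted in the generated comment; the types themselves come from the running database, not from parsing.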

This generated query can be imported and executed as follows:

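```ts
import { Client } from 'pg';
import { findBookById } from './books.queries';

export const client = new Client({
  host: 'localhost',
  user: 'test',
  password: 'example',
  database: 'test',
});

async function main() {
  await client.connect();
  try {
    // Parameters and results are typed by the generated interfaces.
    const books = await findBookById.run({ bookId: 5 }, client);
    // The query may return no rows, so check before reading a field.
    const [book] = books;
    console.log(book ? `Book name: ${book.name}` : 'Book not found');
  } finally {
    // Always release the connection, even if the query throws.
    await client.end();
  }
}

main().catch((err) => {
  console.error(err);
  process.exitCode = 1;
});
```

For more details, see the documentation pages:

- Configuring pgTyped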

- Writing queries in SQL files

- Advanced queries and parameter expansions in SQL files

- Writing queries in TS files

- Advanced queries and parameter expansions in TS files
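The generated result type also shapes the code that consumes query results. As a minimal sketch, assuming the `IFindBookByIdResult` interface generated for `books.sql` (copied in so the snippet is self-contained), a hypothetical `describeBook` helper, which is not part of PgTyped, has to deal with an empty result and with `name` being `string | null`:

```typescript
// Copied from the generated books.queries.ts so this snippet stands alone.
interface IFindBookByIdResult {
  id: number;
  rank: number | null;
  name: string | null;
  author_id: number | null;
}

// Hypothetical helper (not part of PgTyped) that formats the rows returned
// by `findBookById.run(...)`.
function describeBook(rows: IFindBookByIdResult[]): string {
  // A lookup by id can match zero rows.
  const [book] = rows;
  if (!book) return 'Book not found';

  // `name` is `string | null`; with strictNullChecks enabled, calling
  // `book.name.trim()` without this null check would not compile.
  const title = book.name === null ? '(untitled)' : book.name.trim();
  return `Book name: ${title} (id ${book.id})`;
}
```

The same checking applies to parameters: passing `{ bookId: '5' }` where `IFindBookByIdParams` is expected is a compile-time error, because `bookId` is typed `number | null`.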

This project is being actively developed and its APIs might change. All issue reports, feature requests and PRs are appreciated.

Copyright (c) 2019-present, Adel Salakh