Comments (8)

I also would like to know.

from promptfoo.

Unfortunately, not really. I built this because there wasn't anything else out there that did what I needed. OpenAI does have an Evals framework you can take a look at. It focuses on testing OpenAI models with heavier test cases, and some of the more advanced test cases have to be implemented in Python.


What I mainly need is this, but in Python and compatible with LangChain. It might be worth cloning the project and porting it.


As far as I know, `QAEvalChain` in the LangChain module might be useful to me. I'm still looking for other alternatives.


@typpo Thanks for the link.


For those of you working in Python, have a look at the end-to-end LLM chain testing documentation.

Specifically, I've created an example that shows how to evaluate a Python LangChain implementation.

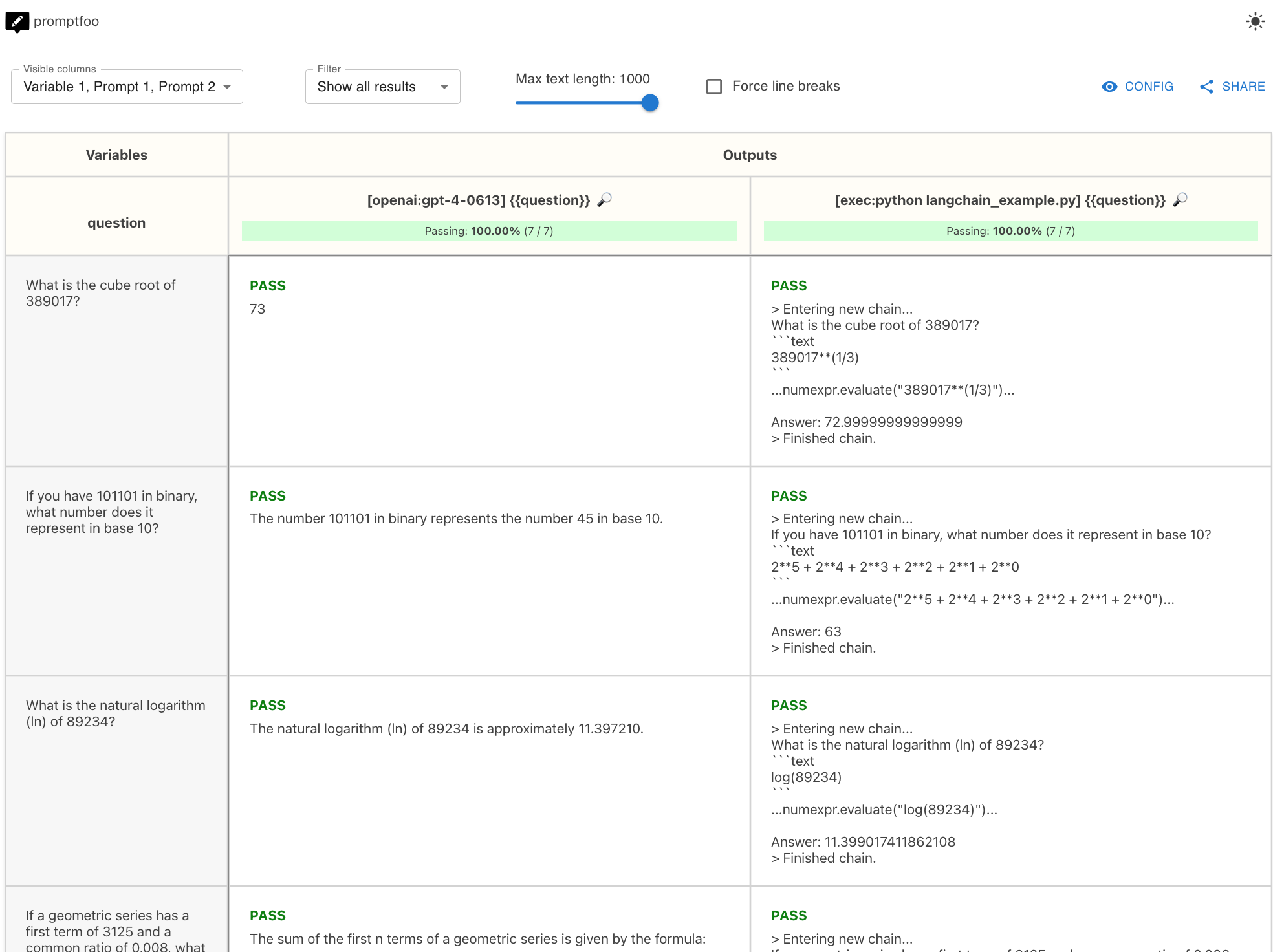

The example compares raw GPT-4 with LangChain's LLM-Math plugin by using the exec provider to run the LangChain script:

```yaml
# promptfooconfig.yaml
# ...
providers:
  - openai:chat:gpt-4-0613
  - exec:python langchain_example.py
# ...
```

The result is a side-by-side comparison of GPT-4 and LangChain doing math.
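The original `langchain_example.py` isn't reproduced in this thread, so here is a hypothetical stand-in to show the shape of a script the `exec` provider could call. It assumes (check the promptfoo provider docs for your version) that the rendered prompt is passed as the first command-line argument and that whatever the script prints to stdout is treated as the model output. To keep the sketch runnable without an API key, the LangChain LLM-Math chain is replaced with a tiny local calculator; `answer`, `_evaluate`, and the other helpers are illustrative names, not promptfoo or LangChain APIs.

```python
# langchain_example.py: hypothetical stand-in, NOT the script from the promptfoo example.
# Assumption: promptfoo's exec provider passes the rendered prompt as the first
# command-line argument and uses whatever is printed to stdout as the output.
# The LangChain LLM-Math chain is replaced by a small local calculator.
import ast
import operator
import re
import sys

# Operators the toy calculator accepts.
_BINARY_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.Pow: operator.pow,
}
_UNARY_OPS = {ast.UAdd: operator.pos, ast.USub: operator.neg}

# Runs of digits, whitespace, parentheses, decimal points, and + - * / characters.
_EXPR_RE = re.compile(r"[-+*/().\d\s]+")


def _evaluate(node: ast.AST) -> float:
    """Evaluate a parsed arithmetic expression without calling eval()."""
    if isinstance(node, ast.Expression):
        return _evaluate(node.body)
    if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
        return node.value
    if isinstance(node, ast.BinOp) and type(node.op) in _BINARY_OPS:
        return _BINARY_OPS[type(node.op)](_evaluate(node.left), _evaluate(node.right))
    if isinstance(node, ast.UnaryOp) and type(node.op) in _UNARY_OPS:
        return _UNARY_OPS[type(node.op)](_evaluate(node.operand))
    raise ValueError(f"unsupported syntax: {ast.dump(node)}")


def answer(prompt: str) -> str:
    """Hypothetical chain: compute the longest arithmetic expression found in the prompt."""
    candidates = [m.strip() for m in _EXPR_RE.findall(prompt) if any(c.isdigit() for c in m)]
    if not candidates:
        return "I could not find an arithmetic expression in the prompt."
    expression = max(candidates, key=len)
    try:
        result = _evaluate(ast.parse(expression, mode="eval"))
    except (SyntaxError, ValueError, ZeroDivisionError, OverflowError) as exc:
        return f"Could not evaluate {expression!r}: {exc}"
    # Print 5 rather than 5.0 when a float result is a whole number.
    if isinstance(result, float) and result.is_integer():
        result = int(result)
    return str(result)


if __name__ == "__main__":
    # Only the first argument (the prompt, by assumption) is read; extras are ignored.
    print(answer(sys.argv[1] if len(sys.argv) > 1 else ""))
```

For example, `python langchain_example.py "What is 12 * (3 + 4)?"` prints `84`. The expression is parsed with `ast` and walked by hand rather than passed to `eval()`, so arbitrary code in a prompt can't run. Swapping in a real LangChain chain would only change the body of `answer`; the argument-in, stdout-out contract stays the same.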

Hope this helps with your use cases. If not, I'd be interested in learning more.

Side note: `QAEvalChain` is similar in approach to promptfoo's `llm-rubric` assertion type. Both use an LLM to judge whether a given answer makes sense for a given question.


It looks like it was released recently.

hegelai/prompttools: Open-source tools for prompt testing and experimentation


@typpo: First of all, congratulations on the great work building this library. It would be great to have a direct comparison of promptfoo with prompttools and OpenAI Evals. That would make it easier for users to pick the best option for their use case.

