This repository contains the data and code for the paper: L. Lu, X. Meng, S. Cai, Z. Mao, S. Goswami, Z. Zhang, & G. E. Karniadakis. A comprehensive and fair comparison of two neural operators (with practical extensions) based on FAIR data. Computer Methods in Applied Mechanics and Engineering, 393, 114778, 2022.

Data, by problem:

- Burgers' equation

- Darcy problem in a rectangular domain

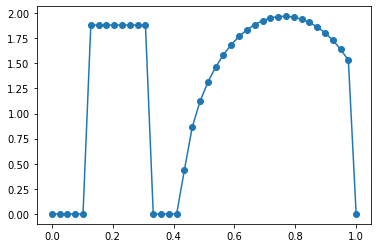

- Case: Piecewise constant

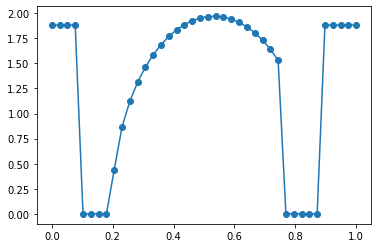

- Case: Continuous

- Darcy problem in a pentagram with a hole

- Darcy problem in a triangular domain

- Darcy problem in a triangular domain with notch

- Electroconvection problem

- Advection equation

- Linear instability waves in high-speed boundary layers

- Compressible Euler equations with non-equilibrium chemistry

- A flapping airfoil

- Navier-Stokes equation in the vorticity-velocity form

- Regularized cavity flows

  - Case: Steady

  - Case: Unsteady

Code, by problem:

- Burgers' equation

- Darcy problem in a rectangular domain

  - Case: Piecewise constant

  - Case: Continuous

- Darcy problem in a pentagram with a hole

- Darcy problem in a triangular domain

- Darcy problem in a triangular domain with notch

- Electroconvection problem

- Advection equation

- Linear instability waves in high-speed boundary layers

- Compressible Euler equations with non-equilibrium chemistry

- A flapping airfoil

- Navier-Stokes equation in the vorticity-velocity form

- Regularized cavity flows

  - Case: Steady

  - Case: Unsteady

If you use this data or code for academic research, you are encouraged to cite the following paper:

    @article{lu2022comprehensive,
      title   = {A comprehensive and fair comparison of two neural operators (with practical extensions) based on FAIR data},
      author  = {Lu, Lu and Meng, Xuhui and Cai, Shengze and Mao, Zhiping and Goswami, Somdatta and Zhang, Zhongqiang and Karniadakis, George Em},
      journal = {Computer Methods in Applied Mechanics and Engineering},
      volume  = {393},
      pages   = {114778},
      year    = {2022}
    }

To get help on how to use the data or code, simply open an issue in the GitHub "Issues" section.

This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.